Maurizio Gabbrielli

Learning Factors in AI-Augmented Education: A Comparative Study of Middle and High School Students

Dec 24, 2025

Abstract:The increasing integration of AI tools in education has led prior research to explore their impact on learning processes. Nevertheless, most existing studies focus on higher education and conventional instructional contexts, leaving open questions about how key learning factors are related in AI-mediated learning environments and how these relationships may vary across different age groups. Addressing these gaps, our work investigates whether four critical learning factors, experience, clarity, comfort, and motivation, maintain coherent interrelationships in AI-augmented educational settings, and how the structure of these relationships differs between middle and high school students. The study was conducted in authentic classroom contexts where students interacted with AI tools as part of programming learning activities to collect data on the four learning factors and students' perceptions. Using a multimethod quantitative analysis, which combined correlation analysis and text mining, we revealed markedly different dimensional structures between the two age groups. Middle school students exhibit strong positive correlations across all dimensions, indicating holistic evaluation patterns whereby positive perceptions in one dimension generalise to others. In contrast, high school students show weak or near-zero correlations between key dimensions, suggesting a more differentiated evaluation process in which dimensions are assessed independently. These findings reveal that perception dimensions actively mediate AI-augmented learning and that the developmental stage moderates their interdependencies. This work establishes a foundation for the development of AI integration strategies that respond to learners' developmental levels and account for age-specific dimensional structures in student-AI interactions.

Optimizing the Training Diet: Data Mixture Search for Robust Time Series Forecasting

Dec 12, 2025

Abstract:The standard paradigm for training deep learning models on sensor data assumes that more data is always better. However, raw sensor streams are often imbalanced and contain significant redundancy, meaning that not all data points contribute equally to model generalization. In this paper, we show that, in some cases, "less is more" when considering datasets. We do this by reframing the data selection problem: rather than tuning model hyperparameters, we fix the model and optimize the composition of the training data itself. We introduce a framework for discovering the optimal "training diet" from a large, unlabeled time series corpus. Our framework first uses a large-scale encoder and k-means clustering to partition the dataset into distinct, behaviorally consistent clusters. These clusters represent the fundamental 'ingredients' available for training. We then employ the Optuna optimization framework to search the high-dimensional space of possible data mixtures. For each trial, Optuna proposes a specific sampling ratio for each cluster, and a new training set is constructed based on this recipe. A smaller target model is then trained and evaluated. Our experiments reveal that this data-centric search consistently discovers data mixtures that yield models with significantly higher performance compared to baselines trained on the entire dataset. Specifically, evaluated on the PMSM dataset, our method reduced the MSE from a baseline of 1.70 to 1.37, a 19.41% improvement.
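The mixture-construction loop described in this abstract can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the names `build_training_set` and `random_mixture_search` are hypothetical, plain random search stands in for Optuna, and the `score` callable stands in for "train a smaller target model and evaluate it" (lower is better).

```python
import random
from typing import Callable, Dict, List, Sequence, Tuple


def build_training_set(
    clusters: Dict[int, Sequence[float]],
    ratios: Dict[int, float],
    rng: random.Random,
) -> List[float]:
    """Sample a fraction of each cluster according to its ratio in [0, 1]."""
    training_set: List[float] = []
    for cluster_id, samples in clusters.items():
        n_keep = round(ratios[cluster_id] * len(samples))
        training_set.extend(rng.sample(list(samples), n_keep))
    return training_set


def random_mixture_search(
    clusters: Dict[int, Sequence[float]],
    score: Callable[[List[float]], float],
    n_trials: int = 50,
    seed: int = 0,
) -> Tuple[Dict[int, float], float]:
    """Random search over per-cluster ratios (a stand-in for Optuna, by assumption)."""
    rng = random.Random(seed)
    best_ratios: Dict[int, float] = {}
    best_score = float("inf")
    for _ in range(n_trials):
        # One trial: propose a sampling ratio per cluster, build the set, score it.
        ratios = {cluster_id: rng.random() for cluster_id in clusters}
        train = build_training_set(clusters, ratios, rng)
        trial_score = score(train)
        if trial_score < best_score:
            best_ratios, best_score = ratios, trial_score
    return best_ratios, best_score
```

In this sketch the model is held fixed inside `score`, so the only thing the search varies is the composition of the training data, which is the reframing the abstract describes.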

A Computational Model of Inclusive Pedagogy: From Understanding to Application

May 02, 2025

Abstract:Human education transcends mere knowledge transfer; it relies on co-adaptation dynamics -- the mutual adjustment of teaching and learning strategies between agents. Despite its centrality, computational models of co-adaptive teacher-student interactions (T-SI) remain underdeveloped. We argue that this gap impedes Educational Science in testing and scaling contextual insights across diverse settings, and limits the potential of Machine Learning systems, which struggle to emulate and adaptively support human learning processes. To address this, we present a computational T-SI model that integrates contextual insights on human education into a testable framework. We use the model to evaluate diverse T-SI strategies in a realistic synthetic classroom setting, simulating student groups with unequal access to sensory information. Results show that strategies incorporating co-adaptation principles (e.g., bidirectional agency) outperform unilateral approaches (i.e., where only the teacher or the student is active), improving the learning outcomes for all learning types. Beyond the testing and scaling of context-dependent educational insights, our model enables hypothesis generation in controlled yet adaptable environments. This work bridges non-computational theories of human education with scalable, inclusive AI in Education systems, providing a foundation for equitable technologies that dynamically adapt to learner needs.

One Model to Train them All: Hierarchical Self-Distillation for Enhanced Early Layer Embeddings

Mar 04, 2025

Abstract:Deploying language models often requires handling model size vs. performance trade-offs to satisfy downstream latency constraints while preserving the model's usefulness. Model distillation is commonly employed to reduce model size while maintaining acceptable performance. However, distillation can be inefficient since it involves multiple training steps. In this work, we introduce MODULARSTARENCODER, a modular multi-exit encoder with 1B parameters, useful for multiple tasks within the scope of code retrieval. MODULARSTARENCODER is trained with a novel self-distillation mechanism that significantly improves lower-layer representations, allowing different portions of the model to be used while still maintaining a good trade-off in terms of performance. Our architecture focuses on enhancing text-to-code and code-to-code search by systematically capturing syntactic and semantic structures across multiple levels of representation. Specific encoder layers are targeted as exit heads, allowing higher layers to guide earlier layers during training. This self-distillation effect improves intermediate representations, increasing retrieval recall at no extra training cost. In addition to the multi-exit scheme, our approach integrates a repository-level contextual loss that maximally utilizes the training context window, further enhancing the learned representations. We also release a new dataset constructed via code translation, seamlessly expanding traditional text-to-code benchmarks with code-to-code pairs across diverse programming languages. Experimental results highlight the benefits of self-distillation through multi-exit supervision.

Affordably Fine-tuned LLMs Provide Better Answers to Course-specific MCQs

Jan 10, 2025

Abstract:In education, the capability of Large Language Models (LLMs) to generate human-like text has inspired work on how they can increase the efficiency of learning and teaching. We study the affordability of these models for educators and students by investigating how LLMs answer multiple-choice questions (MCQs) with respect to hardware constraints and refinement techniques. We explore this space by using generic pre-trained LLMs (the 7B, 13B, and 70B variants of LLaMA-2) to answer 162 undergraduate-level MCQs from a course on Programming Languages (PL) -- the MCQ dataset is a contribution of this work, which we make publicly available. Specifically, we dissect how different factors, such as using readily-available material -- (parts of) the course's textbook -- for fine-tuning and quantisation (to decrease resource usage), can change the accuracy of the responses. The main takeaway is that smaller textbook-based fine-tuned models outperform generic larger ones (whose pre-training requires conspicuous resources), making the usage of LLMs for answering MCQs resource- and material-wise affordable.

Multimodal Side-Tuning for Document Classification

Jan 23, 2023

Abstract:In this paper, we propose to exploit the side-tuning framework for multimodal document classification. Side-tuning is a methodology for network adaptation recently introduced to solve some of the problems related to previous approaches. Thanks to this technique it is actually possible to overcome model rigidity and catastrophic forgetting of transfer learning by fine-tuning. The proposed solution uses off-the-shelf deep learning architectures leveraging the side-tuning framework to combine a base model with a tandem of two side networks. We show that side-tuning can be successfully employed also when different data sources are considered, e.g. text and images in document classification. The experimental results show that this approach pushes further the limit for document classification accuracy with respect to the state of the art.

On the evaluation of (meta-)solver approaches

Feb 17, 2022

Abstract:Meta-solver approaches exploit a number of individual solvers to potentially build a better solver. To assess the performance of meta-solvers, one can simply adopt the metrics typically used for individual solvers (e.g., runtime or solution quality), or employ more specific evaluation metrics (e.g., by measuring how close the meta-solver gets to its virtual best performance). In this paper, based on some recently published works, we provide an overview of different performance metrics for evaluating (meta-)solvers, highlighting their strengths and weaknesses.
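One common way to measure how close a meta-solver gets to its virtual best performance is a "closed gap" score between the single best solver and the virtual best solver. The sketch below is illustrative only: the function names, the runtime table layout, and the choice of total runtime as the underlying metric are assumptions, and the paper may define its metrics differently.

```python
from typing import Dict, List

# Hypothetical layout: runtimes[solver][i] is the runtime (seconds) on instance i.
Runtimes = Dict[str, List[float]]


def virtual_best(runtimes: Runtimes) -> List[float]:
    """Per-instance runtime of an oracle that always picks the fastest solver."""
    return [min(times) for times in zip(*runtimes.values())]


def single_best(runtimes: Runtimes) -> str:
    """Name of the solver with the lowest total runtime over all instances."""
    return min(runtimes, key=lambda name: sum(runtimes[name]))


def closed_gap(meta_times: List[float], runtimes: Runtimes) -> float:
    """Share of the single-best-to-virtual-best gap closed by the meta-solver.

    1.0 means the meta-solver matches the virtual best; 0.0 means it is no
    better than the single best solver. Undefined when the two coincide.
    """
    sbs_total = sum(runtimes[single_best(runtimes)])
    vbs_total = sum(virtual_best(runtimes))
    return (sbs_total - sum(meta_times)) / (sbs_total - vbs_total)


runtimes = {"A": [1.0, 10.0, 3.0], "B": [5.0, 2.0, 4.0]}
meta_times = [1.0, 2.0, 4.0]  # runtimes achieved by a hypothetical meta-solver
print(closed_gap(meta_times, runtimes))  # 0.8
```

Here solver B is the single best (total 11 s), the virtual best totals 6 s, and the meta-solver totals 7 s, so it closes 4 of the 5 seconds of available gap.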

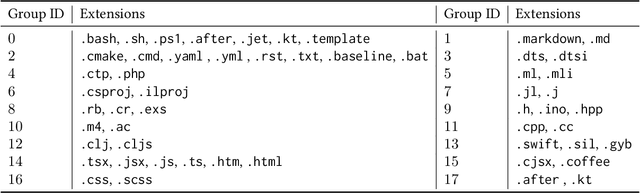

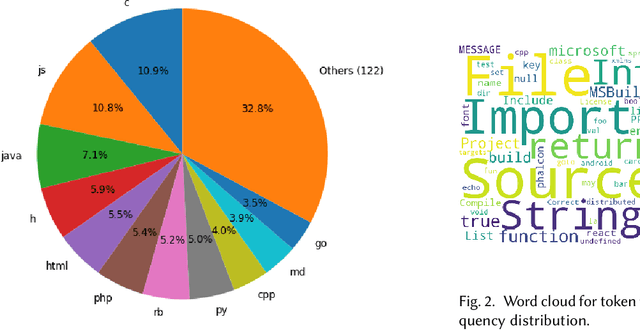

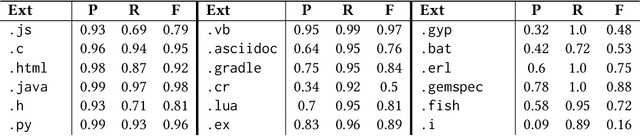

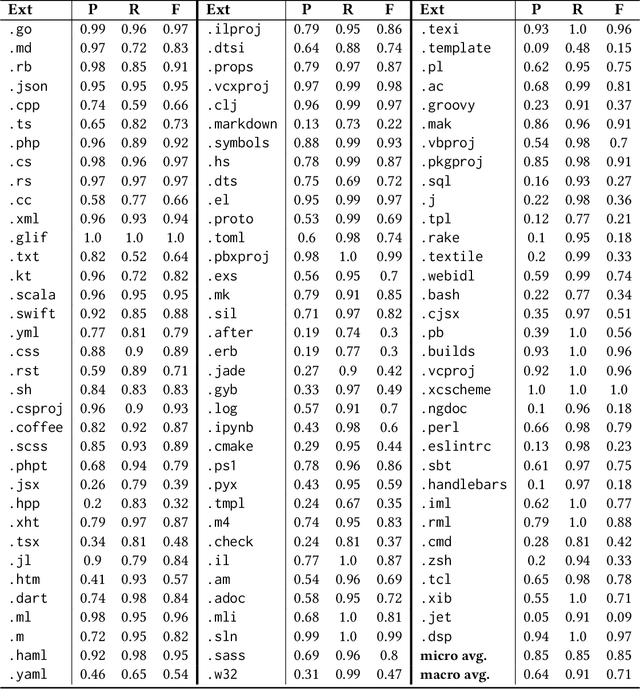

Content-Based Textual File Type Detection at Scale

Jan 21, 2021

Abstract:Programming language detection is a common need in the analysis of large source code bases. It is supported by a number of existing tools that rely on several features, most notably file extensions, to determine file types. We consider the problem of accurately detecting the type of files commonly found in software code bases, based solely on textual file content. Doing so is helpful to classify source code that lacks file extensions (e.g., code snippets posted on the Web or executable scripts), to avoid misclassifying source code that has been recorded with wrong or uncommon file extensions, and also to shed some light on the intrinsic recognizability of source code files. We propose a simple model that (a) uses a language-agnostic word tokenizer for textual files, (b) groups tokens in 1-/2-grams, (c) builds feature vectors based on N-gram frequencies, and (d) uses a simple fully connected neural network as classifier. As training set we use textual files extracted from GitHub repositories with at least 1000 stars, using existing file extensions as ground truth. Despite its simplicity the proposed model reaches 85% in our experiments for a relatively high number of recognized classes (more than 130 file types).
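Steps (a) to (c) of the model can be sketched as below. This is a minimal sketch under stated assumptions: the regular expression used as a tokenizer, the function names, and the use of relative frequencies are hypothetical choices for illustration and may differ from the paper's. Step (d), the neural network classifier, is left out.

```python
import re
from collections import Counter
from typing import List, Tuple

# Hypothetical language-agnostic tokenizer: runs of word characters, or any
# single non-space symbol. The paper's actual tokenizer may differ.
TOKEN_RE = re.compile(r"\w+|[^\w\s]")

NGram = Tuple[str, ...]


def tokenize(text: str) -> List[str]:
    """(a) Split text into tokens without any language-specific rules."""
    return TOKEN_RE.findall(text)


def ngrams(tokens: List[str]) -> List[NGram]:
    """(b) All 1-grams followed by all 2-grams of adjacent tokens."""
    unigrams = [(token,) for token in tokens]
    bigrams = list(zip(tokens, tokens[1:]))
    return unigrams + bigrams


def feature_vector(text: str, vocabulary: List[NGram]) -> List[float]:
    """(c) Relative frequency of each vocabulary n-gram in the text."""
    grams = ngrams(tokenize(text))
    counts = Counter(grams)
    total = len(grams) or 1  # avoid dividing by zero on empty files
    return [counts[gram] / total for gram in vocabulary]


vocabulary = [("x",), ("x", "="), ("def",)]
print(feature_vector("x = x + 1", vocabulary))
```

For the input `x = x + 1` there are five 1-grams and four 2-grams, so the token `x` contributes 2/9, the pair `x =` contributes 1/9, and `def` contributes 0. A fixed vocabulary keeps every file's vector the same length, which is what a fully connected network needs as input.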

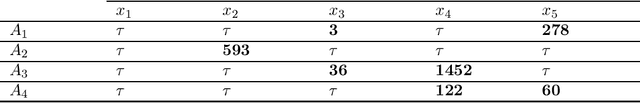

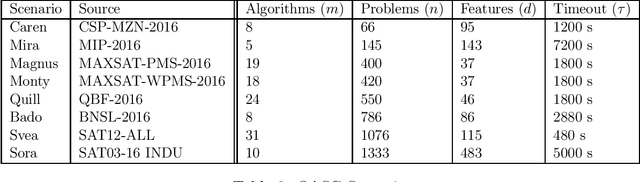

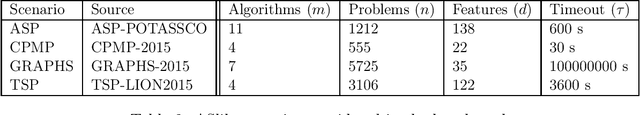

sunny-as2: Enhancing SUNNY for Algorithm Selection

Sep 07, 2020

Abstract:SUNNY is an Algorithm Selection (AS) technique originally tailored for Constraint Programming (CP). SUNNY schedules, from a portfolio of solvers, a subset of solvers to be run on a given CP problem. This approach has proved to be effective for CP problems, and its parallel version won many gold medals in the Open category of the MiniZinc Challenge -- the yearly international competition for CP solvers. In 2015, the ASlib benchmarks were released for comparing AS systems coming from disparate fields (e.g., ASP, QBF, and SAT) and SUNNY was extended to deal with generic AS problems. This led to the development of sunny-as2, an algorithm selector based on SUNNY for ASlib scenarios. A preliminary version of sunny-as2 was submitted to the Open Algorithm Selection Challenge (OASC) in 2017, where it turned out to be the best approach for the runtime minimization of decision problems. In this work, we present the technical advancements of sunny-as2, including: (i) wrapper-based feature selection; (ii) a training approach combining feature selection and neighbourhood size configuration; (iii) the application of nested cross-validation. We show how sunny-as2 performance varies depending on the considered AS scenarios, and we discuss its strengths and weaknesses. Finally, we also show how sunny-as2 improves on its preliminary version submitted to OASC.
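The idea of scheduling a subset of solvers from a neighbourhood of similar instances can be illustrated with a heavily simplified sketch. It is not the sunny-as2 implementation: the data layout and function names are hypothetical, Euclidean distance is an assumption, and details such as a backup solver, tie-breaking by average runtime, and the ordering of the schedule are omitted.

```python
from itertools import combinations
from math import dist
from typing import Dict, List, Optional, Sequence, Tuple

# Hypothetical training record: (feature vector, {solver: runtime or None if unsolved}).
Record = Tuple[Sequence[float], Dict[str, Optional[float]]]


def nearest(train: List[Record], features: Sequence[float], k: int) -> List[Record]:
    """The k training instances closest to `features` (Euclidean distance)."""
    return sorted(train, key=lambda record: dist(record[0], features))[:k]


def schedule(
    train: List[Record], features: Sequence[float], k: int, timeout: float
) -> List[Tuple[str, float]]:
    """Return (solver, time slot) pairs for the instance described by `features`."""
    neighbours = nearest(train, features, k)
    solvers = sorted(neighbours[0][1])
    # For each solver, the indices of the neighbours it solved.
    solved = {
        s: {i for i, (_, times) in enumerate(neighbours) if times[s] is not None}
        for s in solvers
    }
    # Smallest subset of solvers that covers the most neighbours.
    best: Tuple[str, ...] = ()
    best_cover: set = set()
    for size in range(1, len(solvers) + 1):
        for subset in combinations(solvers, size):
            cover = set().union(*(solved[s] for s in subset))
            if len(cover) > len(best_cover):
                best, best_cover = subset, cover
    # Split the timeout in proportion to how many neighbours each solver solved.
    total = sum(len(solved[s]) for s in best)
    return [(s, timeout * len(solved[s]) / total) for s in best]
```

In this sketch `k` plays the role of the neighbourhood size that the abstract says is configured during training, and the feature vectors are where a feature selection step would apply.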

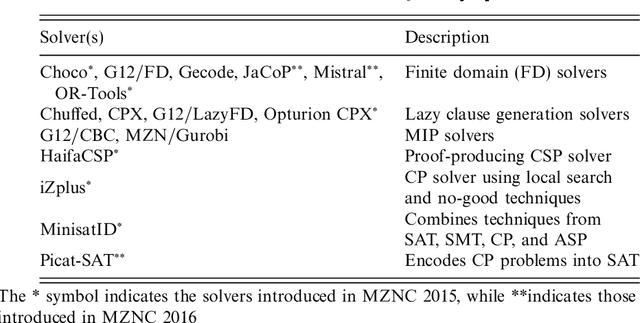

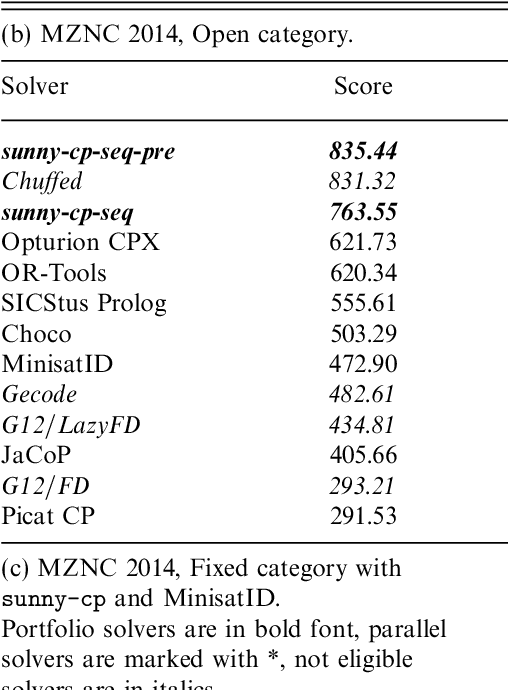

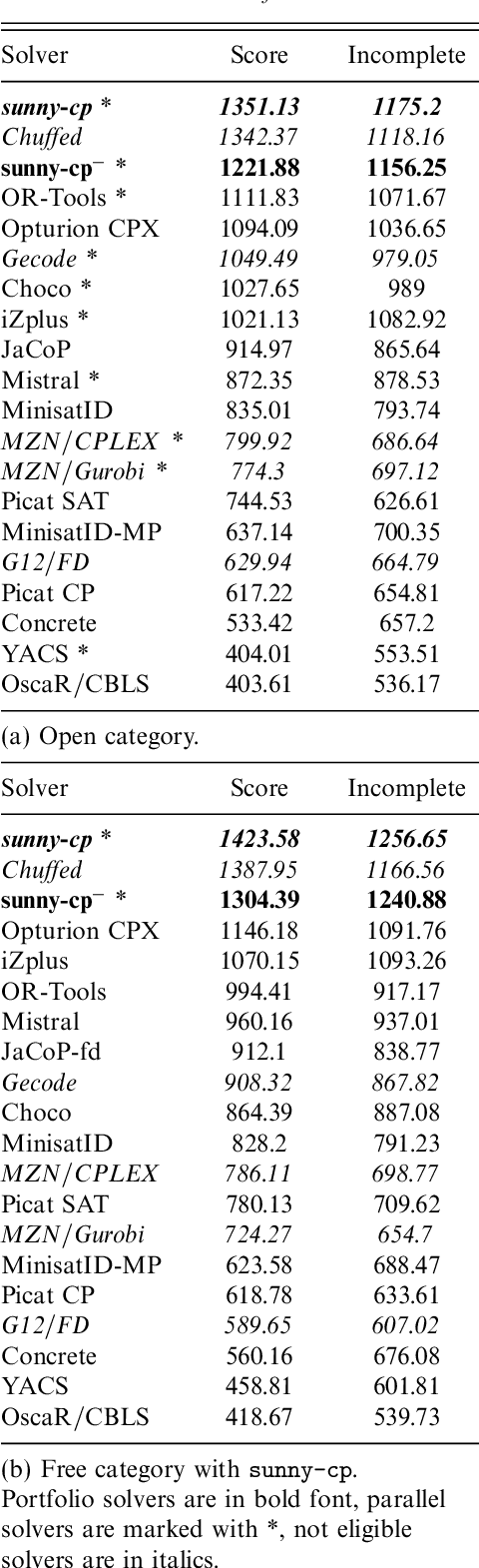

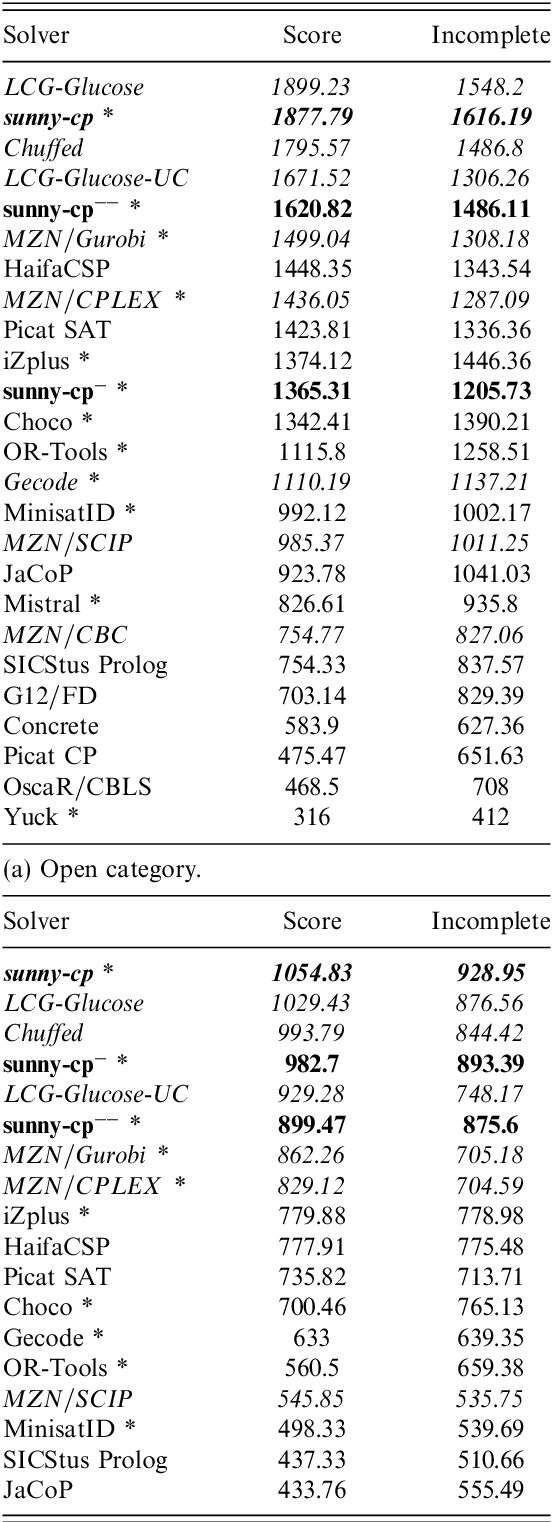

SUNNY-CP and the MiniZinc Challenge

Jul 05, 2017

Abstract:In Constraint Programming (CP) a portfolio solver combines a variety of different constraint solvers for solving a given problem. This fairly recent approach makes it possible to significantly boost the performance of single solvers, especially when multicore architectures are exploited. In this work we give a brief overview of the portfolio solver sunny-cp, and we discuss its performance in the MiniZinc Challenge, the annual international competition for CP solvers, where it won two gold medals in 2015 and 2016. Under consideration in Theory and Practice of Logic Programming (TPLP).
