Matthew O'Donnell

Deep Learning-Driven Quantitative Spectroscopic Photoacoustic Imaging for Segmentation and Oxygen Saturation Estimation

Dec 17, 2025

Abstract:Spectroscopic photoacoustic (sPA) imaging can potentially estimate blood oxygen saturation (sO2) in vivo noninvasively. However, quantitatively accurate results require accurate optical fluence estimates. Robust modeling in heterogeneous tissue, where light with different wavelengths can experience significantly different absorption and scattering, is difficult. In this work, we developed a deep neural network (Hybrid-Net) for sPA imaging to simultaneously estimate sO2 in blood vessels and segment those vessels from surrounding background tissue. sO2 error was minimized only in blood vessels segmented by Hybrid-Net, resulting in more accurate predictions. Hybrid-Net was first trained on simulated sPA data (at 700 nm and 850 nm) representing initial pressure distributions from three-dimensional Monte Carlo simulations of light transport in breast tissue. Then, for experimental verification, the network was retrained on experimental sPA data (at 700 nm and 850 nm) acquired from simple tissue-mimicking phantoms with an embedded blood pool. Quantitative measures were used to evaluate Hybrid-Net performance with an averaged segmentation accuracy of >= 0.978 in simulations with varying noise levels (0 dB to 35 dB) and 0.998 in the experiment, and an averaged sO2 mean squared error of <= 0.048 in simulations with varying noise levels (0 dB to 35 dB) and 0.003 in the experiment. Overall, these results show that Hybrid-Net can provide accurate blood oxygenation without estimating the optical fluence, and this study could lead to improvements in in vivo sO2 estimation.
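The idea of minimizing sO2 error only inside segmented vessels can be illustrated with a masked mean squared error. The following is a minimal sketch, not the Hybrid-Net loss itself: the function name `masked_mse`, the nested-list image layout, and the choice to return 0.0 for an empty mask are all assumptions made for illustration.

```python
def masked_mse(so2_pred, so2_true, vessel_mask):
    """Mean squared sO2 error over pixels where vessel_mask is truthy.

    All three arguments are 2D nested lists of the same shape
    (a hypothetical layout chosen for this sketch).
    """
    total, count = 0.0, 0
    for pred_row, true_row, mask_row in zip(so2_pred, so2_true, vessel_mask):
        for p, t, m in zip(pred_row, true_row, mask_row):
            if m:  # background pixels do not contribute to the error
                total += (p - t) ** 2
                count += 1
    # Assumption: an empty mask yields zero error rather than raising.
    return total / count if count else 0.0


pred = [[0.9, 0.1], [0.5, 0.7]]
true = [[0.8, 0.9], [0.5, 0.5]]
mask = [[1, 0], [0, 1]]
error = masked_mse(pred, true, mask)
```

In this toy input the large background mismatch (0.1 versus 0.9) is ignored because the mask excludes it, so only the two vessel pixels determine the error.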

Combined fluorescence and photoacoustic imaging of tozuleristide in muscle tissue in vitro -- toward optically-guided solid tumor surgery: feasibility studies

Oct 31, 2025

Abstract:Near-infrared fluorescence (NIRF) can deliver high-contrast, video-rate, non-contact imaging of tumor-targeted contrast agents with the potential to guide surgeries excising solid tumors. However, it has been met with skepticism for wide-margin excision due to sensitivity and resolution limitations at depths larger than ~5 mm in tissue. To address this limitation, fast-sweep photoacoustic-ultrasound (PAUS) imaging is proposed to complement NIRF. In an exploratory in vitro feasibility study using dark-red bovine muscle tissue, we observed that PAUS scanning can identify tozuleristide, a clinical-stage investigational imaging agent, at a concentration of 20 µM from the background at depths of up to ~34 mm, greatly extending the capabilities of NIRF alone. The capability of spectroscopic PAUS imaging was tested by direct injection of 20 µM tozuleristide into bovine muscle tissue at a depth of ~8 mm. It is shown that laser-fluence compensation and strong clutter suppression enabled by the unique capabilities of the fast-sweep approach greatly improve spectroscopic accuracy and the PA detection limit, and strongly reduce image artifacts. Thus, the combined NIRF-PAUS approach can be promising for comprehensive pre- (with PA) and intra- (with NIRF) operative solid tumor detection and wide-margin excision in optically guided solid tumor surgery.

Joint Segmentation and Image Reconstruction with Error Prediction in Photoacoustic Imaging using Deep Learning

Jul 02, 2024

Abstract:Deep learning has been used to improve photoacoustic (PA) image reconstruction. One major challenge is that errors cannot be quantified to validate predictions when ground truth is unknown. Validation is key to quantitative applications, especially using limited-bandwidth ultrasonic linear detector arrays. Here, we propose a hybrid Bayesian convolutional neural network (Hybrid-BCNN) to jointly predict the PA image and its segmentation with error (uncertainty) predictions. Each output pixel represents a probability distribution where error can be quantified. The Hybrid-BCNN was trained with simulated PA data and applied to both simulations and experiments. Due to the sparsity of PA images, segmentation focuses Hybrid-BCNN on minimizing the loss function in regions with PA signals for better predictions. The results show that accurate PA segmentations and images are obtained, and error predictions are highly statistically correlated to actual errors. To leverage error predictions, confidence processing created PA images above a specific confidence level.
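One way to picture confidence processing is as a per-pixel filter on the predicted uncertainty. The sketch below is an assumption-laden illustration, not the paper's procedure: it supposes that each pixel comes with a predicted standard deviation, that "above a specific confidence level" can be approximated by keeping pixels whose standard deviation is at or below a threshold, and that rejected pixels are set to a fill value. The names `confidence_filter`, `max_std`, and `fill` are hypothetical.

```python
def confidence_filter(image, predicted_std, max_std, fill=0.0):
    """Keep pixels whose predicted standard deviation is <= max_std.

    image and predicted_std are 2D nested lists of the same shape
    (a hypothetical layout chosen for this sketch). Pixels that fail
    the check are replaced by `fill`.
    """
    return [
        [value if std <= max_std else fill
         for value, std in zip(image_row, std_row)]
        for image_row, std_row in zip(image, predicted_std)
    ]


pa_image = [[1.0, 2.0], [3.0, 4.0]]
pa_std = [[0.1, 0.5], [0.05, 0.3]]
confident = confidence_filter(pa_image, pa_std, max_std=0.2)
```

Lowering `max_std` keeps fewer pixels but with tighter predicted error, which is the trade-off a confidence threshold expresses.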

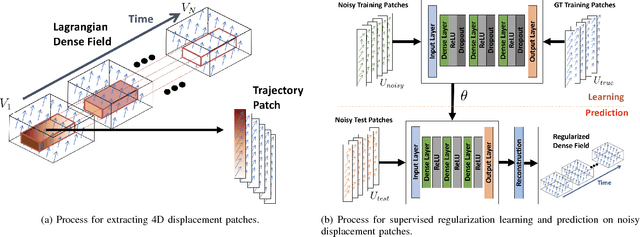

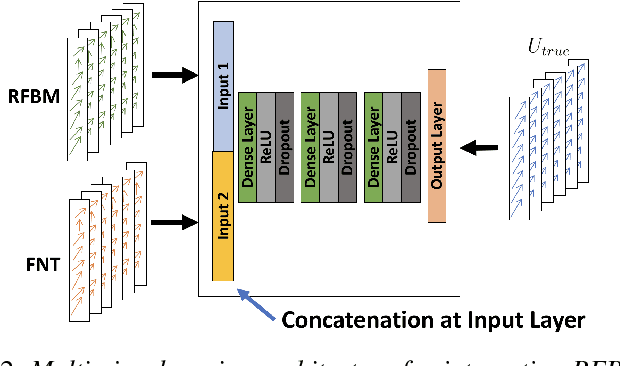

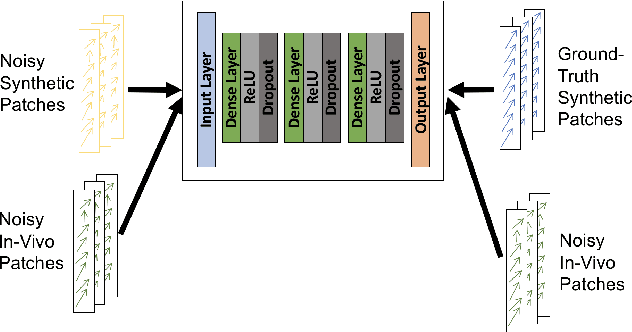

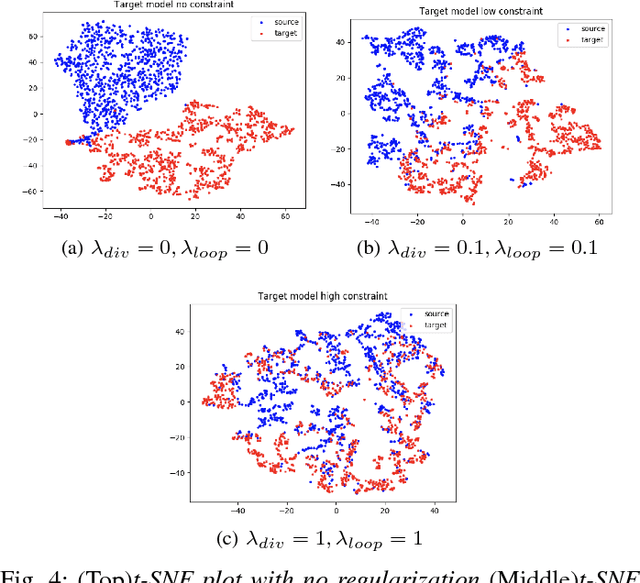

Learning-based Regularization for Cardiac Strain Analysis with Ability for Domain Adaptation

Jul 12, 2018

Abstract:Reliable motion estimation and strain analysis using 3D+time echocardiography (4DE) for localization and characterization of myocardial injury is valuable for early detection and targeted interventions. However, motion estimation is difficult due to the low SNR that stems from the inherent image properties of 4DE, and intelligent regularization is critical for producing reliable motion estimates. In this work, we incorporated the notion of domain adaptation into a supervised neural network regularization framework. We first proposed an unsupervised autoencoder network with biomechanical constraints for learning a latent representation that is shown to have more physiologically plausible displacements. We extended this framework to include a supervised loss term on synthetic data and showed the effects of biomechanical constraints on the network's ability for domain adaptation. We validated both the autoencoder and semi-supervised regularization method on in vivo data with implanted sonomicrometers. Finally, we showed the ability of our semi-supervised learning regularization approach to identify infarcted regions using estimated regional strain maps with good agreement to manually traced infarct regions from postmortem excised hearts.
