Marie Chabert

PG-DPIR: An efficient plug-and-play method for high-count Poisson-Gaussian inverse problems

Apr 14, 2025

Abstract: Poisson-Gaussian noise describes the noise of various imaging systems, hence the need for efficient algorithms for Poisson-Gaussian image restoration. Deep learning methods offer state-of-the-art performance but often require sensor-specific training when used in a supervised setting. A promising alternative is given by plug-and-play (PnP) methods, which learn only a regularization through a denoiser, so that images from several sources can be restored with the same network. This paper introduces PG-DPIR, an efficient PnP method for high-count Poisson-Gaussian inverse problems, adapted from DPIR. While DPIR is designed for white Gaussian noise, a naive adaptation to Poisson-Gaussian noise leads to prohibitively slow algorithms due to the absence of a closed-form proximal operator. To address this, we adapt DPIR to the specificities of Poisson-Gaussian noise and propose in particular an efficient initialization of the gradient descent required for the proximal step that accelerates convergence by several orders of magnitude. Experiments are conducted on satellite image restoration and super-resolution problems, using simulated high-resolution realistic Pleiades images. They demonstrate that PG-DPIR achieves state-of-the-art performance with improved efficiency, which seems promising for on-ground satellite processing chains.
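As a rough illustration of the noise model this abstract refers to, the sketch below simulates Poisson-Gaussian noise under a common parametrisation, y = gain * Poisson(x / gain) + N(0, sigma^2). The abstract does not state the exact model used in the paper, so the parametrisation, the function names, and the values of `gain` and `sigma` are all assumptions. Under this model the noise variance is gain * x + sigma^2, and at high counts the noise is well approximated by a Gaussian with that signal-dependent variance.

```python
import numpy as np

def simulate_poisson_gaussian(x, gain, sigma, rng):
    """Hypothetical helper: y = gain * Poisson(x / gain) + N(0, sigma^2)."""
    photons = rng.poisson(x / gain)                     # signal-dependent shot noise
    read_noise = rng.normal(0.0, sigma, size=x.shape)   # signal-independent noise
    return gain * photons + read_noise

def predicted_variance(x, gain, sigma):
    """Variance of y under the assumed model: gain * x + sigma^2."""
    return gain * x + sigma ** 2

rng = np.random.default_rng(0)
x = np.full(200_000, 500.0)   # constant high-count signal (assumed value)
y = simulate_poisson_gaussian(x, gain=2.0, sigma=3.0, rng=rng)

empirical_var = y.var()
predicted_var = predicted_variance(500.0, gain=2.0, sigma=3.0)
print(empirical_var, predicted_var)
```

The empirical variance of the simulated samples should land close to the predicted value, which is what makes a Gaussian data-fidelity term with per-pixel variance a reasonable surrogate in the high-count regime.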

Deep priors for satellite image restoration with accurate uncertainties

Dec 05, 2024

Abstract: Satellite optical images, upon their on-ground receipt, offer a distorted view of the observed scene. Their restoration, classically including denoising, deblurring, and sometimes super-resolution, is required before their exploitation. Moreover, quantifying the uncertainty related to this restoration could be valuable by lowering the risk of hallucination and avoiding propagating these biases in downstream applications. Deep learning methods are now state-of-the-art for satellite image restoration. However, they require training a specific network for each sensor and do not provide the associated uncertainties. This paper proposes a generic method involving a single network to restore images from several sensors and a scalable way to derive the uncertainties. We focus on deep regularization (DR) methods, which learn a deep prior on target images before plugging it into a model-based optimization scheme. First, we introduce VBLE-xz, which solves the inverse problem in the latent space of a variational compressive autoencoder, estimating the uncertainty jointly in the latent and in the image spaces. It enables scalable posterior sampling with relevant and calibrated uncertainties. Second, we propose the denoiser-based method SatDPIR, adapted from DPIR, which efficiently computes accurate point estimates. We conduct a comprehensive set of experiments on very high resolution simulated and real Pleiades images, asserting both the performance and robustness of the proposed methods. VBLE-xz and SatDPIR achieve state-of-the-art results compared to direct inversion methods. In particular, VBLE-xz is a scalable method to get realistic posterior samples and accurate uncertainties, while SatDPIR represents a compelling alternative to direct inversion methods when uncertainty quantification is not required.

Variational Bayes image restoration with compressive autoencoders

Nov 29, 2023

Abstract: Regularization of inverse problems is of paramount importance in computational imaging. The ability of neural networks to learn efficient image representations has been recently exploited to design powerful data-driven regularizers. While state-of-the-art plug-and-play methods rely on an implicit regularization provided by neural denoisers, alternative Bayesian approaches consider Maximum A Posteriori (MAP) estimation in the latent space of a generative model, thus with an explicit regularization. However, state-of-the-art deep generative models require a huge amount of training data compared to denoisers. Besides, their complexity hampers the optimization of the latent MAP. In this work, we propose to use compressive autoencoders for latent estimation. These networks, which can be seen as variational autoencoders with a flexible latent prior, are smaller and easier to train than state-of-the-art generative models. We then introduce the Variational Bayes Latent Estimation (VBLE) algorithm, which performs this estimation within the framework of variational inference. This allows for fast and easy (approximate) posterior sampling. Experimental results on the image datasets BSD and FFHQ demonstrate that VBLE reaches performance similar to that of state-of-the-art plug-and-play methods, while being able to quantify uncertainties faster than other existing posterior sampling techniques.

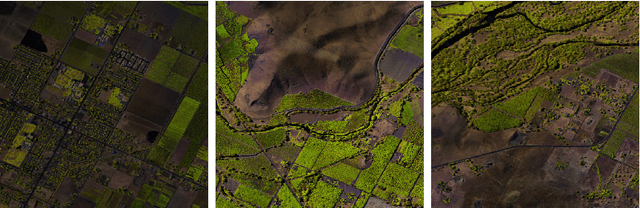

CD-GAN: a robust fusion-based generative adversarial network for unsupervised change detection between heterogeneous images

Mar 02, 2022

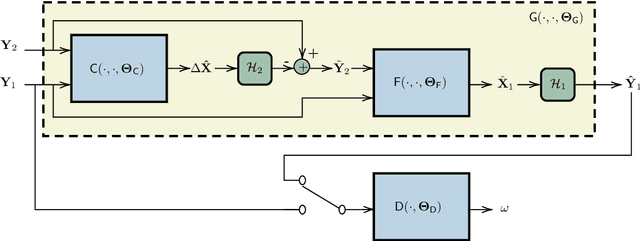

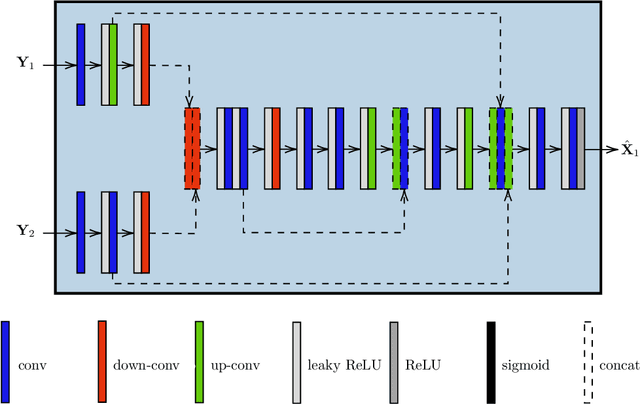

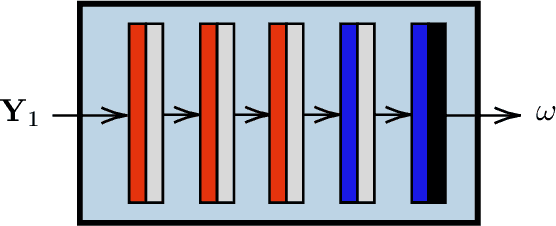

Abstract: In the context of Earth observation, the detection of changes is performed from multitemporal images acquired by sensors with possibly different characteristics and modalities. Even when restricting to the optical modality, this task has proved to be challenging as soon as the sensors provide images of different spatial and/or spectral resolutions. This paper proposes a novel unsupervised change detection method dedicated to such so-called heterogeneous optical images. This method capitalizes on recent advances which frame the change detection problem into a robust fusion framework. More precisely, we show that a deep adversarial network designed and trained beforehand to fuse a pair of multiband images can be easily complemented by a network with the same architecture to perform change detection. The resulting overall architecture itself follows an adversarial strategy where the fusion network and the additional network are interpreted as essential building blocks of a generator. A comparison with state-of-the-art change detection methods demonstrates the versatility and the effectiveness of the proposed approach.

Coupled dictionary learning for unsupervised change detection between multi-sensor remote sensing images

Jul 21, 2018

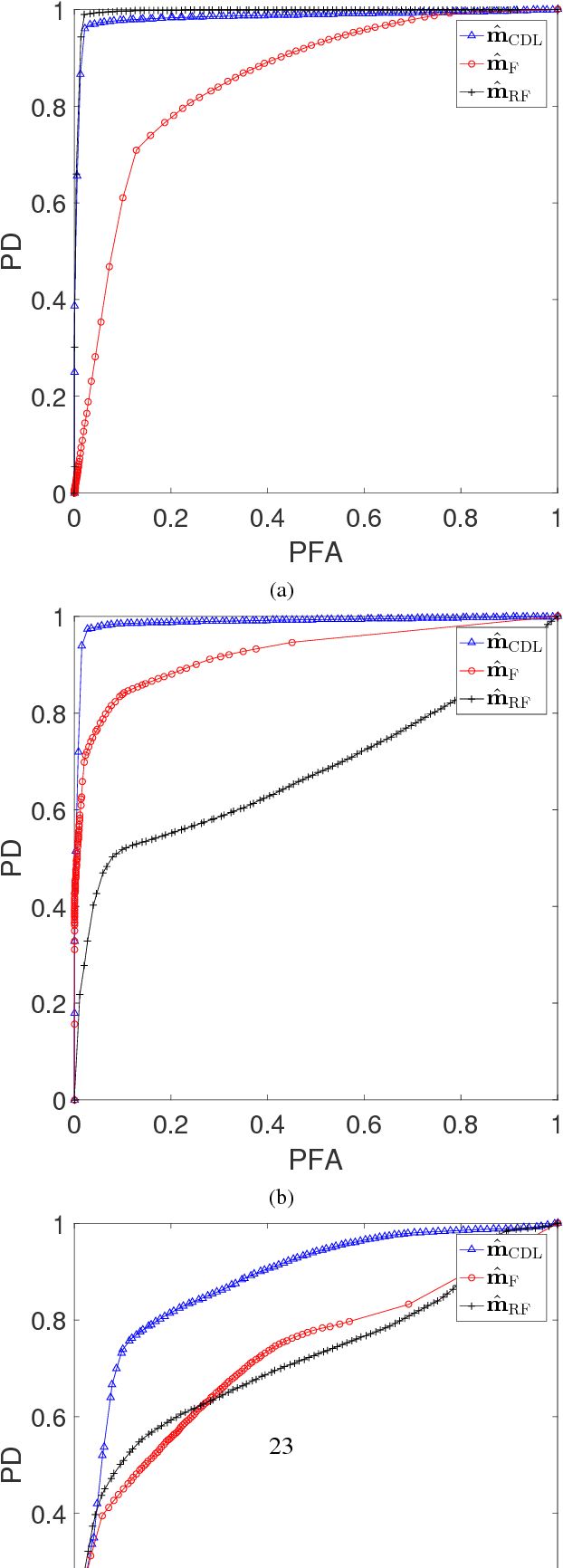

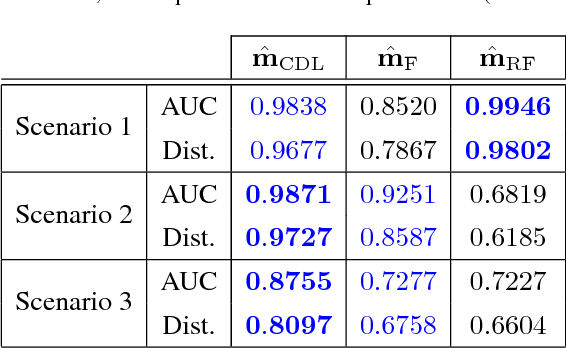

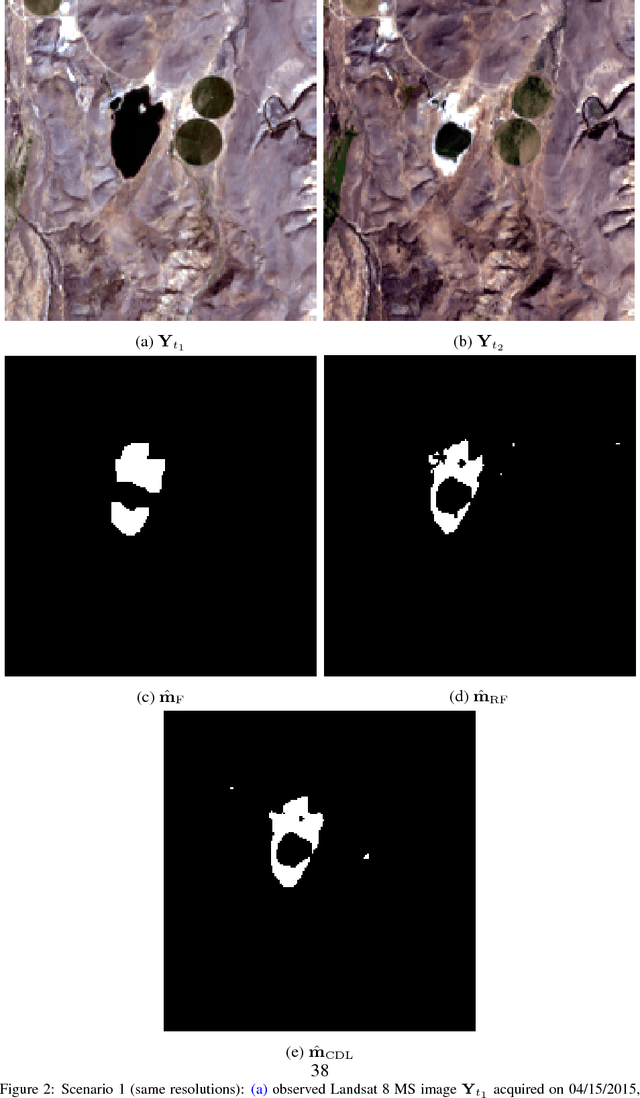

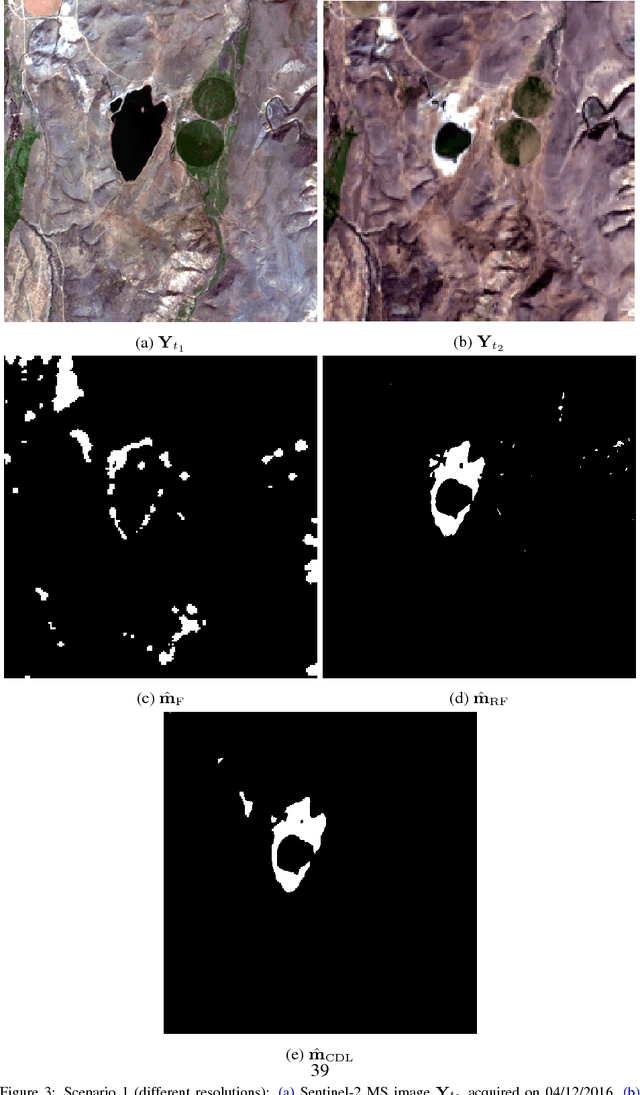

Abstract: Archetypal scenarios for change detection generally consider two images acquired through sensors of the same modality. However, in some specific cases such as emergency situations, the only images available may be those acquired through sensors with different characteristics. This paper addresses the problem of unsupervised detection of changes between two observed images acquired by different sensors. These sensor dissimilarities introduce additional issues in the context of operational change detection that are not addressed by most classical methods. This paper introduces a novel framework to effectively exploit the available information by modeling the two observed images as a sparse linear combination of atoms belonging to an overcomplete pair of coupled dictionaries learnt from each observed image. As they cover the same geographical location, codes are expected to be globally similar except for possible changes in sparse spatial locations. Thus, the change detection task is envisioned through a dual code estimation which enforces spatial sparsity in the difference between the estimated codes associated with each image. This problem is formulated as an inverse problem which is iteratively solved using an efficient proximal alternating minimization algorithm accounting for nonsmooth and nonconvex functions. The proposed method is applied to real multisensor images with simulated yet realistic and real changes. A comparison with state-of-the-art change detection methods evidences the accuracy of the proposed strategy.

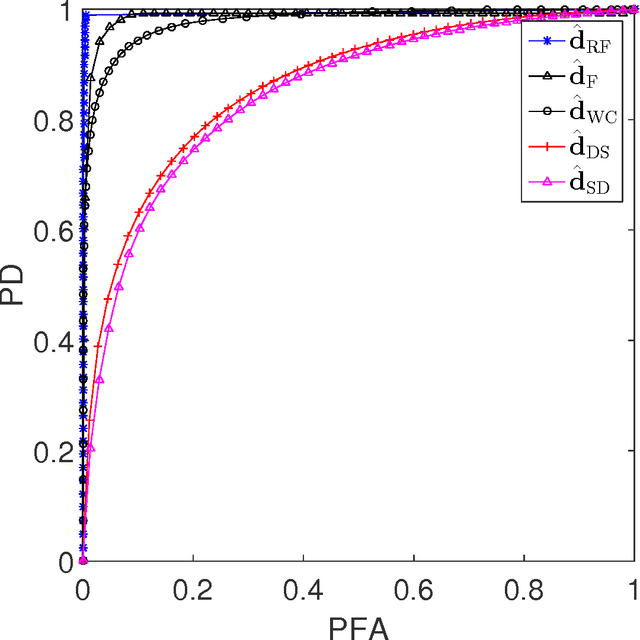

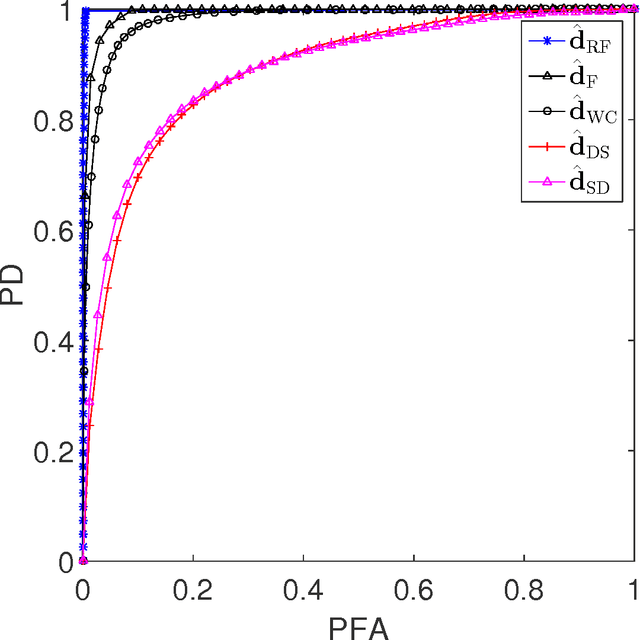

Robust fusion algorithms for unsupervised change detection between multi-band optical images - A comprehensive case study

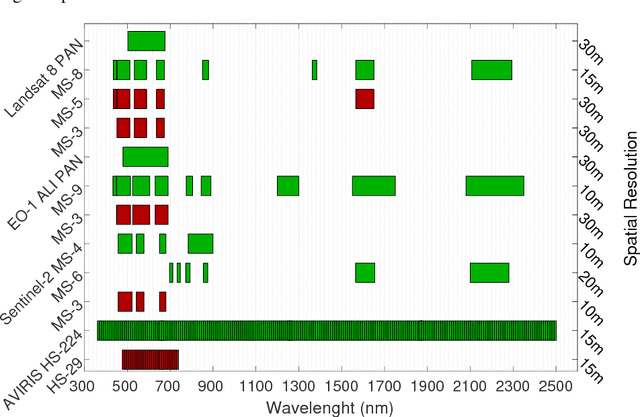

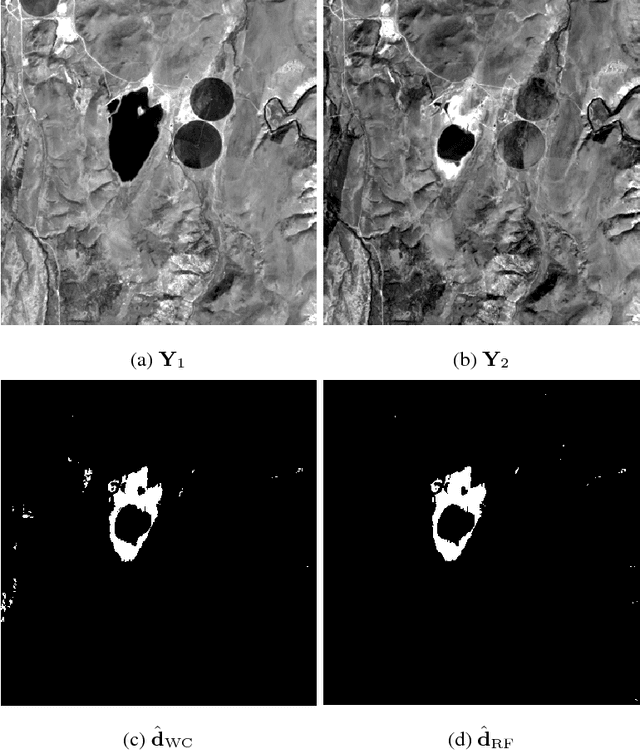

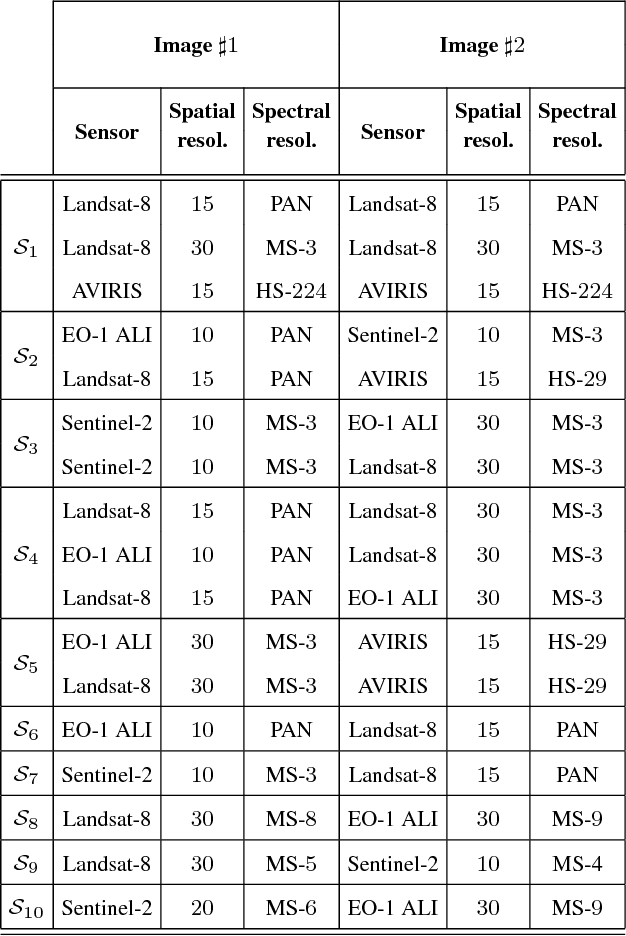

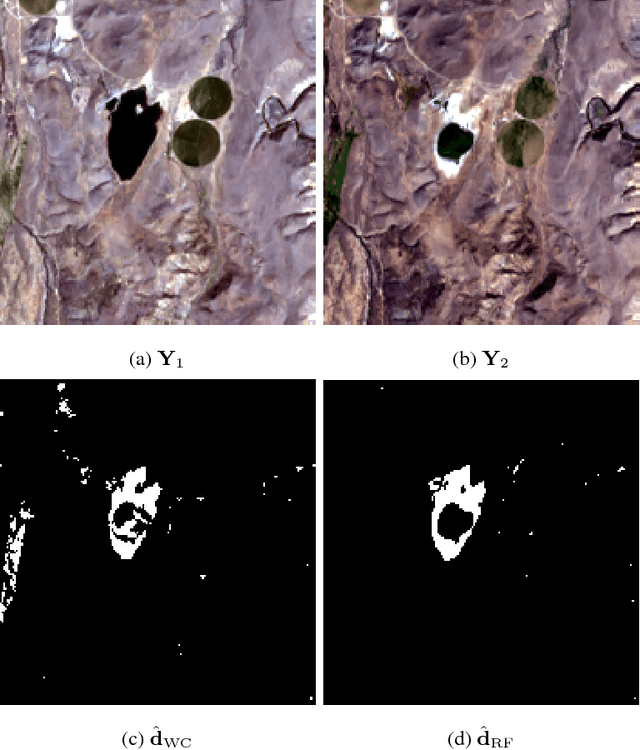

Apr 09, 2018

Abstract: Unsupervised change detection techniques are generally constrained to two multi-band optical images acquired at different times through sensors sharing the same spatial and spectral resolution. This scenario is suitable for a direct comparison of homologous pixels such as pixel-wise differencing. However, in some specific cases such as emergency situations, the only available images may be those acquired through different kinds of sensors with different resolutions. Recently, some change detection techniques dealing with images with different spatial and spectral resolutions have been proposed. Nevertheless, they are focused on a specific scenario where one image has a high spatial and low spectral resolution while the other has a low spatial and high spectral resolution. This paper addresses the problem of detecting changes between any two multi-band optical images regardless of their spatial and spectral resolution disparities. We propose a method that effectively uses the available information by modeling the two observed images as spatially and spectrally degraded versions of two (unobserved) latent images characterized by the same high spatial and high spectral resolutions. Covering the same scene, the latent images are expected to be globally similar except for possible changes in spatially sparse locations. Thus, the change detection task is envisioned through a robust fusion task which enforces the differences between the estimated latent images to be spatially sparse. We show that this robust fusion can be formulated as an inverse problem which is iteratively solved using an alternate minimization strategy. The proposed framework is implemented for an exhaustive list of applicative scenarios and applied to real multi-band optical images. A comparison with state-of-the-art change detection methods evidences the accuracy of the proposed robust fusion-based strategy.
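To make the idea of a spatially sparse difference between latent images concrete, here is a minimal sketch of one standard way to promote such sparsity: the proximal operator of an l2,1 norm (group soft-thresholding applied to each pixel's spectral vector). The abstract does not say which penalty or solver the paper uses, so the choice of the l2,1 norm, the function name `prox_l21`, and the toy data are all assumptions made for illustration.

```python
import numpy as np

def prox_l21(delta, threshold):
    """Group soft-thresholding (assumed penalty, not taken from the paper).

    delta has shape (bands, height, width). Each pixel's spectral vector is
    shrunk towards zero; vectors with norm below `threshold` become exactly zero.
    """
    norms = np.linalg.norm(delta, axis=0, keepdims=True)
    scale = np.maximum(1.0 - threshold / np.maximum(norms, 1e-12), 0.0)
    return delta * scale

# Toy difference image: one strong change and one weak, noise-like residual.
delta = np.zeros((3, 4, 4))
delta[:, 1, 2] = [3.0, 4.0, 0.0]   # spectral norm 5: a genuine change
delta[:, 0, 0] = [0.1, 0.1, 0.1]   # spectral norm ~0.17: below the threshold

shrunk = prox_l21(delta, threshold=1.0)
change_map = np.linalg.norm(shrunk, axis=0) > 0   # pixels flagged as changed
print(change_map.sum())
```

Only the pixel whose spectral difference exceeds the threshold survives, so the support of the shrunk difference can be read directly as a binary change map.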

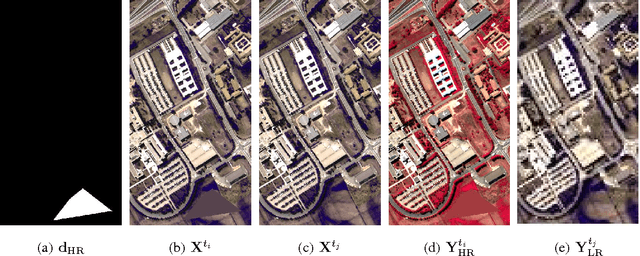

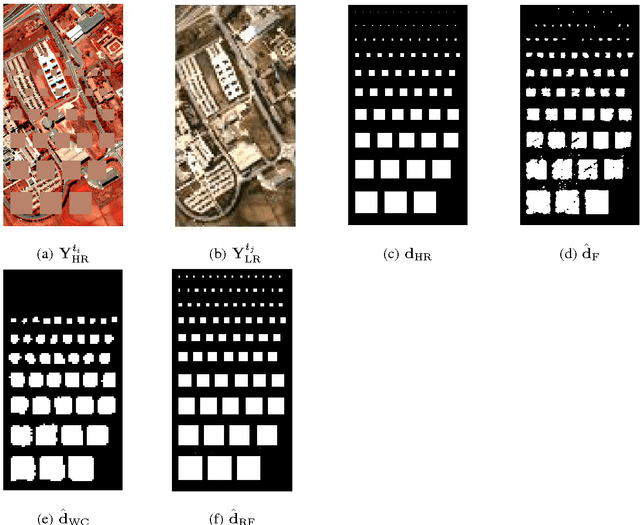

Robust Fusion of Multi-Band Images with Different Spatial and Spectral Resolutions for Change Detection

Sep 20, 2016

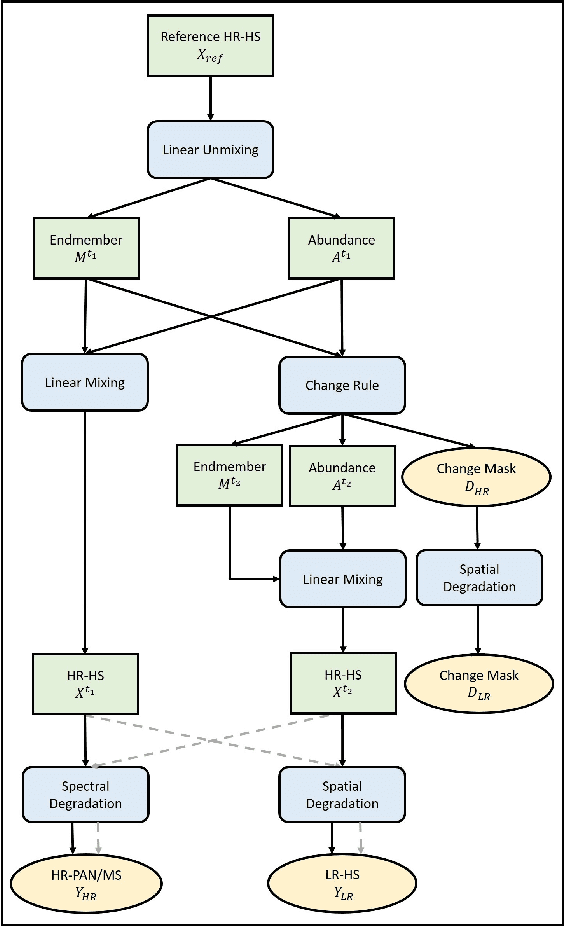

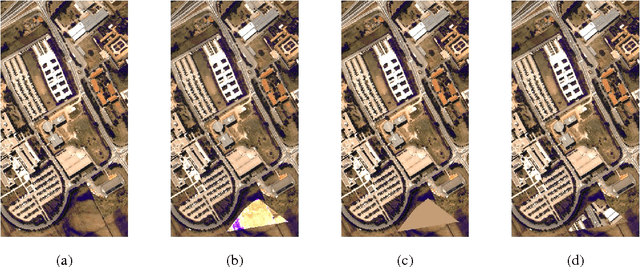

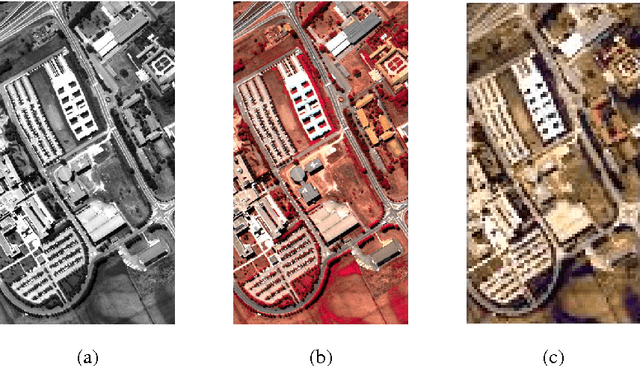

Abstract: Archetypal scenarios for change detection generally consider two images acquired through sensors of the same modality. However, in some specific cases such as emergency situations, the only images available may be those acquired through different kinds of sensors. More precisely, this paper addresses the problem of detecting changes between two multi-band optical images characterized by different spatial and spectral resolutions. This sensor dissimilarity introduces additional issues in the context of operational change detection. To alleviate these issues, classical change detection methods are applied after independent preprocessing steps (e.g., resampling) used to get the same spatial and spectral resolutions for the pair of observed images. Nevertheless, these preprocessing steps tend to throw away relevant information. Conversely, in this paper, we propose a method that more effectively uses the available information by modeling the two observed images as spatially and spectrally degraded versions of two (unobserved) latent images characterized by the same high spatial and high spectral resolutions. As they cover the same scene, these latent images are expected to be globally similar except for possible changes in sparse spatial locations. Thus, the change detection task is envisioned through a robust multi-band image fusion method which enforces the differences between the estimated latent images to be spatially sparse. This robust fusion problem is formulated as an inverse problem which is iteratively solved using an efficient block-coordinate descent algorithm. The proposed method is applied to real panchromatic/multispectral and hyperspectral images with simulated realistic changes. A comparison with state-of-the-art change detection methods evidences the accuracy of the proposed strategy.

Detecting Changes Between Optical Images of Different Spatial and Spectral Resolutions: a Fusion-Based Approach

Sep 20, 2016

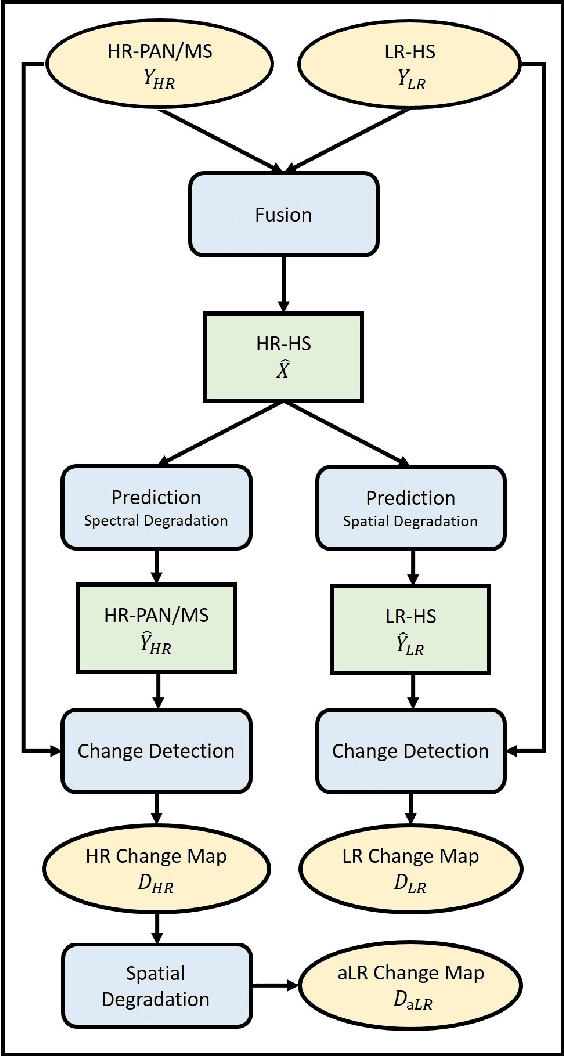

Abstract: Change detection is one of the most challenging issues when analyzing remotely sensed images. Comparing several multi-date images acquired through the same kind of sensor is the most common scenario. Conversely, designing robust, flexible and scalable algorithms for change detection becomes even more challenging when the images have been acquired by two different kinds of sensors. This situation arises in case of emergency under critical constraints. This paper presents, to the best of the authors' knowledge, the first strategy to deal with optical images characterized by dissimilar spatial and spectral resolutions. Typical considered scenarios include change detection between panchromatic or multispectral and hyperspectral images. The proposed strategy consists of a 3-step procedure: i) inferring a high spatial and spectral resolution image by fusion of the two observed images, one characterized by a low spatial resolution and the other by a low spectral resolution, ii) predicting two images with respectively the same spatial and spectral resolutions as the observed images by degradation of the fused one and iii) applying a decision rule to each pair of observed and predicted images characterized by the same spatial and spectral resolutions to identify changes. The performance of the proposed framework is evaluated on real images with simulated realistic changes.
