Luoyan Zhong

Curvature-Aware Calibration of Tactile Sensors for Accurate Force Estimation on Non-Planar Surfaces

Oct 29, 2025

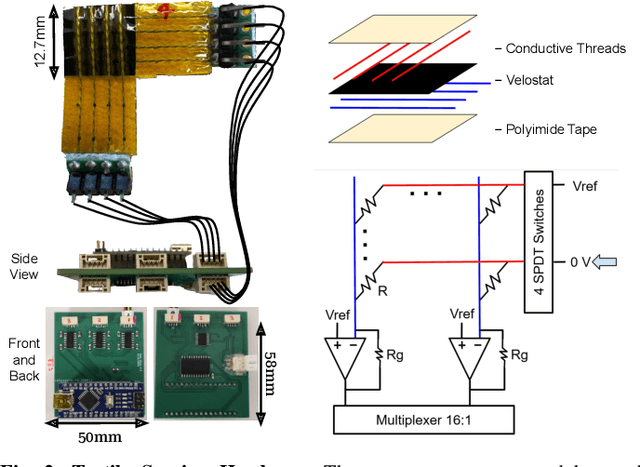

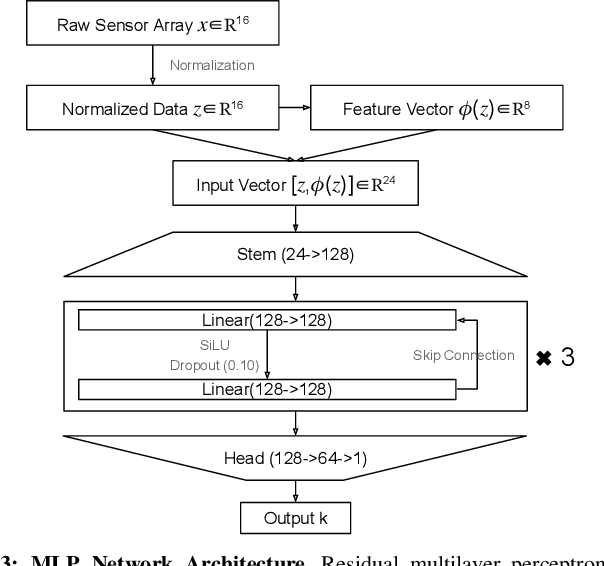

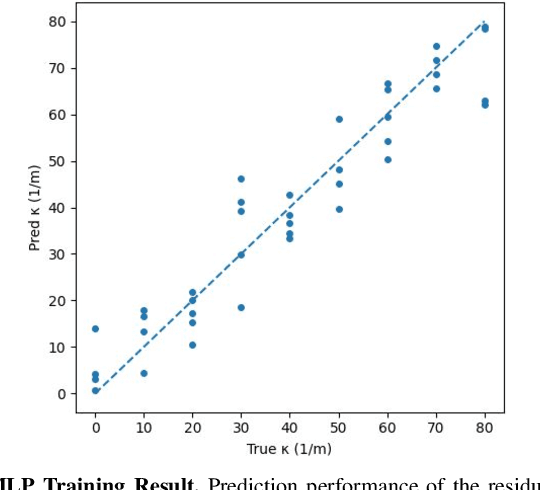

Abstract: Flexible tactile sensors are increasingly used in real-world applications such as robotic grippers, prosthetic hands, wearable gloves, and assistive devices, where they need to conform to curved and irregular surfaces. However, most existing tactile sensors are calibrated only on flat substrates, and their accuracy and consistency degrade once mounted on curved geometries. This limitation restricts their reliability in practical use. To address this challenge, we develop a calibration model for a widely used resistive tactile sensor design that enables accurate force estimation on one-dimensional curved surfaces. We then train a neural network (a multilayer perceptron) to predict local curvature from baseline sensor outputs recorded under no applied load, achieving an $R^2$ score of 0.91. The proposed approach is validated on five daily objects with varying curvatures under forces from 2 N to 8 N. Results show that the curvature-aware calibration maintains consistent force accuracy across all surfaces, while flat-surface calibration underestimates force as curvature increases. Our results demonstrate that curvature-aware modeling improves the accuracy, consistency, and reliability of flexible tactile sensors, enabling dependable performance across real-world applications.
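For readers unfamiliar with the metric quoted above, the following is a minimal sketch of how a coefficient of determination is commonly computed for a regression model such as the curvature predictor. The function name `r2_score` and the sample curvature values are illustrative assumptions and are not taken from the paper; the abstract does not state how the score was evaluated.

```python
def r2_score(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_true = sum(y_true) / len(y_true)
    # Residual sum of squares: error left after the model's predictions.
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    # Total sum of squares: variance of the targets around their mean.
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


# Hypothetical curvature labels and predictions (arbitrary units),
# made up purely for illustration.
true_curvature = [0.0, 10.0, 20.0, 30.0, 40.0]
pred_curvature = [2.0, 9.0, 21.0, 28.0, 41.0]

score = r2_score(true_curvature, pred_curvature)
print(f"R^2 = {score:.3f}")
```

A score of 1 means the predictions match the targets exactly, and a score of 0 means the model does no better than always predicting the mean of the targets.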

CushSense: Soft, Stretchable, and Comfortable Tactile-Sensing Skin for Physical Human-Robot Interaction

May 06, 2024

Abstract: Whole-arm tactile feedback is crucial for robots to ensure safe physical interaction with their surroundings. This paper introduces CushSense, a fabric-based soft and stretchable tactile-sensing skin designed for physical human-robot interaction (pHRI) tasks such as robotic caregiving. Using stretchable fabric and hyper-elastic polymer, CushSense identifies contacts by monitoring capacitive changes due to skin deformation. CushSense is cost-effective ($\sim$US\$7 per taxel) and easy to fabricate. We detail the sensor design and fabrication process and perform characterization, highlighting its high sensing accuracy (relative error of 0.58%) and durability (0.054% accuracy drop after 1000 interactions). We also present a user study underscoring its perceived safety and comfort for the assistive task of limb manipulation. We open source all sensor-related resources on https://emprise.cs.cornell.edu/cushsense.

RABBIT: A Robot-Assisted Bed Bathing System with Multimodal Perception and Integrated Compliance

Jan 26, 2024

Abstract: This paper introduces RABBIT, a novel robot-assisted bed bathing system designed to address the growing need for assistive technologies in personal hygiene tasks. It combines multimodal perception and dual (software and hardware) compliance to perform safe and comfortable physical human-robot interaction. Using RGB and thermal imaging to segment dry, soapy, and wet skin regions accurately, RABBIT can effectively execute washing, rinsing, and drying tasks in line with expert caregiving practices. Our system includes custom-designed motion primitives inspired by human caregiving techniques, and a novel compliant end-effector called Scrubby, optimized for gentle and effective interactions. We conducted a user study with 12 participants, including one participant with severe mobility limitations, demonstrating the system's effectiveness and perceived comfort. Supplementary material and videos can be found on our website https://emprise.cs.cornell.edu/rabbit.
