Lucas Lacasa

On the Role of Consistency Between Physics and Data in Physics-Informed Neural Networks

Feb 11, 2026

Abstract:Physics-informed neural networks (PINNs) have gained significant attention as a surrogate modeling strategy for partial differential equations (PDEs), particularly in regimes where labeled data are scarce and physical constraints can be leveraged to regularize the learning process. In practice, however, PINNs are frequently trained using experimental or numerical data that are not fully consistent with the governing equations due to measurement noise, discretization errors, or modeling assumptions. The implications of such data-to-PDE inconsistencies on the accuracy and convergence of PINNs remain insufficiently understood. In this work, we systematically analyze how data inconsistency fundamentally limits the attainable accuracy of PINNs. We introduce the concept of a consistency barrier, defined as an intrinsic lower bound on the error that arises from mismatches between the fidelity of the data and the exact enforcement of the PDE residual. To isolate and quantify this effect, we consider the 1D viscous Burgers equation with a manufactured analytical solution, which enables full control over data fidelity and residual errors. PINNs are trained using datasets of progressively increasing numerical accuracy, as well as perfectly consistent analytical data. Results show that while the inclusion of the PDE residual allows PINNs to partially mitigate low-fidelity data and recover the dominant physical structure, the training process ultimately saturates at an error level dictated by the data inconsistency. When high-fidelity numerical data are employed, PINN solutions become indistinguishable from those trained on analytical data, indicating that the consistency barrier is effectively removed. These findings clarify the interplay between data quality and physics enforcement in PINNs, providing practical guidance for the construction and interpretation of physics-informed surrogate models.
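For orientation, the trade-off between data fit and PDE enforcement described in this abstract can be written as a generic composite PINN loss. This is a sketch under assumptions, not the paper's formulation: the weight $\lambda$, the sample sizes $N_d$ and $N_r$, the network output $u_\theta$, and the forcing term $f$ (which a manufactured solution typically introduces) are all illustrative symbols that do not appear in the abstract.

$$
r_\theta(x,t) = \partial_t u_\theta + u_\theta\,\partial_x u_\theta - \nu\,\partial_{xx} u_\theta - f(x,t)
$$

$$
\mathcal{L}(\theta) = \frac{1}{N_d}\sum_{i=1}^{N_d}\left|u_\theta(x_i,t_i) - u_i^{\mathrm{data}}\right|^2 + \lambda\,\frac{1}{N_r}\sum_{j=1}^{N_r}\left|r_\theta(x_j,t_j)\right|^2
$$

If the data $u_i^{\mathrm{data}}$ do not satisfy $r = 0$ exactly, the two terms cannot both vanish, which is one way to read the saturation the abstract calls a consistency barrier.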

Leveraging chaos in the training of artificial neural networks

Jun 10, 2025

Abstract:Traditional algorithms to optimize artificial neural networks when confronted with a supervised learning task are usually exploitation-type relaxational dynamics such as gradient descent (GD). Here, we explore the dynamics of the neural network trajectory along training for unconventionally large learning rates. We show that for a region of values of the learning rate, the GD optimization shifts away from a purely exploitation-like algorithm into a regime of exploration-exploitation balance, as the neural network is still capable of learning but the trajectory shows sensitive dependence on initial conditions (as characterized by a positive network maximum Lyapunov exponent). Interestingly, the characteristic training time required to reach an acceptable accuracy in the test set reaches a minimum precisely in such learning rate region, further suggesting that one can accelerate the training of artificial neural networks by operating at the onset of chaos. Our results, initially illustrated for the MNIST classification task, qualitatively hold for a range of supervised learning tasks, learning architectures and other hyperparameters, and showcase the emergent, constructive role of transient chaotic dynamics in the training of artificial neural networks.
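The idea of measuring sensitive dependence on initial conditions in a gradient descent trajectory can be illustrated on a toy problem. The sketch below is not the paper's network-level procedure: it assumes a scalar double-well loss $L(\theta) = \theta^4/4 - \theta^2/2$ chosen purely for illustration, treats the GD update as a one-dimensional map, and estimates its Lyapunov exponent as the trajectory average of the log of the map's derivative. The function name `gd_lyapunov` and all parameter values are hypothetical.

```python
import math

def gd_lyapunov(lr, theta0=0.3, steps=5000, burn_in=1000):
    """Estimate the Lyapunov exponent of the GD map
    theta -> theta - lr * (theta**3 - theta)
    for the toy loss L(theta) = theta**4/4 - theta**2/2.
    Intended for 0 < lr <= 2, where the iterates stay bounded."""
    theta = theta0
    total = 0.0
    for t in range(burn_in + steps):
        # Derivative of the update map with respect to theta.
        jac = 1.0 - lr * (3.0 * theta**2 - 1.0)
        if t >= burn_in:
            # The tiny offset avoids log(0) if the derivative vanishes exactly.
            total += math.log(abs(jac) + 1e-300)
        theta = theta - lr * (theta**3 - theta)
    return total / steps

# Scan a few learning rates; a negative value indicates a stable
# (relaxational) regime, a positive value indicates chaos.
for lr in (0.1, 1.5, 1.9):
    print(f"lr={lr}: exponent estimate {gd_lyapunov(lr):.4f}")
```

For a small learning rate the trajectory relaxes to a minimum and the estimate equals the log of the local contraction factor; scanning larger rates shows where, if anywhere, the sign changes for this toy map.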

Aerodynamic and structural airfoil shape optimisation via Transfer Learning-enhanced Deep Reinforcement Learning

May 05, 2025

Abstract:The main objective of this paper is to introduce a transfer learning-enhanced, multi-objective, deep reinforcement learning (DRL) methodology that is able to optimise the geometry of any airfoil based on concomitant aerodynamic and structural criteria. To showcase the method, we aim to maximise the lift-to-drag ratio $C_L/C_D$ while preserving the structural integrity of the airfoil -- as modelled by its maximum thickness -- and train the DRL agent using a list of different transfer learning (TL) strategies. The performance of the DRL agent is compared with Particle Swarm Optimisation (PSO), a traditional gradient-free optimisation method. Results indicate that DRL agents are able to perform multi-objective shape optimisation, that the DRL approach outperforms PSO in terms of computational efficiency and shape optimisation performance, and that the TL-enhanced DRL agent achieves performance comparable to the DRL one, while further saving substantial computational resources.

Towards certification: A complete statistical validation pipeline for supervised learning in industry

Nov 04, 2024

Abstract:Methods of Machine and Deep Learning are gradually being integrated into industrial operations, albeit at different speeds for different types of industries. The aerospace and aeronautical industries have recently developed a roadmap for concepts of design assurance and integration of neural network-related technologies in the aeronautical sector. This paper aims to contribute to this paradigm of AI-based certification in the context of supervised learning, by outlining a complete validation pipeline that integrates deep learning, optimization and statistical methods. This pipeline is composed of a directed graphical model of ten steps. Each of these steps is addressed by merging key concepts from different contributing disciplines (from machine learning or optimization to statistics) and adapting them to an industrial scenario, as well as by developing computationally efficient algorithmic solutions. We illustrate the application of this pipeline in a realistic supervised problem arising in aerostructural design: predicting the likelihood of different stress-related failure modes during different airflight maneuvers based on a (large) set of features characterising the aircraft internal loads and geometric parameters.

Dynamical stability and chaos in artificial neural network trajectories along training

Apr 08, 2024

Abstract:The process of training an artificial neural network involves iteratively adapting its parameters so as to minimize the error of the network's prediction, when confronted with a learning task. This iterative change can be naturally interpreted as a trajectory in network space -- a time series of networks -- and thus the training algorithm (e.g. gradient descent optimization of a suitable loss function) can be interpreted as a dynamical system in graph space. In order to illustrate this interpretation, here we study the dynamical properties of this process by analyzing through this lens the network trajectories of a shallow neural network, and its evolution through learning a simple classification task. We systematically consider different ranges of the learning rate and explore both the dynamical and orbital stability of the resulting network trajectories, finding hints of regular and chaotic behavior depending on the learning rate regime. Our findings are put in contrast to common wisdom on convergence properties of neural networks and dynamical systems theory. This work also contributes to the cross-fertilization of ideas between dynamical systems theory, network theory and machine learning.

An effective theory of collective deep learning

Oct 19, 2023

Abstract:Unraveling the emergence of collective learning in systems of coupled artificial neural networks is an endeavor with broader implications for physics, machine learning, neuroscience and society. Here we introduce a minimal model that condenses several recent decentralized algorithms by considering a competition between two terms: the local learning dynamics in the parameters of each neural network unit, and a diffusive coupling among units that tends to homogenize the parameters of the ensemble. We derive the coarse-grained behavior of our model via an effective theory for linear networks that we show is analogous to a deformed Ginzburg-Landau model with quenched disorder. This framework predicts (depth-dependent) disorder-order-disorder phase transitions in the parameters' solutions that reveal the onset of a collective learning phase, along with a depth-induced delay of the critical point and a robust shape of the microscopic learning path. We validate our theory in realistic ensembles of coupled nonlinear networks trained on the MNIST dataset under privacy constraints. Interestingly, experiments confirm that individual networks -- trained only with private data -- can fully generalize to unseen data classes when the collective learning phase emerges. Our work elucidates the physics of collective learning and contributes to the mechanistic interpretability of deep learning in decentralized settings.
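The competition between local learning and diffusive coupling can be made concrete with a deliberately small sketch. It is not the model from the paper: it assumes each unit has a single scalar parameter, a private quadratic loss $\tfrac{1}{2}(w_i - a_i)^2$ with a unit-specific target $a_i$, and all-to-all coupling toward the ensemble mean. The function name `train_coupled`, the targets, and the step sizes are hypothetical choices for illustration.

```python
import numpy as np

def train_coupled(targets, lr=0.1, coupling=0.0, steps=500):
    """Iterate w_i <- w_i - lr * (w_i - a_i) + coupling * (mean(w) - w_i).

    The first term is a local gradient step on each unit's private loss;
    the second is a diffusive term pulling every unit toward the mean."""
    targets = np.asarray(targets, dtype=float)
    w = np.zeros_like(targets)
    for _ in range(steps):
        local_grad = w - targets      # gradient of 0.5 * (w - a)^2
        diffusion = w.mean() - w      # all-to-all diffusive coupling
        w = w - lr * local_grad + coupling * diffusion
    return w

targets = [-1.0, 0.0, 1.0, 2.0]                    # private optima
w_isolated = train_coupled(targets, coupling=0.0)  # no communication
w_coupled = train_coupled(targets, coupling=0.5)   # diffusive coupling

print("spread without coupling:", np.std(w_isolated))
print("spread with coupling:   ", np.std(w_coupled))
```

In this toy setting the stationary spread of the parameters shrinks by the factor `lr / (lr + coupling)` while their mean is unchanged, which shows the homogenizing role of the diffusive term in the simplest possible case.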

Predicting e-commerce customer conversion from minimal temporal patterns on symbolized clickstream trajectories

Jul 03, 2019

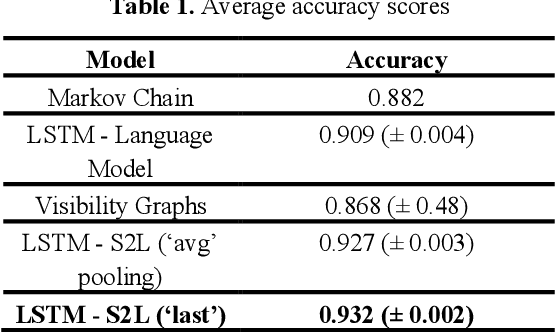

Abstract:Knowing if a user is a buyer or window shopper solely based on clickstream data is of crucial importance for e-commerce platforms seeking to implement real-time accurate NBA (next best action) policies. However, due to the low frequency of conversion events and the noisiness of browsing data, classifying user sessions is very challenging. In this paper, we address the clickstream classification problem in the eCommerce industry and present three major contributions to the burgeoning field of AI-for-retail: first, we collected, normalized and prepared a novel dataset of live shopping sessions from a major European e-commerce website; second, we use the dataset to test in a controlled environment strong baselines and SOTA models from the literature; finally, we propose a new discriminative neural model that outperforms neural architectures recently proposed at Rakuten labs.

Prediction is very hard, especially about conversion. Predicting user purchases from clickstream data in fashion e-commerce

Jun 30, 2019

Abstract:Knowing if a user is a buyer vs window shopper solely based on clickstream data is of crucial importance for ecommerce platforms seeking to implement real-time accurate NBA (next best action) policies. However, due to the low frequency of conversion events and the noisiness of browsing data, classifying user sessions is very challenging. In this paper, we address the clickstream classification problem in the fashion industry and present three major contributions to the burgeoning field of AI in fashion: first, we collected, normalized and prepared a novel dataset of live shopping sessions from a major European e-commerce fashion website; second, we use the dataset to test in a controlled environment strong baselines and SOTA models from the literature; finally, we propose a new discriminative neural model that outperforms neural architectures recently proposed at Rakuten labs.

Effect of antipsychotics on community structure in functional brain networks

Jun 04, 2018

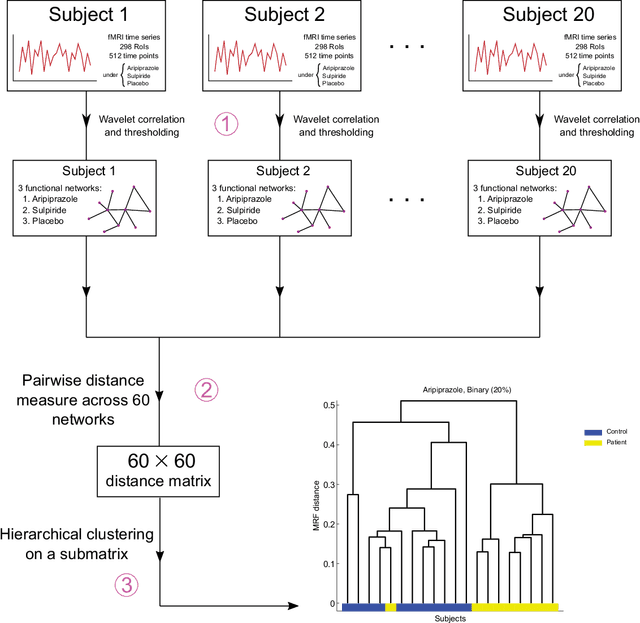

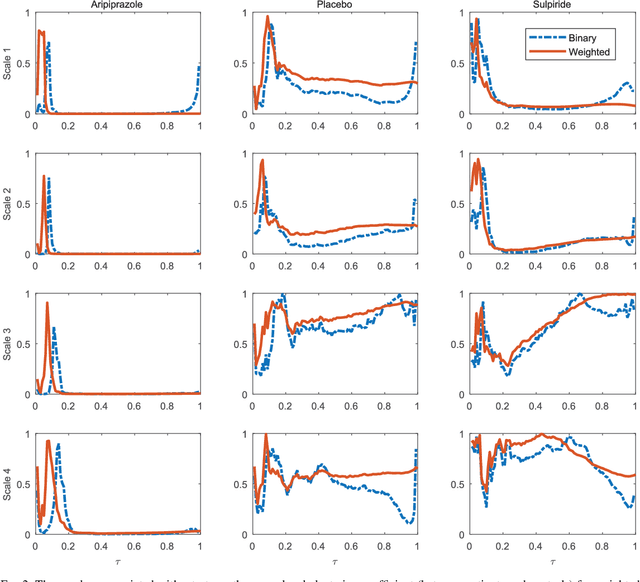

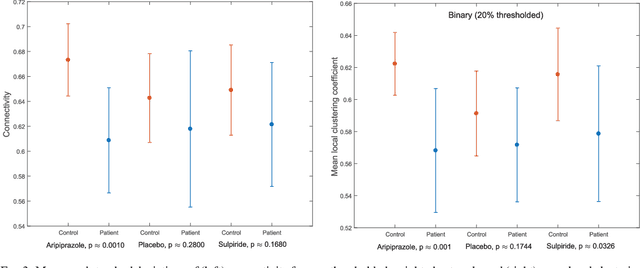

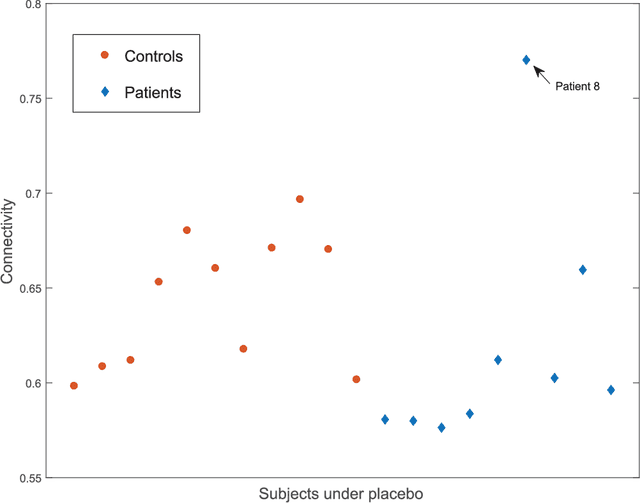

Abstract:Schizophrenia, a mental disorder that is characterized by abnormal social behavior and failure to distinguish one's own thoughts and ideas from reality, has been associated with structural abnormalities in the architecture of functional brain networks. Using various methods from network analysis, we examine the effect of two classical therapeutic antipsychotics --- Aripiprazole and Sulpiride --- on the structure of functional brain networks of healthy controls and patients who have been diagnosed with schizophrenia. We compare the community structures of functional brain networks of different individuals using mesoscopic response functions, which measure how community structure changes across different scales of a network. We are able to do a reasonably good job of distinguishing patients from controls, and we are most successful at this task on people who have been treated with Aripiprazole. We demonstrate that this increased separation between patients and controls is related only to a change in the control group, as the functional brain networks of the patient group appear to be predominantly unaffected by this drug. This suggests that Aripiprazole has a significant and measurable effect on community structure in healthy individuals but not in individuals who are diagnosed with schizophrenia. In contrast, we find that for individuals who are given the drug Sulpiride, it is more difficult to separate the networks of patients from those of controls. Overall, we observe differences in the effects of the drugs (and a placebo) on community structure in patients and controls and also that this effect differs across groups. We thereby demonstrate that different types of antipsychotic drugs selectively affect mesoscale structures of brain networks, providing support that mesoscale structures such as communities are meaningful functional units in the brain.

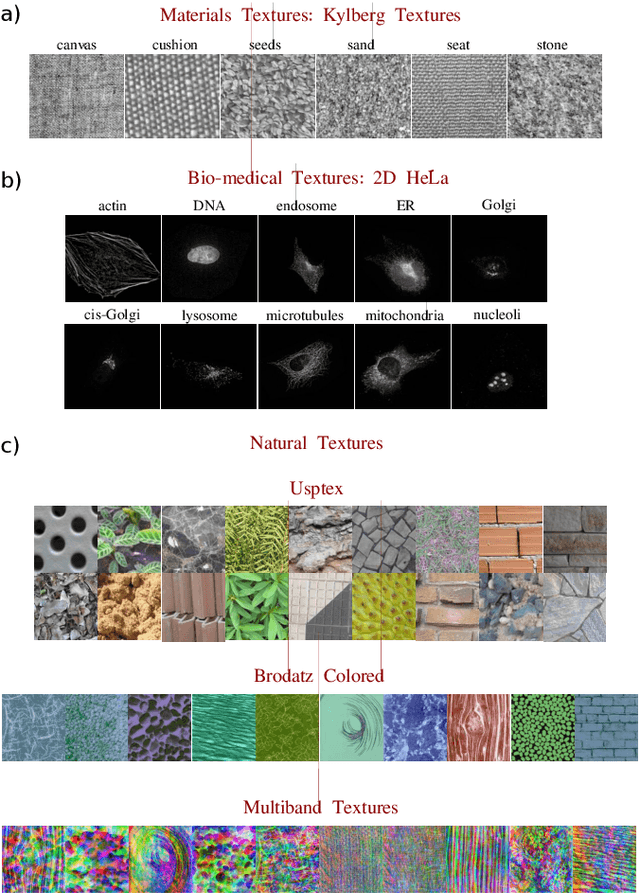

Visibility graphs for image processing

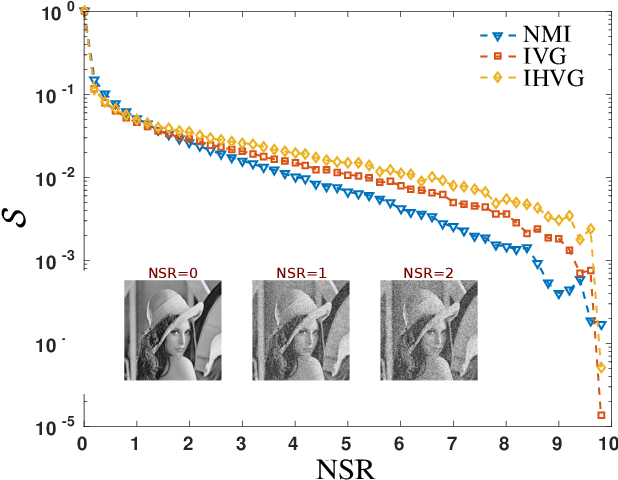

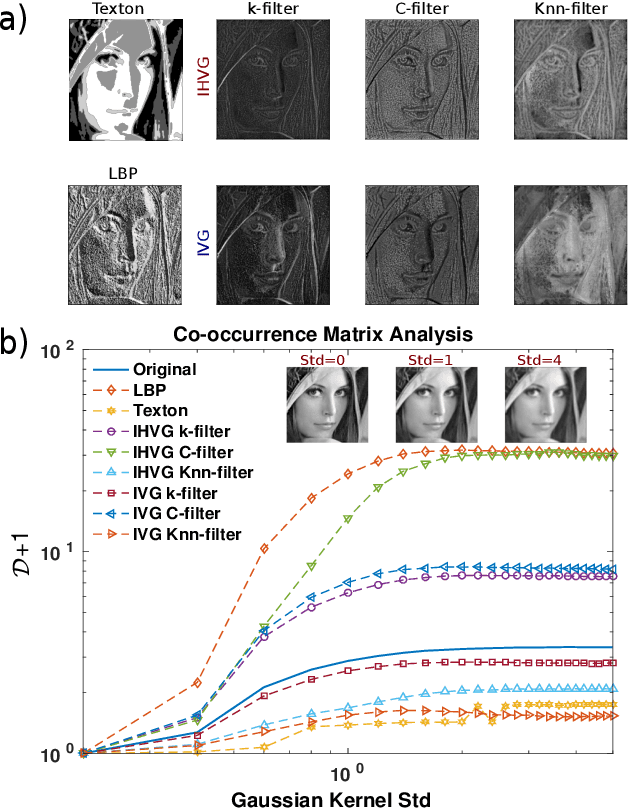

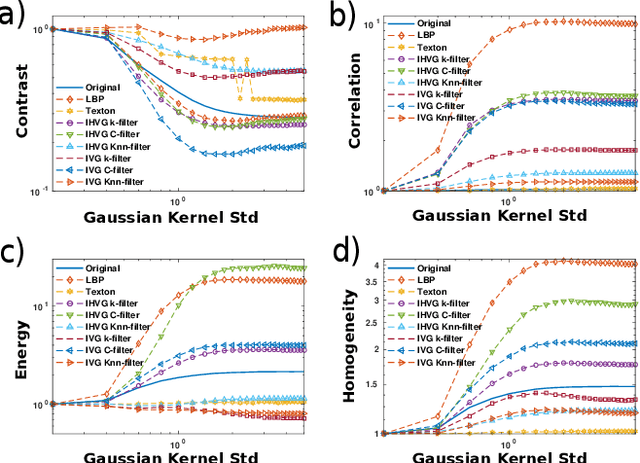

Apr 19, 2018

Abstract:The family of image visibility graphs (IVGs) has been recently introduced as simple algorithms by which scalar fields can be mapped into graphs. Here we explore the usefulness of such an operator in the scenario of image processing and image classification. We demonstrate that the link architecture of the image visibility graphs encapsulates relevant information on the structure of the images and we explore their potential as image filters and compressors. We introduce several graph features, including the novel concept of Visibility Patches, and show through several examples that these features are highly informative, computationally efficient and universally applicable for general pattern recognition and image classification tasks.
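To give a feel for how a scalar field can be mapped into a graph, the sketch below applies the horizontal visibility criterion (two samples are linked when every sample between them is strictly lower than both) along each row and each column of a small image, then records the degree of every pixel. This is a simplified illustration under assumptions, not the IVG definition from the paper: the abstract does not state which scan directions or which visibility criterion the IVG family uses, and the function names `hvg_edges` and `image_hvg_degree` are hypothetical.

```python
import numpy as np

def hvg_edges(series):
    """Horizontal visibility edges (i, j) with i < j for a 1D sequence."""
    edges = []
    n = len(series)
    for i in range(n - 1):
        blocker = -np.inf  # largest value strictly between i and j
        for j in range(i + 1, n):
            if blocker < min(series[i], series[j]):
                edges.append((i, j))
            blocker = max(blocker, series[j])
            if blocker >= series[i]:
                break  # nothing further to the right can see sample i
    return edges

def image_hvg_degree(image):
    """Per-pixel degree when visibility is evaluated along rows and columns."""
    image = np.asarray(image, dtype=float)
    rows, cols = image.shape
    degree = np.zeros((rows, cols), dtype=int)
    for r in range(rows):
        for i, j in hvg_edges(image[r, :]):
            degree[r, i] += 1
            degree[r, j] += 1
    for c in range(cols):
        for i, j in hvg_edges(image[:, c]):
            degree[i, c] += 1
            degree[j, c] += 1
    return degree

image = [[3, 1, 2],
         [1, 1, 1],
         [2, 1, 3]]
degree_map = image_hvg_degree(image)
print(degree_map)
```

The resulting degree map has the same shape as the image, so it can be viewed as a filtered version of it; that is one simple way a graph feature might serve as an image descriptor.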
