Lior Limonad

The WHY in Business Processes: Unification of Causal Process Models

May 28, 2025

Abstract:Causal reasoning is essential for business process interventions and improvement, requiring a clear understanding of causal relationships among activity execution times in an event log. Recent work introduced a method for discovering causal process models but lacked the ability to capture alternating causal conditions across multiple variants. This raises the challenges of handling missing values and expressing the alternating conditions among log splits when blending traces with varying activities. We propose a novel method to unify multiple causal process variants into a consistent model that preserves the correctness of the original causal models, while explicitly representing their causal-flow alternations. The method is formally defined, proved, evaluated on three open and two proprietary datasets, and released as an open-source implementation.

Agentic AI Process Observability: Discovering Behavioral Variability

May 26, 2025

Abstract:AI agents that leverage Large Language Models (LLMs) are increasingly becoming core building blocks of modern software systems. A wide range of frameworks is now available to support the specification of such applications. These frameworks enable the definition of agent setups using natural language prompting, which specifies the roles, goals, and tools assigned to the various agents involved. Within such setups, agent behavior is non-deterministic for any given input, highlighting the critical need for robust debugging and observability tools. In this work, we explore the use of process and causal discovery applied to agent execution trajectories as a means of enhancing developer observability. This approach aids in monitoring and understanding the emergent variability in agent behavior. Additionally, we complement this with LLM-based static analysis techniques to distinguish between intended and unintended behavioral variability. We argue that such instrumentation is essential for giving developers greater control over evolving specifications and for identifying aspects of functionality that may require more precise and explicit definitions.

Selecting the Right LLM for eGov Explanations

Apr 27, 2025

Abstract:The perceived quality of the explanations accompanying e-government services is key to gaining trust in these institutions, consequently amplifying further usage of these services. Recent advances in generative AI, and concretely in Large Language Models (LLMs), allow the automation of such content articulations, eliciting explanations' interpretability and fidelity, and more generally, adapting content to various audiences. However, selecting the right LLM type for this has become a non-trivial task for e-government service providers. In this work, we adapted a previously developed scale to assist with this selection, providing a systematic approach for the comparative analysis of the perceived quality of explanations generated by various LLMs. We further demonstrated its applicability through the tax-return process, using it as an exemplar use case that could benefit from employing an LLM to generate explanations about tax refund decisions. This was attained through a user study with 128 survey respondents who were asked to rate different versions of LLM-generated explanations about tax refund decisions, providing a methodological basis for selecting the most appropriate LLM. Recognizing the practical challenges of conducting such a survey, we also began exploring the automation of this process by attempting to replicate human feedback using a selection of cutting-edge predictive techniques.

Monetizing Currency Pair Sentiments through LLM Explainability

Jul 29, 2024

Abstract:Large language models (LLMs) play a vital role in almost every domain in today's organizations. In the context of this work, we highlight the use of LLMs for sentiment analysis (SA) and explainability. Specifically, we contribute a novel technique to leverage LLMs as a post-hoc model-independent tool for the explainability of SA. We applied our technique in the financial domain for currency-pair price predictions using open news feed data merged with market prices. Our application shows that the developed technique is not only a viable alternative to using conventional eXplainable AI but can also be fed back to enrich the input to the machine learning (ML) model to better predict future currency-pair values. We envision our results could be generalized to employing explainability as a conventional enrichment for ML input for better ML predictions in general.

Towards a Benchmark for Causal Business Process Reasoning with LLMs

Jun 08, 2024

Abstract:Large Language Models (LLMs) are increasingly used for boosting organizational efficiency and automating tasks. While LLMs were not originally designed for complex cognitive processes, recent efforts have extended their use to activities such as reasoning, planning, and decision-making. In business processes, such abilities could be invaluable for leveraging the massive corpora LLMs have been trained on to gain a deep understanding of such processes. In this work, we plant the seeds for the development of a benchmark to assess the ability of LLMs to reason about causal and process perspectives of business operations. We refer to this view as Causally-augmented Business Processes (BP^C). The core of the benchmark comprises a set of BP^C related situations, a set of questions about these situations, and a set of deductive rules employed to systematically resolve the ground truth answers to these questions. Also with the power of LLMs, the seed is then instantiated into a larger-scale set of domain-specific situations and questions. Reasoning on BP^C is of crucial importance for process interventions and process improvement. Our benchmark could be used in one of two possible modalities: testing the performance of any target LLM and training an LLM to advance its capability to reason about BP^C.

How well can large language models explain business processes?

Jan 23, 2024

Abstract:Large Language Models (LLMs) are likely to play a prominent role in future AI-augmented business process management systems (ABPMSs), catering to functionalities across all system lifecycle stages. One such system's functionality is Situation-Aware eXplainability (SAX), which relates to generating causally sound and yet human-interpretable explanations that take into account the process context in which the explained condition occurred. In this paper, we present the SAX4BPM framework developed to generate SAX explanations. The SAX4BPM suite consists of a set of services and a central knowledge repository. The functionality of these services is to elicit the various knowledge ingredients that underlie SAX explanations. A key innovative component among these ingredients is the causal process execution view. In this work, we integrate the framework with an LLM to leverage its power to synthesize the various input ingredients for the sake of improved SAX explanations. Since the use of LLMs for SAX is also accompanied by a certain degree of doubt related to its capacity to adequately fulfill SAX along with its tendency for hallucination and lack of inherent capacity to reason, we pursued a methodological evaluation of the quality of the generated explanations. To this aim, we developed a designated scale and conducted a rigorous user study. Our findings show that the input presented to the LLMs helped guard-rail its performance, yielding SAX explanations having better-perceived fidelity. This improvement is moderated by the perception of trust and curiosity. Moreover, this improvement comes at the cost of the perceived interpretability of the explanation.

The WHY in Business Processes: Discovery of Causal Execution Dependencies

Oct 23, 2023

Abstract:A crucial element in predicting the outcomes of process interventions and making informed decisions about the process is unraveling the genuine relationships between the execution of process activities. Contemporary process discovery algorithms exploit time precedence as their main source of model derivation. Such reliance can sometimes be deceiving from a causal perspective. This calls for faithful new techniques to discover the true execution dependencies among the tasks in the process. To this end, our work offers a systematic approach to the unveiling of the true causal business process by leveraging an existing causal discovery algorithm over activity timing. In addition, this work delves into a set of conditions under which process mining discovery algorithms generate a model that is incongruent with the causal business process model, and shows how the latter model can be methodologically employed for a sound analysis of the process. Our methodology searches for such discrepancies between the two models in the context of three causal patterns, and derives a new view in which these inconsistencies are annotated over the mined process model. We demonstrate our methodology employing two open process mining algorithms, the IBM Process Mining tool, and the LiNGAM causal discovery technique. We apply it to a synthesized dataset and to two open benchmark datasets.

Mimicking the Maestro: Exploring the Efficacy of a Virtual AI Teacher in Fine Motor Skill Acquisition

Oct 16, 2023

Abstract:Motor skills, especially fine motor skills like handwriting, play an essential role in academic pursuits and everyday life. Traditional methods to teach these skills, although effective, can be time-consuming and inconsistent. With the rise of advanced technologies like robotics and artificial intelligence, there is increasing interest in automating such teaching processes using these technologies, via human-robot and human-computer interactions. In this study, we examine the potential of a virtual AI teacher in emulating the techniques of human educators for motor skill acquisition. We introduce an AI teacher model that captures the distinct characteristics of human instructors. Using a Reinforcement Learning environment tailored to mimic teacher-learner interactions, we tested our AI model against four guiding hypotheses, emphasizing improved learner performance, enhanced rate of skill acquisition, and reduced variability in learning outcomes. Our findings, validated on synthetic learners, revealed significant improvements across all tested hypotheses. Notably, our model showcased robustness across different learners and settings and demonstrated adaptability to handwriting. This research underscores the potential of integrating Reinforcement Learning and Imitation Learning models with robotics in revolutionizing the teaching of critical motor skills.

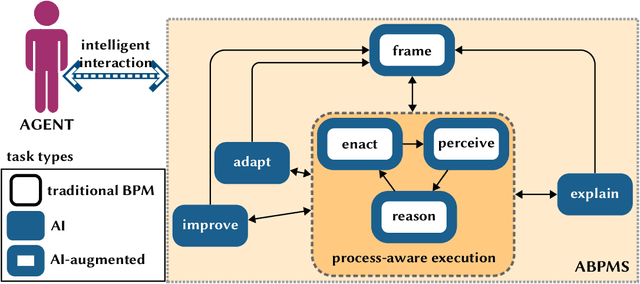

Augmented Business Process Management Systems: A Research Manifesto

Feb 03, 2022

Abstract:Augmented Business Process Management Systems (ABPMSs) are an emerging class of process-aware information systems that draws upon trustworthy AI technology. An ABPMS enhances the execution of business processes with the aim of making these processes more adaptable, proactive, explainable, and context-sensitive. This manifesto presents a vision for ABPMSs and discusses research challenges that need to be surmounted to realize this vision. To this end, we define the concept of ABPMS, we outline the lifecycle of processes within an ABPMS, we discuss core characteristics of an ABPMS, and we derive a set of challenges to realize systems with these characteristics.

Expecting the Unexpected: Developing Autonomous-System Design Principles for Reacting to Unpredicted Events and Conditions

Jan 25, 2020

Abstract:When developing autonomous systems, engineers and other stakeholders make great efforts to prepare the system for all foreseeable events and conditions. However, these systems are still bound to encounter events and conditions that were not considered at design time. For reasons like safety, cost, or ethics, it is often highly desired that these new situations be handled correctly upon first encounter. In this paper we first justify our position that there will always exist unpredicted events and conditions, driven, among other factors, by: new inventions in the real world; the diversity of world-wide system deployments and uses; and the non-negligible probability that multiple seemingly unlikely events, which may be neglected at design time, will not only occur, but occur together. We then argue that despite this unpredictability property, handling these events and conditions is indeed possible. Hence, we offer and exemplify design principles that, when applied in advance, can enable systems to deal, in the future, with unpredicted circumstances. We conclude with a discussion of how this work and a broader theoretical study of the unexpected can contribute toward a foundation of engineering principles for developing trustworthy next-generation autonomous systems.
