Linghao Sun

SuperAD: A Training-free Anomaly Classification and Segmentation Method for CVPR 2025 VAND 3.0 Workshop Challenge Track 1: Adapt & Detect

May 26, 2025

Abstract: In this technical report, we present our solution to the CVPR 2025 Visual Anomaly and Novelty Detection (VAND) 3.0 Workshop Challenge Track 1: Adapt & Detect: Robust Anomaly Detection in Real-World Applications. In real-world industrial anomaly detection, it is crucial to accurately identify anomalies with physical complexity, such as transparent or reflective surfaces, occlusions, and low-contrast contaminations. The recently proposed MVTec AD 2 dataset significantly narrows the gap between publicly available benchmarks and anomalies found in real-world industrial environments. To address the challenges posed by this dataset, such as complex and varying lighting conditions and real anomalies with large scale differences, we propose SuperAD, a fully training-free anomaly detection and segmentation method based on feature extraction with the DINOv2 model. Our method carefully selects a small number of normal reference images and constructs a memory bank by leveraging the strong representational power of DINOv2. Anomalies are then segmented by performing nearest-neighbor matching between test image features and the memory bank. Our method achieves competitive results on both test sets of the MVTec AD 2 dataset.
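The memory-bank and nearest-neighbor matching idea described in the abstract can be illustrated with a minimal sketch. This is not the authors' implementation: random arrays stand in for DINOv2 patch features, cosine distance is an assumed choice of metric, the reference-image selection step is not shown, and the names `build_memory_bank` and `patch_anomaly_scores` are hypothetical.

```python
import numpy as np


def l2_normalize(x, eps=1e-12):
    """Scale each feature vector to (approximately) unit length."""
    return x / (np.linalg.norm(x, axis=-1, keepdims=True) + eps)


def build_memory_bank(reference_features):
    """Stack patch features of normal reference images.

    reference_features: list of arrays, each of shape (num_patches, dim).
    Returns one normalized array of shape (total_patches, dim).
    """
    return l2_normalize(np.concatenate(reference_features, axis=0))


def patch_anomaly_scores(test_features, memory_bank):
    """Cosine distance from each test patch to its nearest memory-bank entry."""
    similarities = l2_normalize(test_features) @ memory_bank.T
    return 1.0 - similarities.max(axis=1)


# Stand-in data: in practice these would be patch features from a
# pretrained backbone such as DINOv2 (assumption: a 4x4 patch grid, dim 8).
rng = np.random.default_rng(0)
grid, dim = 4, 8
refs = [rng.normal(size=(grid * grid, dim)) for _ in range(3)]
bank = build_memory_bank(refs)

# Test image: a copy of a normal image with one patch made "anomalous".
test = refs[0].copy()
test[5] = -test[5]

scores = patch_anomaly_scores(test, bank)
score_map = scores.reshape(grid, grid)  # coarse segmentation map
image_score = float(score_map.max())    # image-level anomaly score
```

In this toy setup, unmodified patches find an exact match in the memory bank and score close to zero, while the altered patch scores highest. A real pipeline would typically upsample the patch-level map to the input resolution and threshold it to obtain a segmentation mask.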

Back Attention Knowledge Transfer for Low-resource Named Entity Recognition

Jun 04, 2019

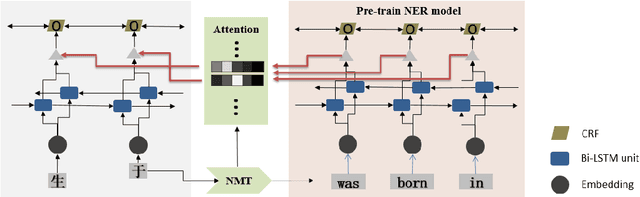

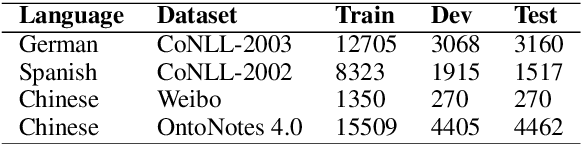

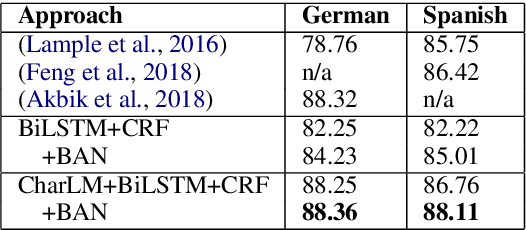

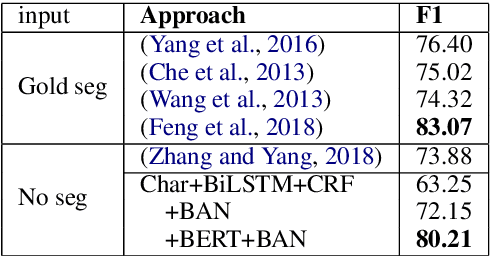

Abstract: In recent years, great success has been achieved in the field of natural language processing (NLP), thanks in part to the considerable amount of annotated resources. For named entity recognition (NER), most languages do not have such an abundance of labeled data, so performance on those languages is comparatively lower. To improve performance, we propose a general approach called Back Attention Network (BAN). BAN uses a translation system to translate sentences from other languages into English and uses a pre-trained English NER model to obtain task-specific information. BAN then applies a new mechanism, named back attention knowledge transfer, to improve the semantic representation, which aids in generating the result. Experiments on datasets in three different languages indicate that our approach outperforms other state-of-the-art methods.
