Likun Shi

CrossVid: A Comprehensive Benchmark for Evaluating Cross-Video Reasoning in Multimodal Large Language Models

Nov 15, 2025

Abstract:Cross-Video Reasoning (CVR) presents a significant challenge in video understanding, which requires simultaneous understanding of multiple videos to aggregate and compare information across groups of videos. Most existing video understanding benchmarks focus on single-video analysis, failing to assess the ability of multimodal large language models (MLLMs) to simultaneously reason over various videos. Recent benchmarks evaluate MLLMs' capabilities on multi-view videos that capture different perspectives of the same scene. However, their limited tasks hinder a thorough assessment of MLLMs in diverse real-world CVR scenarios. To this end, we introduce CrossVid, the first benchmark designed to comprehensively evaluate MLLMs' spatial-temporal reasoning ability in cross-video contexts. Firstly, CrossVid encompasses a wide spectrum of hierarchical tasks, comprising four high-level dimensions and ten specific tasks, thereby closely reflecting the complex and varied nature of real-world video understanding. Secondly, CrossVid provides 5,331 videos, along with 9,015 challenging question-answering pairs, spanning single-choice, multiple-choice, and open-ended question formats. Through extensive experiments on various open-source and closed-source MLLMs, we observe that Gemini-2.5-Pro performs best on CrossVid, achieving an average accuracy of 50.4%. Notably, our in-depth case study demonstrates that most current MLLMs struggle with CVR tasks, primarily due to their inability to integrate or compare evidence distributed across multiple videos for reasoning. These insights highlight the potential of CrossVid to guide future advancements in enhancing MLLMs' CVR capabilities.

Tensor Oriented No-Reference Light Field Image Quality Assessment

Sep 05, 2019

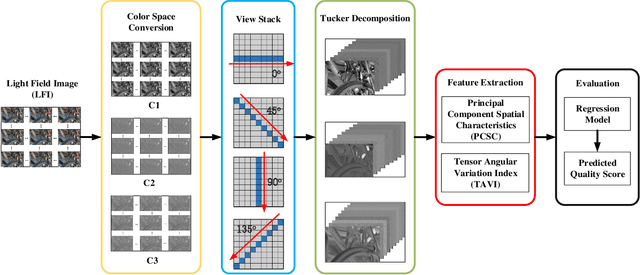

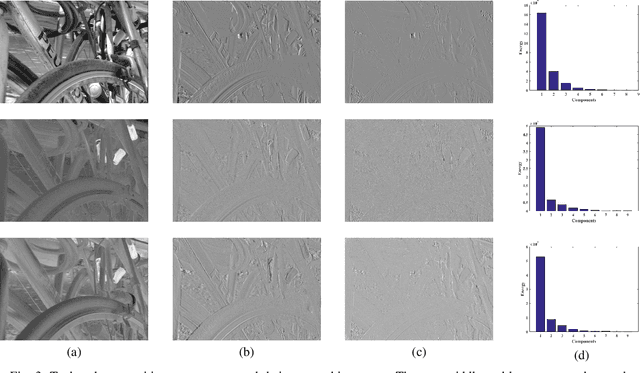

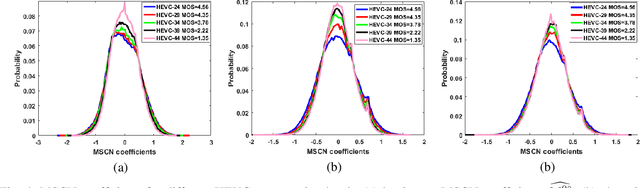

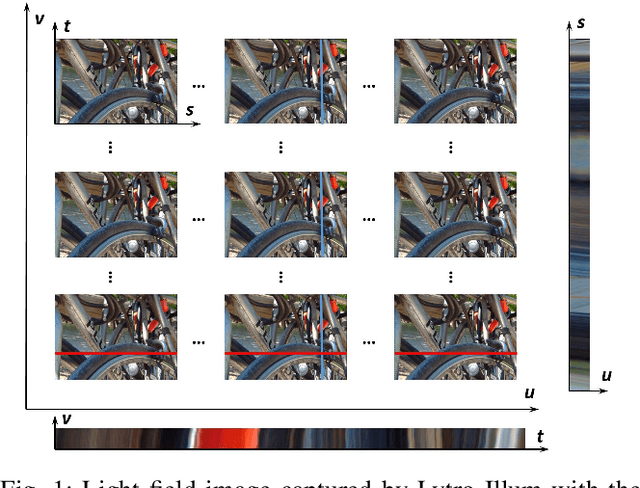

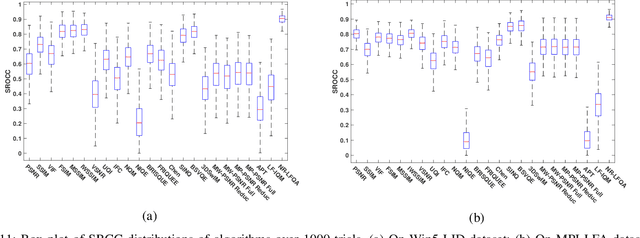

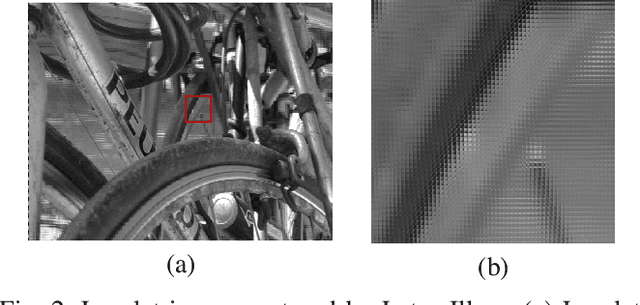

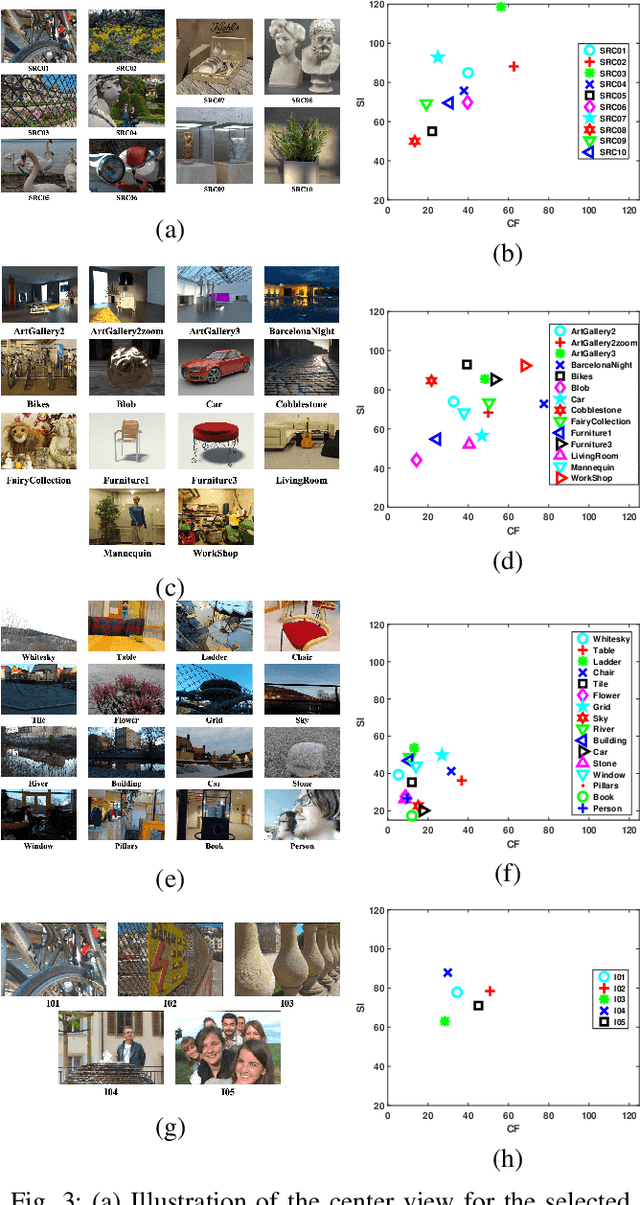

Abstract:Light field image (LFI) quality assessment is becoming increasingly important because it helps guide the acquisition, processing and application of immersive media. However, due to the inherent high-dimensional characteristics of LFI, LFI quality assessment becomes a multi-dimensional problem that requires consideration of the quality degradation in both spatial and angular dimensions. Therefore, we propose a novel Tensor oriented No-reference Light Field image Quality evaluator (Tensor-NLFQ) based on tensor theory. Specifically, since the LFI is regarded as a low-rank 4D tensor, the principal components of four oriented sub-aperture view stacks are obtained via Tucker decomposition. Then, the Principal Component Spatial Characteristic (PCSC) is designed to measure the spatial-dimensional quality of LFI considering its global naturalness and local frequency properties. Finally, the Tensor Angular Variation Index (TAVI) is proposed to measure angular consistency quality by analyzing the structural similarity distribution between the first principal component and each view in the view stack. Extensive experimental results on four publicly available LFI quality databases demonstrate that the proposed Tensor-NLFQ model outperforms state-of-the-art 2D, 3D, multi-view, and LFI quality assessment algorithms.

No-Reference Light Field Image Quality Assessment Based on Spatial-Angular Measurement

Aug 17, 2019

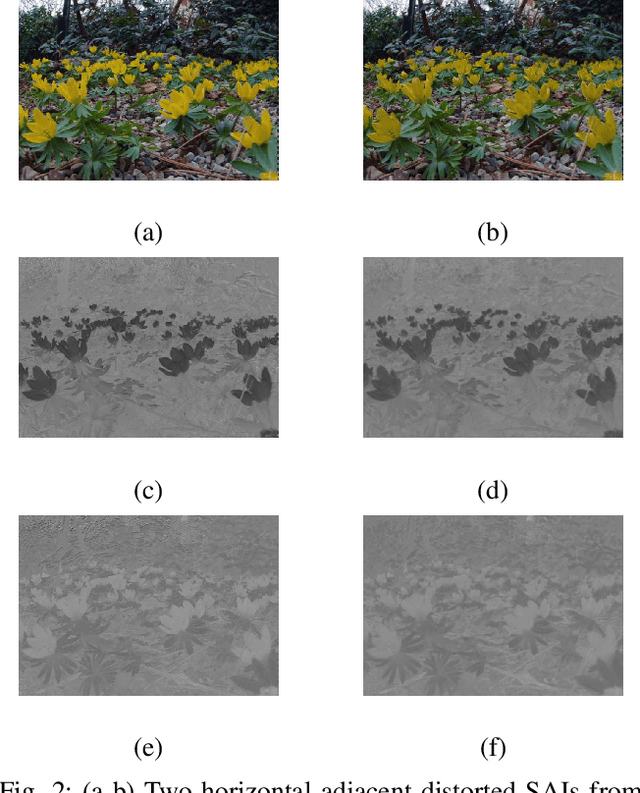

Abstract:Light field image quality assessment (LFI-QA) is a significant and challenging research problem. It helps to better guide light field acquisition, processing and applications. However, only a few objective models have been proposed, and none of them fully considers the intrinsic factors affecting LFI quality. In this paper, we propose a No-Reference Light Field image Quality Assessment (NR-LFQA) scheme, where the main idea is to quantify the LFI quality degradation by evaluating the spatial quality and angular consistency. We first measure the spatial quality deterioration by capturing the naturalness distribution of the light field cyclopean image array, which is formed when a human observes the LFI. Then, as a transformed representation of the LFI, the Epipolar Plane Image (EPI) contains the slopes of lines and carries the angular information. Therefore, the EPI is utilized to extract global and local features from the LFI to measure angular consistency degradation. Specifically, the distribution of the gradient direction map of the EPI is proposed to measure the global angular consistency distortion in the LFI. We further propose the weighted local binary pattern to capture the characteristics of local angular consistency degradation. Extensive experimental results on four publicly available LFI quality datasets demonstrate that the proposed method outperforms state-of-the-art 2D, 3D, multi-view, and LFI quality assessment algorithms.
