Liheng Bian

Agile wide-field imaging with selective high resolution

Jun 11, 2021

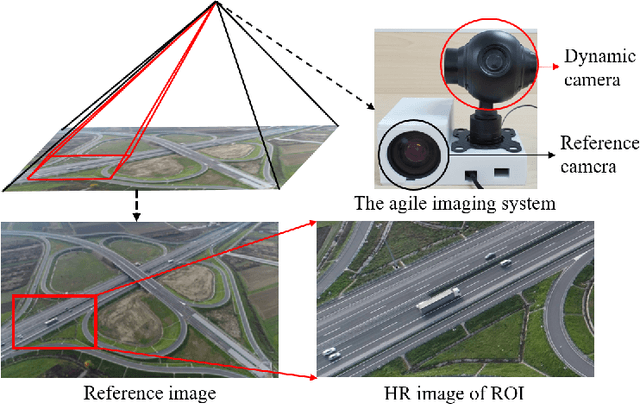

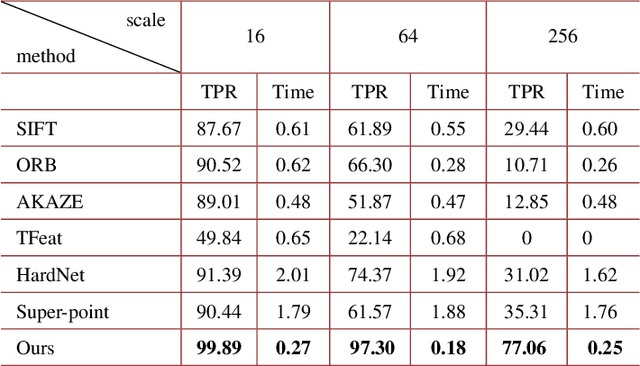

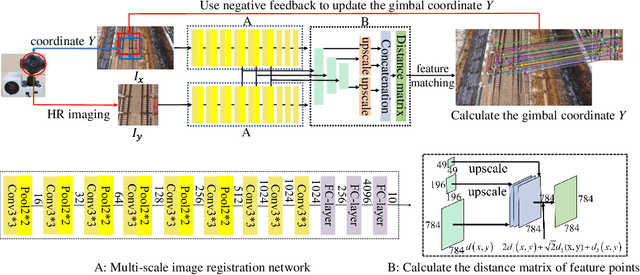

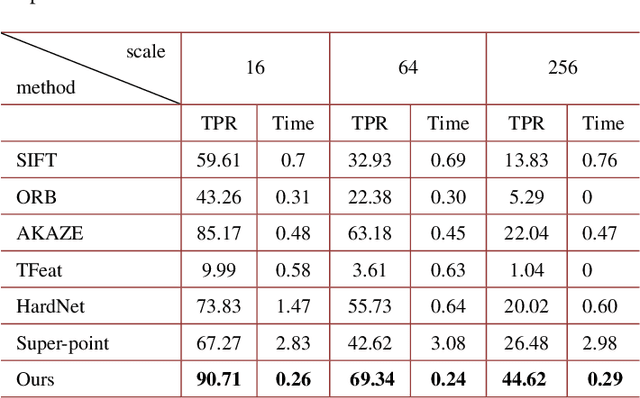

Abstract: Wide-field and high-resolution (HR) imaging is essential for various applications such as aviation reconnaissance, topographic mapping and safety monitoring. Existing techniques require a large-scale detector array to capture HR images of the whole field, resulting in high complexity and heavy cost. In this work, we report an agile wide-field imaging framework with selective high resolution that requires only two detectors. It builds on the statistical sparsity prior of natural scenes: important targets occupy only small regions of interest (ROIs), instead of the whole field. Under this assumption, we use a short-focal camera to image the wide field at a certain low resolution, and use a long-focal camera to acquire HR images of the ROI. To automatically locate the ROI in the wide field in real time, we propose an efficient deep-learning based multiscale registration method that is robust and blind to the large setting differences (focal length, white balance, etc.) between the two cameras. Using the registered location, the long-focal camera mounted on a gimbal enables real-time tracking of the ROI for continuous HR imaging. We demonstrated the imaging framework by building a proof-of-concept setup weighing only 1181 g, and mounted it on an unmanned aerial vehicle for air-to-ground monitoring. Experiments show that the setup maintains a 120$^{\circ}$ wide field-of-view (FOV) with a selective 0.45 mrad instantaneous FOV.
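As a rough illustration of why selective high resolution saves hardware, the sketch below estimates how many pixels a single detector would need along one axis to cover the full 120$^{\circ}$ field at 0.45 mrad per pixel. It assumes uniform angular sampling, which a real wide-angle lens only approximates. The function names and the 100 m range are hypothetical and not taken from the paper.

```python
import math


def pixels_across(fov_deg, ifov_mrad):
    """Pixels needed along one axis, assuming uniform angular sampling."""
    return math.radians(fov_deg) / (ifov_mrad * 1e-3)


def ground_sample_distance(range_m, ifov_mrad):
    """Small-angle footprint of one pixel at the given range, in metres."""
    return range_m * ifov_mrad * 1e-3


# Figures quoted in the abstract: 120 degree FOV, 0.45 mrad instantaneous FOV.
needed = pixels_across(120.0, 0.45)

# Hypothetical 100 m range, chosen only for illustration.
footprint = ground_sample_distance(100.0, 0.45)

print(f"pixels along one axis: {needed:.0f}")
print(f"footprint at 100 m: {footprint * 100:.1f} cm")
```

Under these assumptions a single detector would need several thousand pixels along each axis, whereas the two-camera design spends the fine sampling only on the ROI.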

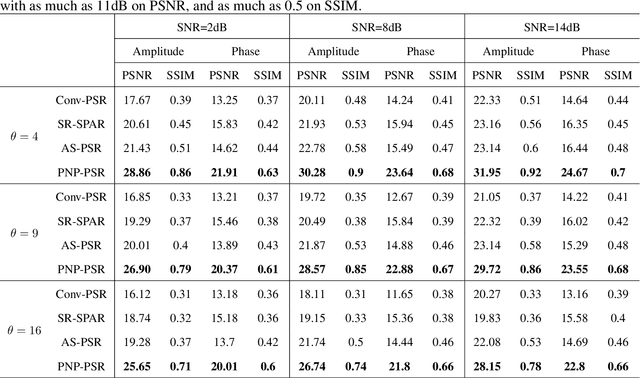

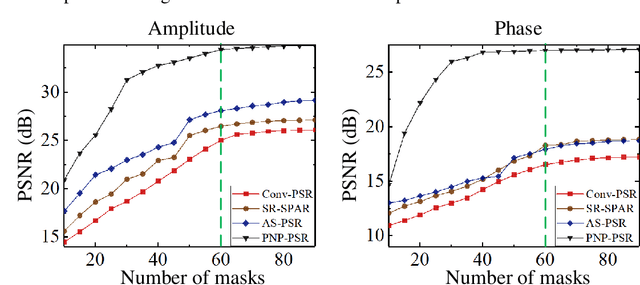

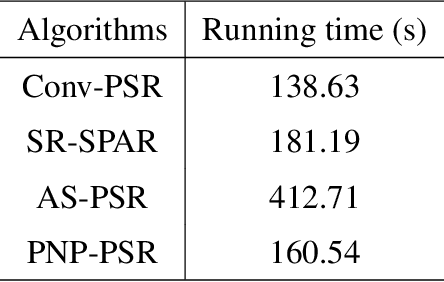

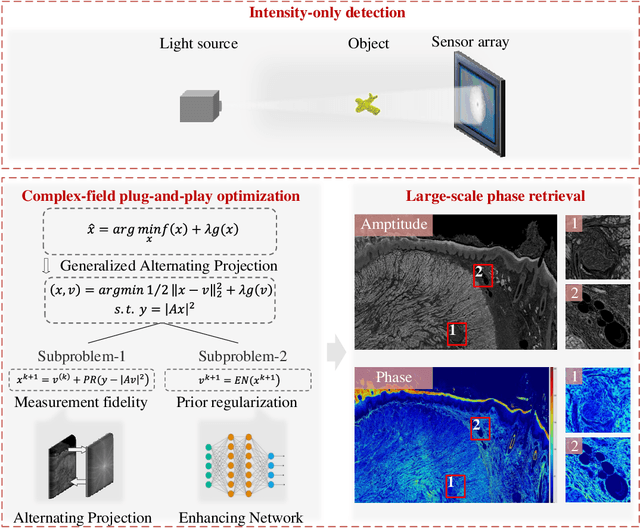

Plug-and-play optimization for pixel super-resolution phase retrieval

May 31, 2021

Abstract: To increase the signal-to-noise ratio of measurements, most imaging detectors sacrifice resolution by increasing pixel size within a confined area. Although the pixel super-resolution (PSR) technique enables resolution enhancement in applications such as digital holographic imaging, it suffers from unsatisfactory reconstruction quality. In this work, we report a high-fidelity plug-and-play optimization method for PSR phase retrieval, termed PNP-PSR. It decomposes PSR reconstruction into independent sub-problems based on the generalized alternating projection framework. An alternating projection operator and an enhancing neural network are derived to tackle the measurement fidelity and statistical prior regularization, respectively. In this way, PNP-PSR combines the advantages of the individual operators, achieving both high efficiency and noise robustness. We compare PNP-PSR with existing PSR phase retrieval algorithms in a series of simulations and experiments, and PNP-PSR outperforms them by as much as 11 dB in PSNR. The enhanced imaging fidelity enables one-order-of-magnitude higher cell counting precision.
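A minimal sketch of the measurement-fidelity idea, assuming the common PSR model in which each large detector pixel sums the intensity of an s-by-s block of fine pixels. The function names and the block-rescaling update are illustrative assumptions, not the operator derived in the paper, and the enhancing network is omitted.

```python
def bin_pixels(hr, s):
    """Sum each s-by-s block of a 2D intensity grid (list of lists)."""
    rows, cols = len(hr) // s, len(hr[0]) // s
    return [[sum(hr[r * s + i][c * s + j] for i in range(s) for j in range(s))
             for c in range(cols)] for r in range(rows)]


def enforce_measurement(hr, measured, s):
    """Rescale every block of the estimate so its sum matches the measured pixel."""
    current = bin_pixels(hr, s)
    out = [row[:] for row in hr]
    for r, row in enumerate(measured):
        for c, target in enumerate(row):
            total = current[r][c]
            for i in range(s):
                for j in range(s):
                    y, x = r * s + i, c * s + j
                    if total > 0:
                        out[y][x] = hr[y][x] * target / total
                    else:
                        # Empty block in the estimate: spread the target evenly.
                        out[y][x] = target / (s * s)
    return out


truth = [[1.0, 2.0, 0.0, 0.0],
         [3.0, 4.0, 0.0, 0.0],
         [5.0, 5.0, 1.0, 0.0],
         [5.0, 5.0, 0.0, 3.0]]
measured = bin_pixels(truth, 2)            # what the coarse detector records
estimate = [[1.0] * 4 for _ in range(4)]   # flat initial guess
updated = enforce_measurement(estimate, measured, 2)
```

After the update, binning `updated` reproduces `measured`, but the detail inside each block is still undetermined. That gap is what the prior (the enhancing network in the abstract) is meant to fill.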

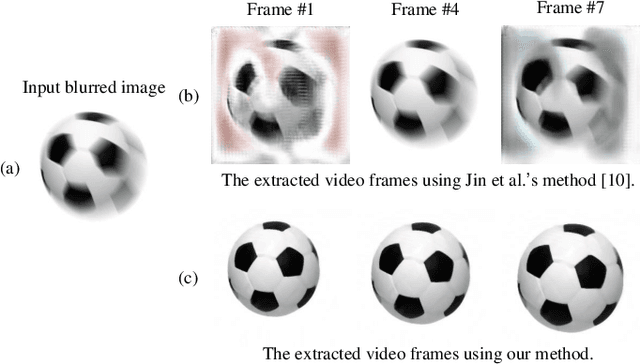

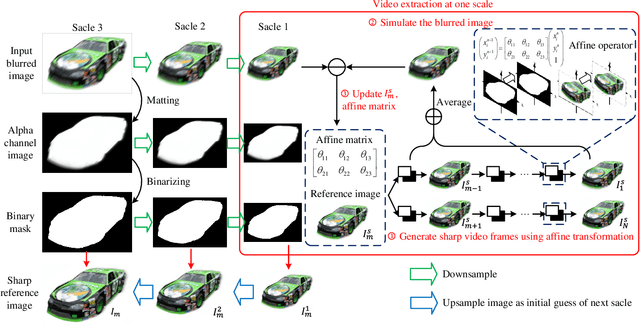

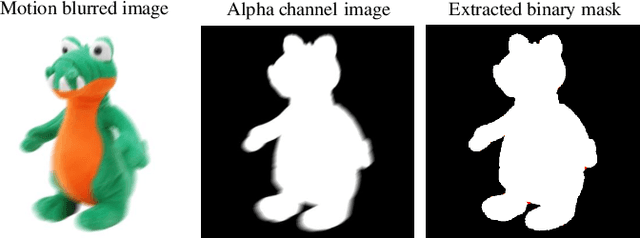

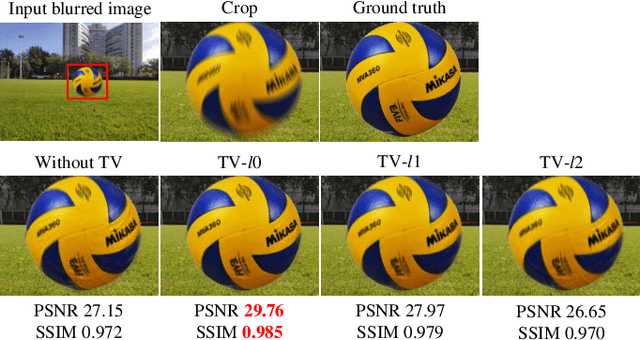

Affine-modeled video extraction from a single motion blurred image

Apr 08, 2021

Abstract: A motion-blurred image is the temporal average of multiple sharp frames over the exposure time. Recovering these sharp video frames from a single blurred image is nontrivial, due not only to its strong ill-posedness, but also to the various types of complex motion in reality such as rotation and motion in depth. In this work, we report a generalized video extraction method using affine motion modeling, which can tackle multiple types of complex motion and their mixtures. In its workflow, the moving objects are first segmented in the alpha channel. This allows separate recovery of different objects with different motion. Then, we reduce the variable space by modeling each video clip as a series of affine transformations of a reference frame, and introduce $\ell_0$-norm total variation regularization to attenuate ringing artifacts. Differentiable affine operators are employed to realize gradient-descent optimization of the affine model, which follows a novel coarse-to-fine strategy to further reduce artifacts. As a result, both the affine parameters and the sharp reference image are retrieved. They are finally input into a stepwise affine transformation to recover the sharp video frames. The stepwise retrieval naturally bypasses the frame-order ambiguity. Experiments on both public datasets and real captured data validate the state-of-the-art performance of the reported technique.
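The forward model in the first sentence of the abstract, and the frame-order ambiguity mentioned near its end, can be sketched as follows. The example uses pure horizontal translation, which is only a special case of an affine transformation, and all names are hypothetical.

```python
def shift_right(frame, k):
    """Shift every row of a 2D list k pixels to the right, zero-filling."""
    width = len(frame[0])
    return [[row[x - k] if 0 <= x - k < width else 0.0 for x in range(width)]
            for row in frame]


def temporal_average(frames):
    """Blurred image as the per-pixel mean of the sharp frames."""
    n = len(frames)
    height, width = len(frames[0]), len(frames[0][0])
    return [[sum(f[y][x] for f in frames) / n for x in range(width)]
            for y in range(height)]


# Reference frame with a single bright pixel.
reference = [[0.0, 0.0, 0.0, 0.0, 0.0, 0.0],
             [0.0, 1.0, 0.0, 0.0, 0.0, 0.0],
             [0.0, 0.0, 0.0, 0.0, 0.0, 0.0]]

# Each sharp frame is a transformed copy of the reference (here: a translation).
frames = [shift_right(reference, k) for k in range(4)]

blurred = temporal_average(frames)
blurred_reversed = temporal_average(frames[::-1])
```

Here `blurred` and `blurred_reversed` are identical, so the blurred image alone cannot tell forward motion from backward motion. Parameterizing the clip by a reference frame and a few motion parameters, as the abstract describes, shrinks the unknowns from one image per frame to one image plus the transformation parameters.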

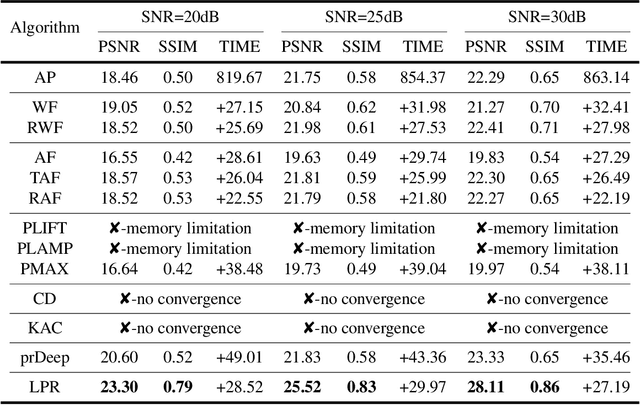

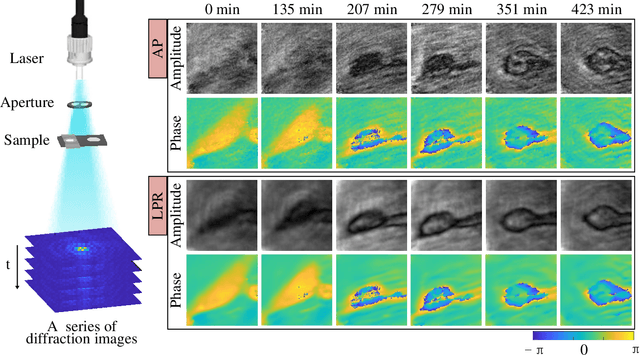

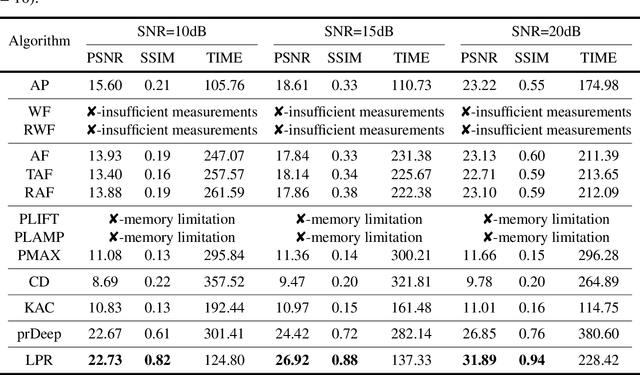

Large-scale phase retrieval

Apr 06, 2021

Abstract: High-throughput computational imaging requires efficient processing algorithms to retrieve multi-dimensional and multi-scale information. In computational phase imaging, phase retrieval (PR) is required to reconstruct both amplitude and phase in complex space from intensity-only measurements. Existing PR algorithms suffer from a tradeoff among low computational complexity, robustness to measurement noise and strong generalization across different modalities. In this work, we report an efficient large-scale phase retrieval technique termed LPR. It extends the plug-and-play generalized-alternating-projection framework from real space to nonlinear complex space. The alternating projection solver and enhancing neural network are derived to tackle the measurement formation and statistical prior regularization, respectively. This framework compensates for the shortcomings of each operator, so as to realize high-fidelity phase retrieval with low computational complexity and strong generalization. We applied the technique to a series of computational phase imaging modalities including coherent diffraction imaging, coded diffraction pattern imaging, and Fourier ptychographic microscopy. Extensive simulations and experiments validate that the technique outperforms existing PR algorithms by as much as 17 dB in signal-to-noise ratio, with more than one order of magnitude higher running efficiency. Besides, we demonstrate for the first time ultra-large-scale phase retrieval at the 8K level (7680$\times$4320 pixels) in minute-level time.
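The core step of a generic alternating projection solver for intensity-only data can be sketched as below: the estimated complex field at the detector keeps its phase, while its magnitude is replaced by the square root of the measured intensity. This is a textbook magnitude projection under assumed names, not the LPR solver itself. The propagation operator that maps the sample to the detector, and the enhancing network, are both omitted.

```python
import cmath
import math


def project_magnitude(field, intensity):
    """Keep the phase of each field value, impose the measured amplitude."""
    projected = []
    for value, measured in zip(field, intensity):
        amplitude = math.sqrt(measured)
        if abs(value) == 0:
            # Phase is undefined for a zero value; zero phase is an arbitrary choice.
            projected.append(complex(amplitude, 0.0))
        else:
            projected.append(amplitude * value / abs(value))
    return projected


estimate = [1 + 1j, -2j, 0j, 3 + 0j]   # current guess of the field at the detector
intensity = [4.0, 1.0, 9.0, 0.25]      # intensity-only measurements
updated = project_magnitude(estimate, intensity)

for before, after in zip(estimate, updated):
    print(f"|{abs(after):.2f}| at phase {cmath.phase(after):+.3f} rad (was {before})")
```

In a full reconstruction this step would alternate with propagation back to the sample plane and with the prior step.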

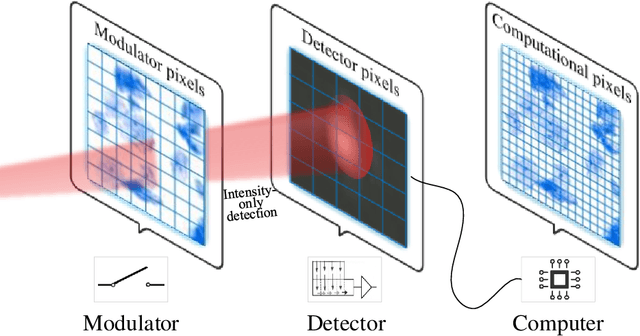

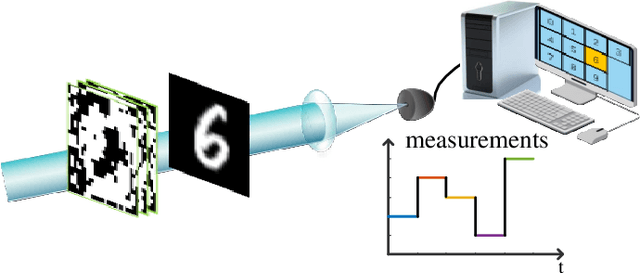

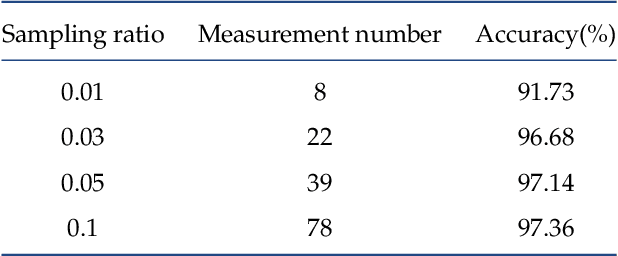

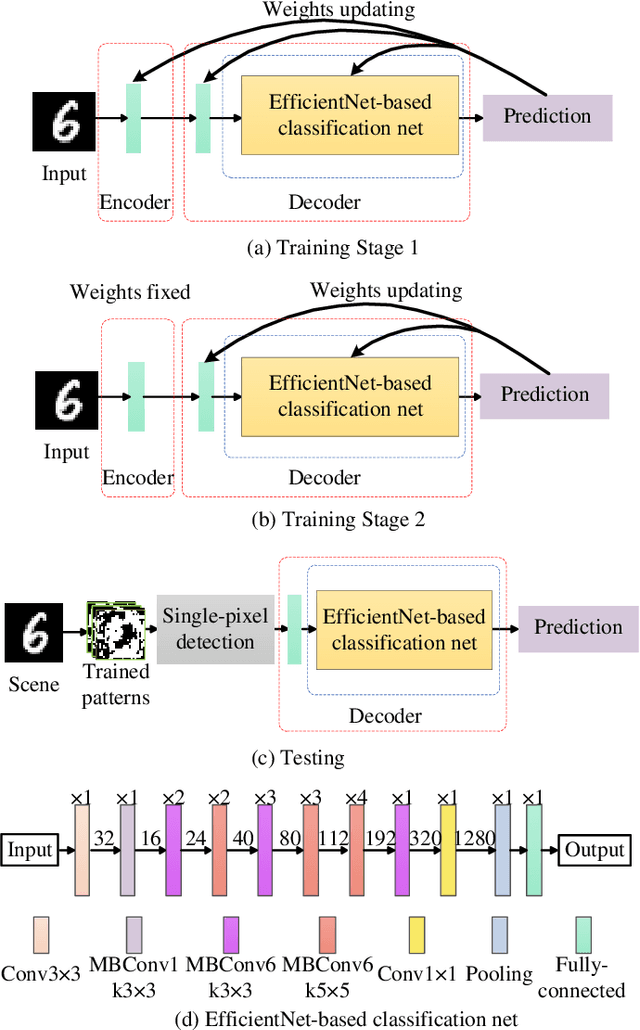

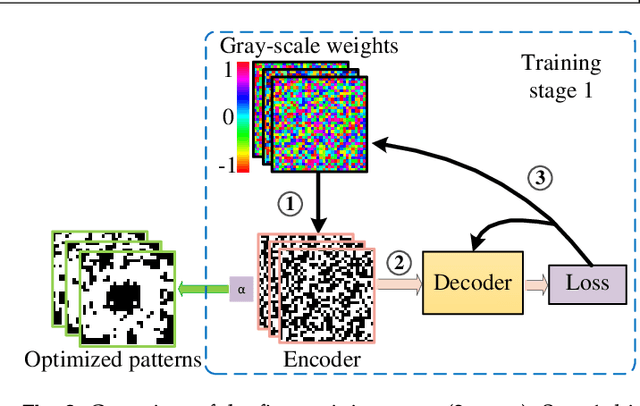

Non-imaging single-pixel sensing with optimized binary modulation

Sep 27, 2019

Abstract: Conventional high-level sensing techniques require high-fidelity images as input to extract target features, and these images are produced by either complex imaging hardware or high-complexity reconstruction algorithms. In this letter, we propose single-pixel sensing (SPS), which performs high-level sensing directly from the coupled measurements of a single-pixel detector, without the conventional image acquisition and reconstruction process. The technique consists of three steps: binary light modulation that can be physically implemented at $\sim$22 kHz, single-pixel coupled detection with a wide working spectrum and high signal-to-noise ratio, and end-to-end deep-learning based sensing that reduces both hardware and software complexity. Besides, the binary modulation is trained and optimized together with the sensing network, which minimizes the number of required measurements while maximizing sensing accuracy. The effectiveness of SPS is demonstrated on the classification task of the handwritten MNIST dataset, achieving 96.68% classification accuracy at $\sim$1 kHz. The reported single-pixel sensing technique is a novel framework for highly efficient machine intelligence.

Experimental comparison of single-pixel imaging algorithms

Oct 24, 2017

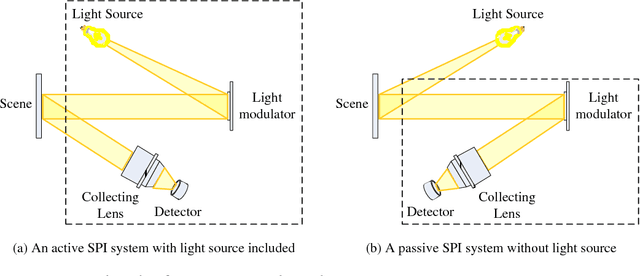

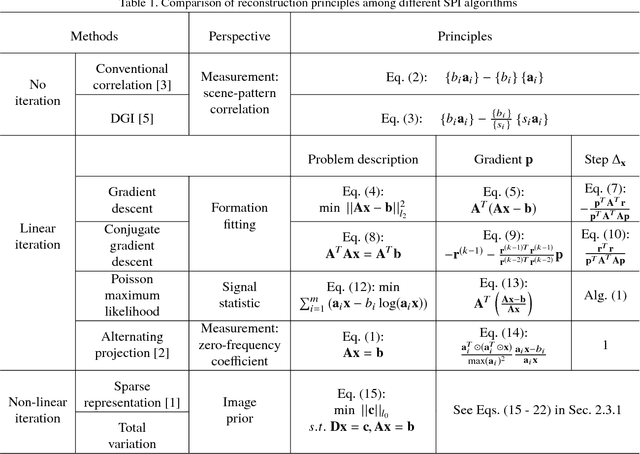

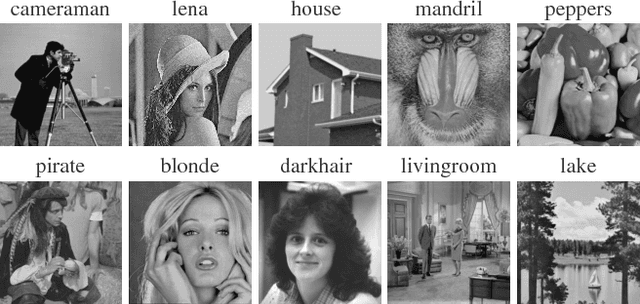

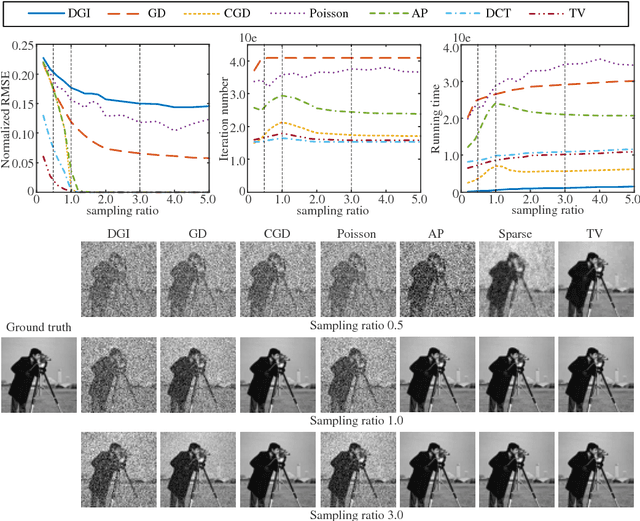

Abstract: Single-pixel imaging (SPI) is a novel technique that captures 2D images using a photodiode, instead of conventional 2D array sensors. SPI offers high signal-to-noise ratio, wide spectrum range, low cost, and robustness to light scattering. Various algorithms have been proposed for SPI reconstruction, including the linear correlation methods, the alternating projection method (AP), and the compressive sensing based methods. However, there has been no comprehensive review discussing their respective advantages, which is important for SPI's further applications and development. In this paper, we reviewed and compared these algorithms in a unified reconstruction framework. Besides, we proposed two other SPI algorithms: a conjugate gradient descent based method (CGD) and a Poisson maximum likelihood based method. Both simulations and experiments validate the following conclusions: to obtain comparable reconstruction accuracy, the compressive sensing based total variation regularization method (TV) requires the fewest measurements and consumes the least running time for small-scale reconstruction; the CGD and AP methods run fastest in large-scale cases; the TV and AP methods are the most robust to measurement noise. In short, there are trade-offs between capture efficiency, computational complexity and robustness to noise among different SPI algorithms. We have released our source code for non-commercial use.
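To make the linear correlation idea concrete, here is a minimal sketch in which the scene is modulated by a complete set of Hadamard patterns, each measurement is a single number, and the image is recovered by correlating the measurements with the patterns. The choice of Hadamard patterns, the noise-free setting and all names are assumptions for illustration. Patterns with entries of -1 would need a differential measurement in practice.

```python
def hadamard(n):
    """Sylvester construction of an n-by-n Hadamard matrix; n must be a power of two."""
    h = [[1]]
    while len(h) < n:
        h = [row + row for row in h] + [row + [-v for v in row] for row in h]
    return h


def measure(scene, patterns):
    """One single-pixel reading per pattern: the inner product with the scene."""
    return [sum(p * s for p, s in zip(pattern, scene)) for pattern in patterns]


def correlate(measurements, patterns):
    """Linear correlation reconstruction: measurement-weighted average of patterns."""
    pixels = len(patterns[0])
    return [sum(m * pattern[j] for m, pattern in zip(measurements, patterns))
            / len(patterns)
            for j in range(pixels)]


scene = [0, 3, 1, 0, 0, 2, 5, 0]   # a flattened 8-pixel scene
patterns = hadamard(8)
measurements = measure(scene, patterns)
recovered = correlate(measurements, patterns)
```

With a complete orthogonal pattern set and no noise the recovery is exact. The trade-offs discussed in the abstract arise once fewer patterns than pixels are used or noise is present.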

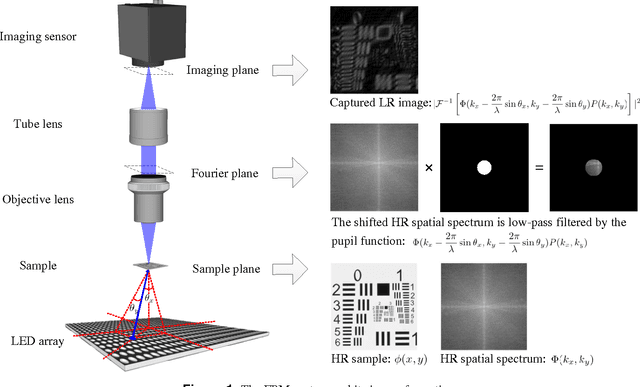

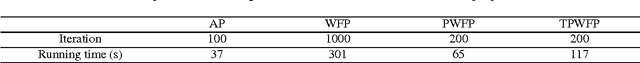

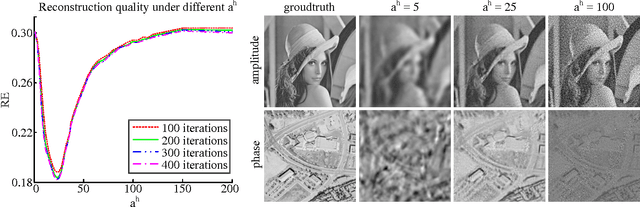

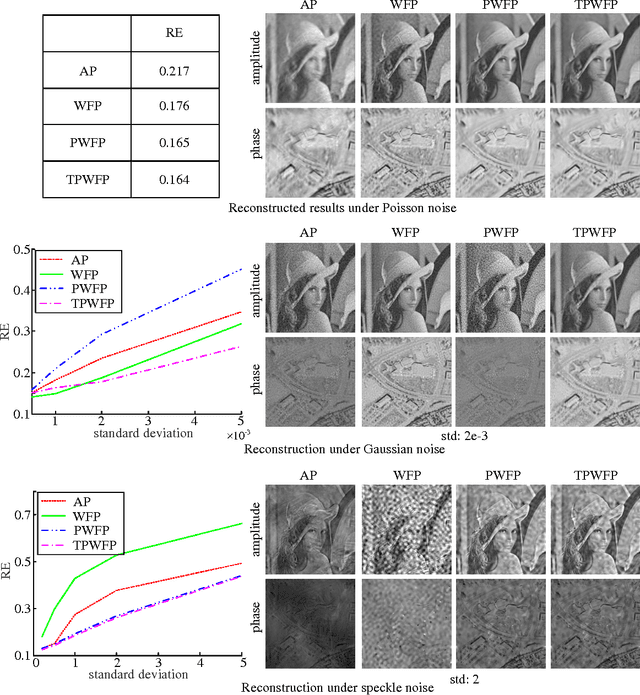

Fourier ptychographic reconstruction using Poisson maximum likelihood and truncated Wirtinger gradient

Mar 01, 2016

Abstract: Fourier ptychographic microscopy (FPM) is a novel computational coherent imaging technique for high space-bandwidth product imaging. Mathematically, Fourier ptychographic (FP) reconstruction can be implemented as a phase retrieval optimization process, in which we only obtain low-resolution intensity images corresponding to the sub-bands of the sample's high-resolution (HR) spatial spectrum, and aim to retrieve the complex HR spectrum. In real setups, the measurements always suffer from various degradations such as Gaussian noise, Poisson noise, speckle noise and pupil location error, which can severely degrade the reconstruction. To efficiently address these degradations, we propose a novel FP reconstruction method under a gradient descent optimization framework in this paper. The technique utilizes Poisson maximum likelihood for better signal modeling, and truncated Wirtinger gradient for error removal. Results on both simulated data and real data captured using our laser FPM setup show that the proposed method outperforms other state-of-the-art algorithms. Also, we have released our source code for non-commercial use.
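The two ingredients named in the abstract can be illustrated on plain intensity values. The sketch below evaluates the Poisson negative log-likelihood of predicted intensities given measured counts, then drops measurements whose residual is unusually large before they would enter a gradient step. The truncation rule and its threshold are simplified stand-ins chosen for illustration, not the rule used in the paper, and all names are hypothetical.

```python
import math


def poisson_nll(predicted, counts):
    """Negative Poisson log-likelihood, dropping the constant log(counts!) term."""
    return sum(p - c * math.log(p) for p, c in zip(predicted, counts))


def truncate(predicted, counts, factor=3.0):
    """Indices whose residual is within `factor` times the mean absolute residual."""
    residuals = [abs(c - p) for p, c in zip(predicted, counts)]
    limit = factor * sum(residuals) / len(residuals)
    return [i for i, r in enumerate(residuals) if r <= limit]


counts = [12.0, 7.0, 30.0, 9.0, 400.0]     # last value mimics a corrupted pixel
predicted = [11.0, 8.0, 29.0, 10.0, 10.0]  # intensities from the current estimate

kept = truncate(predicted, counts)
loss_on_kept = poisson_nll([predicted[i] for i in kept],
                           [counts[i] for i in kept])
```

Each likelihood term is smallest when the predicted intensity equals the measured count, which is why the loss suits photon-counting noise. The truncation keeps a single outlier from dominating the update.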

Multi-frame denoising of high speed optical coherence tomography data using inter-frame and intra-frame priors

Nov 29, 2014

Abstract: Optical coherence tomography (OCT) is an important interferometric diagnostic technique which provides cross-sectional views of the subsurface microstructure of biological tissues. However, the imaging quality of high-speed OCT is limited by strong speckle noise. To address this problem, this paper proposes a multi-frame algorithmic method to denoise OCT volumes. Mathematically, we build an optimization model which forces the temporally registered frames to be low rank, and the gradient in each frame to be sparse, under logarithmic image formation and noise variance constraints. Besides, a convex optimization algorithm based on the augmented Lagrangian method is derived to solve the above model. The results reveal that our approach outperforms other methods in terms of both speckle noise suppression and preservation of crucial details.
