Lars J. Grimm

BreastSegNet: Multi-label Segmentation of Breast MRI

Jul 18, 2025

Abstract: Breast MRI provides high-resolution imaging critical for breast cancer screening and preoperative staging. However, existing segmentation methods for breast MRI remain limited in scope, often focusing on only a few anatomical structures, such as fibroglandular tissue or tumors, and do not cover the full range of tissues seen in scans. This narrows their utility for quantitative analysis. In this study, we present BreastSegNet, a multi-label segmentation algorithm for breast MRI that covers nine anatomical labels: fibroglandular tissue (FGT), vessel, muscle, bone, lesion, lymph node, heart, liver, and implant. We manually annotated a large set of 1123 MRI slices capturing these structures, with detailed review and correction from an expert radiologist. Additionally, we benchmark nine segmentation models, including U-Net, SwinUNet, UNet++, SAM, MedSAM, and nnU-Net with multiple ResNet-based encoders. Among them, nnU-Net ResEncM achieves the highest average Dice score, 0.694 across all labels. It performs especially well on heart, liver, muscle, FGT, and bone, with Dice scores exceeding 0.73 and approaching 0.90 for heart and liver. All model code and weights are publicly available, and we plan to release the data at a later date.
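The abstract reports per-label and average Dice scores. As a minimal sketch of how such a metric is commonly computed, the snippet below evaluates Dice = 2|P ∩ T| / (|P| + |T|) for each label on flattened label maps and then averages over labels. The function names, the toy label values, and the averaging convention (a plain mean over labels present in either map) are illustrative assumptions; the abstract does not state how the authors aggregate scores.

```python
def dice_per_label(pred, truth, labels):
    """Return {label: Dice score} for two equal-length flat sequences of integer labels.

    A label absent from both prediction and ground truth gets None,
    since Dice is undefined (0/0) in that case.
    """
    if len(pred) != len(truth):
        raise ValueError("pred and truth must have the same length")
    scores = {}
    for label in labels:
        pred_count = sum(1 for v in pred if v == label)
        truth_count = sum(1 for v in truth if v == label)
        overlap = sum(1 for p, t in zip(pred, truth) if p == label and t == label)
        total = pred_count + truth_count
        scores[label] = None if total == 0 else 2 * overlap / total
    return scores


def mean_dice(scores):
    """Average the defined (non-None) per-label Dice scores."""
    valid = [s for s in scores.values() if s is not None]
    return sum(valid) / len(valid) if valid else None


# Toy example (made-up values): 0 = background, 1 and 2 = two hypothetical tissue labels.
pred = [0, 1, 1, 2, 2, 0]
truth = [0, 1, 2, 2, 2, 0]
scores = dice_per_label(pred, truth, labels=[1, 2])
average = mean_dice(scores)
```

In this toy case label 1 overlaps on one of three counted voxels (Dice 2/3) and label 2 on two of five (Dice 4/5). Real evaluations would typically operate on 2D or 3D arrays and may average per case before averaging per label.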

Deep learning analysis of breast MRIs for prediction of occult invasive disease in ductal carcinoma in situ

Nov 28, 2017

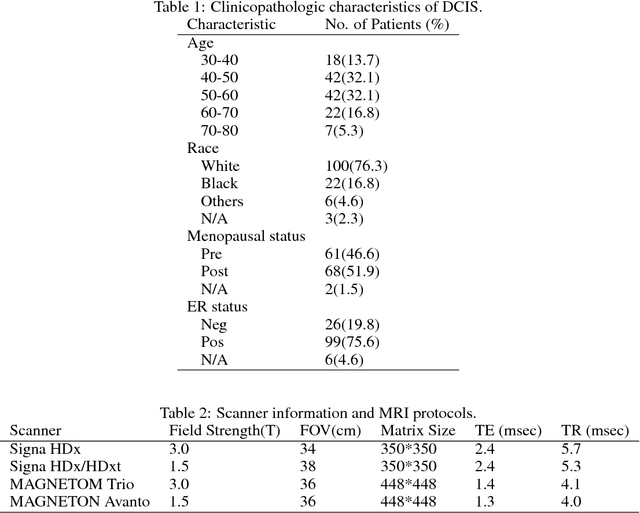

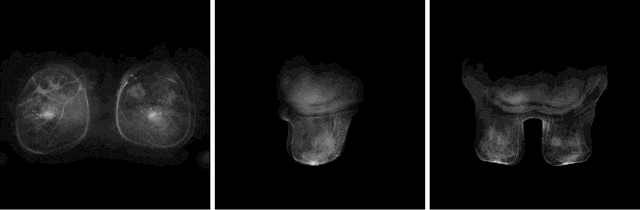

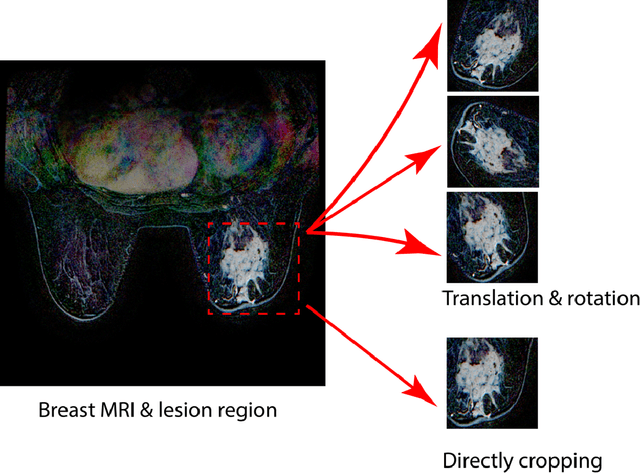

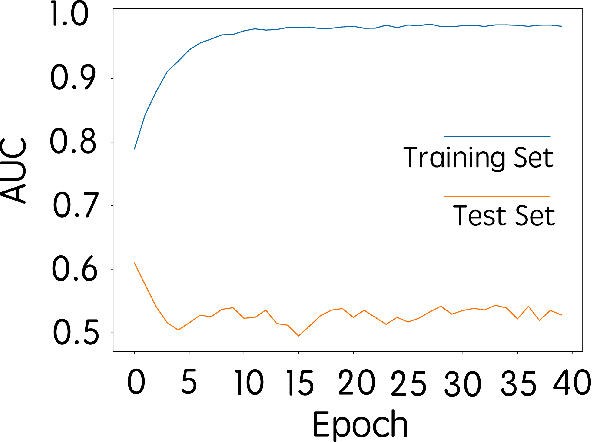

Abstract: Purpose: To determine whether deep learning-based algorithms applied to breast MR images can aid in the prediction of occult invasive disease following the diagnosis of ductal carcinoma in situ (DCIS) by core needle biopsy. Material and Methods: In this institutional review board-approved study, we analyzed dynamic contrast-enhanced fat-saturated T1-weighted MRI sequences of 131 patients at our institution with a core needle biopsy-confirmed diagnosis of DCIS. The patients had no preoperative therapy before breast MRI and no prior history of breast cancer. We explored two different deep learning approaches to predict whether there was a hidden (occult) invasive component in the analyzed tumors that was ultimately detected at surgical excision. In the first approach, we adopted the transfer learning strategy, in which a network pre-trained on a large dataset of natural images is fine-tuned with our DCIS images. Specifically, we used the GoogleNet model pre-trained on the ImageNet dataset. In the second approach, we used a pre-trained network to extract deep features, and a support vector machine (SVM) that utilizes these features to predict the upstaging of the DCIS. We used 10-fold cross validation and the area under the ROC curve (AUC) to estimate the performance of the predictive models. Results: The best classification performance was obtained using the deep features approach, with the GoogleNet model pre-trained on ImageNet as the feature extractor and a polynomial kernel SVM as the classifier (AUC = 0.70, 95% CI: 0.58-0.79). For the transfer learning-based approach, the highest AUC obtained was 0.53 (95% CI: 0.41-0.62). Conclusion: Convolutional neural networks could potentially be used to identify occult invasive disease in patients diagnosed with DCIS at the initial core needle biopsy.
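The models above are compared by area under the ROC curve. As a rough illustration of that metric only (not the authors' evaluation code), the sketch below computes AUC through its rank interpretation: the probability that a randomly chosen positive case receives a higher classifier score than a randomly chosen negative case, with ties counted as one half. The function name and the toy labels and scores are made up for the example.

```python
def roc_auc(labels, scores):
    """AUC as the fraction of (positive, negative) pairs ranked correctly.

    labels: sequence of 0/1 ground-truth values (1 = upstaged, in this context).
    scores: sequence of classifier outputs, higher meaning "more likely positive".
    Tied pairs contribute 0.5, matching the Mann-Whitney U formulation.
    """
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    if not positives or not negatives:
        raise ValueError("AUC needs at least one positive and one negative case")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(positives) * len(negatives))


# Toy data (illustrative only): three positive and two negative cases.
toy_labels = [1, 0, 1, 0, 1]
toy_scores = [0.9, 0.4, 0.6, 0.7, 0.3]
toy_auc = roc_auc(toy_labels, toy_scores)
```

Here three of the six positive-negative pairs are ordered correctly, so the toy AUC is 0.5, the value expected from chance. In a cross-validated setup such as the 10-fold scheme described above, scores for held-out cases would be collected before applying a function like this.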
