Kshitij Goel

Carnegie Mellon University

Quadrotor Navigation using Reinforcement Learning with Privileged Information

Sep 09, 2025

Abstract: This paper presents a reinforcement learning-based quadrotor navigation method that leverages efficient differentiable simulation, novel loss functions, and privileged information to navigate around large obstacles. Prior learning-based methods perform well in scenes with narrow obstacles but struggle when the goal location is blocked by large walls or terrain. In contrast, the proposed method uses time-of-arrival (ToA) maps as privileged information and a yaw alignment loss to guide the robot around large obstacles. The policy is evaluated in photo-realistic simulation environments containing large obstacles, sharp corners, and dead ends. Our approach achieves an 86% success rate and outperforms baseline strategies by 34%. We deploy the policy onboard a custom quadrotor in cluttered outdoor environments during both day and night. The policy is validated across 20 flights, covering 589 meters without collisions at speeds up to 4 m/s.
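The abstract does not say how the ToA maps are built. One common way to approximate a time-of-arrival field on an occupancy grid is a Dijkstra sweep outward from the goal that records the shortest travel time to every free cell at a constant speed. The sketch below assumes a 2D boolean grid, 8-connected moves (diagonals may cut obstacle corners), and hypothetical parameters `cell_size_m` and `speed_mps`; it illustrates the concept and is not the paper's construction, which may use fast marching or a 3D map.

```python
import heapq
import math

import numpy as np


def time_of_arrival_map(occupied, goal, cell_size_m=1.0, speed_mps=1.0):
    """Approximate time (s) to reach `goal` from every cell of a 2D grid.

    Dijkstra on an 8-connected grid, used here as a stand-in for fast marching.
    `occupied` is a 2D bool array; occupied or unreachable cells stay at inf.
    """
    rows, cols = occupied.shape
    toa = np.full((rows, cols), np.inf)
    if occupied[goal]:
        return toa
    toa[goal] = 0.0
    heap = [(0.0, goal)]
    moves = [(dr, dc) for dr in (-1, 0, 1) for dc in (-1, 0, 1) if (dr, dc) != (0, 0)]
    while heap:
        t, (r, c) = heapq.heappop(heap)
        if t > toa[r, c]:
            continue  # stale heap entry; a shorter time was already found
        for dr, dc in moves:
            nr, nc = r + dr, c + dc
            if 0 <= nr < rows and 0 <= nc < cols and not occupied[nr, nc]:
                step_s = math.hypot(dr, dc) * cell_size_m / speed_mps
                if t + step_s < toa[nr, nc]:
                    toa[nr, nc] = t + step_s
                    heapq.heappush(heap, (t + step_s, (nr, nc)))
    return toa


# A wall in column 2 (rows 1-3) sits between (2, 0) and the goal at (2, 4).
grid = np.zeros((5, 5), dtype=bool)
grid[1:4, 2] = True
toa = time_of_arrival_map(grid, goal=(2, 4))
# The straight-line time would be 4 s; the detour around the wall costs 4*sqrt(2) s.
```

Unlike Euclidean distance to the goal, this field increases behind a wall, which is the kind of signal that can steer a policy around large obstacles instead of straight into them.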

Zero-Shot Metric Depth Estimation via Monocular Visual-Inertial Rescaling for Autonomous Aerial Navigation

Sep 09, 2025

Abstract: This paper presents a methodology to predict metric depth from monocular RGB images and an inertial measurement unit (IMU). To enable collision avoidance during autonomous flight, prior works rely either on heavy sensors (e.g., LiDARs or stereo cameras) or on data-intensive, domain-specific fine-tuning of monocular metric depth estimation methods. In contrast, we propose several lightweight zero-shot strategies that rescale relative depth estimates to metric depth using the sparse 3D feature map created by a visual-inertial navigation system. These strategies are compared for accuracy in diverse simulation environments. The best-performing approach, which leverages monotonic spline fitting, is deployed in the real world on a compute-constrained quadrotor. We obtain onboard metric depth estimates at 15 Hz and demonstrate successful collision avoidance after integrating the proposed method with a motion primitives-based planner.
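The abstract's best strategy fits a monotonic spline from relative depth to metric depth at the sparse visual-inertial landmarks. As a simpler stand-in (not the paper's spline), the sketch below uses isotonic regression via the pool-adjacent-violators algorithm to get a monotone fit, then linearly interpolates between the fitted knots to rescale a dense relative-depth image. It assumes relative depth increases with metric depth (a network that outputs inverse depth would need inverting first) and that matched samples `rel_at_features` / `metric_at_features` are already available; both names are hypothetical.

```python
import numpy as np


def isotonic_fit(x, y):
    """Pool-adjacent-violators: a non-decreasing fit of y against x.

    Returns (x_knots, y_knots): x_knots in sorted order, y_knots non-decreasing.
    """
    order = np.argsort(x)
    xs = np.asarray(x, dtype=float)[order]
    ys = np.asarray(y, dtype=float)[order]
    # Each block is [sum_y, count, sum_x]; merge neighbors that violate monotonicity.
    blocks = []
    for xi, yi in zip(xs, ys):
        blocks.append([yi, 1, xi])
        while len(blocks) > 1 and blocks[-2][0] / blocks[-2][1] > blocks[-1][0] / blocks[-1][1]:
            s, n, sx = blocks.pop()
            blocks[-1][0] += s
            blocks[-1][1] += n
            blocks[-1][2] += sx
    x_knots = np.array([b[2] / b[1] for b in blocks])
    y_knots = np.array([b[0] / b[1] for b in blocks])
    return x_knots, y_knots


def rescale_depth(rel_depth_img, rel_at_features, metric_at_features):
    """Map a dense relative-depth image to metric depth with a monotone fit."""
    xk, yk = isotonic_fit(rel_at_features, metric_at_features)
    # np.interp clamps queries outside [xk[0], xk[-1]] to the end values.
    return np.interp(rel_depth_img, xk, yk)


xk, yk = isotonic_fit([0.1, 0.2, 0.3, 0.4], [1.0, 3.0, 2.0, 4.0])
# The 3.0 -> 2.0 violation is pooled: xk ~ [0.1, 0.25, 0.4], yk ~ [1.0, 2.5, 4.0]
```

Enforcing monotonicity keeps the ordering of depths in the image intact even when individual landmark depths are noisy; passing the knots through a monotone cubic interpolant instead of `np.interp` would give a smoother curve closer in spirit to spline fitting.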

Rapid Quadrotor Navigation in Diverse Environments using an Onboard Depth Camera

Nov 07, 2024

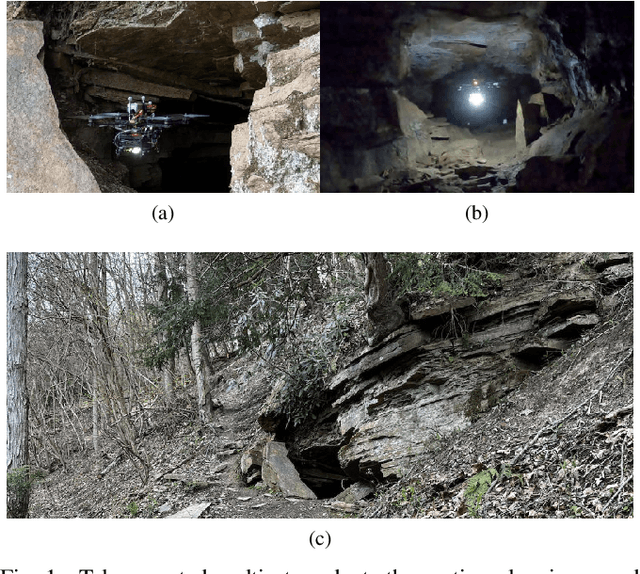

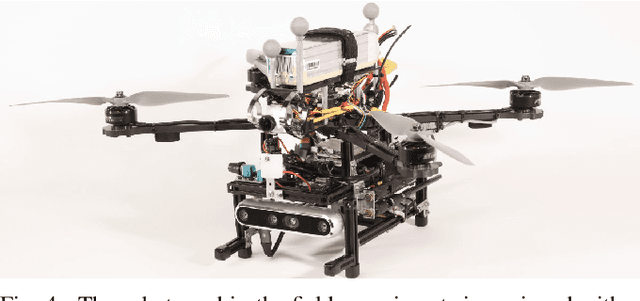

Abstract: Search and rescue environments exhibit challenging 3D geometry (e.g., confined spaces, rubble, and breakdown), which necessitates agile and maneuverable aerial robotic systems. Because these systems are size, weight, and power (SWaP) constrained, rapid navigation is essential for maximizing environment coverage. Onboard autonomy must be robust to prevent collisions, which may endanger rescuers and victims. Prior works have developed high-speed navigation solutions for autonomous aerial systems, but few have considered safety for search and rescue applications. These works have also not demonstrated their approaches in diverse environments. We bridge this gap in the state of the art by developing a reactive planner using forward-arc motion primitives, which leverages a history of RGB-D observations to safely maneuver in close proximity to obstacles. At every planning round, a safe stopping action is scheduled, which is executed if no feasible motion plan is found at the next planning round. The approach is evaluated in thousands of simulations and deployed in diverse environments, including caves and forests. The results demonstrate a 24% increase in success rate compared to state-of-the-art approaches.
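The planning round described in the abstract can be illustrated with a toy version: sample forward arcs with a constant speed and yaw rate, discard any arc that passes too close to an obstacle, pick the remaining arc that ends nearest the goal, and return `None` to signal that the stopping action scheduled in the previous round should run. Everything here is a simplifying assumption for illustration: a 2D unicycle model, point obstacles, a clearance `radius`, a goal-distance cost, and hypothetical names (`Arc`, `rollout`, `plan_round`). The paper's primitives operate in 3D against a history of RGB-D observations.

```python
import math
from dataclasses import dataclass


@dataclass
class Arc:
    speed: float     # m/s, constant along the arc
    yaw_rate: float  # rad/s, constant along the arc


def rollout(x, y, yaw, arc, horizon_s=1.0, dt=0.1):
    """Integrate a unicycle along a constant-speed, constant-yaw-rate arc."""
    pts = []
    for _ in range(int(round(horizon_s / dt))):
        yaw += arc.yaw_rate * dt
        x += arc.speed * math.cos(yaw) * dt
        y += arc.speed * math.sin(yaw) * dt
        pts.append((x, y))
    return pts


def is_safe(pts, obstacles, radius=0.5):
    """True if every sampled point keeps more than `radius` clearance."""
    return all(math.dist(p, o) > radius for p in pts for o in obstacles)


def plan_round(state, goal, obstacles, speed=2.0,
               yaw_rates=(-1.0, -0.5, 0.0, 0.5, 1.0)):
    """Return the best safe arc, or None to execute the previously scheduled stop."""
    best, best_cost = None, math.inf
    for w in yaw_rates:
        arc = Arc(speed, w)
        pts = rollout(*state, arc)
        if not is_safe(pts, obstacles):
            continue
        cost = math.dist(pts[-1], goal)  # hypothetical cost: distance to goal at arc end
        if cost < best_cost:
            best, best_cost = arc, cost
    return best


state = (0.0, 0.0, 0.0)  # x [m], y [m], yaw [rad]
clear = plan_round(state, goal=(5.0, 0.0), obstacles=[])            # straight arc
blocked = plan_round(state, goal=(5.0, 0.0), obstacles=[(1.0, 0.0)])  # None: stop
```

Because a stop is always scheduled one round ahead, the vehicle never depends on finding a feasible arc in the current round to remain safe.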

Decentralized Uncertainty-Aware Active Search with a Team of Aerial Robots

Oct 11, 2024

Abstract: Rapid search and rescue is critical to maximizing survival rates following natural disasters. However, these efforts are challenged by the need to search large disaster zones, unreliable communications infrastructure, and a priori unknown numbers of objects of interest (OOIs), such as injured survivors. Aerial robots are increasingly deployed for search and rescue due to their high mobility, but there remains a gap in deploying multi-robot autonomous aerial systems for methodical search of large environments. Prior works have relied on preprogrammed paths from human operators or have been evaluated only in simulation. We bridge these gaps in the state of the art by developing and demonstrating a decentralized active search system, which biases its trajectories to take additional views of uncertain OOIs. The methodology leverages stochasticity for rapid coverage in communication-denied scenarios. When communications are available, robots share poses, goals, and OOI information to accelerate the rate of search. Extensive simulations and hardware experiments in Bloomingdale, OH, are conducted to validate the approach. The results demonstrate that the active search approach outperforms greedy coverage-based planning in communication-denied scenarios while maintaining comparable performance in communication-enabled scenarios.
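The abstract does not specify how OOI uncertainty is represented. One common choice, shown here only as an assumption, is a binary Bayes filter on the probability that a detected OOI is real, updated after each view using detector true-positive and false-alarm rates (`p_detect` and `p_false` are hypothetical values). The Bernoulli entropy of that probability then indicates which OOI would benefit most from an additional view.

```python
import math


def update_existence(prior, detected, p_detect=0.8, p_false=0.1):
    """Binary Bayes update of P(OOI exists) after one view of its location."""
    if detected:
        like_yes, like_no = p_detect, p_false
    else:
        like_yes, like_no = 1.0 - p_detect, 1.0 - p_false
    num = like_yes * prior
    return num / (num + like_no * (1.0 - prior))


def entropy(p):
    """Bernoulli entropy in bits: 1.0 at p = 0.5, 0.0 at p = 0 or 1."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -(p * math.log2(p) + (1.0 - p) * math.log2(1.0 - p))


def most_uncertain(oois):
    """Return the id of the OOI whose existence probability has maximal entropy."""
    return max(oois, key=lambda k: entropy(oois[k]))


p_seen = update_existence(0.5, detected=True)     # 0.4 / 0.45 = 8/9
p_missed = update_existence(0.5, detected=False)  # 0.1 / 0.55 = 2/11
target = most_uncertain({"a": 0.95, "b": 0.55, "c": 0.1})  # "b"
```

A planner could then add a reward for trajectories that view `target`, which is one way to bias motion toward uncertain OOIs without abandoning coverage.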

Distance and Collision Probability Estimation from Gaussian Surface Models

Jan 31, 2024

Abstract: This paper describes continuous-space methodologies to estimate the collision probability, Euclidean distance, and gradient between an ellipsoidal robot model and an environment surface modeled as a set of Gaussian distributions. Continuous-space collision probability estimation is critical for uncertainty-aware motion planning. Most collision detection and avoidance approaches model the robot as a sphere, but ellipsoidal representations provide tighter approximations and enable navigation in cluttered and narrow spaces. State-of-the-art methods derive the Euclidean distance and gradient by processing raw point clouds, which is computationally expensive for large workspaces. Recent advances in Gaussian surface modeling (e.g., mixture models and splatting) enable compressed, high-fidelity surface representations. Few methods exist to estimate continuous-space occupancy from such models; those that do require Gaussians to model free space and cannot estimate the collision probability, Euclidean distance, and gradient for an ellipsoidal robot. The proposed methods bridge this gap by extending prior work on ellipsoid-to-ellipsoid Euclidean distance and collision probability estimation to Gaussian surface models. A geometric blending approach is also proposed to improve collision probability estimation. The approaches are evaluated with numerical 2D and 3D experiments using real-world point cloud data.
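As a reference point for the closed-form estimators the abstract describes (and not a reproduction of them), the collision probability between an ellipsoidal robot and a single Gaussian surface component can be estimated by Monte Carlo: draw samples from the Gaussian and count the fraction that falls inside the robot ellipsoid. The sketch assumes the ellipsoid is the set of points x with (x - c)^T A^{-1} (x - c) <= 1, where A = R diag(r^2) R^T; `ellipsoid_shape` and `mc_collision_probability` are hypothetical helper names. A sampler like this is mainly useful for sanity-checking a faster analytic estimate.

```python
import numpy as np


def ellipsoid_shape(radii, rotation=None):
    """Shape matrix A = R diag(r^2) R^T for semi-axis lengths `radii`."""
    R = np.eye(len(radii)) if rotation is None else np.asarray(rotation, dtype=float)
    return R @ np.diag(np.square(radii)) @ R.T


def mc_collision_probability(center, shape, mean, cov, n=100_000, seed=0):
    """Fraction of Gaussian samples inside {x : (x - c)^T A^-1 (x - c) <= 1}."""
    rng = np.random.default_rng(seed)
    samples = rng.multivariate_normal(mean, cov, size=n)
    d = samples - np.asarray(center, dtype=float)
    # Solve A z = d^T rather than forming A^-1 explicitly.
    z = np.linalg.solve(shape, d.T)
    inside = np.einsum("ij,ji->i", d, z) <= 1.0
    return inside.mean()


I3 = np.eye(3)
unit_sphere = ellipsoid_shape([1.0, 1.0, 1.0])
# Unit sphere on a standard Gaussian: close to the chi-square(3) CDF at 1, about 0.199.
p_unit = mc_collision_probability(np.zeros(3), unit_sphere, np.zeros(3), I3)
# Robot far from the component: essentially zero.
p_far = mc_collision_probability([100.0, 0.0, 0.0], unit_sphere, np.zeros(3), I3)
```

For a surface made of many Gaussians, one simple (and again assumed) extension is to sample from the full mixture using its weights; the paper's geometric blending addresses the combination step differently.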

Incremental Multimodal Surface Mapping via Self-Organizing Gaussian Mixture Models

Sep 19, 2023

Abstract: This letter describes an incremental multimodal surface mapping methodology that represents the environment as a continuous probabilistic model. This model enables high-resolution reconstruction while simultaneously compressing spatial and intensity point cloud data. The strategy employed in this work uses Gaussian mixture models (GMMs) to represent the environment. Prior GMM-based mapping works have developed methodologies to determine the number of mixture components using information-theoretic techniques, but these approaches either operate on individual sensor observations, making them unsuitable for incremental mapping, or cannot run in real time, especially for applications that require high-fidelity modeling. To bridge this gap, this letter introduces a spatial hash map for rapid GMM submap extraction combined with an approach to determine relevant and redundant data in a point cloud. These contributions increase computational speed by an order of magnitude compared to state-of-the-art incremental GMM-based mapping. In addition, the proposed approach yields a superior tradeoff between map accuracy and size when compared to state-of-the-art mapping methodologies (both GMM-based and non-GMM-based). Evaluations are conducted using both simulated and real-world data. The software is released open-source to benefit the robotics community.
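The general idea behind a spatial hash for submap extraction can be sketched as follows: bucket each Gaussian component by the voxel that contains its mean, so that extracting the components near a query box only touches the overlapping voxels instead of scanning the whole map. The class name, `voxel_size`, and the choice to key on the mean alone are assumptions for illustration; keying on the mean can miss a wide component whose mean lies outside the box but whose support overlaps it, and the released software may handle this differently.

```python
import math
from collections import defaultdict


class GaussianSpatialHash:
    """Buckets GMM component indices by the voxel containing each mean."""

    def __init__(self, voxel_size):
        self.voxel_size = voxel_size
        self.buckets = defaultdict(list)

    def key(self, p):
        """Integer voxel coordinates of a 3D point."""
        return tuple(math.floor(c / self.voxel_size) for c in p)

    def insert(self, idx, mean):
        self.buckets[self.key(mean)].append(idx)

    def query_box(self, lo, hi):
        """Indices whose mean-voxel overlaps the axis-aligned box [lo, hi]."""
        klo, khi = self.key(lo), self.key(hi)
        out = []
        for i in range(klo[0], khi[0] + 1):
            for j in range(klo[1], khi[1] + 1):
                for k in range(klo[2], khi[2] + 1):
                    # .get() avoids creating empty buckets in the defaultdict.
                    out.extend(self.buckets.get((i, j, k), []))
        return out


index = GaussianSpatialHash(voxel_size=1.0)
means = [(0.2, 0.2, 0.2), (1.5, 0.1, 0.3), (5.0, 5.0, 5.0)]
for idx, mu in enumerate(means):
    index.insert(idx, mu)
submap = index.query_box((0.0, 0.0, 0.0), (1.9, 1.0, 1.0))  # components 0 and 1
```

The query returns every component in the overlapping voxels, a superset of those whose means lie strictly inside the box, so an exact filter can be applied afterward if needed.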

GIRA: Gaussian Mixture Models for Inference and Robot Autonomy

Jun 30, 2023

Abstract: Large-scale deployments of robot teams are challenged by the need to share high-resolution perceptual information over low-bandwidth communication channels. Individual size-, weight-, and power-constrained robots rely on environment models to assess navigability and safely traverse unstructured and complex environments. State-of-the-art perception frameworks construct these models via multiple disparate pipelines that reuse the same underlying sensor data, which leads to increased computation, redundancy, and complexity. To bridge this gap, this paper introduces GIRA, an open-source framework for compact, high-resolution environment modeling using Gaussian mixture models (GMMs). GIRA provides fundamental robotics capabilities such as high-fidelity reconstruction, pose estimation, and occupancy modeling in a single continuous representation.
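The abstract does not describe GIRA's interfaces, so the sketch below is a generic illustration, not GIRA's API: it evaluates the density of a 3D GMM at query points and applies a hypothetical fixed `threshold` to produce a crude occupied/free label. It shows how one continuous representation can answer an occupancy-style query directly, without building a separate voxel grid.

```python
import numpy as np


def gmm_density(points, weights, means, covs):
    """Evaluate sum_k w_k N(x; mu_k, Sigma_k) at each row of `points`."""
    points = np.atleast_2d(np.asarray(points, dtype=float))
    dim = points.shape[1]
    total = np.zeros(len(points))
    for w, mu, cov in zip(weights, means, covs):
        d = points - mu
        sol = np.linalg.solve(cov, d.T)        # Sigma^-1 (x - mu), one column per point
        maha = np.einsum("ij,ji->i", d, sol)   # squared Mahalanobis distance per point
        norm = np.sqrt((2.0 * np.pi) ** dim * np.linalg.det(cov))
        total += w * np.exp(-0.5 * maha) / norm
    return total


def occupied(points, weights, means, covs, threshold=1e-2):
    """Hypothetical rule: label a point occupied if the GMM density exceeds `threshold`."""
    return gmm_density(points, weights, means, covs) > threshold


weights, means, covs = [1.0], [np.zeros(3)], [np.eye(3)]
peak = gmm_density([[0.0, 0.0, 0.0]], weights, means, covs)[0]  # (2*pi)**-1.5, about 0.0635
labels = occupied([[0.0, 0.0, 0.0], [10.0, 0.0, 0.0]], weights, means, covs)  # [True, False]
```

A single density threshold ignores sensor free-space information and component scale, so it is only a starting point for a proper occupancy model.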

Probabilistic Point Cloud Modeling via Self-Organizing Gaussian Mixture Models

Jan 31, 2023

Abstract: This letter presents a continuous probabilistic modeling methodology for spatial point cloud data using finite Gaussian mixture models (GMMs) in which the number of components is adapted based on scene complexity. Few hierarchical and adaptive methods have been proposed to address the challenge of balancing model fidelity with size. Instead, state-of-the-art mapping approaches require parameters tuned for specific use cases and do not generalize across diverse environments. To address this gap, we use a self-organizing principle from information-theoretic learning to automatically adapt the complexity of the GMM based on the relevant information in the sensor data. The approach is evaluated against existing point cloud modeling techniques on real-world data with varying degrees of scene complexity.

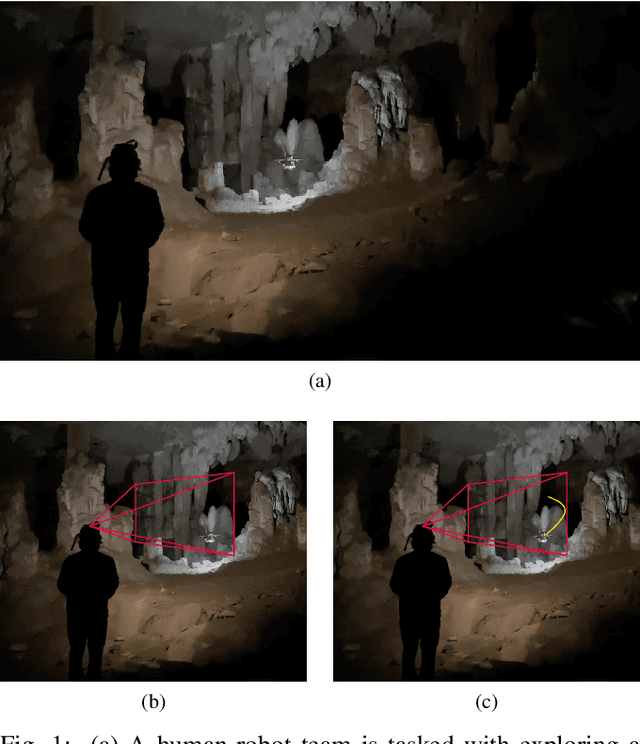

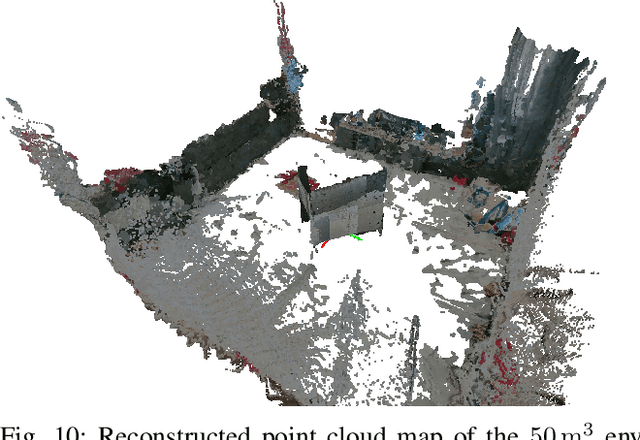

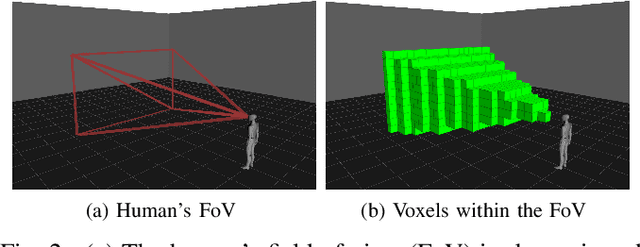

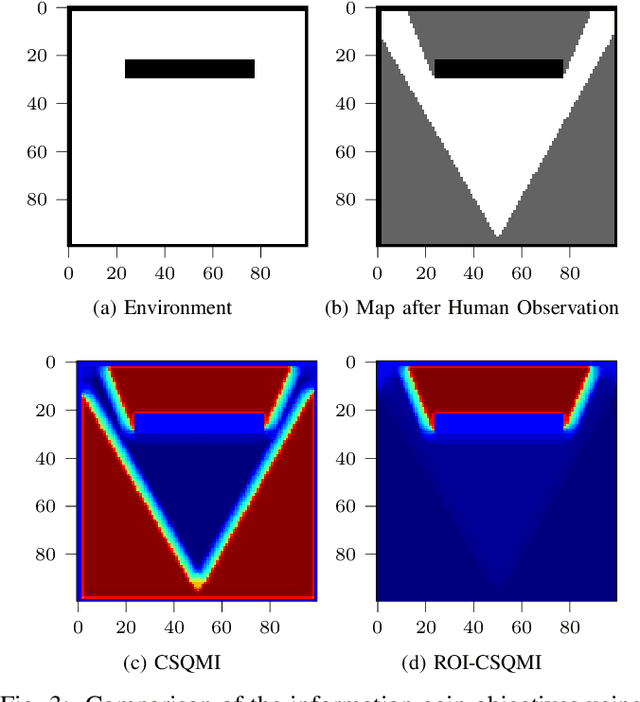

Collaborative Human-Robot Exploration via Implicit Coordination

Sep 19, 2022

Abstract: This paper develops a methodology for collaborative human-robot exploration that leverages implicit coordination. Most autonomous single- and multi-robot exploration systems require a remote operator to provide explicit guidance to the robotic team. Few works consider how to embed the human partner alongside robots to provide guidance in the field. A remaining challenge for collaborative human-robot exploration is the efficient communication of goals from the human to the robot. In this paper, we develop a methodology that implicitly communicates a region of interest to the robot from a helmet-mounted depth camera worn by the human, together with an information gain-based exploration objective that biases motion planning within the viewpoint provided by the human. The result is an aerial system that safely accesses regions of interest that may not be immediately viewable or reachable by the human. The approach is evaluated in simulation and with hardware experiments in a motion capture arena. Videos of the simulation and hardware experiments are available at: https://youtu.be/7jgkBpVFIoE.
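One simple way to bias an information gain-based objective toward the human's viewpoint, offered as an assumption rather than the paper's formulation, is to model the human's region of interest as a cone along the helmet camera's gaze and multiply the information gain of candidate viewpoints inside that cone by a bias factor. The cone half-angle, maximum range, `bias` weight, and the per-candidate `gain` values (for example, counts of unknown voxels) are all hypothetical.

```python
import math


def in_view_cone(point, eye, gaze, half_angle_rad, max_range):
    """True if `point` lies inside the cone along `gaze` starting at `eye`."""
    v = [p - e for p, e in zip(point, eye)]
    dist = math.hypot(*v)
    if dist == 0.0 or dist > max_range:
        return False
    cos_angle = sum(a * b for a, b in zip(v, gaze)) / (dist * math.hypot(*gaze))
    return cos_angle >= math.cos(half_angle_rad)


def biased_score(gain, point, eye, gaze, half_angle_rad=math.radians(30),
                 max_range=10.0, bias=5.0):
    """Scale information gain by `bias` inside the human's region of interest."""
    inside = in_view_cone(point, eye, gaze, half_angle_rad, max_range)
    return gain * (bias if inside else 1.0)


eye, gaze = (0.0, 0.0, 1.7), (1.0, 0.0, 0.0)  # helmet camera pose (hypothetical)
score_ahead = biased_score(10.0, (5.0, 0.0, 1.7), eye, gaze)  # 50.0: inside the cone
score_side = biased_score(30.0, (0.0, 5.0, 1.7), eye, gaze)   # 30.0: outside the cone
```

With these numbers the candidate in front of the human wins even though its raw gain is lower, which is the intended effect of implicitly communicated interest.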

Hierarchical Collision Avoidance for Adaptive-Speed Multirotor Teleoperation

Sep 17, 2022

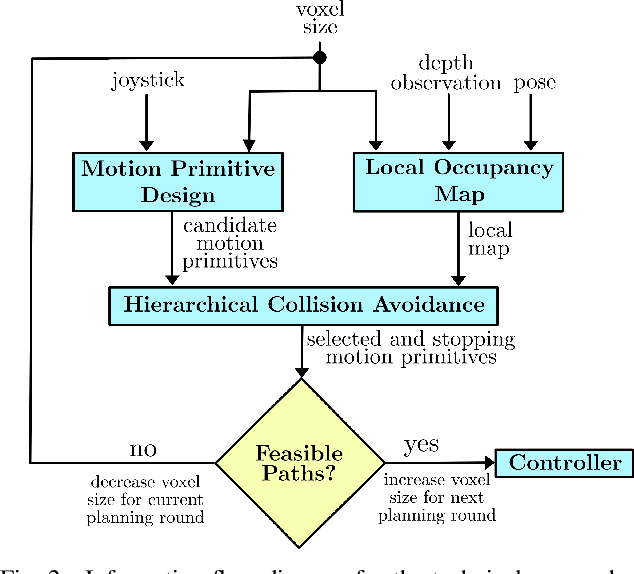

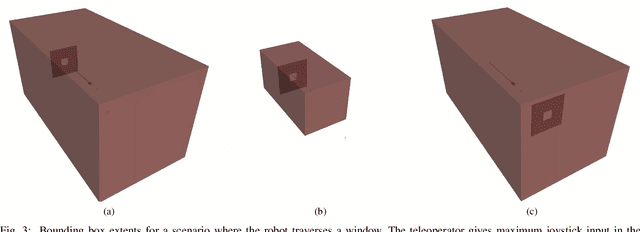

Abstract: This paper improves safe motion primitives-based teleoperation of a multirotor by developing a hierarchical collision avoidance method that modulates maximum speed based on environment complexity and perceptual constraints. Safe speed modulation is challenging in environments with varying clutter. Existing methods fix the maximum speed and map resolution, which prevents vehicles from accessing tight spaces and places the cognitive load of changing speed on the operator. We address these gaps by proposing a high-rate (10 Hz) teleoperation approach that modulates the maximum vehicle speed through hierarchical collision checking. The hierarchical collision checker simultaneously adapts the local map's voxel size and the maximum vehicle speed to ensure motion planning safety. The proposed methodology is evaluated in simulation and real-world experiments and compared to a non-adaptive motion primitives-based teleoperation approach. The results demonstrate the advantages of the proposed approach in both task completion time and the ability to complete the task without requiring the user to specify a maximum vehicle speed.
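A minimal sketch of coupling voxel size and maximum speed in a coarse-to-fine collision check: voxelize obstacle points at each level, test the sampled primitive against the occupied voxels, and accept the coarsest level whose check passes. Coarse voxels are conservative, so a narrow gap can look blocked at a coarse level and free at a finer one; at finer levels the sketch also lowers the speed. The level table `LEVELS` pairing voxel sizes with speeds is hypothetical, as are the helper names; the paper's checker adapts both quantities by its own rules, which the abstract does not detail.

```python
import math

# Hypothetical (voxel size [m], max speed [m/s]) levels: finer maps paired with slower speeds.
LEVELS = ((0.4, 4.0), (0.2, 2.0), (0.1, 1.0))


def voxel_key(p, voxel):
    """Integer voxel coordinates of a point at the given voxel size."""
    return tuple(math.floor(c / voxel) for c in p)


def path_free(path, obstacles, voxel):
    """True if no sampled path point shares a voxel with an obstacle point."""
    occ = {voxel_key(o, voxel) for o in obstacles}
    return all(voxel_key(p, voxel) not in occ for p in path)


def select_level(path, obstacles, levels=LEVELS):
    """Return (voxel, v_max) of the coarsest collision-free level, or None."""
    for voxel, v_max in levels:
        if path_free(path, obstacles, voxel):
            return voxel, v_max
    return None  # no level is safe: the primitive is rejected


path = [(i * 0.05, 0.03) for i in range(41)]  # straight primitive along x
open_space = select_level(path, [])              # (0.4, 4.0)
near_wall = select_level(path, [(1.05, 0.17)])   # (0.1, 1.0): clear only at fine resolution
blocked = select_level(path, [(1.05, 0.03)])     # None: obstacle on the path
```

This mirrors the trade-off the abstract describes: the operator no longer chooses a speed, because the resolution needed to certify the motion determines how fast the vehicle is allowed to fly.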
