Kosta Dakic

A Multi-Drone Multi-View Dataset and Deep Learning Framework for Pedestrian Detection and Tracking

Nov 06, 2025

Abstract:Multi-drone surveillance systems offer enhanced coverage and robustness for pedestrian tracking, yet existing approaches struggle with dynamic camera positions and complex occlusions. This paper introduces MATRIX (Multi-Aerial TRacking In compleX environments), a comprehensive dataset featuring synchronized footage from eight drones with continuously changing positions, and a novel deep learning framework for multi-view detection and tracking. Unlike existing datasets that rely on static cameras or limited drone coverage, MATRIX provides a challenging scenario with 40 pedestrians and a significant architectural obstruction in an urban environment. Our framework addresses the unique challenges of dynamic drone-based surveillance through real-time camera calibration, feature-based image registration, and multi-view feature fusion in bird's-eye-view (BEV) representation. Experimental results demonstrate that while static camera methods maintain over 90% on detection and tracking precision and accuracy metrics in a simplified MATRIX environment (no obstruction, 10 pedestrians, and a much smaller observation area), their performance degrades significantly in the complex environment. Our proposed approach maintains robust performance with ~90% detection and tracking accuracy and successfully tracks ~80% of trajectories under challenging conditions. Transfer learning experiments reveal strong generalization capabilities, with the pretrained model achieving much higher detection and tracking accuracy than a model trained from scratch. Additionally, systematic camera dropout experiments reveal graceful performance degradation, demonstrating practical robustness for real-world deployments where camera failures may occur. The MATRIX dataset and framework provide essential benchmarks for advancing dynamic multi-view surveillance systems.
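The abstract mentions BEV fusion and camera dropout without giving details. As a rough illustration only, the sketch below maps an image point to the ground plane with a 3x3 homography and fuses per-camera BEV maps by averaging over the cameras that are still available. The function names, the use of a planar homography, and the mean-based fusion are all assumptions made for this example; the paper's framework fuses learned features, and its actual method is not described here.

```python
def project_to_ground(H, u, v):
    """Map pixel (u, v) to ground-plane coordinates using a 3x3 homography H.

    H is a hypothetical calibration result given as a list of three rows.
    """
    x = H[0][0] * u + H[0][1] * v + H[0][2]
    y = H[1][0] * u + H[1][1] * v + H[1][2]
    w = H[2][0] * u + H[2][1] * v + H[2][2]
    if abs(w) < 1e-12:
        raise ValueError("point maps to infinity under this homography")
    return (x / w, y / w)


def fuse_bev(maps):
    """Average per-camera BEV grids, skipping cameras that dropped out (None).

    Mean fusion is a stand-in chosen for simplicity, not the paper's method.
    """
    active = [m for m in maps if m is not None]
    if not active:
        raise ValueError("no camera views available")
    rows, cols = len(active[0]), len(active[0][0])
    return [
        [sum(m[r][c] for m in active) / len(active) for c in range(cols)]
        for r in range(rows)
    ]


# A pure translation homography shifts the point by (5, -3).
H_shift = [[1, 0, 5], [0, 1, -3], [0, 0, 1]]
ground_point = project_to_ground(H_shift, 2, 4)

# The second camera has failed; fusion uses the remaining two views.
fused = fuse_bev([[[1.0, 0.0]], None, [[0.0, 0.0]]])
```

Averaging only over the views that are present is one simple way to keep the fused map on the same scale when a camera fails, which is the kind of behavior a dropout experiment would probe.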

Resource-Efficient Multiview Perception: Integrating Semantic Masking with Masked Autoencoders

Oct 07, 2024

Abstract:Multiview systems have become a key technology in modern computer vision, offering advanced capabilities in scene understanding and analysis. However, these systems face critical challenges in bandwidth limitations and computational constraints, particularly for resource-limited camera nodes like drones. This paper presents a novel approach for communication-efficient distributed multiview detection and tracking using masked autoencoders (MAEs). We introduce a semantic-guided masking strategy that leverages pre-trained segmentation models and a tunable power function to prioritize informative image regions. This approach, combined with an MAE, reduces communication overhead while preserving essential visual information. We evaluate our method on both virtual and real-world multiview datasets, demonstrating detection and tracking performance comparable to state-of-the-art techniques, even at high masking ratios. Our selective masking algorithm outperforms random masking, maintaining higher accuracy and precision as the masking ratio increases. Furthermore, our approach achieves a significant reduction in transmitted data volume compared to baseline methods, thereby balancing multiview tracking performance with communication efficiency.
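One way to picture semantic-guided masking with a tunable power function is sketched below. It assumes each image patch already has a relevance score in [0, 1] from a segmentation model, raises the scores to a power to sharpen or flatten the preference, and samples the patches to keep without replacement. The function name `select_patches`, the parameter names, and the sampling scheme (Efraimidis-Spirakis weighted sampling) are assumptions for illustration, not the paper's algorithm.

```python
import random


def select_patches(scores, mask_ratio, power=2.0, seed=0):
    """Return sorted indices of the patches to keep (that is, to transmit).

    scores     : hypothetical per-patch relevance in [0, 1] from a segmentation model
    mask_ratio : fraction of patches to mask out, in [0, 1)
    power      : exponent of the power function; larger values favor
                 high-score patches more strongly, 0 gives uniform sampling
    """
    if not 0.0 <= mask_ratio < 1.0:
        raise ValueError("mask_ratio must be in [0, 1)")
    rng = random.Random(seed)
    n_keep = max(1, round(len(scores) * (1.0 - mask_ratio)))
    eps = 1e-6  # keeps every weight strictly positive
    weights = [(s + eps) ** power for s in scores]
    # Weighted sampling without replacement: draw key u**(1/w) per patch
    # and keep the n_keep patches with the largest keys.
    keys = [(rng.random() ** (1.0 / w), i) for i, w in enumerate(weights)]
    keys.sort(reverse=True)
    return sorted(i for _, i in keys[:n_keep])


# 16 patches, four of which contain foreground according to the (assumed) scores.
scores = [0.0] * 16
for idx in (2, 5, 8, 11):
    scores[idx] = 1.0
kept = select_patches(scores, mask_ratio=0.75, power=2.0)
```

With `power=0` every patch gets the same weight, so the same routine also reproduces the random-masking baseline that the abstract compares against.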

Spiking Neural Networks for Detecting Satellite-Based Internet-of-Things Signals

Apr 08, 2023

Abstract:With the rapid growth of IoT networks, ubiquitous coverage is becoming increasingly necessary. Low Earth Orbit (LEO) satellite constellations for IoT have been proposed to provide coverage to regions where terrestrial systems cannot. However, LEO constellations for uplink communications are severely limited by the high density of user devices, which causes a high level of co-channel interference. This research presents a novel framework that utilizes spiking neural networks (SNNs) to detect IoT signals in the presence of uplink interference. The key advantage of SNNs is the extremely low power consumption relative to traditional deep learning (DL) networks. The performance of the spiking-based neural network detectors is compared against state-of-the-art DL networks and the conventional matched filter detector. Results indicate that both DL and SNN-based receivers surpass the matched filter detector in interference-heavy scenarios, owing to their capacity to effectively distinguish target signals amidst co-channel interference. Moreover, our work highlights the ultra-low power consumption of SNNs compared to other DL methods for signal detection. The strong detection performance and low power consumption of SNNs make them particularly suitable for onboard signal detection in IoT LEO satellites, especially in high interference conditions.
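For readers unfamiliar with spiking networks, the sketch below simulates a single leaky integrate-and-fire (LIF) neuron, a common textbook building block of SNNs. The abstract does not state which neuron model the paper uses, so the LIF choice, the function name, and the parameter values are assumptions for illustration. The point it shows is that the output is a sparse binary spike train, which is the usual argument for the low power consumption of SNN hardware.

```python
def lif_spike_train(inputs, leak=0.9, threshold=1.0):
    """Simulate one leaky integrate-and-fire neuron over a sequence of inputs.

    The membrane potential decays by `leak` each step, accumulates the input,
    and emits a spike (1) with a reset to zero when it reaches `threshold`.
    """
    potential = 0.0
    spikes = []
    for x in inputs:
        potential = leak * potential + x
        if potential >= threshold:
            spikes.append(1)
            potential = 0.0  # hard reset after firing
        else:
            spikes.append(0)
    return spikes


# Two weak inputs accumulate into one spike; a single strong input fires at once.
spikes = lif_spike_train([0.6, 0.6, 0.0, 0.0, 1.2])
```

Downstream layers only need to react when a spike arrives, so steps with no spike cost essentially no computation in event-driven implementations.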

HybNet: A Hybrid Deep Learning - Matched Filter Approach for IoT Signal Detection

Nov 23, 2021

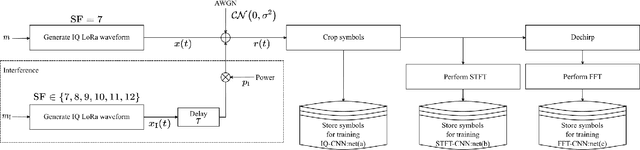

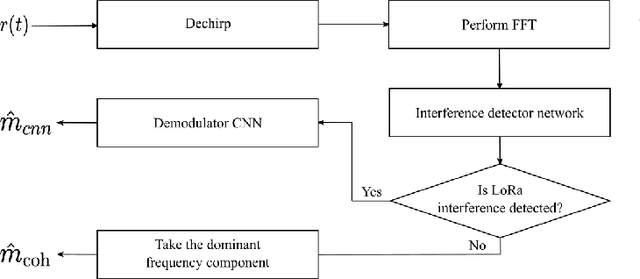

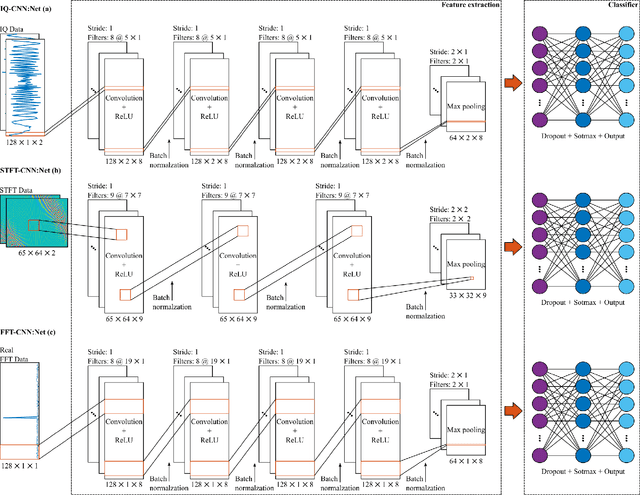

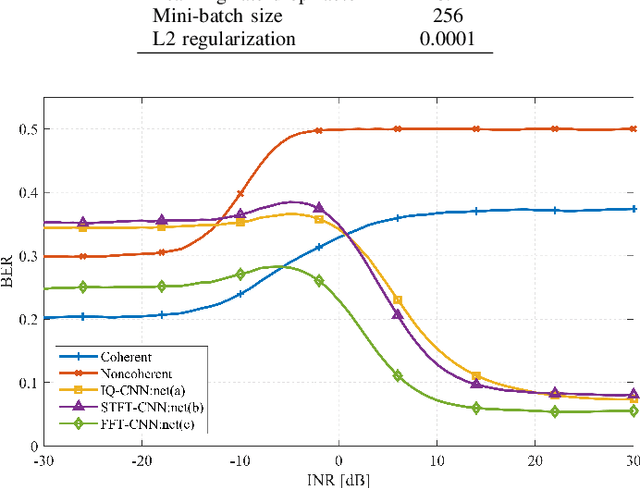

Abstract:Random access schemes are widely used in IoT wireless access networks to accommodate simplicity and power consumption constraints. As a result, the interference arising from overlapping IoT transmissions is a significant issue in such networks. Traditional signal detection methods are based on the well-established matched filter using the complex conjugate of the signal, which is provably the optimal filter under additive white Gaussian noise. However, with the colored interference arising from the overlapping IoT transmissions, deep learning approaches are being considered as a better alternative. In this paper, we present a hybrid framework, HybNet, that switches between deep learning and matched filter pathways based on the detected interference level. This helps the detector work in a broader range of conditions, leveraging both the optimality of the matched filter and the robustness of deep learning. We compare the performance of several possible data modalities and detection architectures with respect to the interference-to-noise ratio, demonstrating that the proposed HybNet surpasses the complex conjugate matched filter performance under interference-limited scenarios.
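The two pathways described above can be sketched as follows. The matched filter correlates the received samples with the complex conjugate of a known template, and a simple rule routes the decision to a learned detector when the estimated interference-to-noise ratio (INR) is high. The function names, the 0 dB switching point, the 0.5 detection threshold, and the stub `dl_detector` callable are all hypothetical; the abstract only states that HybNet switches pathways based on the detected interference level.

```python
def matched_filter_statistic(received, template):
    """Peak correlation magnitude against the conjugated template,
    normalized by the template energy (template must be non-zero)."""
    n, m = len(received), len(template)
    peak = 0.0
    for lag in range(n - m + 1):
        acc = sum(received[lag + k] * template[k].conjugate() for k in range(m))
        peak = max(peak, abs(acc))
    energy = sum(abs(t) ** 2 for t in template)
    return peak / energy


def hybrid_detect(received, template, inr_db, dl_detector=None,
                  inr_switch_db=0.0, mf_threshold=0.5):
    """Use the learned detector above the (assumed) INR switch point,
    otherwise fall back to the matched filter."""
    if dl_detector is not None and inr_db > inr_switch_db:
        return bool(dl_detector(received))
    return matched_filter_statistic(received, template) >= mf_threshold


# Noise-free toy example: the template appears at offset 3 in the received samples.
template = [1 + 0j, 1j, -1 + 0j, -1j]
received = [0j] * 3 + template + [0j] * 3
stat_present = matched_filter_statistic(received, template)
stat_absent = matched_filter_statistic([0j] * 10, template)
low_inr_decision = hybrid_detect(received, template, inr_db=-10.0)
high_inr_decision = hybrid_detect(received, template, inr_db=10.0,
                                  dl_detector=lambda samples: True)
```

In practice the INR would itself have to be estimated from the received samples, and the learned pathway would be a trained network rather than a stub.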
