Kiran Vaidhya Venkadesh

Medical Imaging AI Competitions Lack Fairness

Dec 19, 2025

Abstract: Benchmarking competitions are central to the development of artificial intelligence (AI) in medical imaging, defining performance standards and shaping methodological progress. However, it remains unclear whether these benchmarks provide data that are sufficiently representative, accessible, and reusable to support clinically meaningful AI. In this work, we assess fairness along two complementary dimensions: (1) whether challenge datasets are representative of real-world clinical diversity, and (2) whether they are accessible and legally reusable in line with the FAIR principles. To address this question, we conducted a large-scale systematic study of 241 biomedical image analysis challenges comprising 458 tasks across 19 imaging modalities. Our findings show substantial biases in dataset composition, including geographic location-, modality-, and problem type-related biases, indicating that current benchmarks do not adequately reflect real-world clinical diversity. Despite their widespread influence, challenge datasets were frequently constrained by restrictive or ambiguous access conditions, inconsistent or non-compliant licensing practices, and incomplete documentation, limiting reproducibility and long-term reuse. Together, these shortcomings expose foundational fairness limitations in our benchmarking ecosystem and highlight a disconnect between leaderboard success and clinical relevance.

Robust Kidney Abnormality Segmentation: A Validation Study of an AI-Based Framework

May 12, 2025

Abstract: Kidney abnormality segmentation has important potential to enhance the clinical workflow, especially in settings requiring quantitative assessments. Kidney volume could serve as an important biomarker for renal diseases, with changes in volume correlating directly with kidney function. Currently, clinical practice often relies on subjective visual assessment for evaluating kidney size and abnormalities, including tumors and cysts, which are typically staged based on diameter, volume, and anatomical location. To support a more objective and reproducible approach, this research aims to develop a robust, thoroughly validated kidney abnormality segmentation algorithm, made publicly available for clinical and research use. We employ publicly available training datasets and leverage the state-of-the-art medical image segmentation framework nnU-Net. Validation is conducted using both proprietary and public test datasets, with segmentation performance quantified by Dice coefficient and the 95th percentile Hausdorff distance. Furthermore, we analyze robustness across subgroups based on patient sex, age, CT contrast phases, and tumor histologic subtypes. Our findings demonstrate that our segmentation algorithm, trained exclusively on publicly available data, generalizes effectively to external test sets and outperforms existing state-of-the-art models across all tested datasets. Subgroup analyses reveal consistent high performance, indicating strong robustness and reliability. The developed algorithm and associated code are publicly accessible at https://github.com/DIAGNijmegen/oncology-kidney-abnormality-segmentation.
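For readers unfamiliar with the Dice coefficient named in the abstract above, the following is a minimal sketch of how the overlap score is commonly computed for binary masks. It is not taken from the authors' repository: the function name `dice_coefficient`, the flat 0/1 mask representation, and the convention that two empty masks score 1.0 are all assumptions made for illustration.

```python
def dice_coefficient(pred, ref):
    """Dice overlap between two binary masks given as flat sequences of 0/1.

    Dice = 2 * |pred AND ref| / (|pred| + |ref|), ranging from 0 (no
    overlap) to 1 (identical masks).
    """
    if len(pred) != len(ref):
        raise ValueError("masks must contain the same number of voxels")
    # Voxels labeled foreground in both masks.
    intersection = sum(1 for p, r in zip(pred, ref) if p and r)
    # Total foreground voxels in each mask, summed.
    total = sum(1 for p in pred if p) + sum(1 for r in ref if r)
    # Assumed convention: two empty masks count as perfect agreement.
    return 1.0 if total == 0 else 2.0 * intersection / total


# Toy masks (made-up values): 2 shared foreground voxels, 3 in each mask.
predicted = [1, 1, 0, 0, 1]
reference = [1, 0, 0, 1, 1]
print(round(dice_coefficient(predicted, reference), 3))  # 0.667
```

A 3D segmentation would be flattened to this form first. The 95th percentile Hausdorff distance is a separate, boundary-based measure and is not covered by this sketch.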

Improving Automated COVID-19 Grading with Convolutional Neural Networks in Computed Tomography Scans: An Ablation Study

Sep 21, 2020

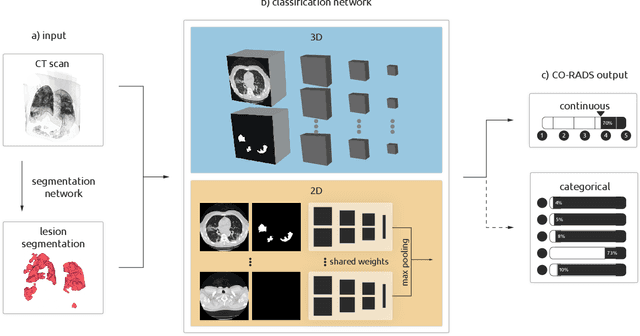

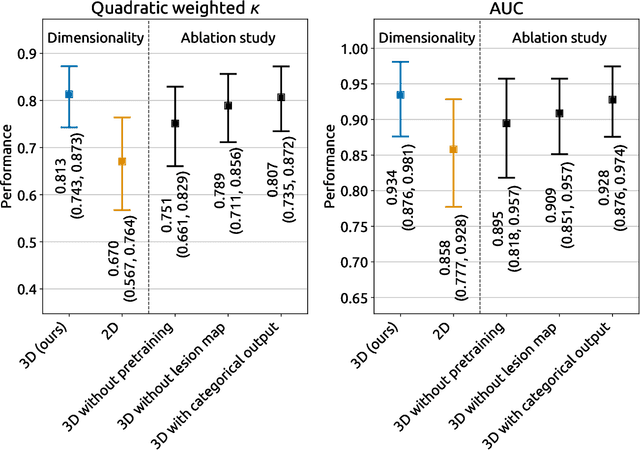

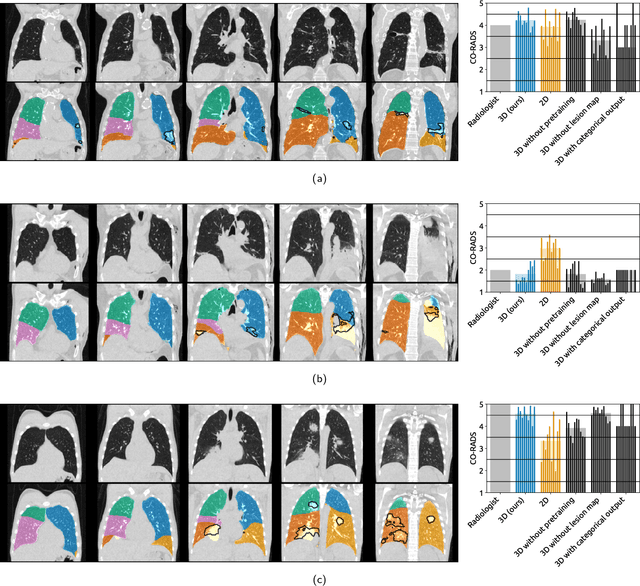

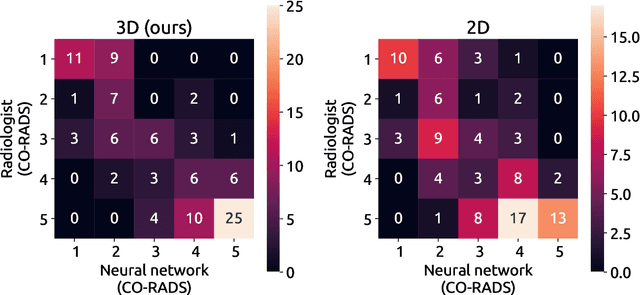

Abstract: Amidst the ongoing pandemic, several studies have shown that COVID-19 classification and grading using computed tomography (CT) images can be automated with convolutional neural networks (CNNs). Many of these studies focused on reporting initial results of algorithms that were assembled from commonly used components. The choice of these components was often pragmatic rather than systematic. For instance, several studies used 2D CNNs even though these might not be optimal for handling 3D CT volumes. This paper identifies a variety of components that increase the performance of CNN-based algorithms for COVID-19 grading from CT images. We investigated the effectiveness of using a 3D CNN instead of a 2D CNN, of using transfer learning to initialize the network, of providing automatically computed lesion maps as additional network input, and of predicting a continuous instead of a categorical output. A 3D CNN with these components achieved an area under the ROC curve (AUC) of 0.934 on our test set of 105 CT scans and an AUC of 0.923 on a publicly available set of 742 CT scans, a substantial improvement in comparison with a previously published 2D CNN. An ablation study demonstrated that, in addition to using a 3D CNN instead of a 2D CNN, transfer learning contributed the most and continuous output contributed the least to improving the model performance.
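The AUC values quoted in the abstract above summarize how well a continuous model output ranks positive cases above negative ones. As a minimal sketch, assuming binary labels and using the pairwise (Mann-Whitney) formulation, the metric can be computed as below. The function name `roc_auc` and the toy data are illustrative assumptions, not the authors' evaluation code.

```python
def roc_auc(labels, scores):
    """Area under the ROC curve via pairwise comparison.

    Equals the probability that a randomly chosen positive case receives
    a higher score than a randomly chosen negative case, with ties
    counted as one half.
    """
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    if not positives or not negatives:
        raise ValueError("need at least one positive and one negative case")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(positives) * len(negatives))


# Toy example (made-up values): 3 of the 4 positive/negative pairs are
# ranked correctly.
labels = [0, 0, 1, 1]
scores = [0.1, 0.4, 0.35, 0.8]
print(roc_auc(labels, scores))  # 0.75
```

The quadratic loop is fine for test sets of a few hundred scans. A rank-based implementation would scale better but computes the same quantity.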
