Kenneth Lai

Dynamic Epistemic Friction in Dialogue

Jun 12, 2025

Abstract:Recent developments in aligning Large Language Models (LLMs) with human preferences have significantly enhanced their utility in human-AI collaborative scenarios. However, such approaches often neglect the critical role of "epistemic friction," or the inherent resistance encountered when updating beliefs in response to new, conflicting, or ambiguous information. In this paper, we define dynamic epistemic friction as the resistance to epistemic integration, characterized by the misalignment between an agent's current belief state and new propositions supported by external evidence. We position this within the framework of Dynamic Epistemic Logic (Van Benthem and Pacuit, 2011), where friction emerges as nontrivial belief-revision during the interaction. We then present analyses from a situated collaborative task that demonstrate how this model of epistemic friction can effectively predict belief updates in dialogues, and we subsequently discuss how the model of belief alignment as a measure of epistemic resistance or friction can naturally be made more sophisticated to accommodate the complexities of real-world dialogue scenarios.

TRACE: Real-Time Multimodal Common Ground Tracking in Situated Collaborative Dialogues

Mar 12, 2025

Abstract:We present TRACE, a novel system for live *common ground* tracking in situated collaborative tasks. With a focus on fast, real-time performance, TRACE tracks the speech, actions, gestures, and visual attention of participants, uses these multimodal inputs to determine the set of task-relevant propositions that have been raised as the dialogue progresses, and tracks the group's epistemic position and beliefs toward them as the task unfolds. Amid increased interest in AI systems that can mediate collaborations, TRACE represents an important step forward for agents that can engage with multiparty, multimodal discourse.

Speech Is Not Enough: Interpreting Nonverbal Indicators of Common Knowledge and Engagement

Dec 08, 2024

Abstract:Our goal is to develop an AI Partner that can provide support for group problem solving and social dynamics. In multi-party working group environments, multimodal analytics is crucial for identifying non-verbal interactions of group members. In conjunction with their verbal participation, this creates a holistic understanding of collaboration and engagement that provides necessary context for the AI Partner. In this demo, we illustrate our present capabilities at detecting and tracking nonverbal behavior in student task-oriented interactions in the classroom, and the implications for tracking common ground and engagement.

Common Ground Tracking in Multimodal Dialogue

Mar 26, 2024

Abstract:Within Dialogue Modeling research in AI and NLP, considerable attention has been paid to "dialogue state tracking" (DST), which is the ability to update the representations of the speaker's needs at each turn in the dialogue by taking into account the past dialogue moves and history. Less studied but just as important to dialogue modeling, however, is "common ground tracking" (CGT), which identifies the shared belief space held by all of the participants in a task-oriented dialogue: the task-relevant propositions all participants accept as true. In this paper we present a method for automatically identifying the current set of shared beliefs and "questions under discussion" (QUDs) of a group with a shared goal. We annotate a dataset of multimodal interactions in a shared physical space with speech transcriptions, prosodic features, gestures, actions, and facets of collaboration, and operationalize these features for use in a deep neural model to predict moves toward construction of common ground. Model outputs cascade into a set of formal closure rules derived from situated evidence and belief axioms and update operations. We empirically assess the contribution of each feature type toward successful construction of common ground relative to ground truth, establishing a benchmark in this novel, challenging task.

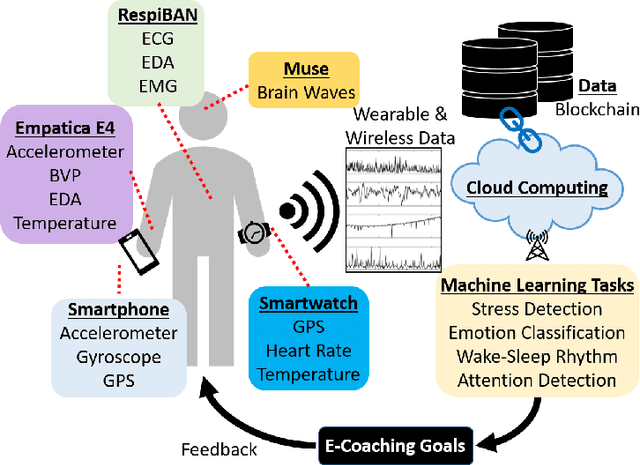

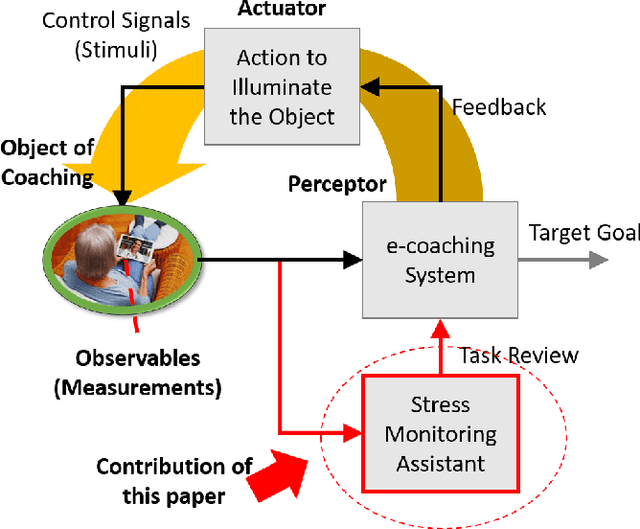

Intelligent Stress Assessment for e-Coaching

Nov 03, 2023

Abstract:This paper considers adapting the e-coaching concept to times of emergency and disaster by augmenting e-coaching with intelligent tools for monitoring human affective states. States such as anxiety, panic, avoidance, and stress, if properly detected, can be mitigated using e-coaching tactics and strategies. In this work, we focus on a stress monitoring assistant tool built on machine learning techniques. We provide the results of an experimental study using the proposed method.

Assessing Upper Limb Motor Function in the Immediate Post-Stroke Period Using Accelerometry

Nov 01, 2023

Abstract:Accelerometry has been extensively studied as an objective means of measuring upper limb function in patients post-stroke. The objective of this paper is to determine whether the accelerometry-derived measurements frequently used in more long-term rehabilitation studies can also be used to monitor and rapidly detect sudden changes in upper limb motor function in more recently hospitalized stroke patients. Six binary classification models were created by training on variable data window times of paretic upper limb accelerometer feature data. The models were assessed on their effectiveness for differentiating new input data into two classes: severe or moderately severe motor function. The classification models yielded Area Under the Curve (AUC) scores that ranged from 0.72 to 0.82 for 15-minute data windows to 0.77 to 0.94 for 120-minute data windows. These results served as a preliminary assessment and a basis on which to further investigate the efficacy of using accelerometry and machine learning to alert healthcare professionals to rapid changes in motor function in the days immediately following a stroke.

Hand Gesture Classification on Praxis Dataset: Trading Accuracy for Expense

Nov 01, 2023

Abstract:In this paper, we investigate hand gesture classifiers that rely upon the abstracted 'skeletal' data recorded using an RGB-Depth sensor. We focus on 'skeletal' data represented by the body joint coordinates from the PRAXIS dataset. The PRAXIS dataset contains recordings of patients with cortical pathologies, such as Alzheimer's disease, performing a Praxis test under the direction of a clinician. We propose hand gesture classifiers that are more effective with the PRAXIS dataset than previously proposed models. Body joint data offers a compressed form of data that can be analyzed specifically for hand gesture recognition. Using a combination of windowing techniques with a deep learning architecture such as a Recurrent Neural Network (RNN), we achieved an overall accuracy of 70.8% using only body joint data. In addition, we investigated a long short-term memory (LSTM) network to extract and analyze the movement of the joints through time to recognize the hand gestures being performed, and achieved gesture recognition rates of 74.3% and 67.3% for static and dynamic gestures, respectively. The proposed approach contributes to the task of developing an automated, accurate, and inexpensive approach to diagnosing cortical pathologies for multiple healthcare applications.

* 8 pages, 6 figures

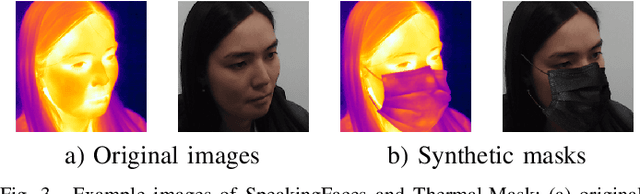

Fairness on Synthetic Visual and Thermal Mask Images

Sep 19, 2022

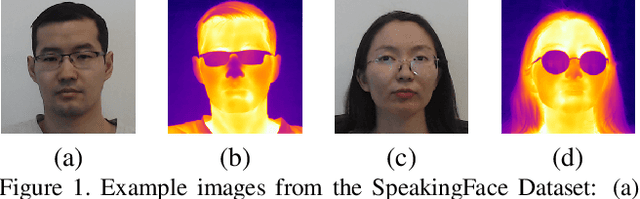

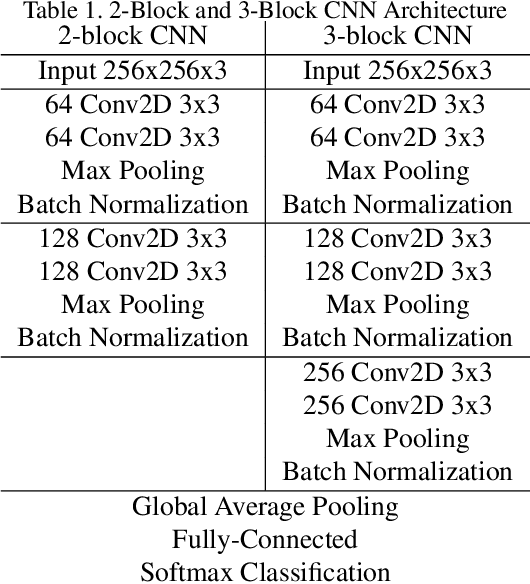

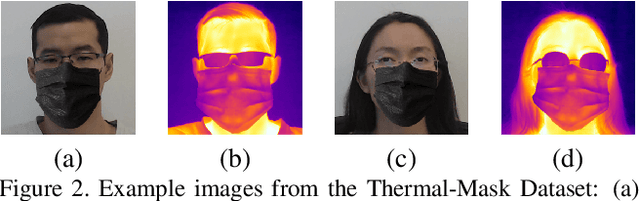

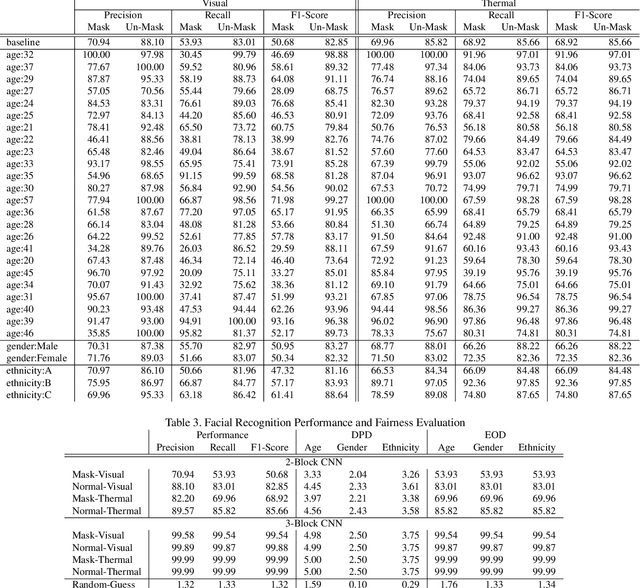

Abstract:In this paper, we study performance and fairness on visual and thermal images and expand the assessment to masked synthetic images. Using the SpeakingFaces and Thermal-Mask datasets, we propose a process to assess fairness on real images and show how the same process can be applied to synthetic images. The resulting process shows a demographic parity difference of 1.59 for random guessing, which increases to 5.0 when the recognition performance increases to a precision and recall rate of 99.99%. We indicate that inherently biased datasets can deeply impact the fairness of any biometric system. A primary cause of a biased dataset is the class imbalance due to the data collection process. To address imbalanced datasets, the classes with fewer samples can be augmented with synthetic images to generate a more balanced dataset, resulting in less bias when training a machine learning system. For biometric-enabled systems, fairness is of critical importance. While the related concept of Equity, Diversity, and Inclusion (EDI) is well suited for the generalization of fairness in biometrics, in this paper we focus on the three most common demographic groups: age, gender, and ethnicity.
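The abstract above reports demographic parity difference values without stating the formula. As a minimal sketch, assuming the commonly used definition (the gap between the highest and lowest positive-prediction rate across demographic groups), the metric could be computed as follows. The function name, the toy predictions, and the group labels are hypothetical and are not taken from the paper; the scale on which the paper reports its values is not given in the abstract.

```python
from collections import defaultdict


def demographic_parity_difference(predictions, groups):
    """Return (gap, per-group rates) under the assumed standard definition:
    gap = max positive-prediction rate - min positive-prediction rate."""
    positives = defaultdict(int)
    totals = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += int(pred == 1)
    # Selection rate: fraction of samples in each group predicted positive.
    rates = {g: positives[g] / totals[g] for g in totals}
    return max(rates.values()) - min(rates.values()), rates


# Hypothetical toy data: binary accept/reject decisions for two groups.
preds = [1, 0, 1, 1, 0, 1, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
dpd, rates = demographic_parity_difference(preds, groups)
print(rates, dpd)
```

A value of 0 would mean every group receives positive predictions at the same rate; larger values indicate a wider gap between the most and least favored groups.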

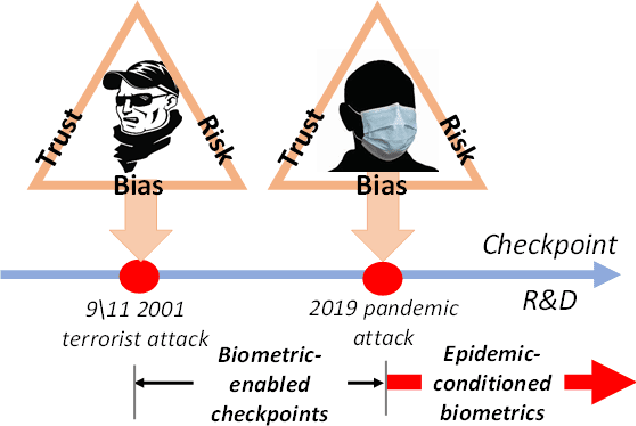

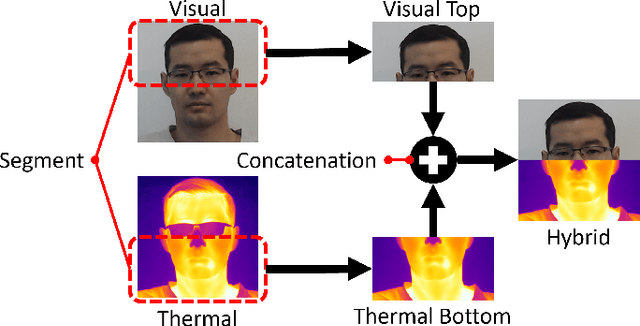

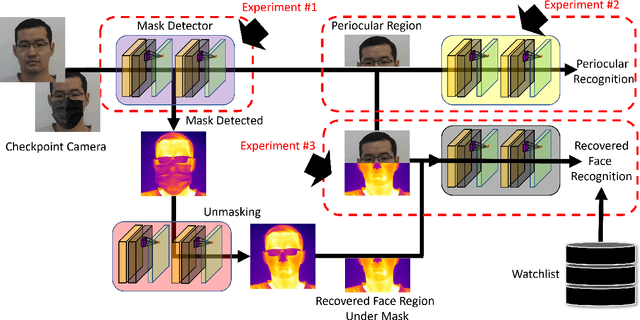

Biometrics in the Time of Pandemic: 40% Masked Face Recognition Degradation can be Reduced to 2%

Jan 03, 2022

Abstract:In this study of face recognition on masked versus unmasked faces generated using the Flickr-Faces-HQ and SpeakingFaces datasets, we report a 36.78% degradation in recognition performance caused by mask wearing during a pandemic, in particular in border checkpoint scenarios. We achieved better performance and reduced the degradation to 1.79% using advanced deep learning approaches in the cross-spectral domain.

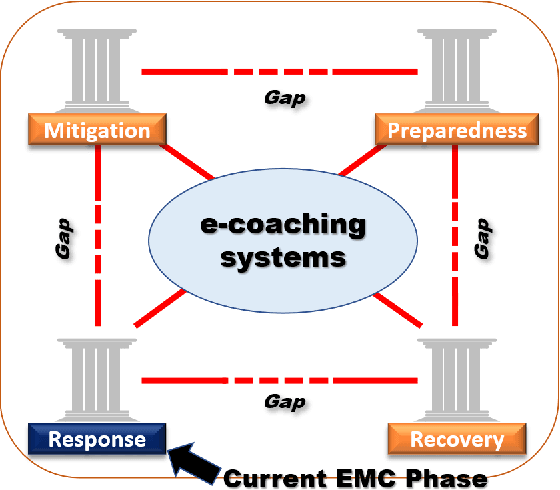

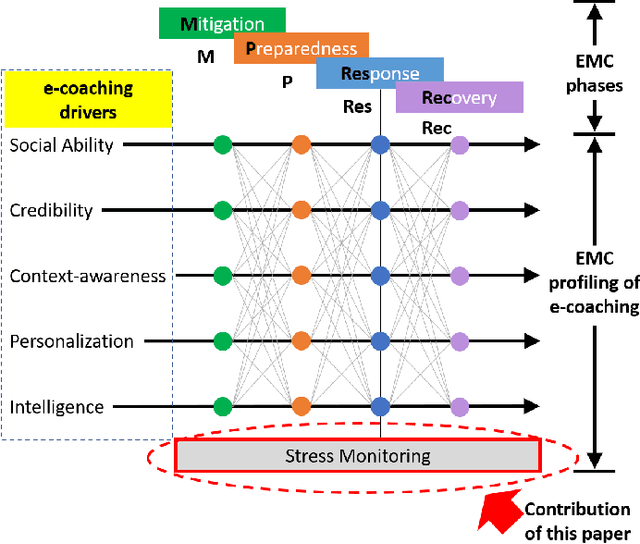

Counter-Epidemiological Projections of e-Coaching

May 24, 2021

Abstract:This paper considers e-coaching in times of pandemic. It utilizes the Emergency Management Cycle (EMC), a core doctrine for managing disasters. The EMC dimensions provide a useful taxonomical view for the development and application of e-coaching systems, emphasizing technological and societal issues. Typical pandemic symptoms such as anxiety, panic, avoidance, and stress, if properly detected, can be mitigated using e-coaching tactics and strategies. In this work, we focus on a stress monitoring assistant built on machine learning techniques. We provide the results of an experimental study of a prototype of such an assistant. Our study leads to the conclusion that stress monitoring should become a valuable component of e-coaching at all EMC phases.
