José Miguel Hernández-Lobato

Variational Depth Search in ResNets

Feb 27, 2020

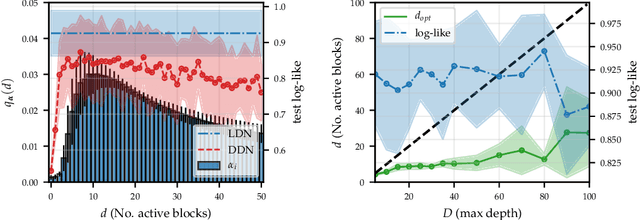

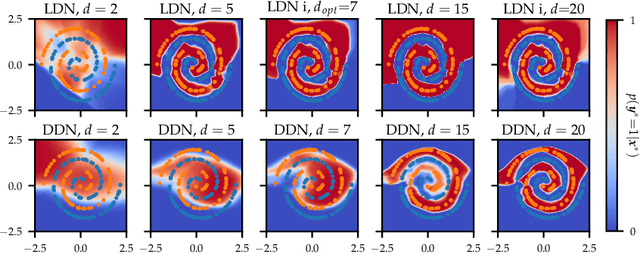

Abstract: One-shot neural architecture search allows joint learning of weights and network architecture, reducing computational cost. We limit our search space to the depth of residual networks and formulate an analytically tractable variational objective that allows for obtaining an unbiased approximate posterior over depths in one shot. We propose a heuristic to prune our networks based on this distribution. We compare our proposed method against manual search over network depths on the MNIST, Fashion-MNIST, and SVHN datasets. We find that pruned networks do not incur a loss in predictive performance, obtaining accuracies competitive with unpruned networks. Marginalising over depth allows us to obtain better-calibrated test-time uncertainty estimates than regular networks, in a single forward pass.
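As a rough illustration of what marginalising over depth can look like, the sketch below averages class probabilities computed at each candidate depth, weighted by a distribution over depths. It is a minimal sketch under stated assumptions, not the paper's implementation: the function names, the per-depth logits, and the depth probabilities are all hypothetical, and the abstract does not say how the depth distribution or the per-depth predictions are actually computed.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def marginal_predictive(per_depth_logits, depth_probs):
    """Average class probabilities over depths, weighted by q(d).

    per_depth_logits[d] holds the (hypothetical) class logits obtained by
    applying the output layer after d residual blocks; depth_probs[d] is
    the (hypothetical) approximate posterior probability of depth d.
    """
    n_classes = len(per_depth_logits[0])
    mixed = [0.0] * n_classes
    for logits, q in zip(per_depth_logits, depth_probs):
        probs = softmax(logits)
        for k in range(n_classes):
            mixed[k] += q * probs[k]
    return mixed


# Two candidate depths, two classes (made-up numbers for illustration).
example_logits = [[0.0, 0.0], [math.log(3.0), 0.0]]
example_q = [0.5, 0.5]
example_prediction = marginal_predictive(example_logits, example_q)
```

Because every intermediate activation of a residual network is produced on the way to the deepest one, all per-depth predictions in such a scheme can come from one forward pass, which is consistent with the abstract's "single forward pass" claim.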

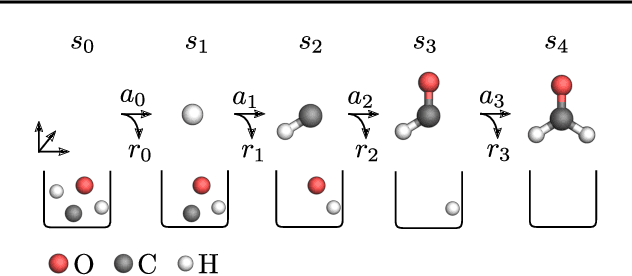

Reinforcement Learning for Molecular Design Guided by Quantum Mechanics

Feb 18, 2020

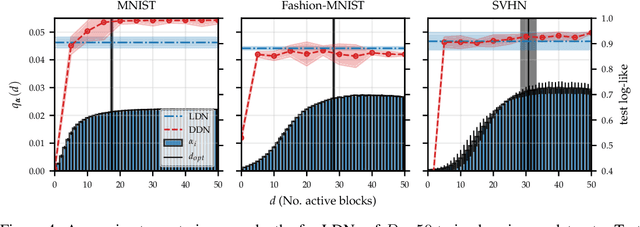

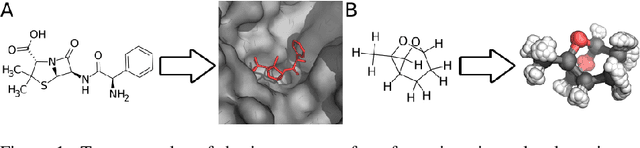

Abstract: Automating molecular design using deep reinforcement learning (RL) holds the promise of accelerating the discovery of new chemical compounds. A limitation of existing approaches is that they work with molecular graphs and thus ignore the location of atoms in space, which restricts them to 1) generating single organic molecules and 2) heuristic reward functions. To address this, we present a novel RL formulation for molecular design in Cartesian coordinates, thereby extending the class of molecules that can be built. Our reward function is directly based on fundamental physical properties such as the energy, which we approximate via fast quantum-chemical methods. To enable progress towards de-novo molecular design, we introduce MolGym, an RL environment comprising several challenging molecular design tasks along with baselines. In our experiments, we show that our agent can efficiently learn to solve these tasks from scratch by working in a translation- and rotation-invariant state-action space.
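To make the idea of a translation- and rotation-invariant description concrete, the sketch below checks that interatomic distances do not change when a set of Cartesian coordinates is rigidly moved. This is only an illustration of the invariance property, assuming a simple distance-based description; the abstract does not specify the representation the agent actually uses, and the function names and coordinates here are hypothetical.

```python
import math


def pairwise_distances(coords):
    """Interatomic distances in index order (i < j) for 3D coordinates."""
    dists = []
    for i in range(len(coords)):
        for j in range(i + 1, len(coords)):
            dists.append(math.dist(coords[i], coords[j]))
    return dists


def rotate_z_then_translate(coords, angle, shift):
    """Rotate all atoms about the z-axis by `angle`, then add `shift`."""
    c, s = math.cos(angle), math.sin(angle)
    return [
        (c * x - s * y + shift[0], s * x + c * y + shift[1], z + shift[2])
        for x, y, z in coords
    ]


# Three atoms in a bent arrangement (made-up coordinates for illustration).
atoms = [(0.0, 0.0, 0.0), (0.96, 0.0, 0.0), (-0.24, 0.93, 0.0)]
moved = rotate_z_then_translate(atoms, angle=1.1, shift=(2.0, -3.0, 0.5))

before = pairwise_distances(atoms)
after = pairwise_distances(moved)
max_change = max(abs(a - b) for a, b in zip(before, after))
```

Any quantity built only from such internal coordinates inherits the invariance, so a policy acting on it does not need to learn separately that a rotated copy of a molecule is the same molecule.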

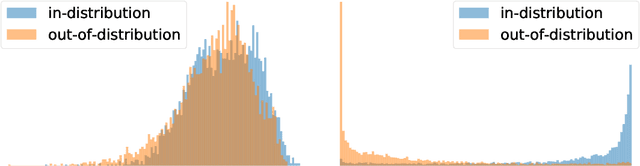

Bayesian Variational Autoencoders for Unsupervised Out-of-Distribution Detection

Dec 11, 2019

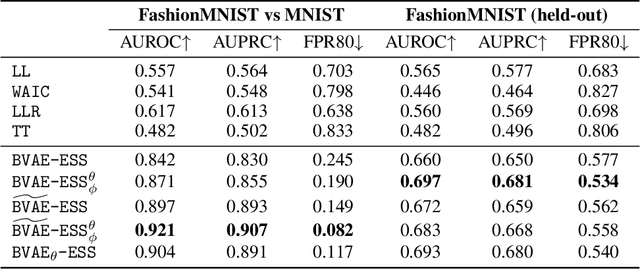

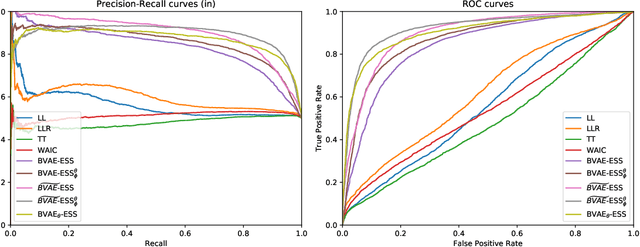

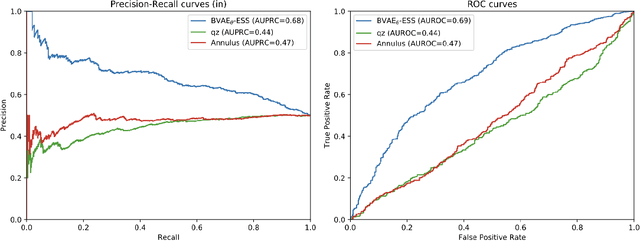

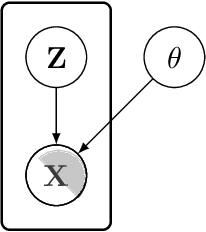

Abstract: Despite their successes, deep neural networks still make unreliable predictions when faced with test data drawn from a distribution different to that of the training data, constituting a major problem for AI safety. While this motivated a recent surge in interest in developing methods to detect such out-of-distribution (OoD) inputs, a robust solution is still lacking. We propose a new probabilistic, unsupervised approach to this problem based on a Bayesian variational autoencoder model, which estimates a full posterior distribution over the decoder parameters using stochastic gradient Markov chain Monte Carlo, instead of fitting a point estimate. We describe how information-theoretic measures based on this posterior can then be used to detect OoD data both in input space and in the model's latent space. The effectiveness of our approach is empirically demonstrated.

A Generative Model for Molecular Distance Geometry

Oct 03, 2019

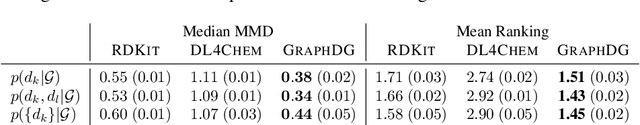

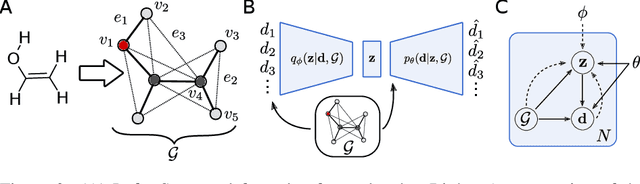

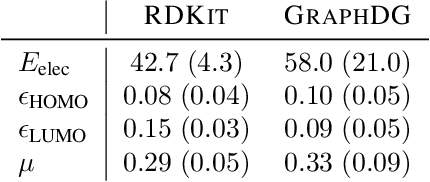

Abstract: Computing equilibrium states for many-body systems, such as molecules, is a long-standing challenge. In the absence of methods for generating statistically independent samples, great computational effort is invested in simulating these systems using, for example, Markov chain Monte Carlo. We present a probabilistic model that generates such samples for molecules from their graph representations. Our model learns a low-dimensional manifold that preserves the geometry of local atomic neighborhoods through a principled learning representation that is based on Euclidean distance geometry. We create a new dataset for molecular conformation generation with which we show experimentally that our generative model achieves state-of-the-art accuracy. Finally, we show how to use our model as a proposal distribution in an importance sampling scheme to compute molecular properties.
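The last sentence of the abstract refers to using a generative model as a proposal distribution for importance sampling. The sketch below shows generic self-normalised importance sampling on a one-dimensional toy problem. It is not the paper's scheme: the Gaussian proposal stands in for a learned generative model, the target is an unnormalised standard normal rather than a molecular Boltzmann distribution, and all function names are hypothetical.

```python
import math
import random


def self_normalised_is(f, log_target, sample_proposal, log_proposal, n, rng):
    """Estimate E_target[f(x)] from n proposal samples.

    Both log densities may be unnormalised, since constant factors cancel
    when the weights are normalised to sum to one.
    """
    xs = [sample_proposal(rng) for _ in range(n)]
    log_w = [log_target(x) - log_proposal(x) for x in xs]
    m = max(log_w)  # subtract the max for numerical stability
    w = [math.exp(lw - m) for lw in log_w]
    total = sum(w)
    return sum(wi * f(x) for wi, x in zip(w, xs)) / total


def log_target(x):
    """Unnormalised log density of a standard normal (toy 'energy' x^2 / 2)."""
    return -0.5 * x * x


def sample_proposal(rng):
    """Draw from a wider Gaussian that stands in for a generative model."""
    return rng.gauss(0.0, 2.0)


def log_proposal(x):
    """Unnormalised log density of the N(0, 2^2) proposal."""
    return -0.5 * (x / 2.0) ** 2


rng = random.Random(0)
# Estimates E[x^2] under the target; the exact value for this toy target is 1.
estimate = self_normalised_is(
    lambda x: x * x, log_target, sample_proposal, log_proposal, 20000, rng
)
```

The proposal here is deliberately wider than the target so that the weights stay bounded; a proposal that misses regions where the target has mass would give a poor estimate no matter how many samples are drawn.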

Icebreaker: Element-wise Active Information Acquisition with Bayesian Deep Latent Gaussian Model

Aug 14, 2019

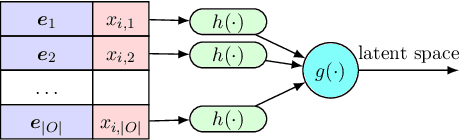

Abstract: In this paper we introduce the ice-start problem, i.e., the challenge of deploying machine learning models when little or no training data is initially available and acquiring each feature element of the data has a cost. This setting is representative of real-world machine learning applications. For instance, in the health-care domain, when training an AI system to predict patient metrics from lab tests, obtaining every single measurement comes at a high cost. Active learning, where only the label is associated with a cost, does not apply to such problems, because performing all possible lab tests to acquire a new training datum would be costly, as well as unnecessary due to redundancy. We propose Icebreaker, a principled framework to approach the ice-start problem. Icebreaker uses a fully Bayesian Deep Latent Gaussian Model (BELGAM) with a novel inference method. Our proposed method combines recent advances in amortized inference and stochastic gradient MCMC to enable fast and accurate posterior inference. By utilizing BELGAM's ability to fully quantify model uncertainty, we also propose two information acquisition functions for imputation and active prediction problems. We demonstrate that BELGAM performs significantly better than previous VAE (variational autoencoder) based models when the data set size is small, using both machine learning benchmarks and real-world recommender systems and health-care applications. Moreover, based on BELGAM, Icebreaker further improves performance and demonstrates the ability to use a minimal amount of training data to obtain the highest test-time performance.

Bayesian Batch Active Learning as Sparse Subset Approximation

Aug 06, 2019

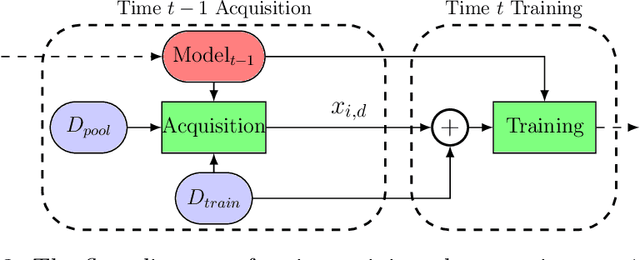

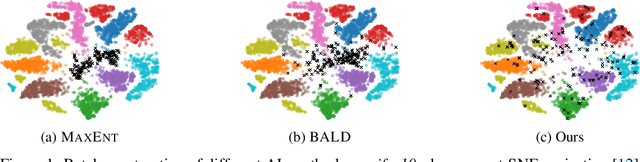

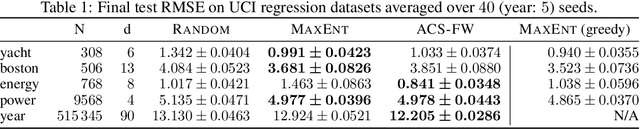

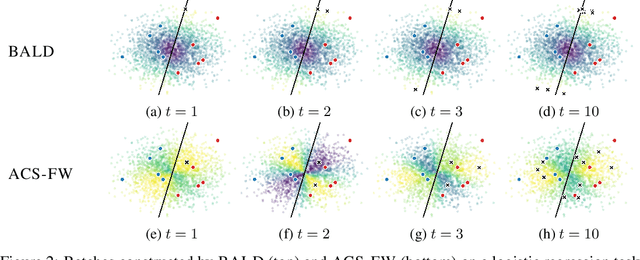

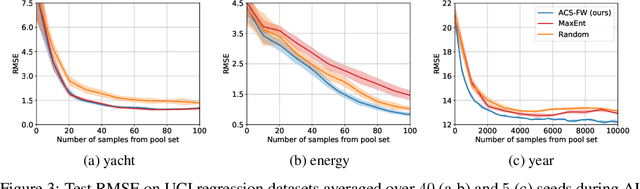

Abstract: Leveraging the wealth of unlabeled data produced in recent years provides great potential for improving supervised models. When the cost of acquiring labels is high, probabilistic active learning methods can be used to greedily select the most informative data points to be labeled. However, for many large-scale problems standard greedy procedures become computationally infeasible and suffer from negligible model change. In this paper, we introduce a novel Bayesian batch active learning approach that mitigates these issues. Our approach is motivated by approximating the complete data posterior of the model parameters. While naive batch construction methods result in correlated queries, our algorithm produces diverse batches that enable efficient active learning at scale. We derive interpretable closed-form solutions akin to existing active learning procedures for linear models, and generalize to arbitrary models using random projections. We demonstrate the benefits of our approach on several large-scale regression and classification tasks.

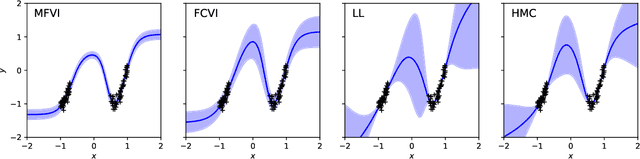

'In-Between' Uncertainty in Bayesian Neural Networks

Jun 27, 2019

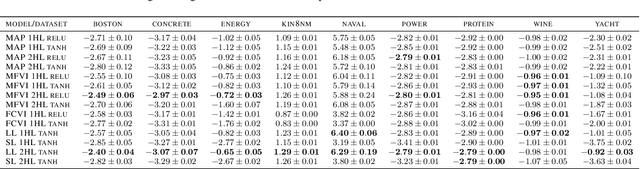

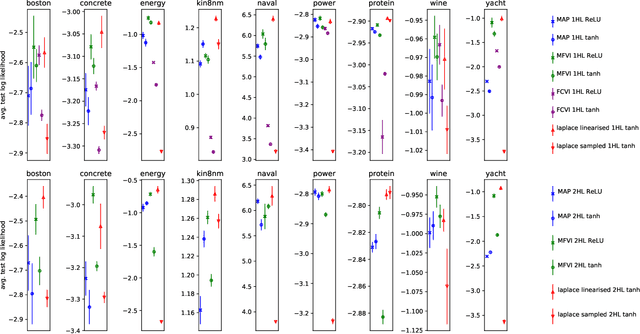

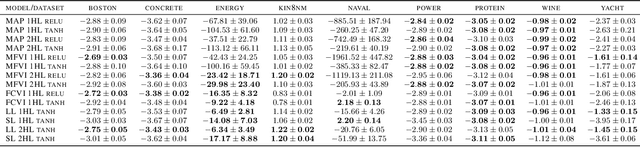

Abstract: We describe a limitation in the expressiveness of the predictive uncertainty estimate given by mean-field variational inference (MFVI), a popular approximate inference method for Bayesian neural networks. In particular, MFVI fails to give calibrated uncertainty estimates in between separated regions of observations. This can lead to catastrophically overconfident predictions when testing on out-of-distribution data. Avoiding such overconfidence is critical for active learning, Bayesian optimisation and out-of-distribution robustness. We instead find that a classical technique, the linearised Laplace approximation, can handle 'in-between' uncertainty much better for small network architectures.

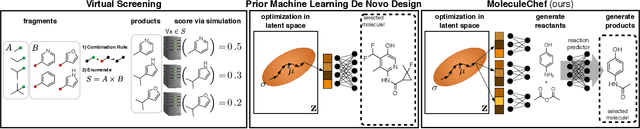

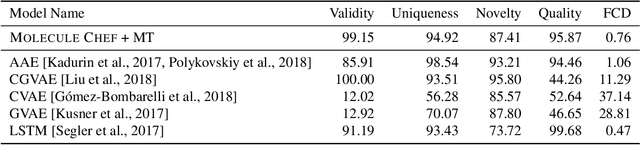

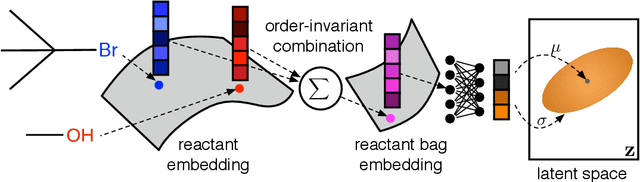

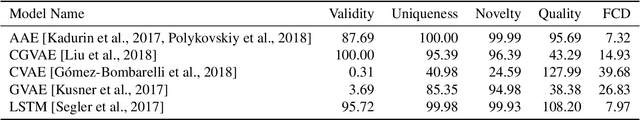

A Model to Search for Synthesizable Molecules

Jun 12, 2019

Abstract: Deep generative models are able to suggest new organic molecules by generating strings, trees, and graphs representing their structure. While such models allow one to generate molecules with desirable properties, they give no guarantees that the molecules can actually be synthesized in practice. We propose a new molecule generation model, mirroring a more realistic real-world process, where (a) reactants are selected, and (b) combined to form more complex molecules. More specifically, our generative model proposes a bag of initial reactants (selected from a pool of commercially available molecules) and uses a reaction model to predict how they react together to generate new molecules. We first show that the model can generate diverse, valid and unique molecules due to the useful inductive biases of modeling reactions. Furthermore, our model allows chemists to interrogate not only the properties of the generated molecules but also the feasibility of the synthesis routes. We conclude by using our model to solve retrosynthesis problems, predicting a set of reactants that can produce a target product.

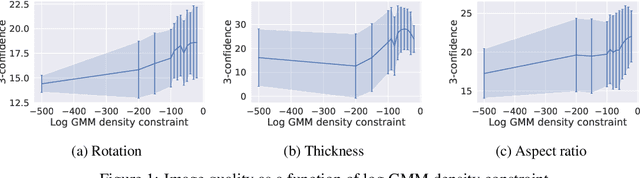

A COLD Approach to Generating Optimal Samples

May 23, 2019

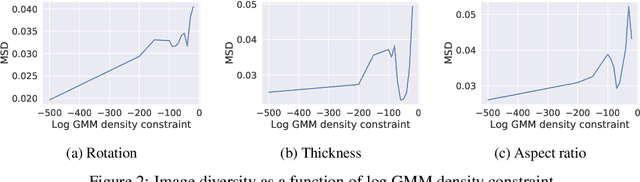

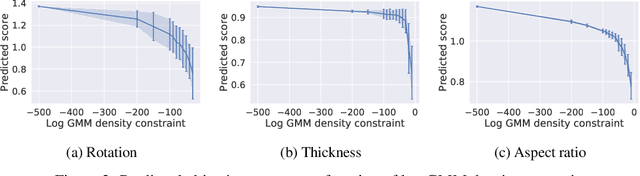

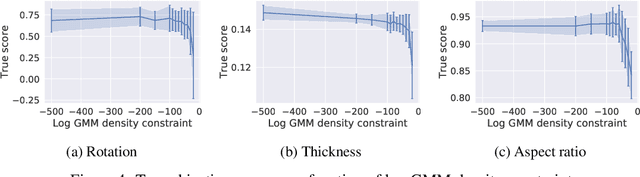

Abstract: Optimising discrete data for a desired characteristic using gradient-based methods involves projecting the data into a continuous latent space and carrying out optimisation in this space. Carrying out global optimisation is difficult as optimisers are likely to follow gradients into regions of the latent space that the model has not been exposed to during training; samples generated from these regions are likely to be too dissimilar to the training data to be useful. We propose Constrained Optimisation with Latent Distributions (COLD), a constrained global optimisation procedure to find samples with high values of a desired property that are similar to yet distinct from the training data. We find that on MNIST, our procedure yields optima for each of three different objectives, and that enforcing tighter constraints improves the quality and increases the diversity of the generated images. On the ChEMBL molecular dataset, our method generates a diverse set of new molecules with drug-likeness scores similar to those of the highest-scoring molecules in the training data. We also demonstrate a computationally efficient way to approximate the constraint when evaluating it exactly is computationally expensive.

Interpretable Outcome Prediction with Sparse Bayesian Neural Networks in Intensive Care

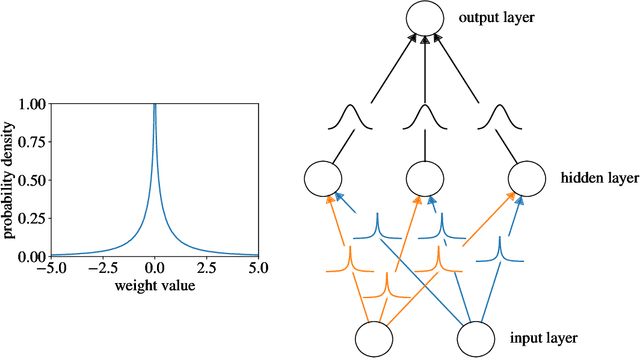

May 07, 2019

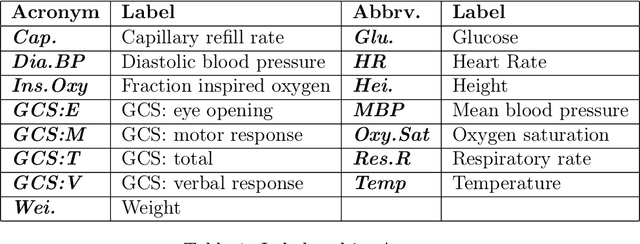

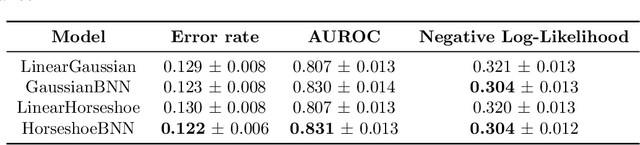

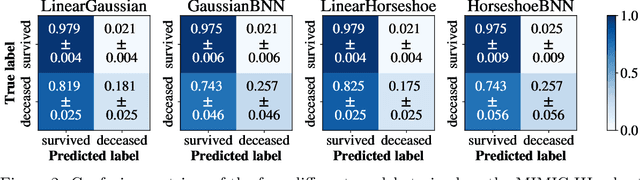

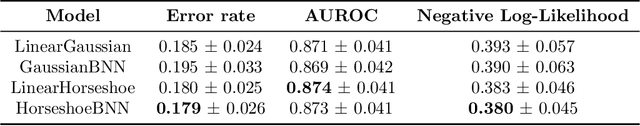

Abstract: Clinical decision making is challenging because of pathological complexity, as well as large amounts of heterogeneous data generated as part of routine clinical care. In recent years, machine learning tools have been developed to aid this process. Intensive care unit (ICU) admissions represent the most data-dense and time-critical patient care episodes. In this context, prediction models may help clinicians determine which patients are most at risk and prioritize care. However, flexible tools such as artificial neural networks (ANNs) suffer from a lack of interpretability, limiting their acceptability to clinicians. In this work, we propose a novel interpretable Bayesian neural network architecture which offers both the flexibility of ANNs and interpretability in terms of feature selection. In particular, we employ a sparsity-inducing prior distribution in a tied manner to learn which features are important for outcome prediction. We evaluate our approach on the task of mortality prediction using two real-world ICU cohorts. In collaboration with clinicians we found that, in addition to the predicted outcome results, our approach can provide novel insights into the importance of different clinical measurements. This suggests that our model can support medical experts in their decision-making process.
