Jiwon Kim

Match me if you can: Semantic Correspondence Learning with Unpaired Images

Nov 30, 2023

Abstract:Recent approaches for semantic correspondence have focused on obtaining high-quality correspondences using a complicated network, refining the ambiguous or noisy matching points. Despite their performance improvements, they remain constrained by the limited training pairs due to costly point-level annotations. This paper proposes a simple yet effective method that performs training with unlabeled pairs to complement both limited image pairs and sparse point pairs, requiring neither extra labeled keypoints nor trainable modules. We fundamentally extend the data quantity and variety by augmenting new unannotated pairs not primitively provided as training pairs in benchmarks. Using a simple teacher-student framework, we offer reliable pseudo correspondences to the student network via machine supervision. Finally, the performance of our network is steadily improved by the proposed iterative training, putting back the student as a teacher to generate refined labels and train a new student repeatedly. Our models outperform the milestone baselines, including state-of-the-art methods on semantic correspondence benchmarks.
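
The iterative teacher-student loop described in this abstract can be illustrated with a minimal sketch. It is not the paper's implementation: a toy 1-D nearest-centroid classifier stands in for the correspondence network, and the names `train_centroids`, `predict`, and `iterative_training` are hypothetical.

```python
from collections import defaultdict

def train_centroids(samples):
    """Fit a toy nearest-centroid model on (value, label) pairs."""
    groups = defaultdict(list)
    for value, label in samples:
        groups[label].append(value)
    return {label: sum(vals) / len(vals) for label, vals in groups.items()}

def predict(model, value):
    """Return the label whose centroid is closest to value."""
    return min(model, key=lambda label: abs(model[label] - value))

def iterative_training(labeled, unlabeled, rounds):
    """Train a teacher, then repeatedly replace it with a student
    trained on labeled data plus teacher-generated pseudo-labels."""
    teacher = train_centroids(labeled)
    for _ in range(rounds):
        # Teacher provides machine supervision for the unlabeled samples.
        pseudo = [(x, predict(teacher, x)) for x in unlabeled]
        student = train_centroids(labeled + pseudo)
        # The student is put back as the teacher for the next round.
        teacher = student
    return teacher

labeled = [(0.0, 0), (10.0, 1)]
unlabeled = [1.0, 2.0, 8.0, 9.0]
model = iterative_training(labeled, unlabeled, rounds=2)
```

In this toy setting the pseudo-labels stabilize after the first round; a real pipeline would presumably also filter unreliable pseudo-labels before training the student.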

Improving Lane Detection Generalization: A Novel Framework using HD Maps for Boosting Diversity

Nov 28, 2023

Abstract:Lane detection is a vital task for vehicles to navigate and localize their position on the road. To ensure reliable results, lane detection algorithms must have robust generalization performance in various road environments. However, despite the significant performance improvement of deep learning-based lane detection algorithms, their generalization performance in response to changes in road environments still falls short of expectations. In this paper, we present a novel framework for single-source domain generalization (SSDG) in lane detection. By decomposing data into lane structures and surroundings, we enhance diversity using High-Definition (HD) maps and generative models. Rather than expanding data volume, we strategically select a core subset of data, maximizing diversity and optimizing performance. Our extensive experiments demonstrate that our framework enhances the generalization performance of lane detection, comparable to the domain adaptation-based method.

Leveraging Future Relationship Reasoning for Vehicle Trajectory Prediction

May 24, 2023

Abstract:Understanding the interaction between multiple agents is crucial for realistic vehicle trajectory prediction. Existing methods have attempted to infer the interaction from the observed past trajectories of agents using pooling, attention, or graph-based methods, which rely on a deterministic approach. However, these methods can fail under complex road structures, as they cannot predict various interactions that may occur in the future. In this paper, we propose a novel approach that uses lane information to predict a stochastic future relationship among agents. To obtain a coarse future motion of agents, our method first predicts the probability of lane-level waypoint occupancy of vehicles. We then utilize the temporal probability of passing adjacent lanes for each agent pair, assuming that agents passing adjacent lanes will highly interact. We also model the interaction using a probabilistic distribution, which allows for multiple possible future interactions. The distribution is learned from the posterior distribution of interaction obtained from ground truth future trajectories. We validate our method on popular trajectory prediction datasets: nuScenes and Argoverse. The results show that the proposed method brings remarkable performance gain in prediction accuracy, and achieves state-of-the-art performance in long-term prediction benchmark dataset.

Trajectory Flow Map: Graph-based Approach to Analysing Temporal Evolution of Aggregated Traffic Flows in Large-scale Urban Networks

Dec 06, 2022

Abstract:This paper proposes a graph-based approach to representing spatio-temporal trajectory data that allows an effective visualization and characterization of city-wide traffic dynamics. With the advance of sensor, mobile, and Internet of Things (IoT) technologies, vehicle and passenger trajectories are being increasingly collected on a massive scale and are becoming a critical source of insight into traffic pattern and traveller behaviour. To leverage such trajectory data to better understand traffic dynamics in a large-scale urban network, this study develops a trajectory-based network traffic analysis method that converts individual trajectory data into a sequence of graphs that evolve over time (known as dynamic graphs or time-evolving graphs) and analyses network-wide traffic patterns in terms of a compact and informative graph-representation of aggregated traffic flows. First, we partition the entire network into a set of cells based on the spatial distribution of data points in individual trajectories, where the cells represent spatial regions between which aggregated traffic flows can be measured. Next, dynamic flows of moving objects are represented as a time-evolving graph, where regions are graph vertices and flows between them are treated as weighted directed edges. Given a fixed set of vertices, edges can be inserted or removed at every time step depending on the presence of traffic flows between two regions at a given time window. Once a dynamic graph is built, we apply graph mining algorithms to detect change-points in time, which represent time points where the graph exhibits significant changes in its overall structure and, thus, correspond to change-points in city-wide mobility pattern throughout the day (e.g., global transition points between peak and off-peak periods).
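
As a rough illustration of the time-evolving graph described above, the sketch below aggregates trajectories into per-window weighted directed edges. It assumes each trajectory is already a list of `(timestamp, cell_id)` observations, so the cell partitioning step is skipped. The function names are hypothetical, and `edge_change` is a simple L1 difference used as a stand-in, not the graph mining algorithm from the paper.

```python
from collections import Counter, defaultdict

def build_dynamic_graph(trajectories, window):
    """Return {window_index: Counter({(src_cell, dst_cell): flow})}."""
    graphs = defaultdict(Counter)
    for traj in trajectories:
        # Walk over consecutive observations of one moving object.
        for (t0, c0), (t1, c1) in zip(traj, traj[1:]):
            if c0 != c1:  # only movements between different regions form edges
                graphs[int(t1 // window)][(c0, c1)] += 1
    return dict(graphs)

def edge_change(g_prev, g_next):
    """L1 distance between the edge weights of two graph snapshots."""
    keys = set(g_prev) | set(g_next)
    return sum(abs(g_prev.get(k, 0) - g_next.get(k, 0)) for k in keys)

trajectories = [
    [(0, "A"), (5, "B"), (12, "C")],
    [(1, "A"), (6, "B")],
    [(11, "B"), (14, "C")],
]
graphs = build_dynamic_graph(trajectories, window=10)
change = edge_change(graphs[0], graphs[1])
```

A large `change` value between consecutive windows would mark a candidate change-point under this simplified measure.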

A deep learning framework to generate realistic population and mobility data

Nov 14, 2022

Abstract:Census and Household Travel Survey datasets are regularly collected from households and individuals and provide information on their daily travel behavior with demographic and economic characteristics. These datasets have important applications ranging from travel demand estimation to agent-based modeling. However, they often represent a limited sample of the population due to privacy concerns or are given aggregated. Synthetic data augmentation is a promising avenue in addressing these challenges. In this paper, we propose a framework to generate a synthetic population that includes both socioeconomic features (e.g., age, sex, industry) and trip chains (i.e., activity locations). Our model is tested and compared with other recently proposed models on multiple assessment metrics.

ConMatch: Semi-Supervised Learning with Confidence-Guided Consistency Regularization

Aug 18, 2022

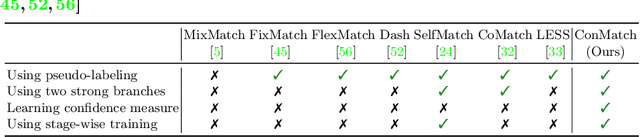

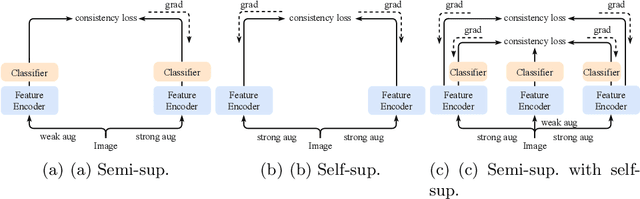

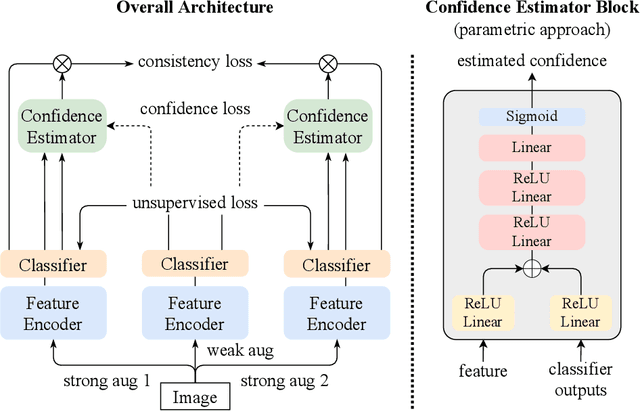

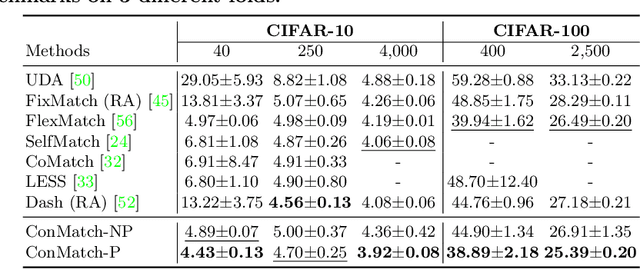

Abstract:We present ConMatch, a novel semi-supervised learning framework that applies consistency regularization between the model's predictions from two strongly-augmented views of an image, weighted by the confidence of the pseudo-label. While the latest semi-supervised learning methods use weakly- and strongly-augmented views of an image to define a directional consistency loss, how to define such a direction for the consistency regularization between two strongly-augmented views remains unexplored. To account for this, we present novel confidence measures for pseudo-labels from strongly-augmented views, using the weakly-augmented view as an anchor, in non-parametric and parametric approaches. In particular, in the parametric approach, we learn, for the first time, the confidence of the pseudo-label within the network, trained end-to-end with the backbone model. In addition, we present stage-wise training to boost the convergence of training. When incorporated into existing semi-supervised learners, ConMatch consistently boosts performance. We conduct experiments to demonstrate the effectiveness of ConMatch over the latest methods and provide extensive ablation studies. Code has been made publicly available at https://github.com/JiwonCocoder/ConMatch.

Estimating Link Flows in Road Networks with Synthetic Trajectory Data Generation: Reinforcement Learning-based Approaches

Jun 26, 2022

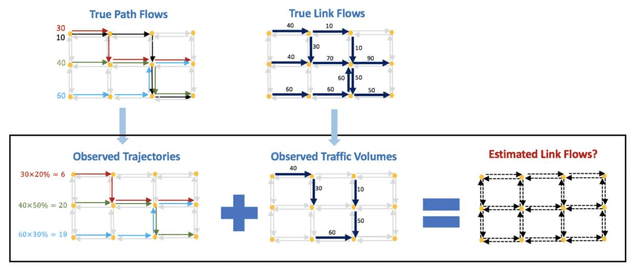

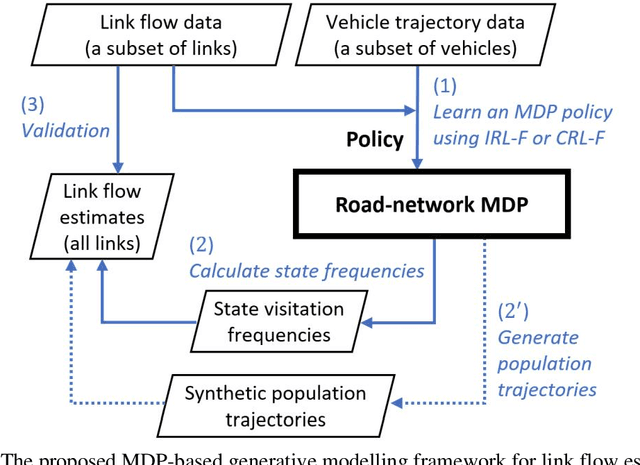

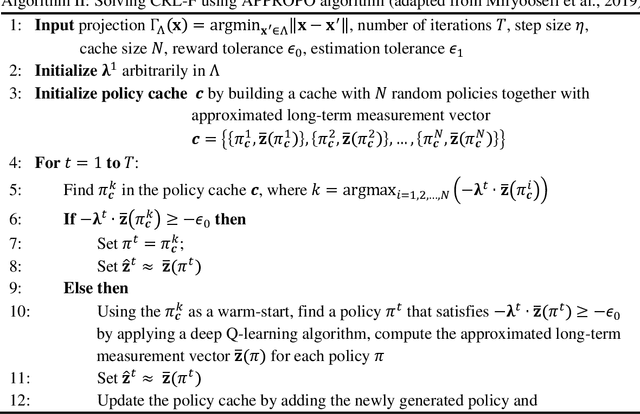

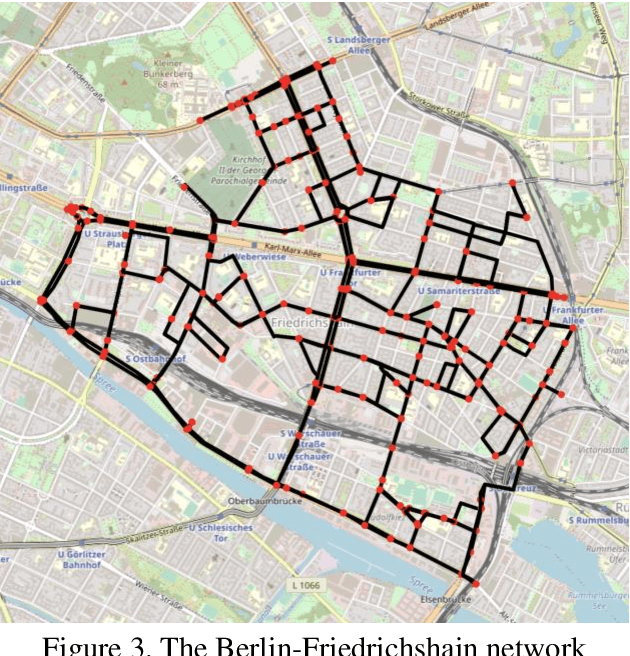

Abstract:This paper addresses the problem of estimating link flows in a road network by combining limited traffic volume and vehicle trajectory data. While traffic volume data from loop detectors have been the common data source for link flow estimation, the detectors only cover a subset of links. Vehicle trajectory data collected from vehicle tracking sensors are also incorporated these days. However, trajectory data are often sparse in that the observed trajectories only represent a small subset of the whole population, where the exact sampling rate is unknown and may vary over space and time. This study proposes a novel generative modelling framework, where we formulate the link-to-link movements of a vehicle as a sequential decision-making problem using the Markov Decision Process framework and train an agent to make sequential decisions to generate realistic synthetic vehicle trajectories. We use Reinforcement Learning (RL)-based methods to find the best behaviour of the agent, based on which synthetic population vehicle trajectories can be generated to estimate link flows across the whole network. To ensure the generated population vehicle trajectories are consistent with the observed traffic volume and trajectory data, two methods based on Inverse Reinforcement Learning and Constrained Reinforcement Learning are proposed. The proposed generative modelling framework solved by either of these RL-based methods is validated by solving the link flow estimation problem in a real road network. Additionally, we perform comprehensive experiments to compare the performance with two existing methods. The results show that the proposed framework has higher estimation accuracy and robustness under realistic scenarios where certain behavioural assumptions about drivers are not met or the network coverage and penetration rate of trajectory data are low.
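
The idea of generating synthetic trajectories as sequential link-to-link decisions and then counting link flows can be sketched as follows. This is only an illustration under strong assumptions: the policy is a hand-written table of transition probabilities rather than one learned with Inverse or Constrained Reinforcement Learning, and `rollout` and `estimate_link_flows` are hypothetical names.

```python
import random
from collections import Counter

def rollout(policy, start, terminal, rng, max_steps=50):
    """Generate one synthetic trajectory as a sequence of links."""
    link, path = start, [start]
    while link not in terminal and len(path) < max_steps:
        next_links, probs = zip(*policy[link].items())
        # The agent picks the next link according to its (stochastic) policy.
        link = rng.choices(next_links, weights=probs)[0]
        path.append(link)
    return path

def estimate_link_flows(policy, start, terminal, n_vehicles, seed=0):
    """Count how many synthetic vehicles traverse each link."""
    rng = random.Random(seed)
    flows = Counter()
    for _ in range(n_vehicles):
        flows.update(rollout(policy, start, terminal, rng))
    return flows

# Toy network: link "a" leads to "b", which splits evenly into "c" and "d".
policy = {"a": {"b": 1.0}, "b": {"c": 0.5, "d": 0.5}}
flows = estimate_link_flows(policy, start="a", terminal={"c", "d"}, n_vehicles=100)
```

In the setting of the abstract, the policy would additionally have to be fitted so that the resulting flows agree with the observed detector volumes and sampled trajectories.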

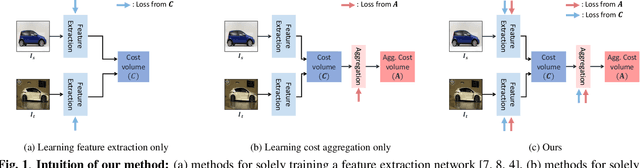

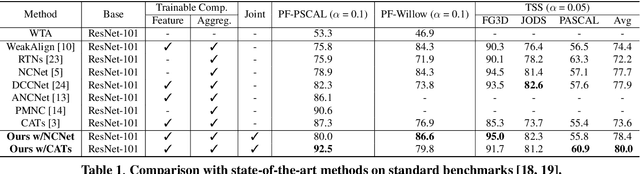

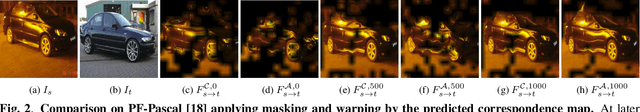

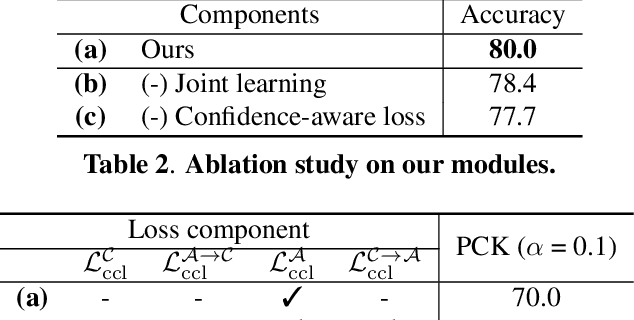

Joint Learning of Feature Extraction and Cost Aggregation for Semantic Correspondence

Apr 19, 2022

Abstract:Establishing dense correspondences across semantically similar images is one of the challenging tasks due to the significant intra-class variations and background clutters. To solve these problems, numerous methods have been proposed, focused on learning feature extractor or cost aggregation independently, which yields sub-optimal performance. In this paper, we propose a novel framework for jointly learning feature extraction and cost aggregation for semantic correspondence. By exploiting the pseudo labels from each module, the networks consisting of feature extraction and cost aggregation modules are simultaneously learned in a boosting fashion. Moreover, to ignore unreliable pseudo labels, we present a confidence-aware contrastive loss function for learning the networks in a weakly-supervised manner. We demonstrate our competitive results on standard benchmarks for semantic correspondence.

Semi-Supervised Learning of Semantic Correspondence with Pseudo-Labels

Apr 05, 2022

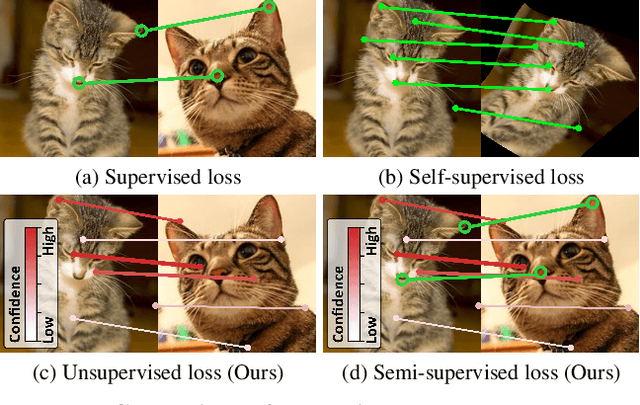

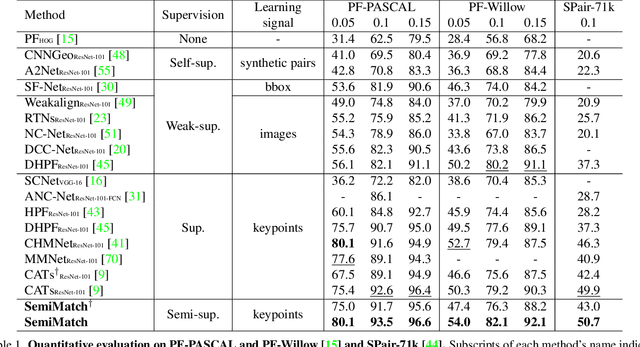

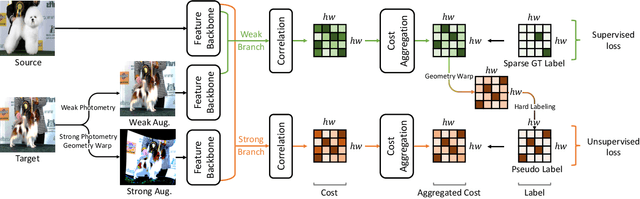

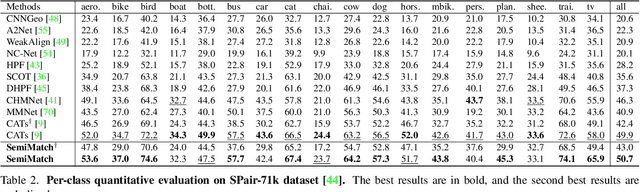

Abstract:Establishing dense correspondences across semantically similar images remains a challenging task due to significant intra-class variations and background clutter. Traditionally, supervised learning was used to train the models, which required a tremendous amount of manually labeled data. Some methods instead adopted self-supervised or weakly-supervised learning to reduce the reliance on labeled data, but with limited performance. In this paper, we present SemiMatch, a simple but effective solution for semantic correspondence that learns the networks in a semi-supervised manner, supplementing the few ground-truth correspondences with a large number of confident correspondences used as pseudo-labels. Specifically, our framework generates the pseudo-labels from the model's own prediction between the source and a weakly-augmented target, and uses those pseudo-labels to train the model again between the source and a strongly-augmented target, which improves the robustness of the model. We also present a novel confidence measure for pseudo-labels and a data augmentation tailored for semantic correspondence. In experiments, SemiMatch achieves state-of-the-art performance on various benchmarks, especially on PF-Willow by a large margin.

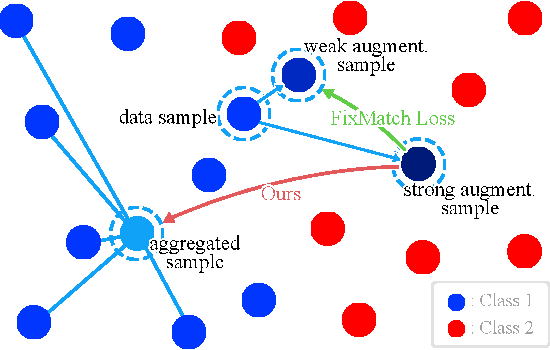

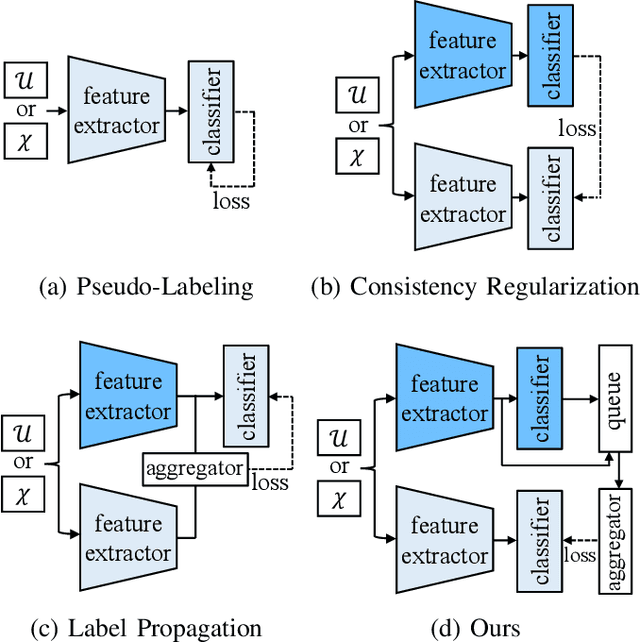

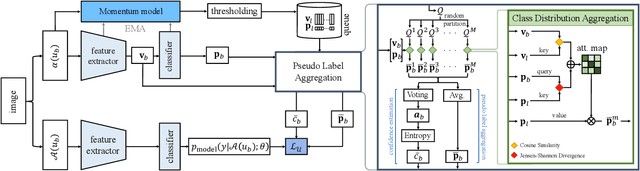

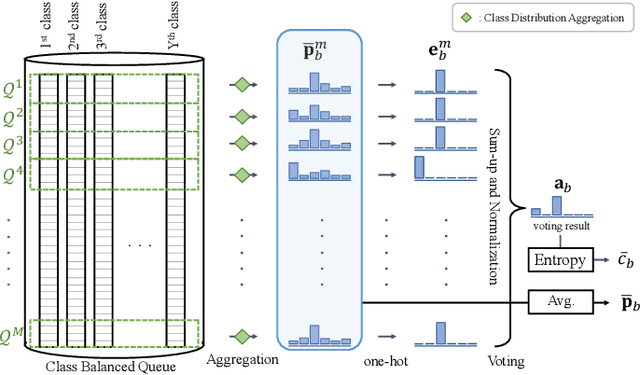

AggMatch: Aggregating Pseudo Labels for Semi-Supervised Learning

Jan 25, 2022

Abstract:Semi-supervised learning (SSL) has recently proven to be an effective paradigm for leveraging a huge amount of unlabeled data while mitigating the reliance on large labeled data. Conventional methods focused on extracting a pseudo label from individual unlabeled data sample and thus they mostly struggled to handle inaccurate or noisy pseudo labels, which degenerate performance. In this paper, we address this limitation with a novel SSL framework for aggregating pseudo labels, called AggMatch, which refines initial pseudo labels by using different confident instances. Specifically, we introduce an aggregation module for consistency regularization framework that aggregates the initial pseudo labels based on the similarity between the instances. To enlarge the aggregation candidates beyond the mini-batch, we present a class-balanced confidence-aware queue built with the momentum model, encouraging to provide more stable and consistent aggregation. We also propose a novel uncertainty-based confidence measure for the pseudo label by considering the consensus among multiple hypotheses with different subsets of the queue. We conduct experiments to demonstrate the effectiveness of AggMatch over the latest methods on standard benchmarks and provide extensive analyses.
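
A minimal sketch of similarity-based pseudo-label aggregation is given below. It assumes cosine similarity with a softmax temperature as the weighting scheme, which is a guess for illustration rather than the formulation used in AggMatch. The queue, the momentum model, and the uncertainty-based confidence measure are not modelled, and all names are hypothetical.

```python
import math

def cosine(u, v):
    """Cosine similarity between two feature vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def aggregate_pseudo_label(feature, queue_features, queue_labels, temperature=0.1):
    """Refine a pseudo label as a similarity-weighted average of queue labels."""
    sims = [cosine(feature, q) / temperature for q in queue_features]
    top = max(sims)
    # Softmax over similarities (shifted by the maximum for numerical stability).
    weights = [math.exp(s - top) for s in sims]
    total = sum(weights)
    num_classes = len(queue_labels[0])
    return [
        sum(w * label[c] for w, label in zip(weights, queue_labels)) / total
        for c in range(num_classes)
    ]

queue_features = [[1.0, 0.0], [0.0, 1.0]]
queue_labels = [[0.9, 0.1], [0.2, 0.8]]  # soft pseudo labels over two classes
refined = aggregate_pseudo_label([1.0, 0.0], queue_features, queue_labels)
```

Here the query feature matches the first queue entry, so the refined label stays close to that entry's pseudo label while remaining a valid probability distribution.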
