Jishen Zeng

MSPT: A Lightweight Face Image Quality Assessment Method with Multi-stage Progressive Training

Aug 11, 2025

Abstract: Accurately assessing the perceptual quality of face images is crucial, especially with the rapid progress in face restoration and generation. Traditional quality assessment methods often struggle with the unique characteristics of face images, limiting their generalizability. While learning-based approaches demonstrate superior performance due to their strong fitting capabilities, their high complexity typically incurs significant computational and storage costs, hindering practical deployment. To address this, we propose a lightweight face quality assessment network with Multi-Stage Progressive Training (MSPT). Our network employs a three-stage progressive training strategy that gradually introduces more diverse data samples and increases input image resolution. This novel approach enables lightweight networks to achieve high performance by effectively learning complex quality features while significantly mitigating catastrophic forgetting. Our MSPT achieved the second highest score on the VQualA 2025 face image quality assessment benchmark dataset, demonstrating that MSPT achieves comparable or better performance than state-of-the-art methods while maintaining efficient inference.

Toward Real Text Manipulation Detection: New Dataset and New Solution

Dec 12, 2023

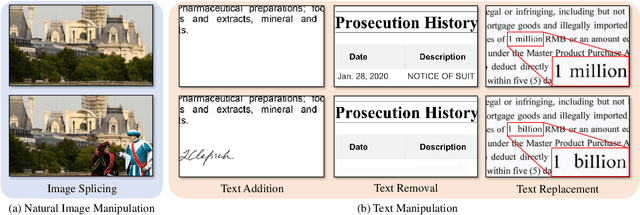

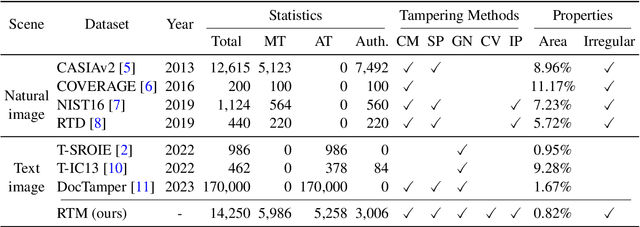

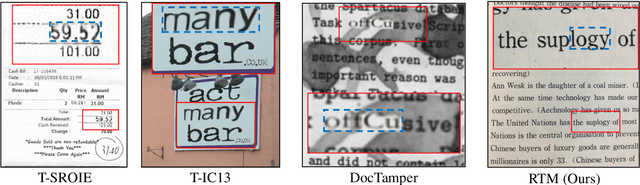

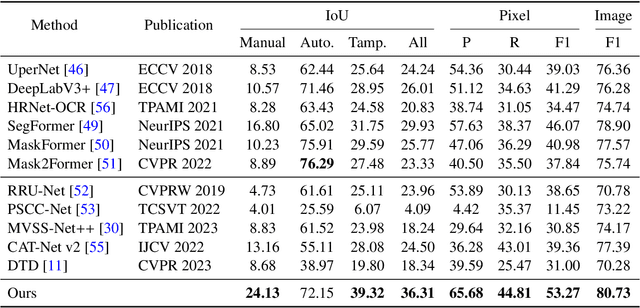

Abstract: With the surge in realistic text tampering, detecting fraudulent text in images has gained prominence for maintaining information security. However, the high costs associated with professional text manipulation and annotation limit the availability of real-world datasets, with most relying on synthetic tampering, which inadequately replicates real-world tampering attributes. To address this issue, we present the Real Text Manipulation (RTM) dataset, encompassing 14,250 text images, which include 5,986 manually and 5,258 automatically tampered images, created using a variety of techniques, alongside 3,006 unaltered text images for evaluating solution stability. Our evaluations indicate that existing methods falter in text forgery detection on the RTM dataset. We propose a robust baseline solution featuring a Consistency-aware Aggregation Hub and a Gated Cross Neighborhood-attention Fusion module for efficient multi-modal information fusion, supplemented by a Tampered-Authentic Contrastive Learning module during training, enriching feature representation distinction. This framework, extendable to other dual-stream architectures, demonstrated notable localization performance improvements of 7.33% and 6.38% on manual and overall manipulations, respectively. Our contributions aim to propel advancements in real-world text tampering detection. Code and dataset will be made available at https://github.com/DrLuo/RTM
