Jianrong Xu

HiGNN: Hierarchical Informative Graph Neural Networks for Molecular Property Prediction Equipped with Feature-Wise Attention

Aug 30, 2022

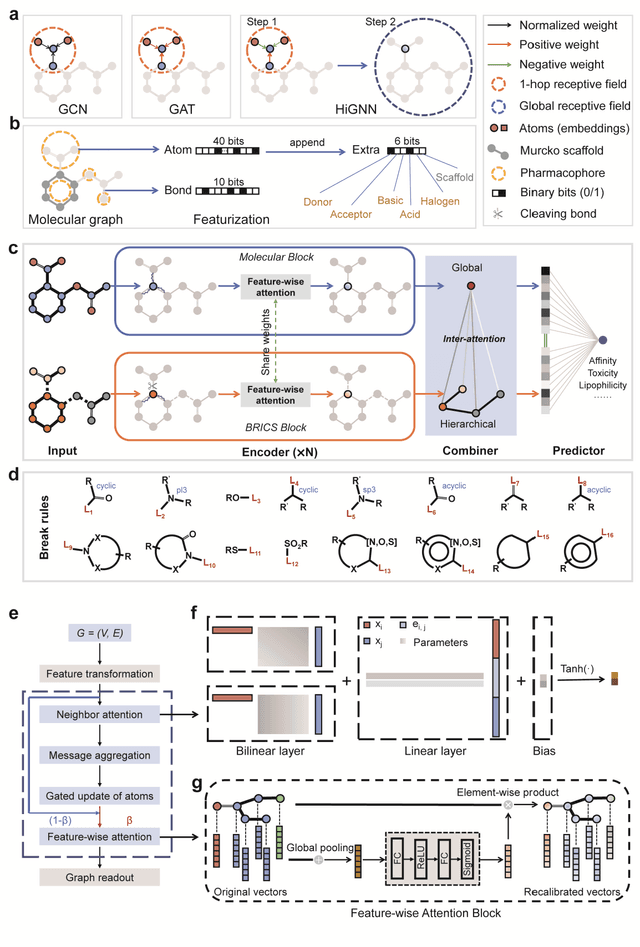

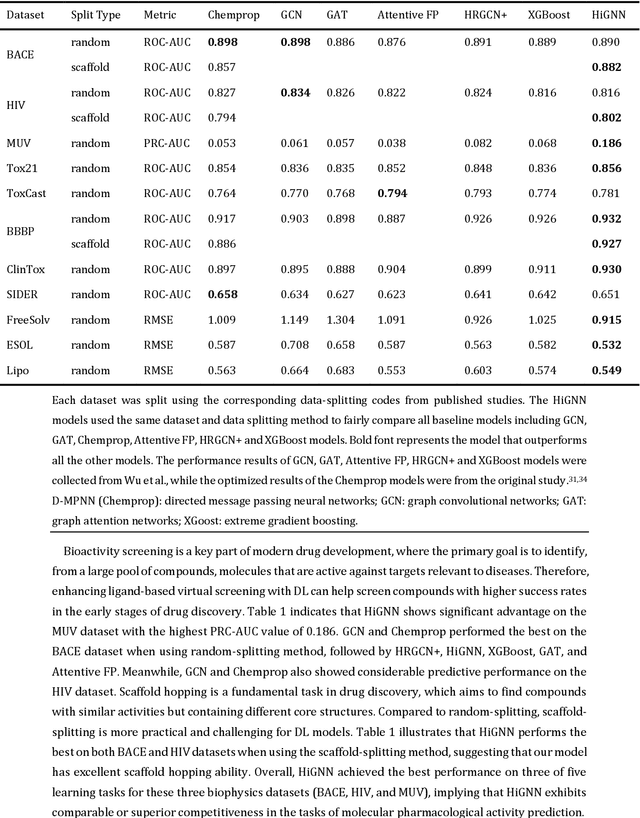

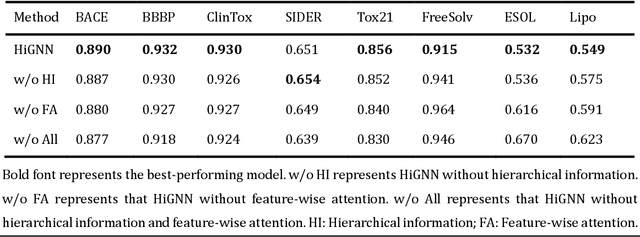

Abstract: Elucidating and accurately predicting the druggability and bioactivities of molecules plays a pivotal role in drug design and discovery and remains an open challenge. Recently, graph neural networks (GNNs) have made remarkable advancements in graph-based molecular property prediction. However, current graph-based deep learning methods neglect both the hierarchical information of molecules and the relationships between feature channels. In this study, we propose a hierarchical informative graph neural network framework (termed HiGNN) that predicts molecular properties through co-representation learning of molecular graphs and chemically synthesizable BRICS fragments. Furthermore, we design a plug-and-play feature-wise attention block, introduced for the first time in the HiGNN architecture, to adaptively recalibrate atomic features after the message passing phase. Extensive experiments demonstrate that HiGNN achieves state-of-the-art predictive performance on many challenging benchmark datasets associated with drug discovery. In addition, we devise a molecule-fragment similarity mechanism to comprehensively investigate the interpretability of the HiGNN model at the subgraph level. The results indicate that HiGNN, as a powerful deep learning tool, can help chemists and pharmacists identify the key components of molecules and design better molecules with desired properties or functions. The source code is publicly available at https://github.com/idruglab/hignn.
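The abstract says only that the feature-wise attention block "adaptively recalibrates atomic features after the message passing phase." One plausible reading is a squeeze-and-excitation style gate over feature channels. The sketch below assumes exactly that design: it averages each channel over all atoms, passes that summary through a small bottleneck MLP, and scales every channel by a sigmoid gate. The function names, the reduction ratio `r`, and the pure-Python layout are illustrative assumptions, not the HiGNN code.

```python
import math
from typing import List

Matrix = List[List[float]]


def linear(x: List[float], weight: Matrix, bias: List[float]) -> List[float]:
    """Dense layer y = W x + b, with weight shaped (out_dim, in_dim)."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weight, bias)]


def feature_wise_attention(atom_feats: Matrix, w1: Matrix, b1: List[float],
                           w2: Matrix, b2: List[float]) -> Matrix:
    """Rescale every feature channel of every atom by a learned gate in (0, 1).

    Assumed squeeze-and-excitation design (hypothetical, not HiGNN's code).
    atom_feats: (num_atoms, d) atom features produced by message passing.
    w1, b1: squeeze projection d -> d // r (hypothetical reduction ratio r).
    w2, b2: excitation projection d // r -> d.
    """
    num_atoms, d = len(atom_feats), len(atom_feats[0])
    # Squeeze: average each channel over all atoms of the molecule.
    summary = [sum(row[c] for row in atom_feats) / num_atoms for c in range(d)]
    # Excitation: bottleneck MLP (ReLU, then sigmoid) yields one gate per channel.
    hidden = [max(0.0, h) for h in linear(summary, w1, b1)]
    gates = [1.0 / (1.0 + math.exp(-z)) for z in linear(hidden, w2, b2)]
    # Recalibrate: multiply channel c of every atom by gates[c].
    return [[v * g for v, g in zip(row, gates)] for row in atom_feats]


if __name__ == "__main__":
    feats = [[1.0, 2.0, 3.0, 4.0], [0.5, -1.0, 2.0, 0.0]]  # 2 atoms, d = 4
    d, hidden_dim = 4, 2  # reduction ratio r = 2
    w1 = [[0.0] * d for _ in range(hidden_dim)]
    w2 = [[0.0] * hidden_dim for _ in range(d)]
    # With all-zero weights every gate is sigmoid(0) = 0.5, so features are halved.
    print(feature_wise_attention(feats, w1, [0.0] * hidden_dim, w2, [0.0] * d))
    # -> [[0.5, 1.0, 1.5, 2.0], [0.25, -0.5, 1.0, 0.0]]
```

Because the gate depends only on the channel, the block leaves the graph structure and the number of atoms untouched. That property is what would let such a block be inserted after any message-passing layer in a "plug-and-play" way.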

PFGDF: Pruning Filter via Gaussian Distribution Feature for Deep Neural Networks Acceleration

Jun 23, 2020

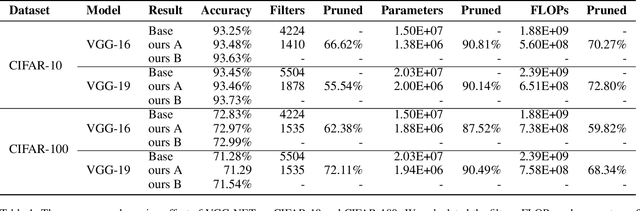

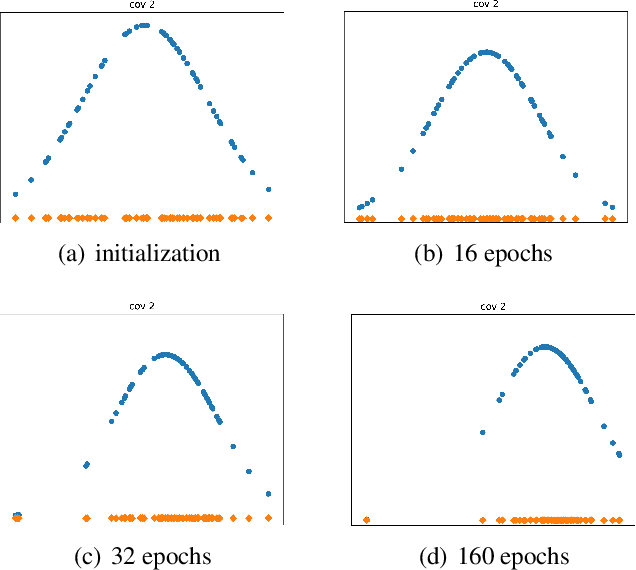

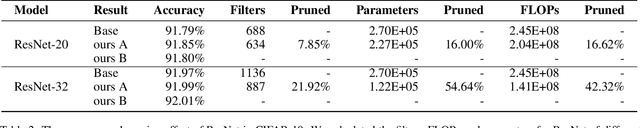

Abstract: The large amount of redundant information in convolutional neural networks slows their deployment on edge devices. To solve this issue, we propose a novel deep learning model compression and acceleration method based on data distribution characteristics, namely Pruning Filter via Gaussian Distribution Feature (PFGDF), which finds a smaller interval of a given convolutional layer that describes the original layer on the basis of its distribution characteristics. Compared with previous advanced methods, PFGDF compresses the model by removing filters that are insignificant in the distribution, regardless of the contribution and sensitivity information of each convolution filter. The pruning process is automated and always ensures that the compressed model can recover the performance of the original model. Notably, on CIFAR-10, PFGDF compressed the convolution filters of VGG-16 by 66.62%, reducing the parameters by more than 90% and the FLOPs by 70.27%. On ResNet-32, PFGDF reduced the convolution filters by 21.92%, the parameters were reduced to 54.64%, and the FLOPs reduction exceeded 42%.
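The abstract does not state which per-filter statistic PFGDF models as Gaussian or how wide the "smaller interval" is. The sketch below is therefore only one plausible reading, built on explicit assumptions. Each filter is scored by the mean of its weights, which is a hypothetical choice. A Gaussian with mean μ and standard deviation σ is fitted to the scores. Filters whose score lies inside [μ − kσ, μ + kσ] are kept as the compact description of the layer, and the others are marked for pruning. The width `k` is a hypothetical parameter. The sketch covers only the selection step, not the automated retraining that restores accuracy.

```python
import math
from typing import List, Tuple


def filter_scores(filters: List[List[float]]) -> List[float]:
    """Hypothetical per-filter statistic: mean of the filter's flattened weights."""
    return [sum(w) / len(w) for w in filters]


def gaussian_interval(scores: List[float], k: float) -> Tuple[float, float]:
    """Fit a Gaussian (population mean and std) and return (mu - k*sigma, mu + k*sigma)."""
    mu = sum(scores) / len(scores)
    sigma = math.sqrt(sum((s - mu) ** 2 for s in scores) / len(scores))
    return mu - k * sigma, mu + k * sigma


def select_filters(filters: List[List[float]], k: float = 1.0) -> Tuple[List[int], List[int]]:
    """Split filter indices into (kept, pruned) using the Gaussian interval.

    Assumption (not confirmed by the abstract): filters whose score falls
    inside the interval are kept; the rest are marked for pruning.
    """
    scores = filter_scores(filters)
    low, high = gaussian_interval(scores, k)
    kept = [i for i, s in enumerate(scores) if low <= s <= high]
    pruned = [i for i, s in enumerate(scores) if not low <= s <= high]
    return kept, pruned


if __name__ == "__main__":
    # Five toy filters, each flattened to two weights.
    layer = [[1.0, 1.0], [2.0, 0.0], [9.0, 9.0], [0.0, 2.0], [-7.0, -7.0]]
    kept, pruned = select_filters(layer, k=1.0)
    print(kept, pruned)  # -> [0, 1, 3] [2, 4]
    print(f"filters pruned: {len(pruned) / len(layer):.0%}")  # -> filters pruned: 40%
```

In the toy layer the scores are 1, 1, 9, 1 and −7. That gives μ = 1 and σ ≈ 5.06, so with k = 1 the two outlying filters fall outside the interval. A larger `k` widens the interval and prunes fewer filters, which is the knob such a method would tune against accuracy.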
