Jesse Hagenaars

On-Device Self-Supervised Learning of Low-Latency Monocular Depth from Only Events

Dec 09, 2024

Abstract:Event cameras provide low-latency perception for only milliwatts of power. This makes them highly suitable for resource-restricted, agile robots such as small flying drones. Self-supervised learning based on contrast maximization holds great potential for event-based robot vision, as it foregoes the need to high-frequency ground truth and allows for online learning in the robot's operational environment. However, online, onboard learning raises the major challenge of achieving sufficient computational efficiency for real-time learning, while maintaining competitive visual perception performance. In this work, we improve the time and memory efficiency of the contrast maximization learning pipeline. Benchmarking experiments show that the proposed pipeline achieves competitive results with the state of the art on the task of depth estimation from events. Furthermore, we demonstrate the usability of the learned depth for obstacle avoidance through real-world flight experiments. Finally, we compare the performance of different combinations of pre-training and fine-tuning of the depth estimation networks, showing that on-board domain adaptation is feasible given a few minutes of flight.

Direct learning of home vector direction for insect-inspired robot navigation

May 06, 2024Abstract:Insects have long been recognized for their ability to navigate and return home using visual cues from their nest's environment. However, the precise mechanism underlying this remarkable homing skill remains a subject of ongoing investigation. Drawing inspiration from the learning flights of honey bees and wasps, we propose a robot navigation method that directly learns the home vector direction from visual percepts during a learning flight in the vicinity of the nest. After learning, the robot will travel away from the nest, come back by means of odometry, and eliminate the resultant drift by inferring the home vector orientation from the currently experienced view. Using a compact convolutional neural network, we demonstrate successful learning in both simulated and real forest environments, as well as successful homing control of a simulated quadrotor. The average errors of the inferred home vectors in general stay well below the 90{\deg} required for successful homing, and below 24{\deg} if all images contain sufficient texture and illumination. Moreover, we show that the trajectory followed during the initial learning flight has a pronounced impact on the network's performance. A higher density of sample points in proximity to the nest results in a more consistent return. Code and data are available at https://mavlab.tudelft.nl/learning_to_home .

Fully neuromorphic vision and control for autonomous drone flight

Mar 15, 2023Abstract:Biological sensing and processing is asynchronous and sparse, leading to low-latency and energy-efficient perception and action. In robotics, neuromorphic hardware for event-based vision and spiking neural networks promises to exhibit similar characteristics. However, robotic implementations have been limited to basic tasks with low-dimensional sensory inputs and motor actions due to the restricted network size in current embedded neuromorphic processors and the difficulties of training spiking neural networks. Here, we present the first fully neuromorphic vision-to-control pipeline for controlling a freely flying drone. Specifically, we train a spiking neural network that accepts high-dimensional raw event-based camera data and outputs low-level control actions for performing autonomous vision-based flight. The vision part of the network, consisting of five layers and 28.8k neurons, maps incoming raw events to ego-motion estimates and is trained with self-supervised learning on real event data. The control part consists of a single decoding layer and is learned with an evolutionary algorithm in a drone simulator. Robotic experiments show a successful sim-to-real transfer of the fully learned neuromorphic pipeline. The drone can accurately follow different ego-motion setpoints, allowing for hovering, landing, and maneuvering sideways$\unicode{x2014}$even while yawing at the same time. The neuromorphic pipeline runs on board on Intel's Loihi neuromorphic processor with an execution frequency of 200 Hz, spending only 27 $\unicode{x00b5}$J per inference. These results illustrate the potential of neuromorphic sensing and processing for enabling smaller, more intelligent robots.

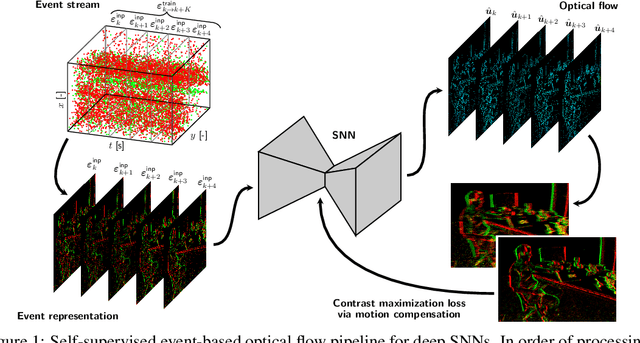

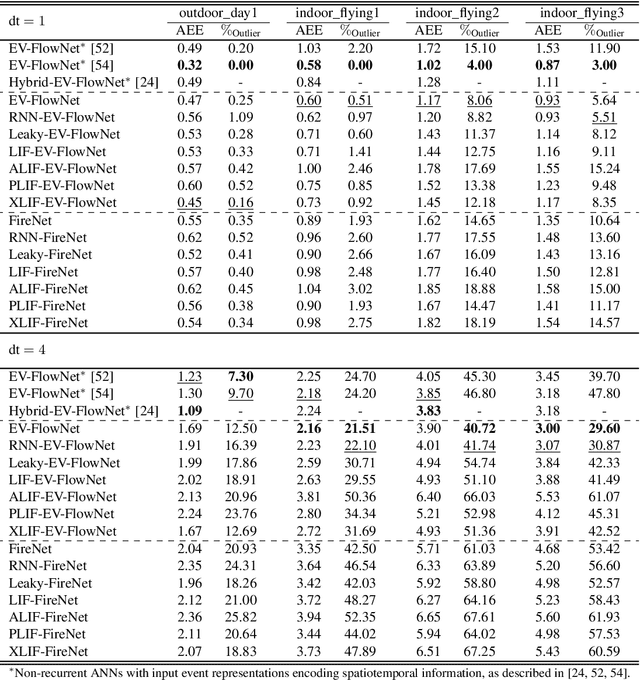

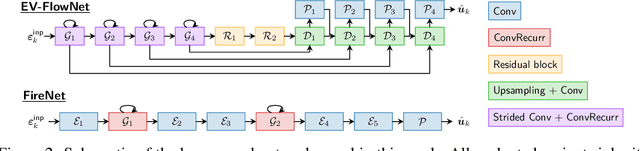

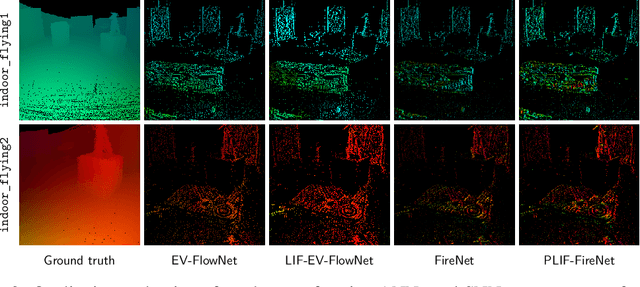

Self-Supervised Learning of Event-Based Optical Flow with Spiking Neural Networks

Jun 03, 2021

Abstract:Neuromorphic sensing and computing hold a promise for highly energy-efficient and high-bandwidth-sensor processing. A major challenge for neuromorphic computing is that learning algorithms for traditional artificial neural networks (ANNs) do not transfer directly to spiking neural networks (SNNs) due to the discrete spikes and more complex neuronal dynamics. As a consequence, SNNs have not yet been successfully applied to complex, large-scale tasks. In this article, we focus on the self-supervised learning problem of optical flow estimation from event-based camera inputs, and investigate the changes that are necessary to the state-of-the-art ANN training pipeline in order to successfully tackle it with SNNs. More specifically, we first modify the input event representation to encode a much smaller time slice with minimal explicit temporal information. Consequently, we make the network's neuronal dynamics and recurrent connections responsible for integrating information over time. Moreover, we reformulate the self-supervised loss function for event-based optical flow to improve its convexity. We perform experiments with various types of recurrent ANNs and SNNs using the proposed pipeline. Concerning SNNs, we investigate the effects of elements such as parameter initialization and optimization, surrogate gradient shape, and adaptive neuronal mechanisms. We find that initialization and surrogate gradient width play a crucial part in enabling learning with sparse inputs, while the inclusion of adaptivity and learnable neuronal parameters can improve performance. We show that the performance of the proposed ANNs and SNNs are on par with that of the current state-of-the-art ANNs trained in a self-supervised manner.

Neuromorphic control for optic-flow-based landings of MAVs using the Loihi processor

Nov 01, 2020

Abstract:Neuromorphic processors like Loihi offer a promising alternative to conventional computing modules for endowing constrained systems like micro air vehicles (MAVs) with robust, efficient and autonomous skills such as take-off and landing, obstacle avoidance, and pursuit. However, a major challenge for using such processors on robotic platforms is the reality gap between simulation and the real world. In this study, we present for the very first time a fully embedded application of the Loihi neuromorphic chip prototype in a flying robot. A spiking neural network (SNN) was evolved to compute the thrust command based on the divergence of the ventral optic flow field to perform autonomous landing. Evolution was performed in a Python-based simulator using the PySNN library. The resulting network architecture consists of only 35 neurons distributed among 3 layers. Quantitative analysis between simulation and Loihi reveals a root-mean-square error of the thrust setpoint as low as 0.005 g, along with a 99.8% matching of the spike sequences in the hidden layer, and 99.7% in the output layer. The proposed approach successfully bridges the reality gap, offering important insights for future neuromorphic applications in robotics. Supplementary material is available at https://mavlab.tudelft.nl/loihi/.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge