Jean-François Lalonde

ManiFest: Manifold Deformation for Few-shot Image Translation

Nov 29, 2021

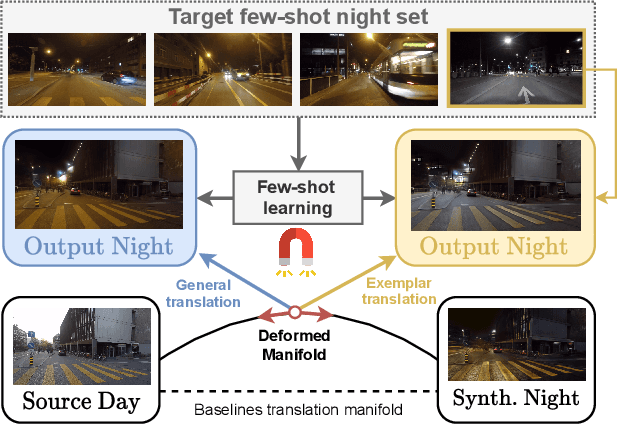

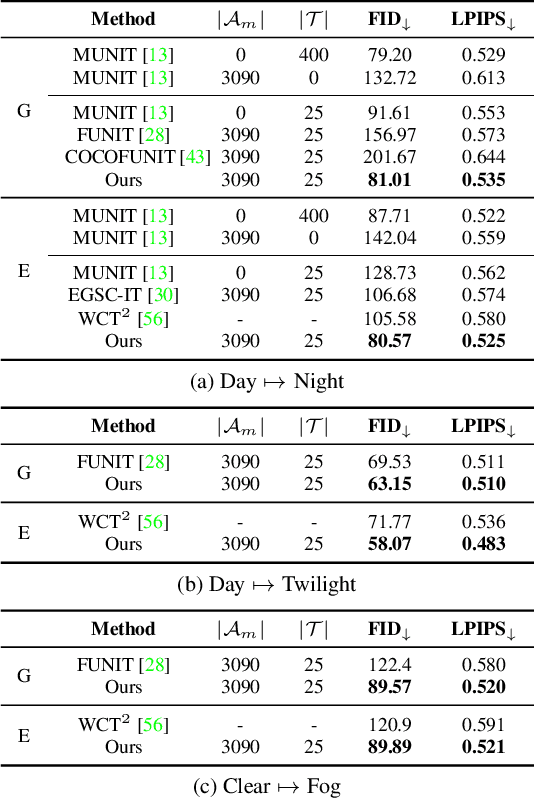

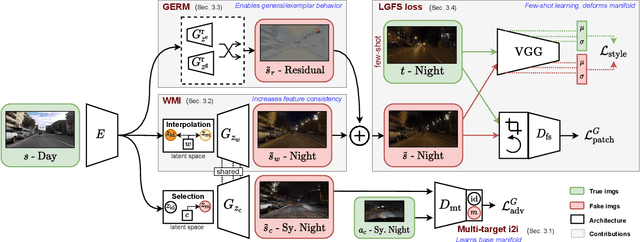

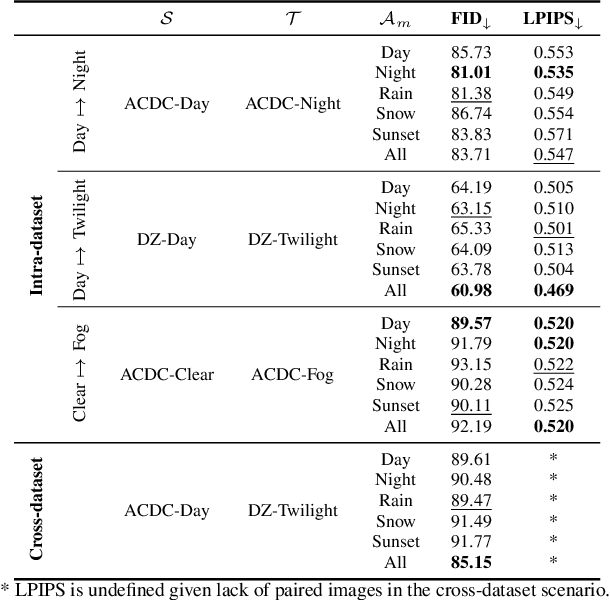

Abstract: Most image-to-image translation methods require a large number of training images, which restricts their applicability. We instead propose ManiFest: a framework for few-shot image translation that learns a context-aware representation of a target domain from a few images only. To enforce feature consistency, our framework learns a style manifold between source and proxy anchor domains (assumed to be composed of large numbers of images). The learned manifold is interpolated and deformed towards the few-shot target domain via patch-based adversarial and feature statistics alignment losses. All of these components are trained simultaneously during a single end-to-end loop. In addition to the general few-shot translation task, our approach can alternatively be conditioned on a single exemplar image to reproduce its specific style. Extensive experiments demonstrate the efficacy of ManiFest on multiple tasks, outperforming the state-of-the-art on all metrics and in both the general- and exemplar-based scenarios. Our code is available at https://github.com/cv-rits/Manifest.
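The abstract does not spell out the form of the feature statistics alignment loss. As a rough illustration only, the sketch below assumes the common choice of matching per-channel mean and standard deviation between generated and target feature maps. The function names `channel_stats` and `stats_alignment_loss` are hypothetical and are not taken from the ManiFest code.

```python
import math

def channel_stats(features):
    """Return a (mean, std) pair per channel.

    `features` is a list of channels, each a flat list of floats.
    """
    stats = []
    for channel in features:
        mean = sum(channel) / len(channel)
        var = sum((v - mean) ** 2 for v in channel) / len(channel)
        stats.append((mean, math.sqrt(var)))
    return stats

def stats_alignment_loss(generated, target):
    """Mean squared difference of per-channel means and stds (assumed form)."""
    gen_stats = channel_stats(generated)
    tgt_stats = channel_stats(target)
    loss = 0.0
    for (g_mean, g_std), (t_mean, t_std) in zip(gen_stats, tgt_stats):
        loss += (g_mean - t_mean) ** 2 + (g_std - t_std) ** 2
    return loss / len(gen_stats)

# Two channels with two activations each; the target is shifted by +1.
generated = [[0.0, 2.0], [1.0, 3.0]]
target = [[1.0, 3.0], [2.0, 4.0]]
loss = stats_alignment_loss(generated, target)
```

Because the loss compares only summary statistics, it does not need the generated and target images to be spatially aligned, which is one reason such losses are attractive when only a few target images exist.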

Image-to-Image Translation with Low Resolution Conditioning

Jul 23, 2021

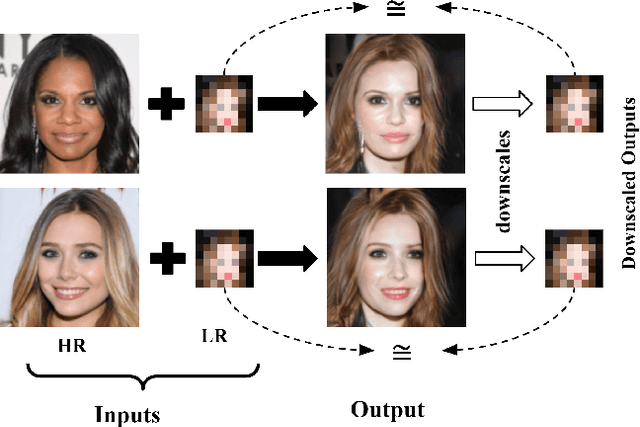

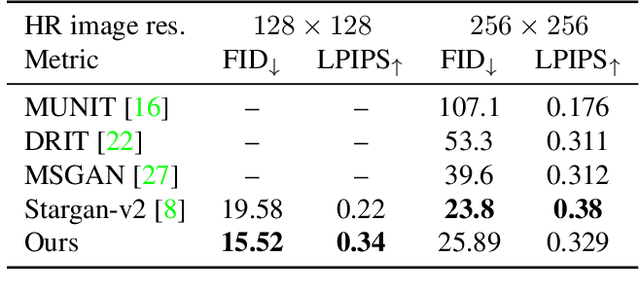

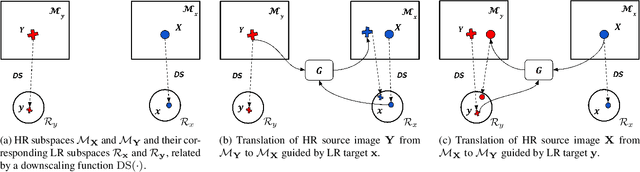

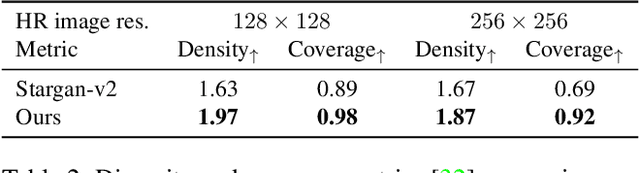

Abstract: Most image-to-image translation methods focus on learning mappings across domains with the assumption that images share content (e.g., pose) but have their own domain-specific information known as style. When conditioned on a target image, such methods aim to extract the style of the target and combine it with the content of the source image. In this work, we consider the scenario where the target image has a very low resolution. More specifically, our approach aims at transferring fine details from a high resolution (HR) source image to fit a coarse, low resolution (LR) image representation of the target. We therefore generate HR images that share features from both HR and LR inputs. This differs from previous methods that focus on translating a given image style into a target content: our translation approach can simultaneously imitate the style and merge the structural information of the LR target. Our approach relies on training the generative model to produce HR target images that both 1) share distinctive information of the associated source image and 2) correctly match the LR target image when downscaled. We validate our method on the CelebA-HQ and AFHQ datasets by demonstrating improvements in terms of visual quality, diversity and coverage. Qualitative and quantitative results show that when dealing with intra-domain image translation, our method generates more realistic samples compared to state-of-the-art methods such as Stargan-v2.
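The second training requirement above (the generated HR image should match the LR target once downscaled) can be sketched as a simple consistency check. This is a minimal illustration, assuming average pooling as the downscaling operator and a mean absolute error as the penalty. Both choices, and the names `downscale` and `lr_consistency_loss`, are assumptions rather than details given in the abstract.

```python
def downscale(image, factor):
    """Average-pool a 2D grayscale image (list of rows) by an integer factor.

    Assumes the height and width are divisible by `factor`.
    """
    height, width = len(image), len(image[0])
    out = []
    for i in range(0, height, factor):
        row = []
        for j in range(0, width, factor):
            block = [image[y][x]
                     for y in range(i, i + factor)
                     for x in range(j, j + factor)]
            row.append(sum(block) / len(block))
        out.append(row)
    return out

def lr_consistency_loss(hr_generated, lr_target, factor):
    """Mean absolute difference between the downscaled output and the LR target."""
    lr_generated = downscale(hr_generated, factor)
    diffs = [abs(g - t)
             for g_row, t_row in zip(lr_generated, lr_target)
             for g, t in zip(g_row, t_row)]
    return sum(diffs) / len(diffs)

# A 2x2 "HR" image reduced to a single LR pixel.
hr = [[1.0, 3.0], [5.0, 7.0]]
matching_loss = lr_consistency_loss(hr, [[4.0]], factor=2)
mismatch_loss = lr_consistency_loss(hr, [[2.0]], factor=2)
```

Many different HR images pool down to the same LR image, so a constraint of this kind leaves the fine details free to come from the HR source.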

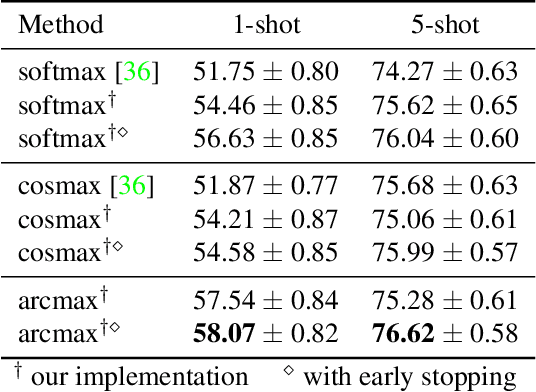

Persistent Mixture Model Networks for Few-Shot Image Classification

Nov 24, 2020

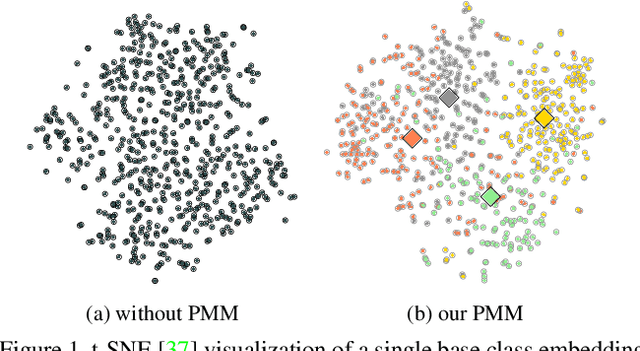

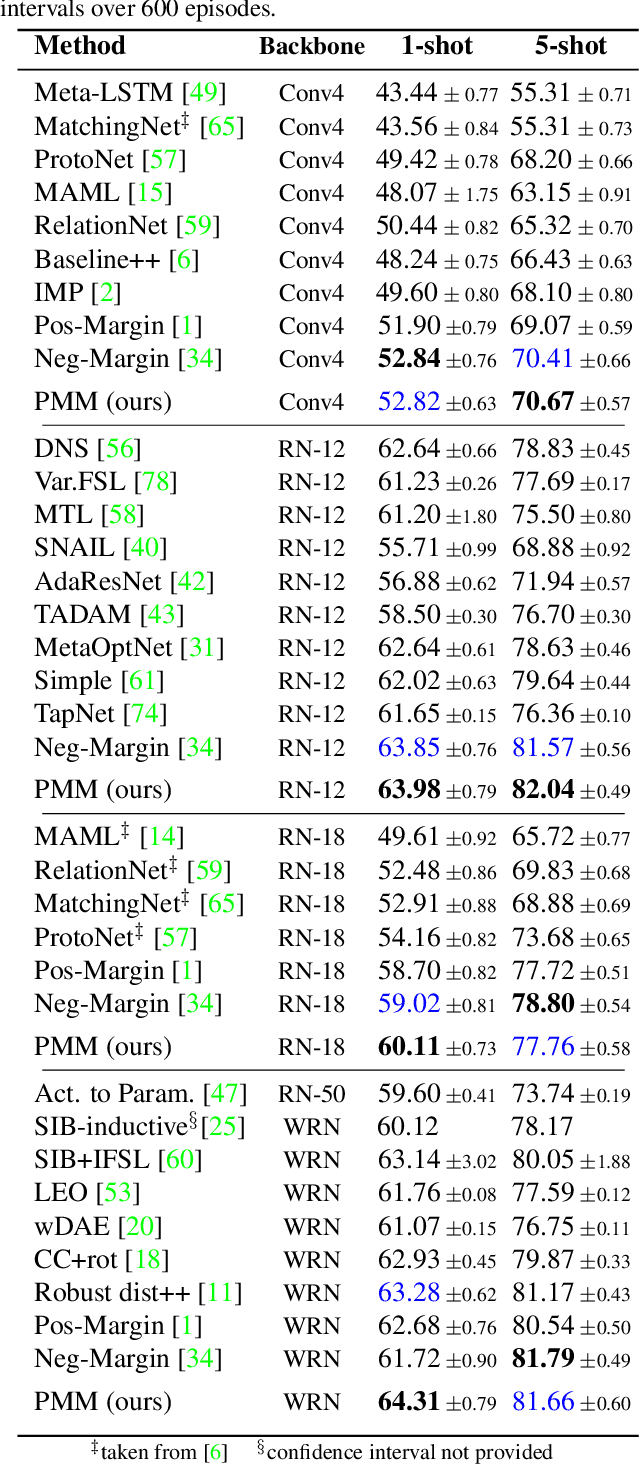

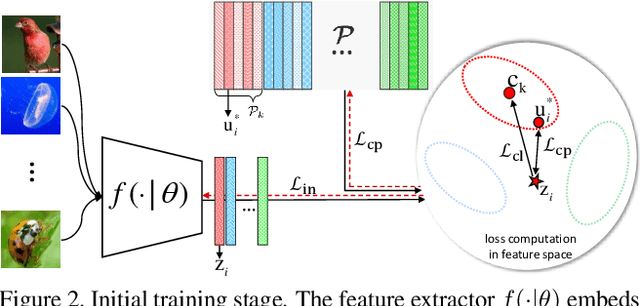

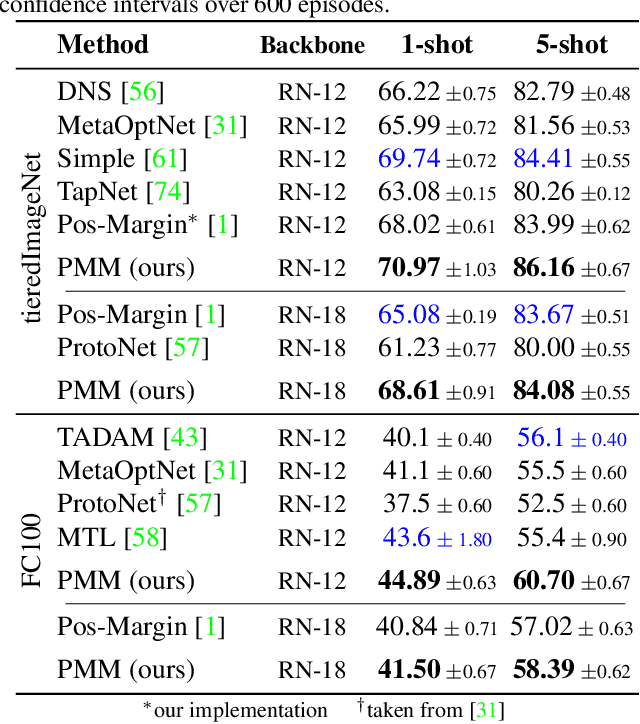

Abstract: We introduce Persistent Mixture Model (PMM) networks for representation learning in the few-shot image classification context. While previous methods represent classes with a single centroid or rely on post hoc clustering methods, our method learns a mixture model for each base class jointly with the data representation in an end-to-end manner. The PMM training algorithm is organized into two main stages: 1) initial training and 2) progressive following. First, the initial estimate for multi-component mixtures is learned for each class in the base domain using a combination of two loss functions (competitive and collaborative). The resulting network is then progressively refined through a leader-follower learning procedure, which uses the current estimate of the learner as a fixed "target" network. This target network is used to make a consistent assignment of instances to mixture components, in order to increase performance while stabilizing the training. The effectiveness of our joint representation/mixture learning approach is demonstrated with extensive experiments on four standard datasets and four backbones. In particular, we demonstrate that when we combine our robust representation with recent alignment- and margin-based approaches, we achieve new state-of-the-art results in the inductive setting, with an absolute accuracy for 5-shot classification of 82.45% on miniImageNet, 88.20% on tieredImageNet, and 60.70% on FC100, all using the ResNet-12 backbone.
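The assignment step in the progressive following stage can be pictured as follows. This is only a sketch: it assumes each instance is assigned to its nearest mixture component by squared Euclidean distance, computed on embeddings from the fixed target network. The abstract does not state the similarity measure, and `assign_components` is a hypothetical name.

```python
def assign_components(target_embeddings, components):
    """Assign each embedding to the index of its nearest mixture component.

    `target_embeddings` are assumed to come from the fixed target network,
    so the assignments stay stable while the learner is being updated.
    """
    assignments = []
    for embedding in target_embeddings:
        distances = [sum((a - b) ** 2 for a, b in zip(embedding, component))
                     for component in components]
        assignments.append(distances.index(min(distances)))
    return assignments

# Two components for one class and three instances of that class.
components = [[0.0, 0.0], [10.0, 10.0]]
embeddings = [[1.0, 0.0], [9.0, 9.0], [4.0, 4.0]]
assignments = assign_components(embeddings, components)
```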

Deep SVBRDF Estimation on Real Materials

Oct 08, 2020

Abstract: Recent work has demonstrated that deep learning approaches can successfully be used to recover accurate estimates of the spatially-varying BRDF (SVBRDF) of a surface from as little as a single image. Closer inspection reveals, however, that most approaches in the literature are trained purely on synthetic data, which, while diverse and realistic, is often not representative of the richness of the real world. In this paper, we show that training such networks exclusively on synthetic data is insufficient to achieve adequate results when tested on real data. Our analysis leverages a new dataset of real materials obtained with a novel portable multi-light capture apparatus. Through an extensive series of experiments and with the use of a novel deep learning architecture, we explore two strategies for improving results on real data: finetuning, and a per-material optimization procedure. We show that adapting network weights to real data is of critical importance, resulting in an approach which significantly outperforms previous methods for SVBRDF estimation on real materials. Dataset and code are available at https://lvsn.github.io/real-svbrdf

Rain rendering for evaluating and improving robustness to bad weather

Sep 06, 2020

Abstract: Rain fills the atmosphere with water particles, which breaks the common assumption that light travels unaltered from the scene to the camera. While it is well-known that rain affects computer vision algorithms, quantifying its impact is difficult. In this context, we present a rain rendering pipeline that enables the systematic evaluation of common computer vision algorithms under controlled amounts of rain. We present three different ways to add synthetic rain to existing image datasets: completely physics-based; completely data-driven; and a combination of both. The physics-based rain augmentation combines a physical particle simulator and accurate rain photometric modeling. We validate our rendering methods with a user study, demonstrating our rain is judged as much as 73% more realistic than the state-of-the-art. Using our generated rain-augmented KITTI, Cityscapes, and nuScenes datasets, we conduct a thorough evaluation of object detection, semantic segmentation, and depth estimation algorithms and show that their performance decreases in degraded weather, on the order of 15% for object detection, 60% for semantic segmentation, and a 6-fold increase in depth estimation error. Finetuning on our augmented synthetic data results in improvements of 21% on object detection, 37% on semantic segmentation, and 8% on depth estimation.

RGB-D-E: Event Camera Calibration for Fast 6-DOF Object Tracking

Jun 09, 2020

Abstract: Augmented reality devices require multiple sensors to perform various tasks such as localization and tracking. Currently, popular cameras are mostly frame-based (e.g., RGB and depth), which impose a high data bandwidth and power usage. With the necessity for low-power and more responsive augmented reality systems, using solely frame-based sensors limits the various algorithms that need high-frequency data from the environment. As such, event-based sensors have become increasingly popular due to their low power, bandwidth and latency, as well as their very high-frequency data acquisition capabilities. In this paper, we propose, for the first time, to use an event-based camera to increase the speed of 3D object tracking in 6 degrees of freedom. This application requires handling very high object speed to convey compelling AR experiences. To this end, we propose a new system which combines a recent RGB-D sensor (Kinect Azure) with an event camera (DAVIS346). We develop a deep learning approach, which combines an existing RGB-D network along with a novel event-based network in a cascade fashion, and demonstrate that our approach significantly improves the robustness of a state-of-the-art frame-based 6-DOF object tracker using our RGB-D-E pipeline.

Input Dropout for Spatially Aligned Modalities

Feb 07, 2020

Abstract: Computer vision datasets containing multiple modalities such as color, depth, and thermal properties are now commonly accessible and useful for solving a wide array of challenging tasks. However, deploying multi-sensor heads is not possible in many scenarios. As such, many practical solutions tend to be based on simpler sensors, mostly for cost, simplicity and robustness considerations. In this work, we propose a training methodology to take advantage of these additional modalities available in datasets, even if they are not available at test time. By assuming that the modalities have a strong spatial correlation, we propose Input Dropout, a simple technique that consists of stochastically hiding one or many input modalities at training time, while using only the canonical (e.g., RGB) modalities at test time. We demonstrate that Input Dropout trivially combines with existing deep convolutional architectures, and improves their performance on a wide range of computer vision tasks such as dehazing, 6-DOF object tracking, pedestrian detection and object classification.
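A minimal sketch of the idea, under simplifying assumptions: here only the additional modalities are ever hidden, hiding means replacing the modality with zeros, and each additional modality is dropped independently with probability `drop_prob`. The abstract allows hiding "one or many" modalities and does not fix these details, so the function `input_dropout` and its parameters are illustrative only.

```python
import random

def input_dropout(sample, canonical="rgb", drop_prob=0.5, training=True, rng=random):
    """Return a copy of `sample` with additional modalities stochastically zeroed.

    `sample` maps a modality name to a flat list of values. At test time
    (training=False) every non-canonical modality is zeroed, which mimics
    a deployment where only the canonical sensor is available.
    """
    out = {}
    for name, values in sample.items():
        if name == canonical:
            out[name] = list(values)            # canonical input is always kept
        elif not training or rng.random() < drop_prob:
            out[name] = [0.0] * len(values)     # modality hidden
        else:
            out[name] = list(values)            # modality visible this step
    return out

sample = {"rgb": [0.2, 0.4, 0.6], "depth": [1.5, 2.0, 2.5]}
train_view = input_dropout(sample, training=True, rng=random.Random(0))
test_view = input_dropout(sample, training=False)
```

Because the network sees the zeroed input during training, its behavior at test time with the extra modality missing is something it has already been trained on.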

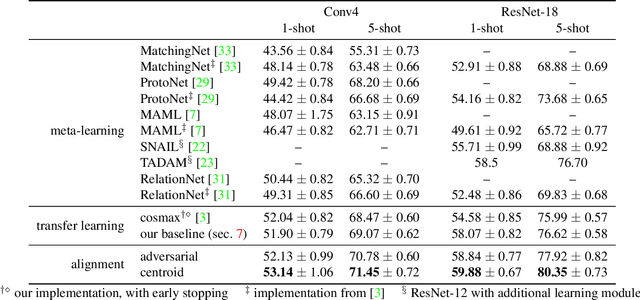

Associative Alignment for Few-shot Image Classification

Dec 11, 2019

Abstract: Few-shot image classification aims at training a model by using only a few (e.g., 5 or even 1) examples of novel classes. The established way of doing so is to rely on a larger set of base data for either pre-training a model, or for training in a meta-learning context. Unfortunately, these approaches often suffer from overfitting since the models can easily memorize all of the novel samples. This paper mitigates this issue and proposes to leverage part of the base data by aligning the novel training instances to the closely related ones in the base training set. This expands the size of the effective novel training set by adding extra related base instances to the few novel ones, thereby allowing the entire network to be trained. Doing so limits overfitting and simultaneously strengthens the generalization capabilities of the network. We propose two associative alignment strategies: 1) a conditional adversarial alignment loss based on the Wasserstein distance; and 2) a metric-learning loss for minimizing the distance between related base samples and the centroid of novel instances in the feature space. Experiments on two standard datasets demonstrate that combining our centroid-based alignment loss results in absolute accuracy improvements of 4.4%, 1.2%, and 6.0% in 5-shot learning over the state of the art for object recognition, fine-grained classification, and cross-domain adaptation, respectively.
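The second strategy (pulling related base samples toward the centroid of the novel instances) can be illustrated with a small sketch. It assumes a mean squared Euclidean distance in feature space. The exact metric-learning formulation is not given in the abstract, and the names `centroid` and `centroid_alignment_loss` are hypothetical.

```python
def centroid(features):
    """Component-wise mean of a list of equal-length feature vectors."""
    dim = len(features[0])
    return [sum(f[d] for f in features) / len(features) for d in range(dim)]

def centroid_alignment_loss(novel_features, related_base_features):
    """Mean squared distance from related base features to the novel centroid."""
    center = centroid(novel_features)
    total = 0.0
    for feature in related_base_features:
        total += sum((x - c) ** 2 for x, c in zip(feature, center))
    return total / len(related_base_features)

# Two novel instances and two related base instances in a 2D feature space.
novel = [[0.0, 0.0], [2.0, 2.0]]
related_base = [[1.0, 1.0], [1.0, 3.0]]
loss = centroid_alignment_loss(novel, related_base)
```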

Learning to Match Templates for Unseen Instance Detection

Nov 26, 2019

Abstract: Detecting objects in images is a quintessential problem in computer vision. Much of the focus in the literature has been on the problem of identifying the bounding box of a particular type of object in an image. Yet, in many contexts such as robotics and augmented reality, it is more important to find a specific object instance (a unique toy or a custom industrial part, for example) rather than a generic object class. Here, applications can require a rapid shift from one object instance to another, thus requiring fast turnaround which affords little-to-no training time. In this context, we propose a method for detecting objects that are unknown at training time. Our approach frames the problem as one of learned template matching, where a network is trained to match the template of an object in an image. The template is obtained by rendering a textured 3D model of the object. At test time, we provide a novel 3D object, and the network is able to successfully detect it, even under significant occlusion. Our method offers an improvement of almost 30 mAP over the previous template matching methods on the challenging Occluded Linemod (overall mAP of 50.7). With no access to the objects at training time, our method still yields detection results that are on par with existing ones that are allowed to train on the objects. By reviving this research direction in the context of more powerful, deep feature extractors, our work sets the stage for more development in the area of unseen object instance detection.

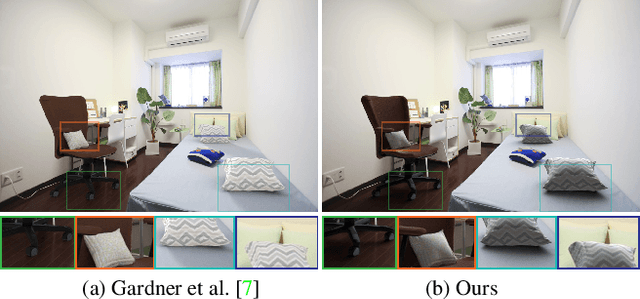

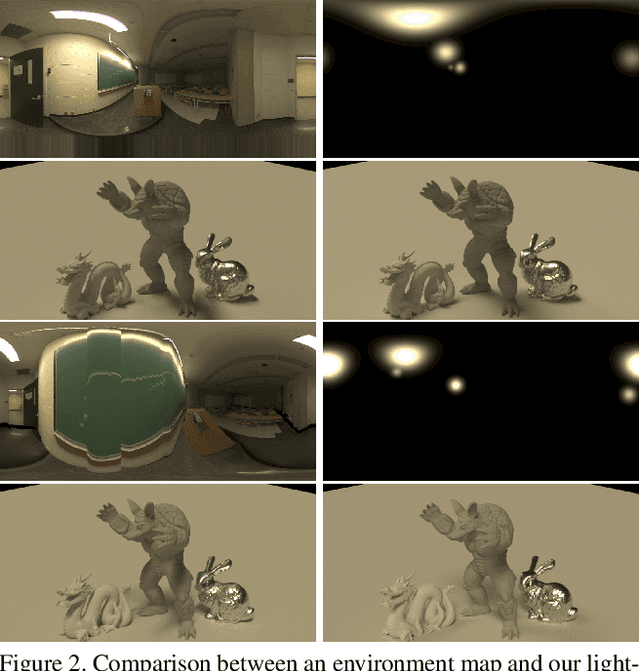

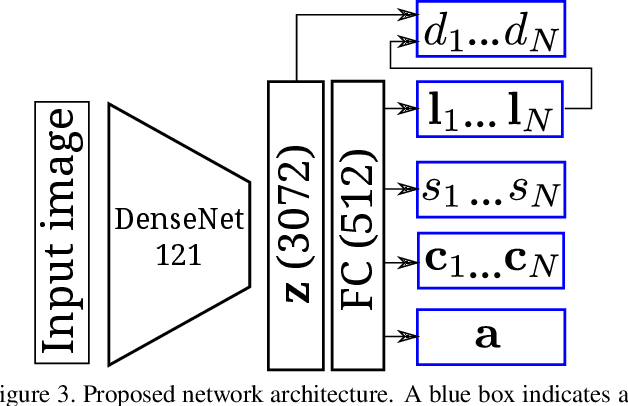

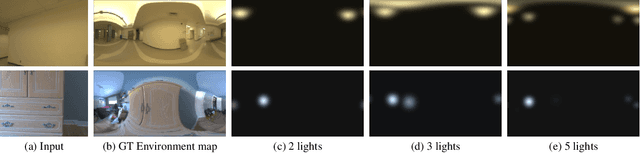

Deep Parametric Indoor Lighting Estimation

Oct 19, 2019

Abstract: We present a method to estimate lighting from a single image of an indoor scene. Previous work has used an environment map representation that does not account for the localized nature of indoor lighting. Instead, we represent lighting as a set of discrete 3D lights with geometric and photometric parameters. We train a deep neural network to regress these parameters from a single image, on a dataset of environment maps annotated with depth. We propose a differentiable layer to convert these parameters to an environment map to compute our loss; this bypasses the challenge of establishing correspondences between estimated and ground truth lights. We demonstrate, via quantitative and qualitative evaluations, that our representation and training scheme lead to more accurate results compared to previous work, while allowing for more realistic 3D object compositing with spatially-varying lighting.
