Ivan Svogor

Profiling and Improving the PyTorch Dataloader for high-latency Storage: A Technical Report

Nov 09, 2022

Abstract: A growing number of Machine Learning Frameworks have recently made Deep Learning accessible to a wider audience of engineers, scientists, and practitioners by allowing straightforward use of complex neural network architectures and algorithms. However, since deep learning is rapidly evolving, not only through theoretical advancements but also with respect to hardware and software engineering, ML frameworks often lose backward compatibility and introduce technical debt that can lead to bottlenecks and sub-optimal resource utilization. Moreover, in most cases the focus is not on deep learning engineering, but rather on new models and theoretical advancements. In this work, however, we focus on engineering, more specifically on the data loading pipeline in the PyTorch Framework. We designed a series of benchmarks that outline performance issues of certain steps in the data loading process. Our findings show that for classification tasks that involve loading many files, like images, the training wall-time can be significantly improved. With our new, modified ConcurrentDataloader we can improve GPU utilization and significantly reduce batch loading time, by up to 12x. This makes it possible to keep datasets in cloud-based, S3-like object storage while achieving training times comparable to those obtained with datasets stored on local drives.

ROS and Buzz: consensus-based behaviors for heterogeneous teams

Oct 24, 2017

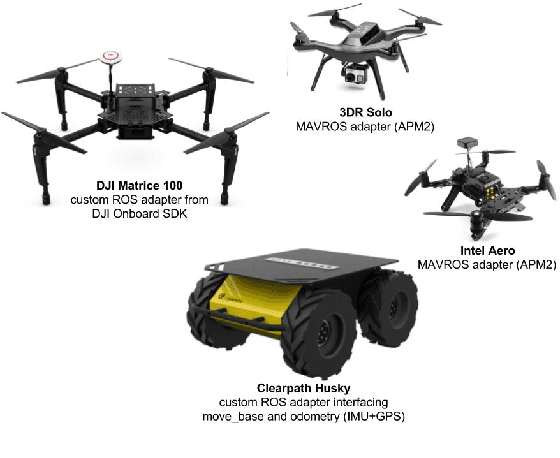

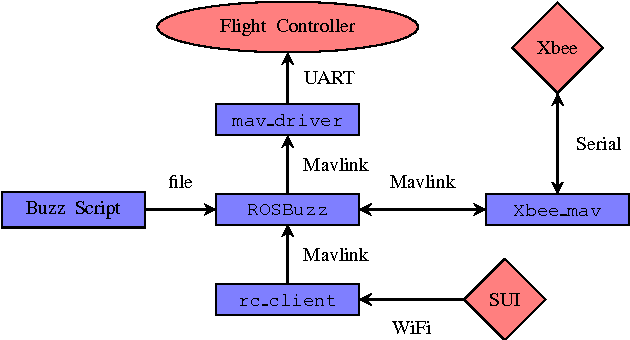

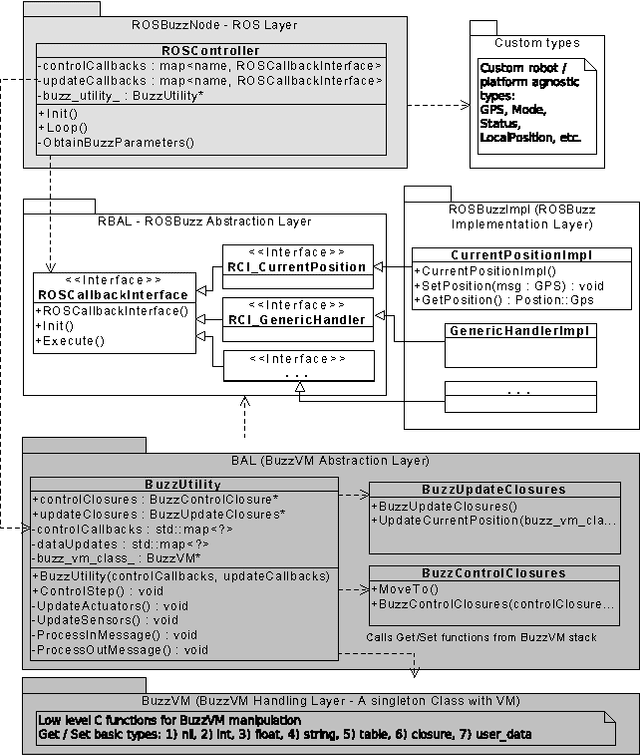

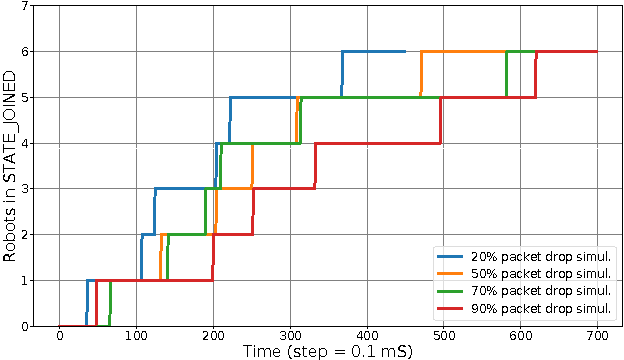

Abstract: This paper addresses the challenges encountered by developers when deploying a distributed decision-making behavior on heterogeneous robotic systems. Many applications benefit from the use of multiple robots, but their scalability and applicability are fundamentally limited if they rely on a central control station. Getting beyond the centralized approach can increase the complexity of the embedded intelligence and the sensitivity to the network topology, and can render deployment on physical robots tedious and error-prone. By integrating the swarm-oriented programming language Buzz with the standard environment of ROS, this work demonstrates that behaviors requiring distributed consensus can be successfully deployed in practice. From simulation to the field, the behavioral script stays untouched and applicable to heterogeneous robot teams. We present the software structure of our solution as well as the swarm-oriented paradigms required from Buzz to implement a robust generic consensus strategy. We show the applicability of our solution with simulations and experiments with heterogeneous ground-and-air robotic teams.
