Ilya Shenbin

Neural Click Models for Recommender Systems

Sep 30, 2024Abstract:We develop and evaluate neural architectures to model the user behavior in recommender systems (RS) inspired by click models for Web search but going beyond standard click models. Proposed architectures include recurrent networks, Transformer-based models that alleviate the quadratic complexity of self-attention, adversarial and hierarchical architectures. Our models outperform baselines on the ContentWise and RL4RS datasets and can be used in RS simulators to model user response for RS evaluation and pretraining.

$ abla^2$DFT: A Universal Quantum Chemistry Dataset of Drug-Like Molecules and a Benchmark for Neural Network Potentials

Jun 20, 2024Abstract:Methods of computational quantum chemistry provide accurate approximations of molecular properties crucial for computer-aided drug discovery and other areas of chemical science. However, high computational complexity limits the scalability of their applications. Neural network potentials (NNPs) are a promising alternative to quantum chemistry methods, but they require large and diverse datasets for training. This work presents a new dataset and benchmark called $\nabla^2$DFT that is based on the nablaDFT. It contains twice as much molecular structures, three times more conformations, new data types and tasks, and state-of-the-art models. The dataset includes energies, forces, 17 molecular properties, Hamiltonian and overlap matrices, and a wavefunction object. All calculations were performed at the DFT level ($\omega$B97X-D/def2-SVP) for each conformation. Moreover, $\nabla^2$DFT is the first dataset that contains relaxation trajectories for a substantial number of drug-like molecules. We also introduce a novel benchmark for evaluating NNPs in molecular property prediction, Hamiltonian prediction, and conformational optimization tasks. Finally, we propose an extendable framework for training NNPs and implement 10 models within it.

ImplicitSLIM and How it Improves Embedding-based Collaborative Filtering

May 31, 2024

Abstract:We present ImplicitSLIM, a novel unsupervised learning approach for sparse high-dimensional data, with applications to collaborative filtering. Sparse linear methods (SLIM) and their variations show outstanding performance, but they are memory-intensive and hard to scale. ImplicitSLIM improves embedding-based models by extracting embeddings from SLIM-like models in a computationally cheap and memory-efficient way, without explicit learning of heavy SLIM-like models. We show that ImplicitSLIM improves performance and speeds up convergence for both state of the art and classical collaborative filtering methods. The source code for ImplicitSLIM, related models, and applications is available at https://github.com/ilya-shenbin/ImplicitSLIM.

Machine Learning for SAT: Restricted Heuristics and New Graph Representations

Jul 18, 2023

Abstract:Boolean satisfiability (SAT) is a fundamental NP-complete problem with many applications, including automated planning and scheduling. To solve large instances, SAT solvers have to rely on heuristics, e.g., choosing a branching variable in DPLL and CDCL solvers. Such heuristics can be improved with machine learning (ML) models; they can reduce the number of steps but usually hinder the running time because useful models are relatively large and slow. We suggest the strategy of making a few initial steps with a trained ML model and then releasing control to classical heuristics; this simplifies cold start for SAT solving and can decrease both the number of steps and overall runtime, but requires a separate decision of when to release control to the solver. Moreover, we introduce a modification of Graph-Q-SAT tailored to SAT problems converted from other domains, e.g., open shop scheduling problems. We validate the feasibility of our approach with random and industrial SAT problems.

DetIE: Multilingual Open Information Extraction Inspired by Object Detection

Jun 24, 2022

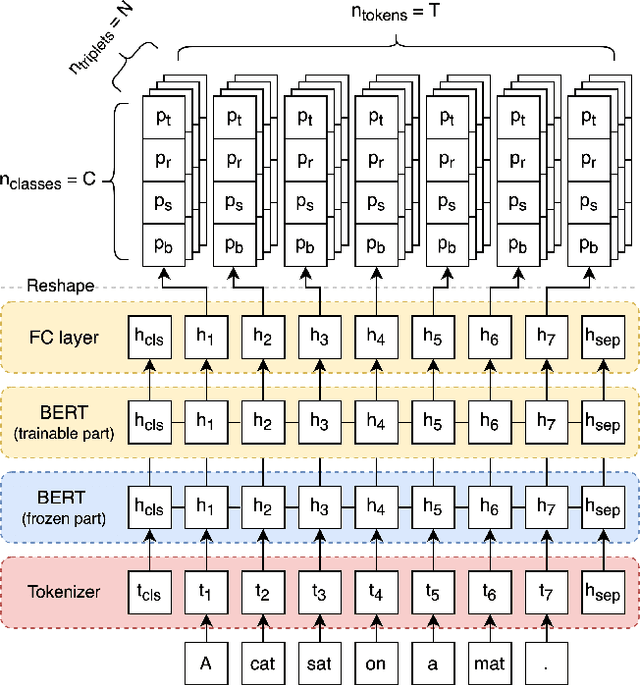

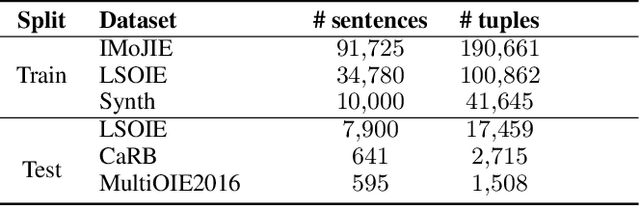

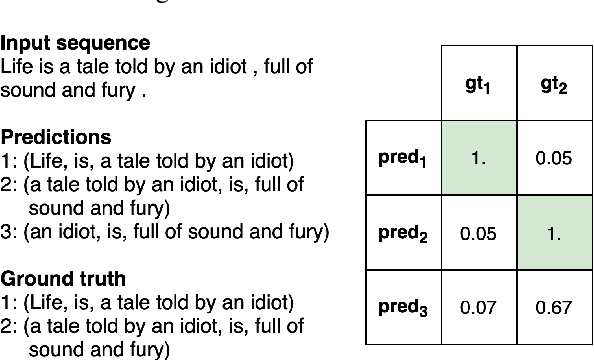

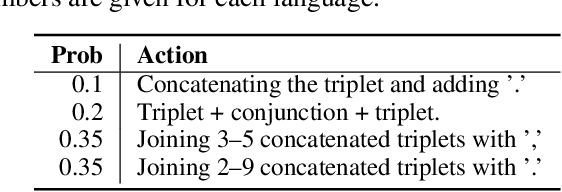

Abstract:State of the art neural methods for open information extraction (OpenIE) usually extract triplets (or tuples) iteratively in an autoregressive or predicate-based manner in order not to produce duplicates. In this work, we propose a different approach to the problem that can be equally or more successful. Namely, we present a novel single-pass method for OpenIE inspired by object detection algorithms from computer vision. We use an order-agnostic loss based on bipartite matching that forces unique predictions and a Transformer-based encoder-only architecture for sequence labeling. The proposed approach is faster and shows superior or similar performance in comparison with state of the art models on standard benchmarks in terms of both quality metrics and inference time. Our model sets the new state of the art performance of 67.7% F1 on CaRB evaluated as OIE2016 while being 3.35x faster at inference than previous state of the art. We also evaluate the multilingual version of our model in the zero-shot setting for two languages and introduce a strategy for generating synthetic multilingual data to fine-tune the model for each specific language. In this setting, we show performance improvement 15% on multilingual Re-OIE2016, reaching 75% F1 for both Portuguese and Spanish languages. Code and models are available at https://github.com/sberbank-ai/DetIE.

RecVAE: a New Variational Autoencoder for Top-N Recommendations with Implicit Feedback

Dec 24, 2019

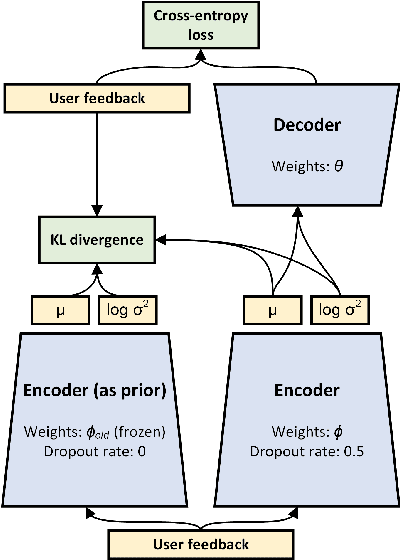

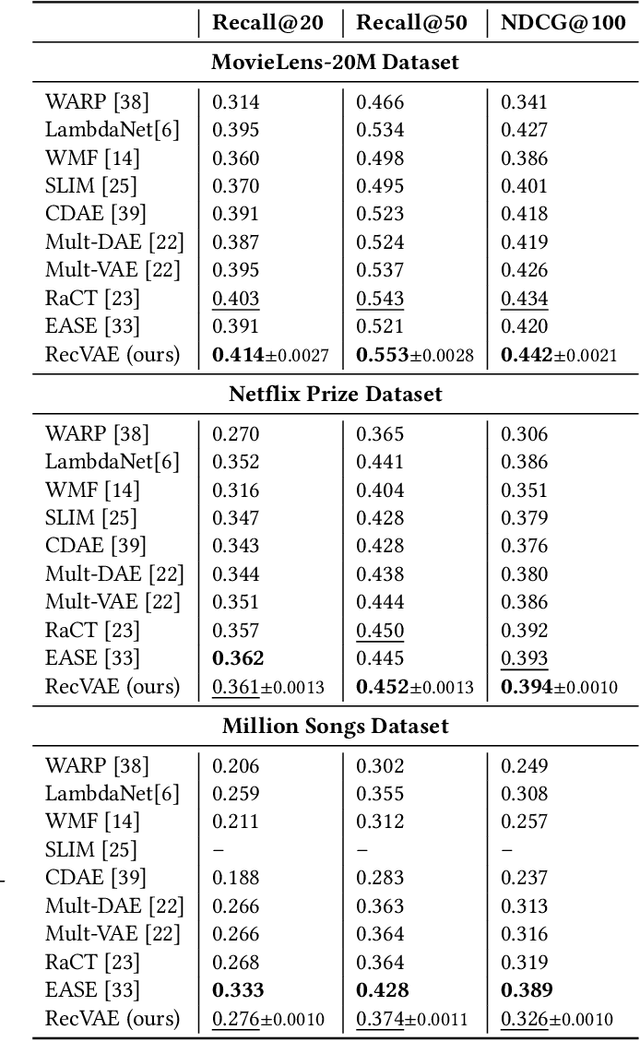

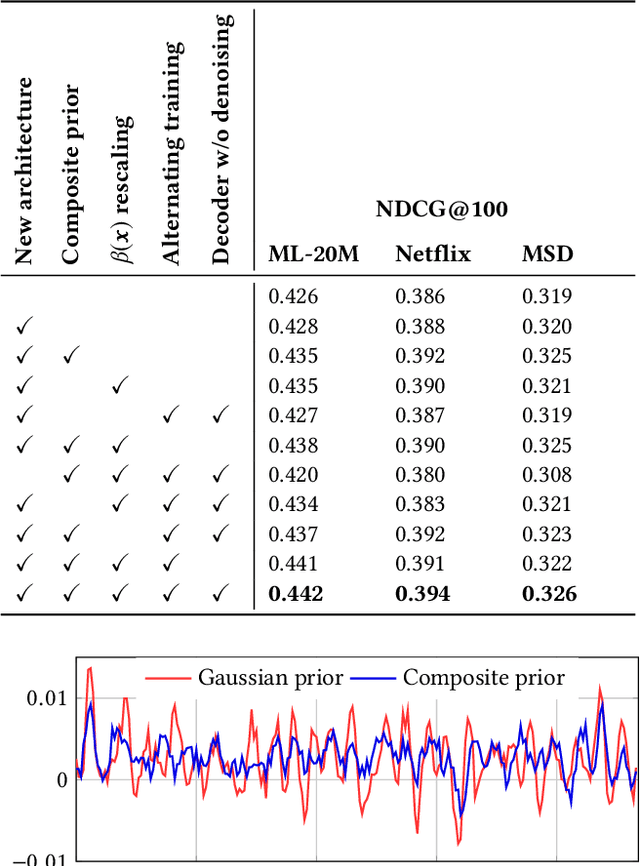

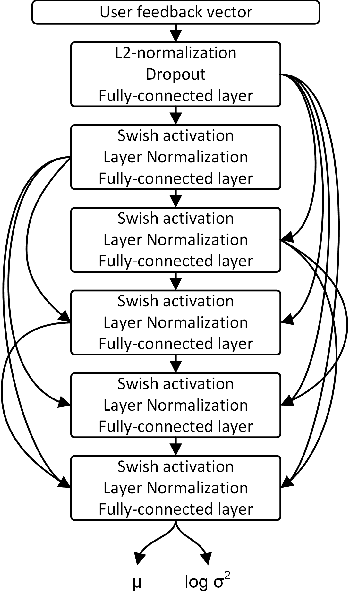

Abstract:Recent research has shown the advantages of using autoencoders based on deep neural networks for collaborative filtering. In particular, the recently proposed Mult-VAE model, which used the multinomial likelihood variational autoencoders, has shown excellent results for top-N recommendations. In this work, we propose the Recommender VAE (RecVAE) model that originates from our research on regularization techniques for variational autoencoders. RecVAE introduces several novel ideas to improve Mult-VAE, including a novel composite prior distribution for the latent codes, a new approach to setting the $\beta$ hyperparameter for the $\beta$-VAE framework, and a new approach to training based on alternating updates. In experimental evaluation, we show that RecVAE significantly outperforms previously proposed autoencoder-based models, including Mult-VAE and RaCT, across classical collaborative filtering datasets, and present a detailed ablation study to assess our new developments. Code and models are available at https://github.com/ilya-shenbin/RecVAE.

AspeRa: Aspect-based Rating Prediction Model

Jan 23, 2019

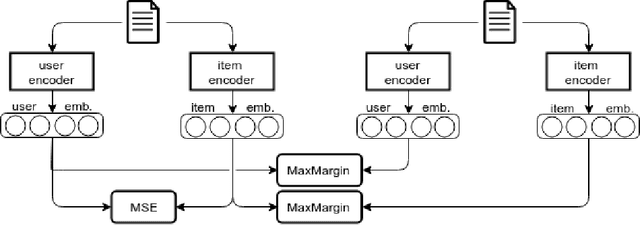

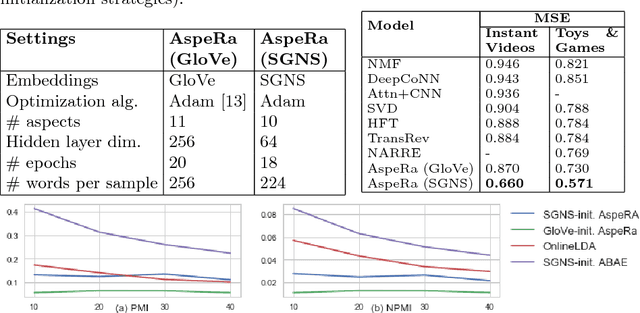

Abstract:We propose a novel end-to-end Aspect-based Rating Prediction model (AspeRa) that estimates user rating based on review texts for the items and at the same time discovers coherent aspects of reviews that can be used to explain predictions or profile users. The AspeRa model uses max-margin losses for joint item and user embedding learning and a dual-headed architecture; it significantly outperforms recently proposed state-of-the-art models such as DeepCoNN, HFT, NARRE, and TransRev on two real world data sets of user reviews. With qualitative examination of the aspects and quantitative evaluation of rating prediction models based on these aspects, we show how aspect embeddings can be used in a recommender system.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge