Hyunji Nam

Netflix Artwork Personalization via LLM Post-training

Jan 06, 2026

Abstract: Large language models (LLMs) have demonstrated success in various applications of user recommendation and personalization across e-commerce and entertainment. On many entertainment platforms such as Netflix, users typically interact with a wide range of titles, each represented by an artwork. Since users have diverse preferences, an artwork that appeals to one type of user may not resonate with another with different preferences. Given this user heterogeneity, our work explores the novel problem of personalized artwork recommendations according to diverse user preferences. Similar to the multi-dimensional nature of users' tastes, titles contain different themes and tones that may appeal to different viewers. For example, the same title might feature both heartfelt family drama and intense action scenes. Users who prefer romantic content may like the artwork emphasizing emotional warmth between the characters, while those who prefer action thrillers may find high-intensity action scenes more intriguing. Rather than a one-size-fits-all approach, we conduct post-training of pre-trained LLMs to make personalized artwork recommendations, selecting the most preferred visual representation of a title for each user and thereby improving user satisfaction and engagement. Our experimental results with Llama 3.1 8B models (trained on a dataset of 110K data points and evaluated on 5K held-out user-title pairs) show that the post-trained LLMs achieve 3-5% improvements over the Netflix production model, suggesting a promising direction for granular personalized recommendations using LLMs.

Learning Pluralistic User Preferences through Reinforcement Learning Fine-tuned Summaries

Jul 17, 2025

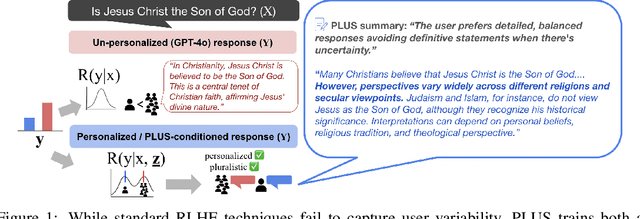

Abstract: As everyday use cases of large language model (LLM) AI assistants have expanded, it is becoming increasingly important to personalize responses to align to different users' preferences and goals. While reinforcement learning from human feedback (RLHF) is effective at improving LLMs to be generally more helpful and fluent, it does not account for variability across users, as it models the entire user population with a single reward model. We present a novel framework, Preference Learning Using Summarization (PLUS), that learns text-based summaries of each user's preferences, characteristics, and past conversations. These summaries condition the reward model, enabling it to make personalized predictions about the types of responses valued by each user. We train the user-summarization model with reinforcement learning, and update the reward model simultaneously, creating an online co-adaptation loop. We show that in contrast with prior personalized RLHF techniques or with in-context learning of user information, summaries produced by PLUS capture meaningful aspects of a user's preferences. Across different pluralistic user datasets, we show that our method is robust to new users and diverse conversation topics. Additionally, we demonstrate that the textual summaries generated about users can be transferred for zero-shot personalization of stronger, proprietary models like GPT-4. The resulting user summaries are not only concise and portable, they are easy for users to interpret and modify, allowing for more transparency and user control in LLM alignment.
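To make "summaries condition the reward model" concrete, here is a minimal sketch, not the PLUS implementation. It assumes a Bradley-Terry preference model, which the abstract does not name, and uses a hypothetical word-overlap function `score` as a stand-in for a learned reward model that takes a user summary and a response.

```python
import math


def score(summary: str, response: str) -> float:
    """Hypothetical stand-in for a learned reward model r(summary, response).

    It counts how many words of the response also appear in the user summary.
    A real reward model would be a trained neural network.
    """
    wanted = set(summary.lower().split())
    return float(sum(1 for word in response.lower().split() if word in wanted))


def preference_probability(summary: str, response_a: str, response_b: str) -> float:
    """Bradley-Terry probability (an assumption) that the user prefers response_a."""
    diff = score(summary, response_a) - score(summary, response_b)
    return 1.0 / (1.0 + math.exp(-diff))
```

Because the summary is an input to the score, the same pair of responses can receive different preference predictions for different users, which is the behavior a single population-wide reward model cannot express.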

Predicting Long Term Sequential Policy Value Using Softer Surrogates

Dec 30, 2024

Abstract: Performing policy evaluation in education, healthcare and online commerce can be challenging, because it can require waiting substantial amounts of time to observe outcomes over the desired horizon of interest. While offline evaluation methods can be used to estimate the performance of a new decision policy from historical data in some cases, such methods struggle when the new policy involves novel actions or is being run in a new decision process with potentially different dynamics. Here we consider how to estimate the full-horizon value of a new decision policy using only short-horizon data from the new policy, and historical full-horizon data from a different behavior policy. We introduce two new estimators for this setting, including a doubly robust estimator, and provide formal analysis of their properties. Our empirical results on two realistic simulators, of HIV treatment and sepsis treatment, show that our methods can often provide informative estimates of a new decision policy ten times faster than waiting for the full horizon, highlighting that it may be possible to quickly identify if a new decision policy, involving new actions, is better or worse than existing past policies.
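For readers unfamiliar with the term "doubly robust", the sketch below shows the standard one-step (contextual bandit) doubly robust off-policy estimator. It is not either of the paper's estimators, which address the short-horizon versus full-horizon setting described above. All names (`doubly_robust_value`, `target_prob`, `behavior_prob`, `q_hat`) are hypothetical.

```python
from typing import Callable, Hashable, Sequence, Tuple

Logged = Tuple[Hashable, Hashable, float]  # (context, action, reward)


def doubly_robust_value(
    logged: Sequence[Logged],
    target_prob: Callable[[Hashable, Hashable], float],
    behavior_prob: Callable[[Hashable, Hashable], float],
    q_hat: Callable[[Hashable, Hashable], float],
    actions: Sequence[Hashable],
) -> float:
    """Standard one-step doubly robust estimate of the target policy's value.

    Each logged sample contributes a model-based baseline plus an
    importance-weighted correction for the model's error on the logged action.
    """
    total = 0.0
    for context, action, reward in logged:
        # Model-based estimate of the target policy's expected reward.
        baseline = sum(target_prob(context, b) * q_hat(context, b) for b in actions)
        # Importance weight between target and behavior policies.
        weight = target_prob(context, action) / behavior_prob(context, action)
        total += baseline + weight * (reward - q_hat(context, action))
    return total / len(logged)
```

The estimate stays unbiased if either the reward model `q_hat` or the behavior probabilities are correct, which is the property the name refers to.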
