Hwiyeol Jo

Enhancing Hallucination Detection via Future Context

Jul 28, 2025

Abstract:Large Language Models (LLMs) are widely used to generate plausible text on online platforms, without revealing the generation process. As users increasingly encounter such black-box outputs, detecting hallucinations has become a critical challenge. To address this challenge, we focus on developing a hallucination detection framework for black-box generators. Motivated by the observation that hallucinations, once introduced, tend to persist, we sample future contexts. The sampled future contexts provide valuable clues for hallucination detection and can be effectively integrated with various sampling-based methods. We extensively demonstrate performance improvements across multiple methods using our proposed sampling approach.

Investigating the Influence of Prompt-Specific Shortcuts in AI Generated Text Detection

Jun 24, 2024

Abstract:AI Generated Text (AIGT) detectors are developed with texts from humans and LLMs of common tasks. Despite the diversity of plausible prompt choices, these datasets are generally constructed with a limited number of prompts. The lack of prompt variation can introduce prompt-specific shortcut features that exist in data collected with the chosen prompt, but do not generalize to others. In this paper, we analyze the impact of such shortcuts in AIGT detection. We propose Feedback-based Adversarial Instruction List Optimization (FAILOpt), an attack that searches for instructions deceptive to AIGT detectors exploiting prompt-specific shortcuts. FAILOpt effectively drops the detection performance of the target detector, comparable to other attacks based on adversarial in-context examples. We also utilize our method to enhance the robustness of the detector by mitigating the shortcuts. Based on the findings, we further train the classifier with the dataset augmented by FAILOpt prompt. The augmented classifier exhibits improvements across generation models, tasks, and attacks. Our code will be available at https://github.com/zxcvvxcz/FAILOpt.

ZeroDL: Zero-shot Distribution Learning for Text Clustering via Large Language Models

Jun 19, 2024

Abstract:The recent advancements in large language models (LLMs) have brought significant progress in solving NLP tasks. Notably, in-context learning (ICL) is the key enabling mechanism for LLMs to understand specific tasks and grasp nuances. In this paper, we propose a simple yet effective method to contextualize a task toward a specific LLM, by (1) observing how a given LLM describes (all or a part of) target datasets, i.e., open-ended zero-shot inference, (2) aggregating the open-ended inference results by the LLM, and (3) finally incorporating the aggregated meta-information for the actual task. We show the effectiveness of this approach in text clustering tasks, and also highlight the importance of the contextualization through examples of the above procedure.

Taxonomy and Analysis of Sensitive User Queries in Generative AI Search

Apr 05, 2024

Abstract:Although there has been a growing interest among industries to integrate generative LLMs into their services, limited experience and scarcity of resources act as barriers to launching and servicing large-scale LLM-based conversational services. In this paper, we share our experiences in developing and operating generative AI models within a national-scale search engine, with a specific focus on the sensitiveness of user queries. We propose a taxonomy for sensitive search queries, outline our approaches, and present a comprehensive analysis report on sensitive queries from actual users.

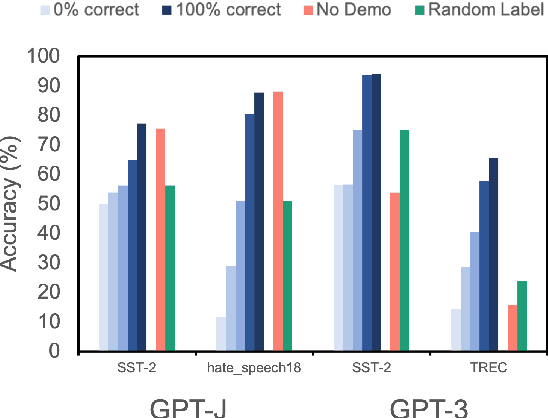

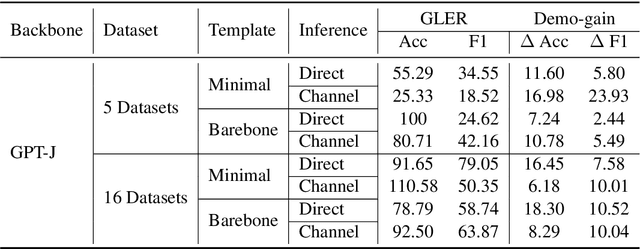

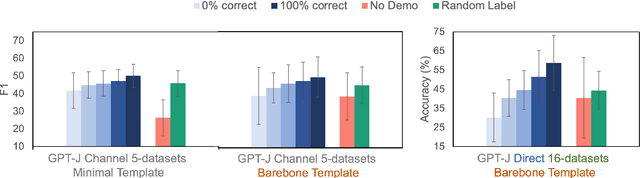

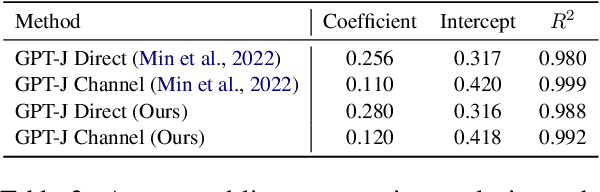

Ground-Truth Labels Matter: A Deeper Look into Input-Label Demonstrations

May 25, 2022

Abstract:Despite recent explosion in research interests, in-context learning and the precise impact of the quality of demonstrations remain elusive. While, based on current literature, it is expected that in-context learning shares a similar mechanism to supervised learning, Min et al. (2022) recently reported that, surprisingly, input-label correspondence is less important than other aspects of prompt demonstrations. Inspired by this counter-intuitive observation, we re-examine the importance of ground truth labels on in-context learning from diverse and statistical points of view. With the aid of the newly introduced metrics, i.e., Ground-truth Label Effect Ratio (GLER), demo-gain, and label sensitivity, we find that the impact of the correct input-label matching can vary according to different configurations. Expanding upon the previous key finding on the role of demonstrations, the complementary and contrastive results suggest that one might need to take more care when estimating the impact of each component in in-context learning demonstrations.

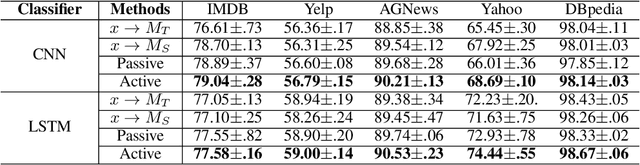

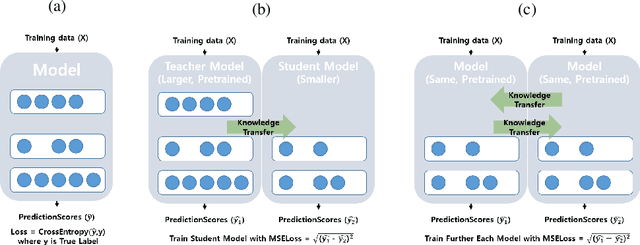

Human-Like Active Learning: Machines Simulating the Human Learning Process

Nov 07, 2020

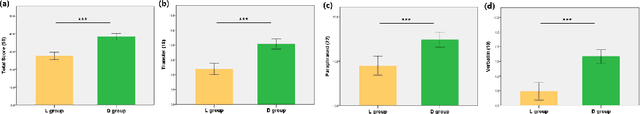

Abstract:Although the use of active learning to increase learners' engagement has recently been introduced in a variety of methods, empirical experiments are lacking. In this study, we attempted to align two experiments in order to (1) form a hypothesis for the machine experiment and (2) empirically confirm the effect of active learning on learning. In Experiment 1, we compared the effect of a passive form of learning to an active form of learning. The results showed that active learning led to better learning outcomes than passive learning. In the machine experiment based on the human result, we imitated human active learning as a form of knowledge distillation. The active learning framework performed better than the passive learning framework. In the end, we showed not only that we can build a better machine training framework through the human experiment result, but also that we can empirically confirm the result of the human experiment through imitated machine experiments; human-like active learning has a crucial effect on learning performance.

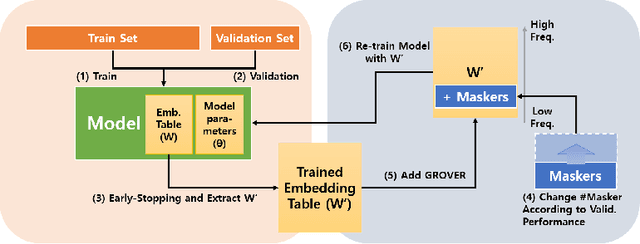

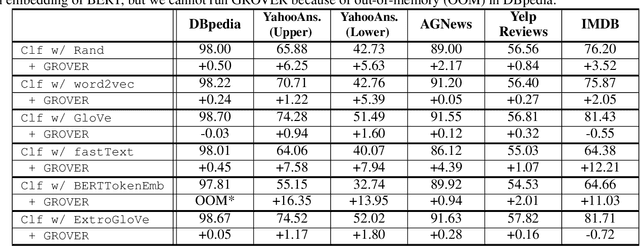

Ruminating Word Representations with Random Noised Masker

Nov 08, 2019

Abstract:We introduce a training method for both better word representation and performance, which we call GROVER (Gradual Rumination On the Vector with maskERs). The method is to gradually and iteratively add random noises to word embeddings while training a model. GROVER first starts from conventional training process, and then extracts the fine-tuned representations. Next, we gradually add random noises to the word representations and repeat the training process from scratch, but initialize with the noised word representations. Through the re-training process, we can mitigate some noises to be compensated and utilize other noises to learn better representations. As a result, we can get word representations further fine-tuned and specialized on the task. When we experiment with our method on 5 text classification datasets, our method improves model performances on most of the datasets. Moreover, we show that our method can be combined with other regularization techniques, further improving the model performance.
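The noise-and-retrain loop described in this abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the function names `add_masked_noise` and `ruminate`, the Gaussian noise, the linearly growing mask ratio, and the `train_fn` callback (standing in for a full training run that returns fine-tuned embeddings) are all assumptions made for illustration.

```python
import random


def add_masked_noise(embeddings, mask_ratio, noise_scale, rng):
    """Return a copy of `embeddings` with Gaussian noise on a random subset of entries.

    Each entry is selected independently with probability `mask_ratio`
    (an assumed masking scheme; the abstract does not specify one).
    """
    noised = []
    for vector in embeddings:
        row = []
        for value in vector:
            if rng.random() < mask_ratio:
                value = value + rng.gauss(0.0, noise_scale)
            row.append(value)
        noised.append(row)
    return noised


def ruminate(embeddings, train_fn, rounds=3, mask_step=0.1, noise_scale=0.1, seed=0):
    """Alternate between training and noising the word embeddings.

    `train_fn` is a hypothetical callback: it trains a model initialized with
    the given embeddings and returns the fine-tuned embeddings.
    """
    rng = random.Random(seed)
    # Start from a conventional training run and extract the embeddings.
    current = train_fn(embeddings)
    for round_index in range(1, rounds + 1):
        # Gradually noise a larger share of entries each round (assumed schedule).
        noised = add_masked_noise(current, mask_step * round_index, noise_scale, rng)
        # Retrain, initializing with the noised embeddings.
        current = train_fn(noised)
    return current
```

With `rounds=3`, `train_fn` is called four times: once for the initial run and once per noising round.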

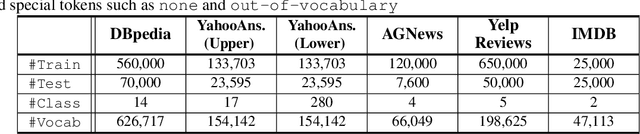

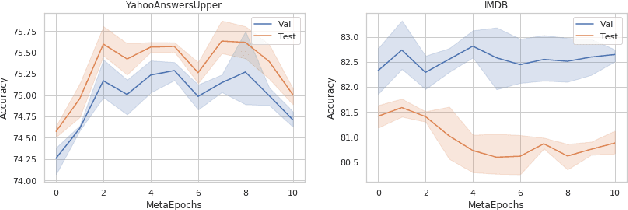

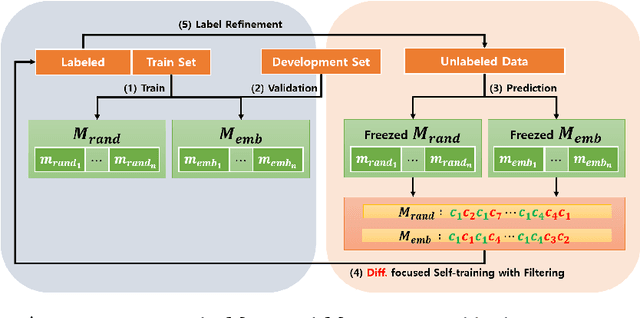

Delta-training: Simple Semi-Supervised Text Classification using Pretrained Word Embeddings

Jan 22, 2019

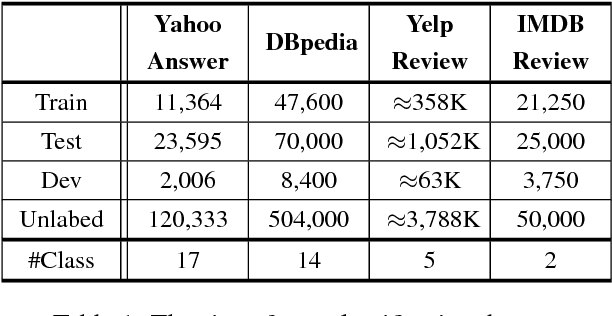

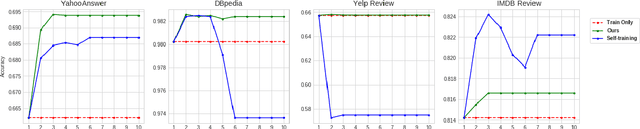

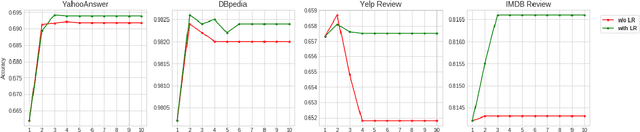

Abstract:We propose a novel and simple method for semi-supervised text classification. The method starts from a hypothesis that a classifier with pretrained word embeddings always outperforms the same classifier with randomly initialized word embeddings, as empirically observed in NLP tasks. Our method first builds two sets of classifiers as a form of model ensemble, and then initializes their word embeddings differently: one using random, the other using pretrained word embeddings. We focus on different predictions between the two classifiers on unlabeled data while following the self-training framework. We also introduce label refinement and early-stopping in meta-epoch for better confidence on the label-by-prediction. We experiment on 4 different classification datasets, showing that our method performs better than the method using only the training set. Delta-training also outperforms the conventional self-training method in multi-class classification, showing robust performance against error accumulation.
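The core selection step, focusing on unlabeled examples where the two classifiers disagree, might look like the sketch below. The function name `select_delta_samples` is hypothetical, and labeling the disagreeing examples with the pretrained-embedding classifier's prediction is an assumption drawn from the stated hypothesis that this classifier is the stronger one; label refinement and early stopping are not shown.

```python
def select_delta_samples(unlabeled, random_init_preds, pretrained_preds):
    """Pick unlabeled examples on which the two classifiers disagree.

    Each selected example is paired with the prediction of the classifier
    that uses pretrained word embeddings (an assumed labeling rule).
    """
    selected = []
    for text, random_label, pretrained_label in zip(
        unlabeled, random_init_preds, pretrained_preds
    ):
        if random_label != pretrained_label:
            selected.append((text, pretrained_label))
    return selected


# Usage: the pseudo-labeled pairs would be added to the training set
# before the next self-training round.
texts = ["great movie", "boring plot", "fine acting"]
pseudo_labeled = select_delta_samples(texts, [1, 1, 0], [1, 0, 1])
```

Here the first text is skipped because both classifiers agree on it, while the other two are kept with the pretrained-embedding classifier's labels.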

Deep Extrofitting: Specialization and Generalization of Expansional Retrofitting Word Vectors using Semantic Lexicons

Sep 04, 2018

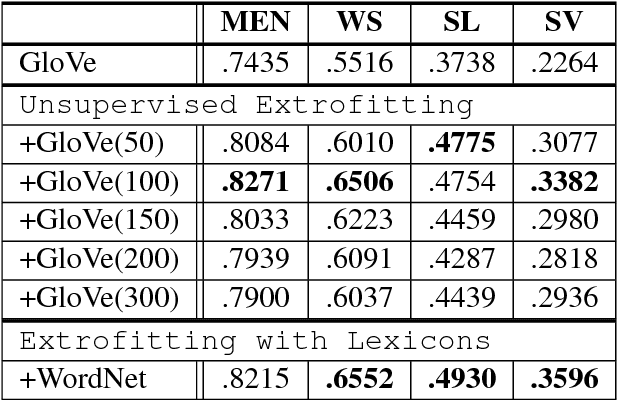

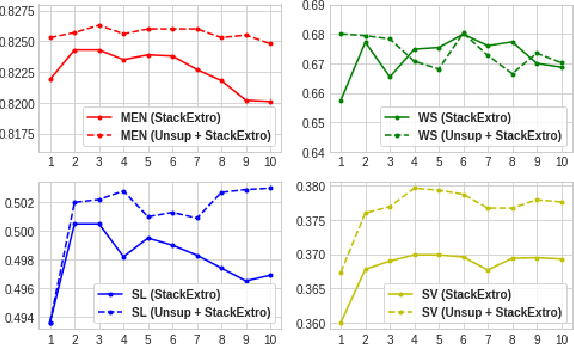

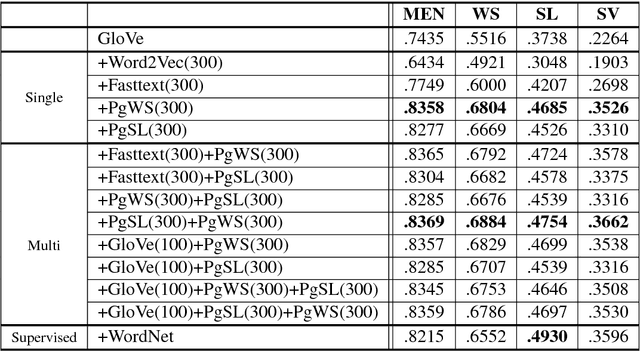

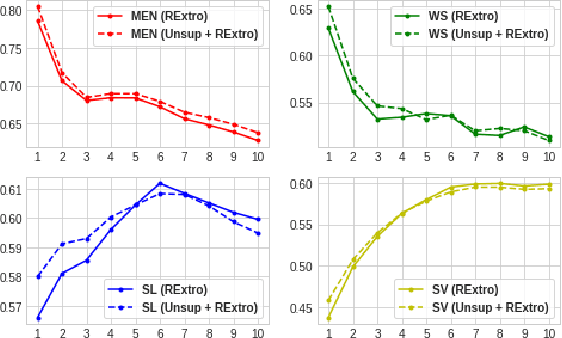

Abstract:Retrofitting techniques, which inject external resources into word representations, have compensated for the weakness of distributed representations in semantic and relational knowledge between words. Implicitly retrofitting word vectors by an expansional technique showed that the method outperforms retrofitting in word similarity tasks with generalization. In this paper, we propose deep extrofitting: in-depth stacking of extrofitting. We first stack extrofitting for word vector generalization. Next, we combine extrofitting with retrofitting, finding a new vector space on specialization that prevents retrofitting from converging in a few iterations. When experimenting with GloVe, we show that our methods outperform the previous methods on most word similarity tasks while requiring only synonyms as external resources. We also report further analysis on the effect of word vector specialization and word vector generalization in text classification tasks.

What we really want to find by Sentiment Analysis: The Relationship between Computational Models and Psychological State

Jun 03, 2018

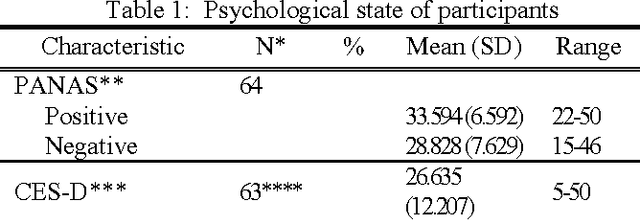

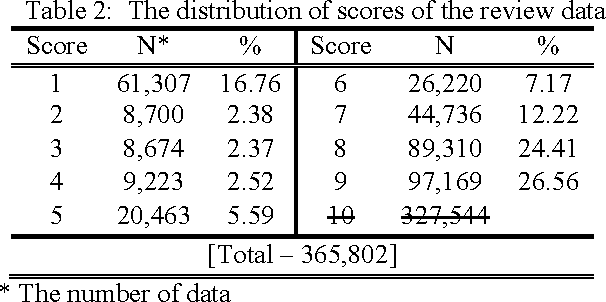

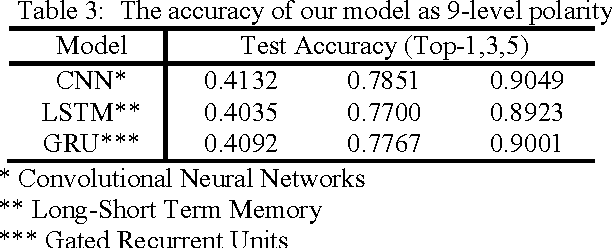

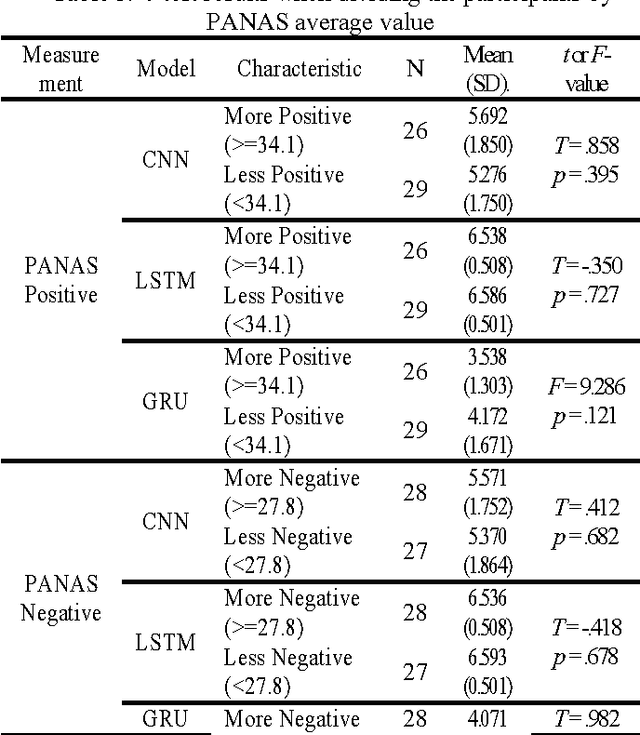

Abstract:As the first step to model the emotional state of a person, we build sentiment analysis models with existing deep neural network algorithms and compare the models with psychological measurements to shed light on their relationship. In the experiments, we first examined the psychological state of 64 participants and asked them to summarize the story of a book, Chronicle of a Death Foretold (Marquez, 1981). Secondly, we trained models using 365,802 crawled movie reviews; then we evaluated participants' summaries using the pretrained model as a form of transfer learning. Given that emotion affects memory, we investigated the relationship between the evaluation scores of the summaries from computational models and the examined psychological measurements. The result shows that although CNN performed the best among the deep neural network algorithms (the others being LSTM and GRU), its results are not related to the psychological state. Rather, GRU shows more explainable results depending on the psychological state. The contribution of this paper can be summarized as follows: (1) we shed light on the relationship between computational models and psychological measurements; (2) we suggest this framework as an objective method to evaluate emotion, i.e., the real sentiment analysis of a person.
