Haoran Niu

Privacy Risk Predictions Based on Fundamental Understanding of Personal Data and an Evolving Threat Landscape

Aug 06, 2025

Abstract: It is difficult for individuals and organizations to protect personal information without a fundamental understanding of relative privacy risks. By analyzing over 5,000 empirical identity theft and fraud cases, this research identifies which types of personal data are exposed, how frequently exposures occur, and what the consequences of those exposures are. We construct an Identity Ecosystem graph, a foundational, graph-based model in which nodes represent personally identifiable information (PII) attributes and edges represent empirical disclosure relationships between them (e.g., the probability that one PII attribute is exposed due to the exposure of another). Leveraging this graph structure, we develop a privacy risk prediction framework that uses graph theory and graph neural networks to estimate the likelihood of further disclosures when certain PII attributes are compromised. The results show that our approach effectively answers the core question: Can the disclosure of a given identity attribute possibly lead to the disclosure of another attribute?
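The graph model described in this abstract can be illustrated with a small sketch. It is not the paper's framework (which also uses graph neural networks); it only shows the graph-theoretic reading of the core question as reachability in a directed, weighted graph. The attribute names, edge probabilities, and function names below are hypothetical, and treating a path's likelihood as the product of its edge probabilities is a simplifying independence assumption.

```python
from collections import deque
import heapq

# Hypothetical toy graph: an edge u -> v with weight p means "exposure of u
# leads to exposure of v with probability p". Names and numbers are invented
# for illustration and are not taken from the paper's data.
GRAPH = {
    "email": {"phone": 0.4, "name": 0.7},
    "name": {"address": 0.3},
    "phone": {"address": 0.5},
    "address": {},
    "ssn": {"name": 0.9},
}

def can_lead_to(graph, source, target):
    """Return True if a directed path of disclosure edges runs from source to target."""
    seen = {source}
    queue = deque([source])
    while queue:
        node = queue.popleft()
        if node == target:
            return True
        for nxt in graph.get(node, {}):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return False

def best_path_probability(graph, source, target):
    """Highest product of edge probabilities over any path (0.0 if unreachable).

    Assumes edges are independent, which is a simplification made here.
    """
    best = {source: 1.0}
    heap = [(-1.0, source)]  # max-heap via negated probabilities
    while heap:
        neg_p, node = heapq.heappop(heap)
        p = -neg_p
        if node == target:
            return p
        if p < best.get(node, 0.0):
            continue  # stale heap entry
        for nxt, w in graph.get(node, {}).items():
            q = p * w
            if q > best.get(nxt, 0.0):
                best[nxt] = q
                heapq.heappush(heap, (-q, nxt))
    return 0.0
```

In this toy graph, `can_lead_to(GRAPH, "email", "address")` is true while the reverse direction is false, and `best_path_probability` picks the `email -> name -> address` path over `email -> phone -> address` because its product of probabilities is slightly higher.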

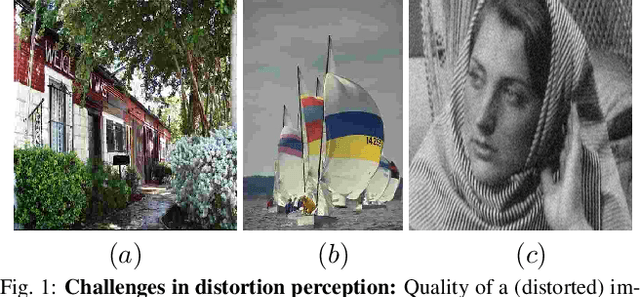

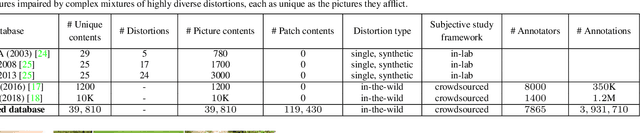

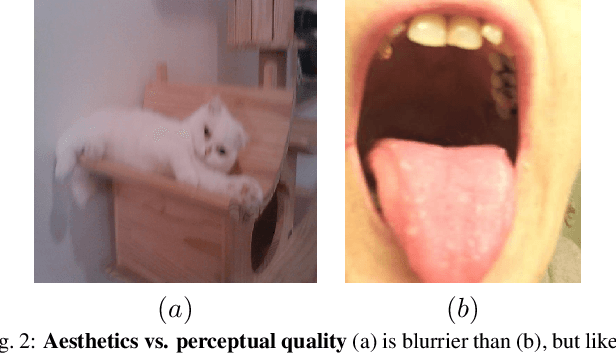

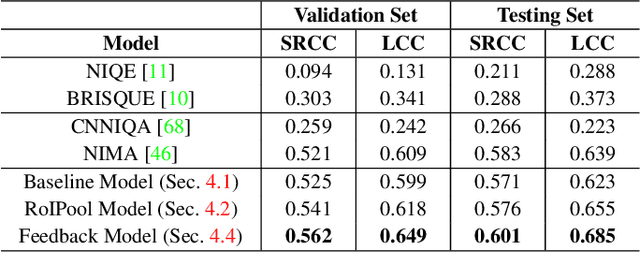

From Patches to Pictures (PaQ-2-PiQ): Mapping the Perceptual Space of Picture Quality

Dec 20, 2019

Abstract: Blind or no-reference (NR) perceptual picture quality prediction is a difficult, unsolved problem of great consequence to the social and streaming media industries that impacts billions of viewers daily. Unfortunately, popular NR prediction models perform poorly on real-world distorted pictures. To advance progress on this problem, we introduce the largest (by far) subjective picture quality database, containing about 40,000 real-world distorted pictures and 120,000 patches, on which we collected about 4M human judgments of picture quality. Using these picture and patch quality labels, we built deep region-based architectures that learn to produce state-of-the-art global picture quality predictions as well as useful local picture quality maps. Our innovations include picture quality prediction architectures that produce global-to-local inferences as well as local-to-global inferences (via feedback).

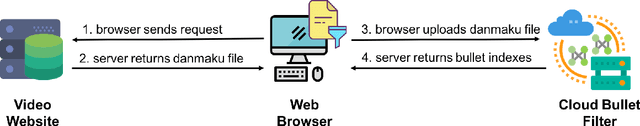

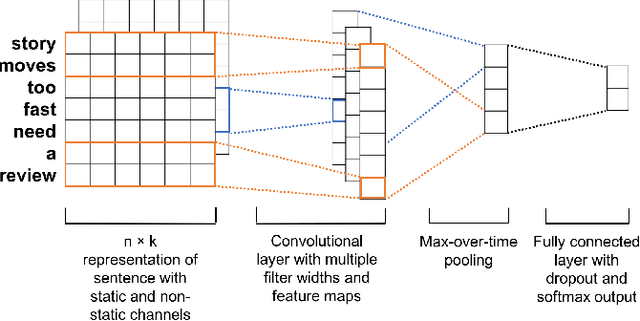

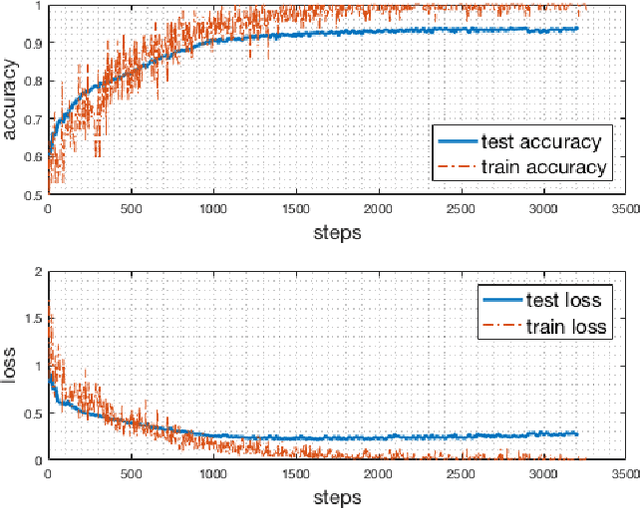

SmartBullets: A Cloud-Assisted Bullet Screen Filter based on Deep Learning

May 15, 2019

Abstract: Bullet-screen is a technique that lets website users send real-time comments ("bullets") that fly across the screen. Compared with traditional video reviews, bullet-screen adds new ways of expressing feelings while watching and more interaction between viewers. However, since all viewers' comments are shown on the screen publicly and simultaneously, low-quality bullets reduce users' watching enjoyment. Although bullet-screen video websites provide filter functions based on regular expressions, bad bullets can still easily pass the filter with a small modification. In this paper, we present SmartBullets, a user-centered bullet-screen filter based on deep learning techniques. A convolutional neural network is trained as the classifier to determine whether a bullet needs to be removed according to its quality. Moreover, to increase the scalability of the filter, we employ a cloud-assisted framework by developing a back-end cloud server and a front-end browser extension. An evaluation with 40 volunteers shows that SmartBullets can effectively remove low-quality bullets and improve the overall watching experience of viewers.
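The weakness of regular-expression filtering mentioned in this abstract can be shown with a short sketch. The blocklist pattern and sample comments are hypothetical (the abstract does not give any site's actual rules), and the sketch covers only the regex baseline, not the SmartBullets classifier.

```python
import re

# Hypothetical blocklist pattern; real sites' filter rules are not given
# in the abstract.
BLOCKED = re.compile(r"spoiler", re.IGNORECASE)

def regex_filter(comments):
    """Keep only the comments that do not match the blocklist pattern."""
    return [c for c in comments if not BLOCKED.search(c)]

comments = [
    "great scene",
    "SPOILER: he dies",      # caught by the pattern
    "sp0iler: he dies",      # one character swapped, slips through
    "s.p.o.i.l.e.r ahead",   # separators inserted, slips through
]
kept = regex_filter(comments)
```

Only the exact match is removed; the two lightly modified variants stay in `kept`, which is the kind of evasion that motivates a learned classifier over fixed patterns.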
