Gyunam Park

Online Discovery of Simulation Models for Evolving Business Processes (Extended Version)

Jun 11, 2025

Abstract: Business Process Simulation (BPS) refers to techniques designed to replicate the dynamic behavior of a business process. Many approaches have been proposed to automatically discover simulation models from historical event logs, reducing the cost and time of designing them manually. However, in dynamic business environments, organizations continuously refine their processes to enhance efficiency, reduce costs, and improve customer satisfaction. Existing techniques for process simulation discovery lack adaptability to real-time operational changes. In this paper, we propose a streaming process simulation discovery technique that integrates Incremental Process Discovery with Online Machine Learning methods. This technique prioritizes recent data while preserving historical information, ensuring adaptation to evolving process dynamics. Experiments conducted on four different event logs demonstrate the importance, in simulation, of giving more weight to recent data while retaining historical knowledge. Our technique not only produces more stable simulations but also exhibits robustness in handling concept drift, as highlighted in one of the use cases.

Synchronizing Process Model and Event Abstraction for Grounded Process Intelligence (Extended Version)

May 29, 2025

Abstract: Model abstraction (MA) and event abstraction (EA) are means to reduce the complexity of (discovered) models and event data. Imagine a process intelligence project that aims to analyze a model discovered from event data which is further abstracted, possibly multiple times, to reach optimality goals, e.g., reducing model size. So far, after discovering the model, there is no technique that enables the synchronized abstraction of the underlying event log. This results in losing the grounding in the real-world behavior contained in the log and, in turn, restricts analysis insights. Hence, in this work, we provide the formal basis for synchronized model and event abstraction, i.e., we prove that abstracting a process model by MA and discovering a process model from an abstracted event log yields an equivalent process model. We prove the feasibility of our approach based on behavioral profile abstraction as a non-order-preserving MA technique, resulting in a novel EA technique.

Unlocking Non-Block-Structured Decisions: Inductive Mining with Choice Graphs

May 11, 2025

Abstract: Process discovery aims to automatically derive process models from event logs, enabling organizations to analyze and improve their operational processes. Inductive mining algorithms, while prioritizing soundness and efficiency through hierarchical modeling languages, often impose a strict block-structured representation. This limits their ability to accurately capture the complexities of real-world processes. While recent advancements like the Partially Ordered Workflow Language (POWL) have addressed the block-structure limitation for concurrency, a significant gap remains in effectively modeling non-block-structured decision points. In this paper, we bridge this gap by proposing an extension of POWL to handle non-block-structured decisions through the introduction of choice graphs. Choice graphs offer a structured yet flexible approach to model complex decision logic within the hierarchical framework of POWL. We present an inductive mining discovery algorithm that uses our extension and preserves the quality guarantees of the inductive mining framework. Our experimental evaluation demonstrates that the discovered models, enriched with choice graphs, more precisely represent the complex decision-making behavior found in real-world processes, without compromising the high scalability inherent in inductive mining techniques.

INEXA: Interactive and Explainable Process Model Abstraction Through Object-Centric Process Mining

Mar 27, 2024

Abstract: Process events are recorded by multiple information systems at different granularity levels. Based on the resulting event logs, process models are discovered at different granularity levels, as well. Events stored at a fine-grained granularity level, for example, may prevent the discovered process model from being displayed due to the high number of resulting model elements. The discovered process model of a real-world manufacturing process, for example, consists of 1,489 model elements and over 2,000 arcs. Existing process model abstraction techniques could help reduce the size of the model, but would disconnect it from the underlying event log. Existing event abstraction techniques support neither the analysis of mixed granularity levels nor the interactive exploration of a suitable granularity level. To enable the exploration of discovered process models at different granularity levels, we propose INEXA, an interactive, explainable process model abstraction method that keeps the link to the event log. As a starting point, INEXA aggregates large process models to a "displayable" size, e.g., for the manufacturing use case to a process model with 58 model elements. Then, the process analyst can explore granularity levels interactively, while applied abstractions are automatically traced in the event log for explainability.

Analyzing An After-Sales Service Process Using Object-Centric Process Mining: A Case Study

Oct 16, 2023

Abstract: Process mining, a technique turning event data into business process insights, has traditionally operated on the assumption that each event corresponds to a singular case or object. However, many real-world processes are intertwined with multiple objects, making them object-centric. This paper focuses on the emerging domain of object-centric process mining, highlighting its potential yet underexplored benefits in actual operational scenarios. Through an in-depth case study of Borusan Cat's after-sales service process, this study emphasizes the capability of object-centric process mining to capture entangled business process details. Utilizing an event log of approximately 65,000 events, our analysis underscores the importance of embracing this paradigm for richer business insights and enhanced operational improvements.

Extracting Rules from Event Data for Study Planning

Oct 04, 2023

Abstract: In this study, we examine how event data from campus management systems can be used to analyze the study paths of higher education students. The main goal is to offer valuable guidance for their study planning. We employ process and data mining techniques to explore the impact of sequences of taken courses on academic success. Through the use of decision tree models, we generate data-driven recommendations in the form of rules for study planning and compare them to the recommended study plan. The evaluation focuses on RWTH Aachen University computer science bachelor program students and demonstrates that the proposed course sequence features effectively explain academic performance measures. Furthermore, the findings suggest avenues for developing more adaptable study plans.

Preventing Object-centric Discovery of Unsound Process Models for Object Interactions with Loops in Collaborative Systems: Extended Version

Mar 31, 2023

Abstract: Object-centric process discovery (OCPD) constitutes a paradigm shift in process mining. Instead of assuming a single case notion present in the event log, OCPD can handle events without a single case notion, but that are instead related to a collection of objects each having a certain type. The object types constitute multiple, interacting case notions. The output of OCPD is an object-centric Petri net, i.e., a Petri net with object-typed places, that represents the parallel execution of multiple execution flows corresponding to object types. Similar to classical process discovery, where we aim for behaviorally sound process models as a result, in OCPD, we aim for soundness of the resulting object-centric Petri nets. However, the existing OCPD approach can result in violations of soundness. As we will show, one violation arises for multiple interacting object types with loops that arise in collaborative systems. This paper proposes an extended OCPD approach and proves that it does not suffer from this violation of soundness of the resulting object-centric Petri nets. We also show how we prevent the OCPD approach from introducing spurious interactions in the discovered object-centric Petri net. The proposed framework is prototypically implemented.

Performance-Preserving Event Log Sampling for Predictive Monitoring

Jan 18, 2023

Abstract: Predictive process monitoring is a subfield of process mining that aims to estimate case or event features for running process instances. Such predictions are of significant interest to the process stakeholders. However, most of the state-of-the-art methods for predictive monitoring require the training of complex machine learning models, which is often inefficient. Moreover, most of these methods require a hyper-parameter optimization that requires several repetitions of the training process, which is not feasible in many real-life applications. In this paper, we propose an instance selection procedure that allows sampling training process instances for prediction models. We show that our instance selection procedure allows for a significant increase of training speed for next activity and remaining time prediction methods while maintaining reliable levels of prediction accuracy.

Explainable Predictive Decision Mining for Operational Support

Oct 30, 2022

Abstract: Several decision points exist in business processes (e.g., whether a purchase order needs a manager's approval or not), and different decisions are made for different process instances based on their characteristics (e.g., a purchase order higher than $500 needs a manager's approval). Decision mining in process mining aims to describe/predict the routing of a process instance at a decision point of the process. By predicting the decision, one can take proactive actions to improve the process. For instance, when a bottleneck is developing in one of the possible decisions, one can predict the decision and bypass the bottleneck. However, despite its huge potential for such operational support, existing techniques for decision mining have focused largely on describing decisions but not on predicting them, deploying decision trees to produce logical expressions to explain the decision. In this work, we aim to enhance the predictive capability of decision mining to enable proactive operational support by deploying more advanced machine learning algorithms. Our proposed approach provides explanations of the predicted decisions using SHAP values to support the elicitation of proactive actions. We have implemented a Web application to support the proposed approach and evaluated the approach using the implementation.

A Framework for Extracting and Encoding Features from Object-Centric Event Data

Sep 02, 2022

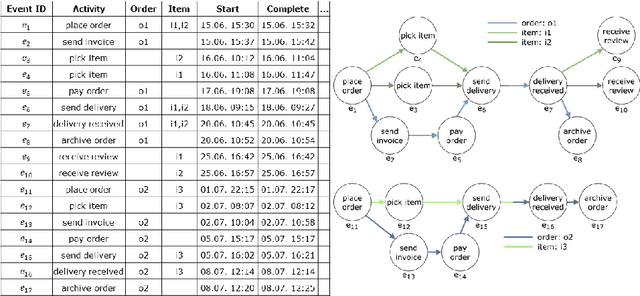

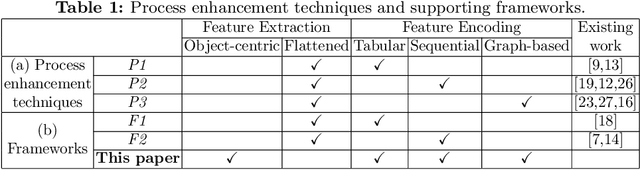

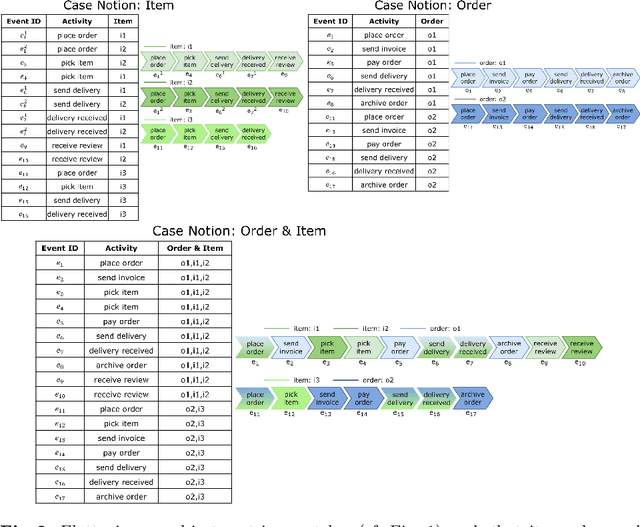

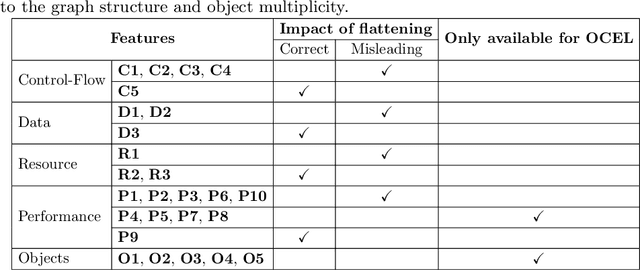

Abstract: Traditional process mining techniques take event data as input where each event is associated with exactly one object. An object represents the instantiation of a process. Object-centric event data contain events associated with multiple objects expressing the interaction of multiple processes. As traditional process mining techniques assume events associated with exactly one object, these techniques cannot be applied to object-centric event data. To use traditional process mining techniques, the object-centric event data are flattened by removing all object references but one. The flattening process is lossy, leading to inaccurate features extracted from flattened data. Furthermore, the graph-like structure of object-centric event data is lost when flattening. In this paper, we introduce a general framework for extracting and encoding features from object-centric event data. We calculate features natively on the object-centric event data, leading to accurate measures. Furthermore, we provide three encodings for these features: tabular, sequential, and graph-based. While tabular and sequential encodings have been heavily used in process mining, the graph-based encoding is a new technique preserving the structure of the object-centric event data. We provide six use cases: a visualization and a prediction use case for each of the three encodings. We use explainable AI in the prediction use cases to show the utility of both the object-centric features and the structure of the sequential and graph-based encoding for a predictive model.
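The lossiness of flattening mentioned in this abstract can be illustrated with a small sketch. It is not taken from the paper: the toy events, the dictionary layout, and the `flatten` helper are all hypothetical and chosen only to show how keeping a single object type can drop some events and duplicate others.

```python
from collections import defaultdict

# Hypothetical toy object-centric events (not from the paper):
# each event may refer to objects of several types.
events = [
    {"activity": "place order", "objects": {"order": ["o1"], "item": ["i1", "i2"]}},
    {"activity": "pick item", "objects": {"item": ["i1"]}},
    {"activity": "pick item", "objects": {"item": ["i2"]}},
    {"activity": "send invoice", "objects": {"order": ["o1"]}},
]


def flatten(events, object_type):
    """Keep only references to one object type; return one trace per object.

    Hypothetical helper: events are assumed to be in chronological order.
    """
    traces = defaultdict(list)
    for event in events:
        for obj in event["objects"].get(object_type, []):
            traces[obj].append(event["activity"])
    return dict(traces)


# Flattening on "order" silently drops both "pick item" events.
order_view = flatten(events, "order")

# Flattening on "item" replicates "place order" once per item.
item_view = flatten(events, "item")

print(order_view)
print(item_view)
```

In this toy log, the order view contains no picking activity at all, while the item view counts the single "place order" event twice, so features such as activity frequencies computed on either view would differ from those of the original data.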
