Guyue Hu

Federated Client-tailored Adapter for Medical Image Segmentation

Apr 25, 2025

Abstract:Medical image segmentation in X-ray images is beneficial for computer-aided diagnosis and lesion localization. Existing methods mainly fall into a centralized learning paradigm, which is inapplicable in the practical medical scenario that only has access to distributed data islands. Federated Learning has the potential to offer a distributed solution but struggles with heavy training instability due to client-wise domain heterogeneity (including distribution diversity and class imbalance). In this paper, we propose a novel Federated Client-tailored Adapter (FCA) framework for medical image segmentation, which achieves stable and client-tailored adaptive segmentation without sharing sensitive local data. Specifically, the federated adapter stirs universal knowledge in off-the-shelf medical foundation models to stabilize the federated training process. In addition, we develop two client-tailored federated updating strategies that adaptively decompose the adapter into common and individual components, then globally and independently update the parameter groups associated with common client-invariant and individual client-specific units, respectively. They further stabilize the heterogeneous federated learning process and realize optimal client-tailored instead of sub-optimal global-compromised segmentation models. Extensive experiments on three large-scale datasets demonstrate the effectiveness and superiority of the proposed FCA framework for federated medical segmentation.
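The common/individual update described in the abstract can be illustrated with a minimal sketch. It is not the FCA implementation: the abstract says the decomposition is adaptive, whereas this sketch assumes a fixed, hand-picked set of common parameter names and plain unweighted averaging. The function `federated_round` and the keys `adapter.common` and `adapter.individual` are hypothetical.

```python
from typing import Dict, List, Set

# Hypothetical representation: each client's adapter is a dict of named
# parameter groups, each group a flat list of floats.
Params = Dict[str, List[float]]


def federated_round(client_adapters: List[Params], common_keys: Set[str]) -> List[Params]:
    """Average the common groups across clients; keep all other groups local.

    Assumption: the common/individual split is given by `common_keys`.
    The paper's adaptive decomposition is not reproduced here.
    """
    n = len(client_adapters)
    averaged: Params = {}
    for key in common_keys:
        # Element-wise mean of this group over all clients.
        columns = zip(*(client[key] for client in client_adapters))
        averaged[key] = [sum(col) / n for col in columns]

    updated: List[Params] = []
    for client in client_adapters:
        new_params = {k: list(v) for k, v in client.items()}  # copy local values
        for key in common_keys:
            new_params[key] = list(averaged[key])  # overwrite with the global mean
        updated.append(new_params)
    return updated


clients = [
    {"adapter.common": [1.0, 2.0], "adapter.individual": [10.0]},
    {"adapter.common": [3.0, 4.0], "adapter.individual": [20.0]},
]
result = federated_round(clients, {"adapter.common"})
```

After one round, both clients share the same `adapter.common` values while each keeps its own `adapter.individual` values, which is the sense in which the resulting models are client-tailored rather than a single global compromise.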

Progressive Relation Learning for Group Activity Recognition

Aug 08, 2019

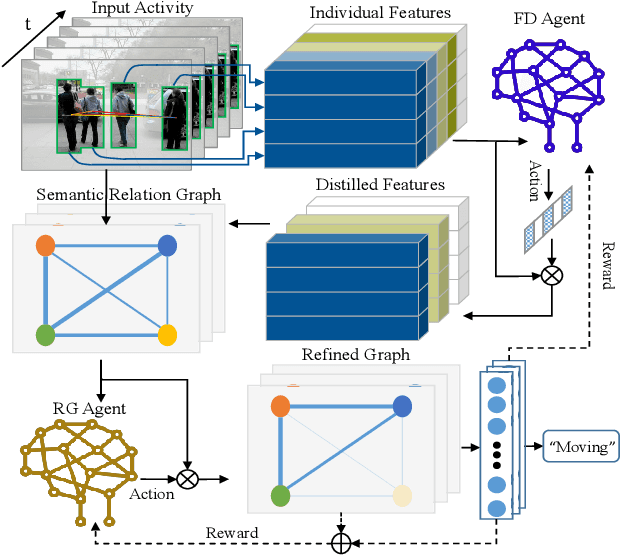

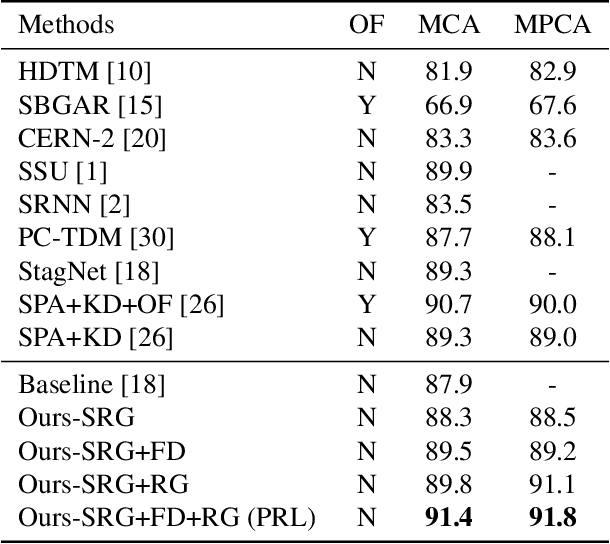

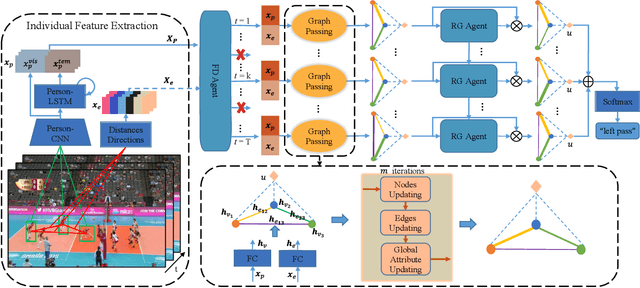

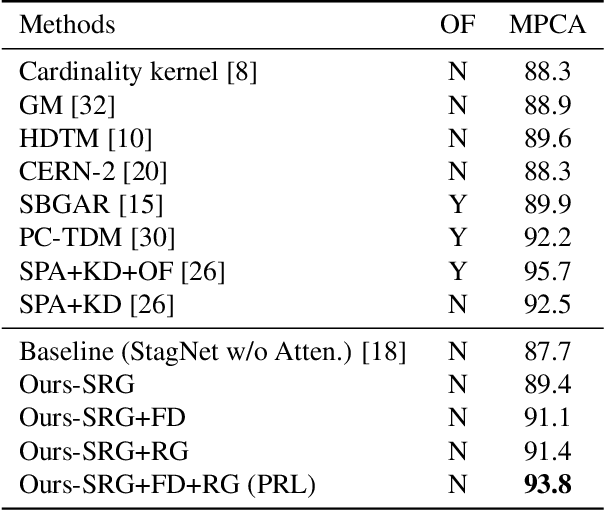

Abstract:Group activities usually involve spatio-temporal dynamics among many interactive individuals, while only a few participants at several key frames essentially define the activity. Therefore, effectively modeling the group-relevant and suppressing the irrelevant actions (and interactions) are vital for group activity recognition. In this paper, we propose a novel method based on deep reinforcement learning to progressively refine the low-level features and high-level relations of group activities. Firstly, we construct a semantic relation graph (SRG) to explicitly model the relations among persons. Then, two agents adopting policy according to two Markov decision processes are applied to progressively refine the SRG. Specifically, one feature-distilling (FD) agent in the discrete action space refines the low-level spatio-temporal features by distilling the most informative frames. Another relation-gating (RG) agent in continuous action space adjusts the high-level semantic graph to pay more attention to group-relevant relations. The SRG, FD agent, and RG agent are optimized alternately to mutually boost the performance of each other. Extensive experiments on two widely used benchmarks demonstrate the effectiveness and superiority of the proposed approach.
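As a rough illustration of gating a relation graph, the sketch below builds pairwise relation strengths among persons and scales each edge by a gate in [0, 1]. The abstract does not say how relation strengths are computed or how the RG agent produces its gates, so cosine similarity and the fixed gate matrix here are assumptions, and `relation_graph` and `apply_gates` are hypothetical names. No reinforcement learning is involved in this sketch.

```python
import math
from typing import List

Matrix = List[List[float]]


def cosine(a: List[float], b: List[float]) -> float:
    """Cosine similarity between two feature vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def relation_graph(features: List[List[float]]) -> Matrix:
    """Pairwise relation strengths among persons; self-relations are set to 0."""
    n = len(features)
    return [
        [0.0 if i == j else cosine(features[i], features[j]) for j in range(n)]
        for i in range(n)
    ]


def apply_gates(graph: Matrix, gates: Matrix) -> Matrix:
    """Scale each edge by its gate, clipping gates to [0, 1]."""
    return [
        [w * min(1.0, max(0.0, g)) for w, g in zip(graph_row, gate_row)]
        for graph_row, gate_row in zip(graph, gates)
    ]


# Three persons: the first two have identical features, the third is orthogonal.
features = [[1.0, 0.0], [1.0, 0.0], [0.0, 1.0]]
graph = relation_graph(features)
gates = [[0.5] * 3 for _ in range(3)]  # stand-in for gates an agent would output
gated = apply_gates(graph, gates)
```

In the paper's setting the gates would come from the relation-gating agent's continuous actions; here they are constants chosen only to show the effect of the gating on edge weights.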

Skeleton-Based Action Recognition with Synchronous Local and Non-local Spatio-temporal Learning and Frequency Attention

Nov 10, 2018

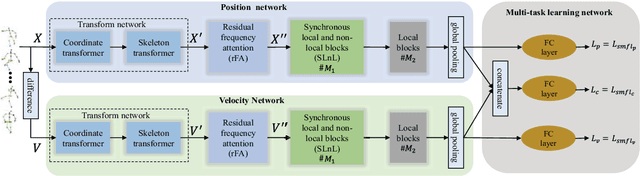

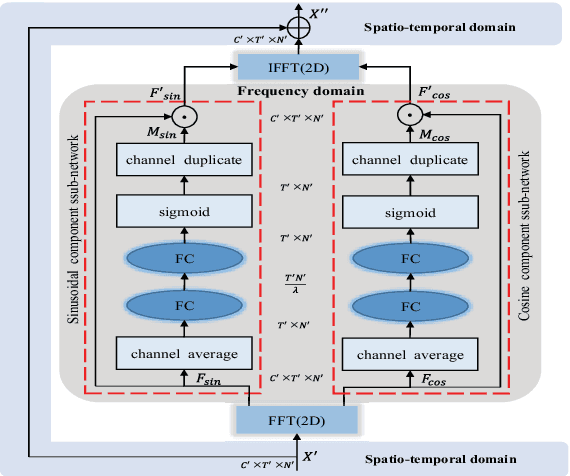

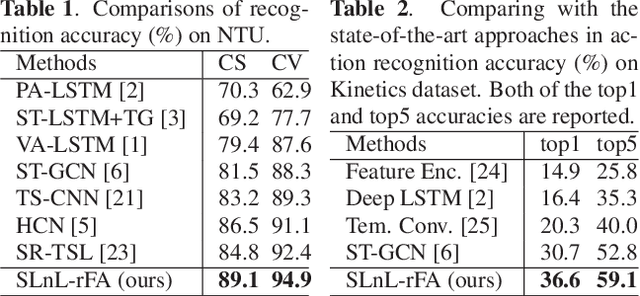

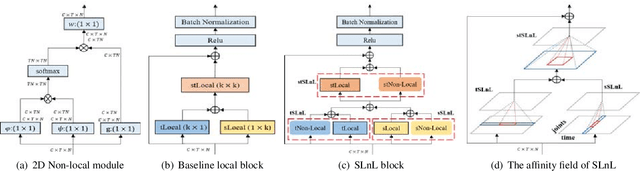

Abstract:Benefiting from its succinctness and robustness, skeleton-based human action recognition has recently attracted much attention. Most existing methods utilize local networks, such as recurrent networks, convolutional neural networks, and graph convolutional networks, to extract spatio-temporal dynamics hierarchically. As a consequence, the local and non-local dependencies, which respectively contain more details and semantics, are asynchronously captured at different levels of layers. Moreover, limited to the spatio-temporal domain, these methods ignore patterns in the frequency domain. To better extract information from multiple domains, we propose a residual frequency attention (rFA) to focus on discriminative patterns in the frequency domain, and a synchronous local and non-local (SLnL) block to simultaneously capture the details and semantics in the spatio-temporal domain. To optimize the whole process, we also propose a soft-margin focal loss (SMFL), which can automatically conduct adaptive data selection and encourage intrinsic margins in classifiers. Extensive experiments are performed on several large-scale action recognition datasets, and our approach significantly outperforms other state-of-the-art methods.
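For background on the loss, the sketch below implements the standard focal loss, which down-weights well-classified samples by a factor of (1 - p_t)^gamma. This is not the paper's soft-margin focal loss: the abstract does not give the SMFL formula, so the soft-margin term is omitted, and the function names and the default `gamma=2.0` are assumptions made for illustration.

```python
import math
from typing import List


def softmax(logits: List[float]) -> List[float]:
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def focal_loss(logits: List[float], target: int, gamma: float = 2.0) -> float:
    """Standard focal loss for one sample: -(1 - p_t)^gamma * log(p_t).

    With gamma = 0 this reduces to ordinary cross-entropy.
    """
    p_t = softmax(logits)[target]
    return -((1.0 - p_t) ** gamma) * math.log(p_t)


# An easy sample (confidently correct) versus a hard one (confidently wrong).
easy = focal_loss([5.0, 0.0, 0.0], target=0)
hard = focal_loss([0.0, 5.0, 0.0], target=0)
cross_entropy = focal_loss([1.0, 2.0, 3.0], target=2, gamma=0.0)
```

The easy sample contributes far less to the loss than the hard one, which is the kind of adaptive data selection the abstract attributes to SMFL; the margin-encouraging part of SMFL is not shown.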
