Guangmang Cui

Dual-Domain Perspective on Degradation-Aware Fusion: A VLM-Guided Robust Infrared and Visible Image Fusion Framework

Sep 05, 2025

Abstract:Most existing infrared-visible image fusion (IVIF) methods assume high-quality inputs, and therefore struggle to handle dual-source degraded scenarios, typically requiring manual selection and sequential application of multiple pre-enhancement steps. This decoupled pre-enhancement-to-fusion pipeline inevitably leads to error accumulation and performance degradation. To overcome these limitations, we propose Guided Dual-Domain Fusion (GD^2Fusion), a novel framework that synergistically integrates vision-language models (VLMs) for degradation perception with dual-domain (frequency/spatial) joint optimization. Concretely, the designed Guided Frequency Modality-Specific Extraction (GFMSE) module performs frequency-domain degradation perception and suppression and discriminatively extracts fusion-relevant sub-band features. Meanwhile, the Guided Spatial Modality-Aggregated Fusion (GSMAF) module carries out cross-modal degradation filtering and adaptive multi-source feature aggregation in the spatial domain to enhance modality complementarity and structural consistency. Extensive qualitative and quantitative experiments demonstrate that GD^2Fusion achieves superior fusion performance compared with existing algorithms and strategies in dual-source degraded scenarios. The code will be publicly released after acceptance of this paper.
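As a rough illustration of what "sub-band" separation in the frequency domain means, the sketch below splits a 1D signal into low- and high-frequency parts with a naive discrete Fourier transform. It is not the GFMSE module, whose design the abstract does not specify; the function names and the `cutoff` parameter are hypothetical, and a real fusion network would operate on 2D feature maps with learned filters.

```python
import cmath


def dft(x):
    """Naive O(n^2) discrete Fourier transform."""
    n = len(x)
    return [
        sum(x[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n))
        for k in range(n)
    ]


def idft(spectrum):
    """Inverse of dft()."""
    n = len(spectrum)
    return [
        sum(spectrum[k] * cmath.exp(2j * cmath.pi * k * t / n) for k in range(n)) / n
        for t in range(n)
    ]


def split_bands(signal, cutoff):
    """Split a real signal into low- and high-frequency components.

    `cutoff` (hypothetical parameter) is the highest frequency index kept in
    the low band. Bin k and bin n - k carry the same frequency, so the mask
    is symmetric and both outputs stay real-valued.
    """
    n = len(signal)
    spectrum = dft(signal)
    low_spec = [c if min(k, n - k) <= cutoff else 0 for k, c in enumerate(spectrum)]
    high_spec = [c - l for c, l in zip(spectrum, low_spec)]
    low = [v.real for v in idft(low_spec)]
    high = [v.real for v in idft(high_spec)]
    return low, high


signal = [1.0, 2.0, 4.0, 3.0, 0.0, 5.0, 2.0, 7.0]
low, high = split_bands(signal, cutoff=1)
```

The two bands sum back to the original signal, so each can be processed separately (for example, suppressing noise in one band) before recombination.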

FSATFusion: Frequency-Spatial Attention Transformer for Infrared and Visible Image Fusion

Jun 12, 2025

Abstract:Infrared and visible image fusion (IVIF) is receiving increasing attention from both the research community and industry due to its excellent results in downstream applications. Existing deep learning approaches often utilize convolutional neural networks to extract image features. However, the inherently limited capacity of convolution operations to capture global context can lead to information loss, thereby restricting fusion performance. To address this limitation, we propose an end-to-end fusion network named the Frequency-Spatial Attention Transformer Fusion Network (FSATFusion). FSATFusion contains a frequency-spatial attention Transformer (FSAT) module designed to effectively capture discriminative features from source images. This FSAT module includes a frequency-spatial attention mechanism (FSAM) capable of extracting significant features from feature maps. Additionally, we propose an improved Transformer module (ITM) to enhance the vanilla Transformer's ability to extract global context information. We conducted both qualitative and quantitative comparative experiments, demonstrating the superior fusion quality and efficiency of FSATFusion compared to other state-of-the-art methods. Furthermore, our network was tested on two additional tasks without any modifications to verify the excellent generalization capability of FSATFusion. Finally, the object detection experiment demonstrated the superiority of FSATFusion in downstream visual tasks. Our code is available at https://github.com/Lmmh058/FSATFusion.

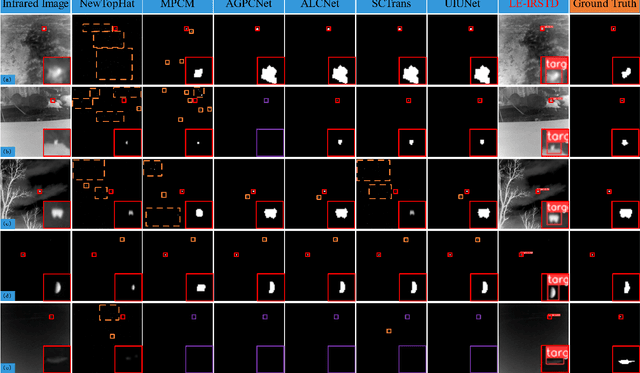

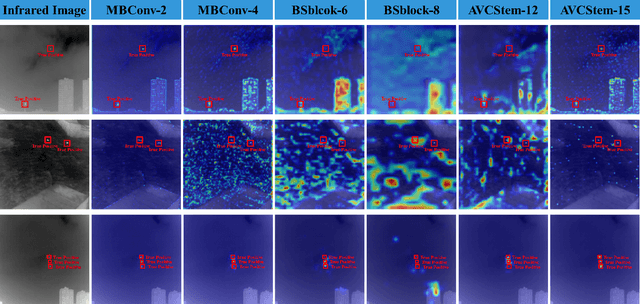

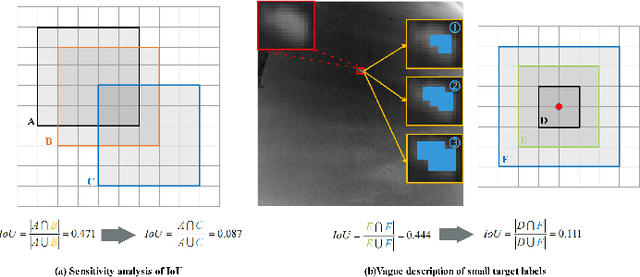

Make Both Ends Meet: A Synergistic Optimization Infrared Small Target Detection with Streamlined Computational Overhead

Apr 30, 2025

Abstract:Infrared small target detection (IRSTD) is widely recognized as a challenging task due to the inherent limitations of infrared imaging, including low signal-to-noise ratios, lack of texture details, and complex background interference. While most existing methods model IRSTD as a semantic segmentation task, they suffer from two critical drawbacks: (1) blurred target boundaries caused by long-distance imaging dispersion; and (2) excessive computational overhead due to indiscriminate feature stacking. To address these issues, we propose the Lightweight Efficiency Infrared Small Target Detection (LE-IRSTD), a lightweight and efficient framework based on YOLOv8n, with the following key innovations. Firstly, we identify that the multiple bottleneck structures within the C2f component of the YOLOv8n backbone contribute to an increased computational burden. Therefore, we implement the Mobile Inverted Bottleneck Convolution block (MBConvblock) and Bottleneck Structure block (BSblock) in the backbone, effectively balancing the trade-off between computational efficiency and the extraction of deep semantic information. Secondly, we introduce the Attention-based Variable Convolution Stem (AVCStem) structure, substituting the final convolution with Variable Kernel Convolution (VKConv), which allows for adaptive convolutional kernels that can transform into various shapes, adapting the receptive field to the targets being extracted. Finally, we employ Global Shuffle Convolution (GSConv) to shuffle the channel dimension features obtained from different convolutional approaches, thereby enhancing the robustness and generalization capabilities of our method. Experimental results demonstrate that our LE-IRSTD method achieves compelling results in both accuracy and lightweight performance, outperforming several state-of-the-art deep learning methods.
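The abstract does not define how GSConv shuffles channels. As a minimal sketch, assuming the generic ShuffleNet-style channel shuffle, the code below interleaves channels produced by two branches so that later layers see features from both. The function `channel_shuffle` and the channel labels are hypothetical and stand in for real feature maps.

```python
def channel_shuffle(channels, groups):
    """Interleave channels across groups (generic channel shuffle).

    `channels` is a flat list in which each consecutive block of
    len(channels) // groups entries came from one branch. Conceptually this
    reshapes to (groups, per_group), transposes, and flattens again.
    """
    n = len(channels)
    if groups <= 0 or n % groups != 0:
        raise ValueError("number of channels must be divisible by groups")
    per_group = n // groups
    return [
        channels[g * per_group + i]
        for i in range(per_group)
        for g in range(groups)
    ]


# Three channels from a hypothetical branch "a", three from branch "b".
mixed = channel_shuffle(["a0", "a1", "a2", "b0", "b1", "b2"], groups=2)
print(mixed)  # ['a0', 'b0', 'a1', 'b1', 'a2', 'b2']
```

The shuffle is a pure permutation, so it adds no parameters and almost no compute, which is consistent with the lightweight goal stated in the abstract.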

DAAF:Degradation-Aware Adaptive Fusion Framework for Robust Infrared and Visible Images Fusion

Apr 15, 2025

Abstract:Existing infrared and visible image fusion (IVIF) algorithms often prioritize high-quality images, neglecting image degradation such as low light and noise, which limits their practical potential. This paper proposes Degradation-Aware Adaptive image Fusion (DAAF), which achieves unified modeling of adaptive degradation optimization and image fusion. Specifically, DAAF comprises an auxiliary Adaptive Degradation Optimization Network (ADON) and a Feature Interactive Local-Global Fusion (FILGF) Network. Firstly, ADON includes infrared and visible-light branches. Within the infrared branch, frequency-domain feature decomposition and extraction are employed to isolate Gaussian and stripe noise. In the visible-light branch, Retinex decomposition is applied to extract illumination and reflectance components, enabling complementary enhancement of detail and illumination distribution. Subsequently, FILGF performs interactive multi-scale local-global feature fusion. Local feature fusion consists of intra-inter model feature complement, while global feature fusion is achieved through interactive cross-model attention. Extensive experiments have shown that DAAF outperforms current IVIF algorithms in normal and complex degradation scenarios.
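Retinex theory models an image as the element-wise product of reflectance and illumination, I = R * L. The sketch below is a classical hand-crafted approximation, not the learned decomposition in ADON: it assumes illumination varies smoothly, estimates it with a local mean, and recovers reflectance by division. The function names, `radius`, and `eps` are illustrative assumptions.

```python
def box_blur(img, radius):
    """Local mean over a (2*radius+1)^2 window, clipped at the borders."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            vals = [
                img[j][i]
                for j in range(max(0, y - radius), min(h, y + radius + 1))
                for i in range(max(0, x - radius), min(w, x + radius + 1))
            ]
            out[y][x] = sum(vals) / len(vals)
    return out


def retinex_decompose(img, radius=1, eps=1e-6):
    """Return (reflectance, illumination) such that img ~= R * (L + eps).

    Illumination is approximated by a smoothed copy of the image;
    reflectance is what remains after dividing it out.
    """
    illumination = box_blur(img, radius)
    reflectance = [
        [img[y][x] / (illumination[y][x] + eps) for x in range(len(img[0]))]
        for y in range(len(img))
    ]
    return reflectance, illumination


# A dim 3x3 grayscale patch with one brighter detail in the centre.
patch = [[0.10, 0.10, 0.10],
         [0.10, 0.30, 0.10],
         [0.10, 0.10, 0.10]]
reflectance, illumination = retinex_decompose(patch)
```

Once the two components are separated, a low-light enhancer can brighten the illumination map while leaving the reflectance, which carries the detail, largely untouched.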

LAM-YOLO: Drones-based Small Object Detection on Lighting-Occlusion Attention Mechanism YOLO

Nov 01, 2024

Abstract:Drone-based target detection presents inherent challenges, such as the high density and overlap of targets in drone-based images, as well as the blurriness of targets under varying lighting conditions, which complicates identification. Traditional methods often struggle to recognize numerous densely packed small targets against complex backgrounds. To address these challenges, we propose LAM-YOLO, an object detection model specifically designed for drone-based scenarios. First, we introduce a light-occlusion attention mechanism to enhance the visibility of small targets under different lighting conditions. Meanwhile, we incorporate Involution modules to improve interaction among feature layers. Second, we utilize an improved SIB-IoU as the regression loss function to accelerate model convergence and enhance localization accuracy. Finally, we implement a novel detection strategy that introduces two auxiliary detection heads for identifying smaller-scale targets. Our quantitative results demonstrate that LAM-YOLO outperforms methods such as Faster R-CNN, YOLOv9, and YOLOv10 in terms of mAP@0.5 and mAP@0.5:0.95 on the VisDrone2019 public dataset. Compared to the original YOLOv8, the average precision increases by 7.1%. Additionally, the proposed SIB-IoU loss function shows faster convergence during training and improved average precision over the traditional loss function.
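The abstract does not give the SIB-IoU formula, so the sketch below shows only the plain intersection-over-union that IoU-based regression losses build on, plus the simplest such loss, 1 - IoU. Boxes are assumed to be axis-aligned `(x1, y1, x2, y2)` tuples; the function names are illustrative.

```python
def iou(box_a, box_b):
    """Intersection over union of two axis-aligned boxes (x1, y1, x2, y2)."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    # Clamp at zero so disjoint boxes give an empty intersection.
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def iou_loss(pred, target):
    """Baseline IoU regression loss: 0 for a perfect match, 1 for no overlap."""
    return 1.0 - iou(pred, target)


pred, target = (0, 0, 2, 2), (1, 1, 3, 3)
print(iou(pred, target))  # 1/7, since the overlap is 1 and the union is 7
```

The same quantity underlies the reported metrics: under mAP@0.5 a detection counts as correct when its IoU with a ground-truth box is at least 0.5, and mAP@0.5:0.95 averages over thresholds from 0.5 to 0.95. For small targets a shift of a few pixels changes IoU sharply, which is one reason refined IoU losses are studied for this setting.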
