Human Interpretation and Exploitation of Self-attention Patterns in Transformers: A Case Study in Extractive Summarization

Dec 10, 2021 · Raymond Li, Wen Xiao, Lanjun Wang, Giuseppe Carenini

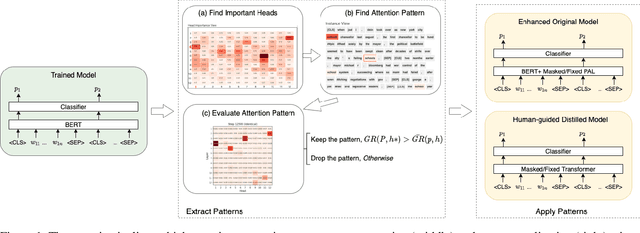

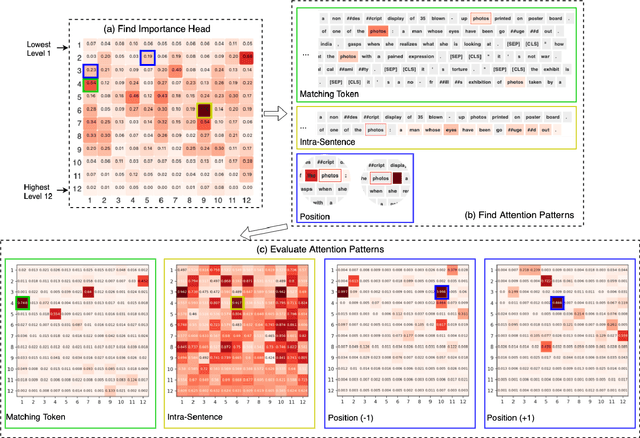

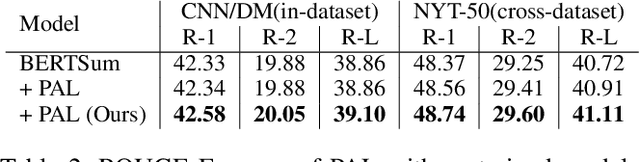

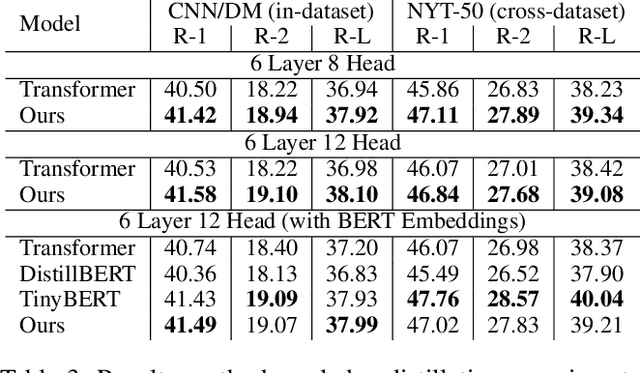

The transformer multi-head self-attention mechanism has been thoroughly investigated recently. On one hand, researchers are interested in understanding why and how transformers work. On the other hand, they propose new attention augmentation methods to make transformers more accurate, efficient and interpretable. In this paper, we synergize these two lines of research in a human-in-the-loop pipeline to first find important task-specific attention patterns. Then those patterns are applied, not only to the original model, but also to smaller models, as a human-guided knowledge distillation process. The benefits of our pipeline are demonstrated in a case study with the extractive summarization task. After finding three meaningful attention patterns in the popular BERTSum model, experiments indicate that when we inject such patterns, both the original and the smaller model show improvements in performance and arguably interpretability.

PRIMER: Pyramid-based Masked Sentence Pre-training for Multi-document Summarization

Oct 16, 2021 · Wen Xiao, Iz Beltagy, Giuseppe Carenini, Arman Cohan

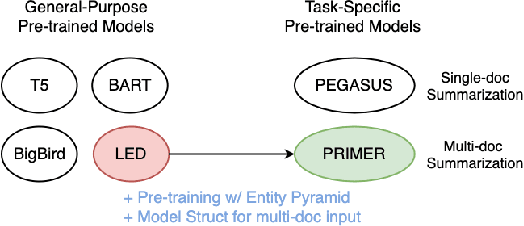

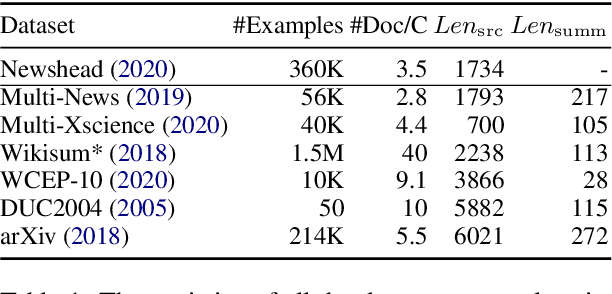

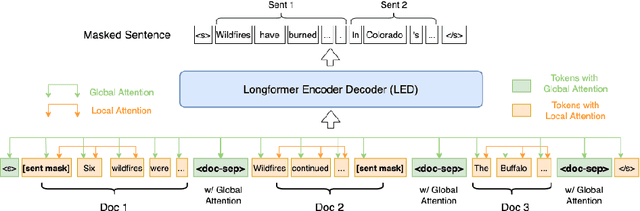

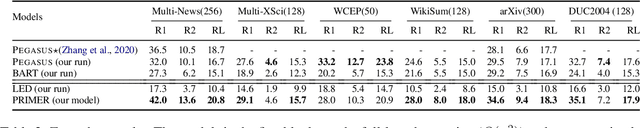

Recently proposed pre-trained generation models achieve strong performance on single-document summarization benchmarks. However, most of them are pre-trained with general-purpose objectives and mainly aim to process single-document inputs. In this paper, we propose PRIMER, a pre-trained model for multi-document representation with a focus on summarization that reduces the need for dataset-specific architectures and large amounts of labeled fine-tuning data. Specifically, we adopt the Longformer architecture with proper input transformation and global attention to fit multi-document inputs, and we use the Gap Sentence Generation objective with a new strategy for selecting salient sentences for the whole cluster, called Entity Pyramid, to teach the model to select and aggregate information across a cluster of related documents. With extensive experiments on 6 multi-document summarization datasets from 3 different domains in the zero-shot, few-shot, and fully supervised settings, our model, PRIMER, outperforms current state-of-the-art models in most of these settings by large margins. Code and pre-trained models are released at https://github.com/allenai/PRIMER
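
The idea of picking salient sentences for a whole cluster can be illustrated with a toy sketch. This is not the Entity Pyramid procedure from the paper: the capitalized-token "entity" extractor `toy_entities`, the scoring rule, and the example cluster are all assumptions made for illustration. The sketch only shows the general intuition that sentences mentioning entities shared across many documents are good candidates to mask and regenerate.

```python
import re
from collections import Counter


def toy_entities(sentence):
    """Hypothetical stand-in for an NER system: capitalized tokens,
    skipping the first token of the sentence."""
    tokens = re.findall(r"[A-Za-z]+", sentence)
    return {t for t in tokens[1:] if t[0].isupper()}


def entity_document_frequency(cluster):
    """Count, for each entity, how many documents in the cluster mention it."""
    df = Counter()
    for doc in cluster:
        doc_entities = set()
        for sentence in doc:
            doc_entities |= toy_entities(sentence)
        df.update(doc_entities)
    return df


def select_salient(cluster, k=1):
    """Return (doc_index, sentence_index) pairs for the k sentences whose
    entities are shared by the most documents (assumed scoring rule)."""
    df = entity_document_frequency(cluster)
    scored = []
    for d, doc in enumerate(cluster):
        for s, sentence in enumerate(doc):
            score = sum(df[e] for e in toy_entities(sentence))
            scored.append((score, d, s))
    # Highest score first; ties broken by document and sentence order.
    scored.sort(key=lambda t: (-t[0], t[1], t[2]))
    return [(d, s) for _, d, s in scored[:k]]


# A made-up two-document cluster; each document is a list of sentences.
cluster = [
    ["The storm hit Florida on Monday.", "Officials closed several roads."],
    ["Heavy rain fell across Florida and Georgia.", "Schools reopened later."],
]
selected = select_salient(cluster, k=2)
```

In a Gap Sentence Generation setup, the selected sentences would be masked in the input and used as the generation target; the released code at the URL above is the reference for the actual selection strategy.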

T3-Vis: a visual analytic framework for Training and fine-Tuning Transformers in NLP

Aug 31, 2021 · Raymond Li, Wen Xiao, Lanjun Wang, Hyeju Jang, Giuseppe Carenini

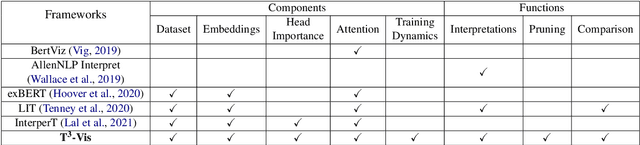

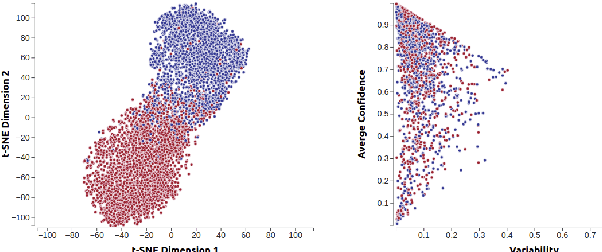

Transformers are the dominant architecture in NLP, but training and fine-tuning them is still very challenging. In this paper, we present the design and implementation of a visual analytic framework that assists researchers in this process by providing them with valuable insights about the model's intrinsic properties and behaviours. Our framework offers an intuitive overview that allows the user to explore different facets of the model (e.g., hidden states, attention) through interactive visualization, and provides a suite of built-in algorithms that compute the importance of model components and different parts of the input sequence. Case studies and feedback from a user focus group indicate that the framework is useful, and suggest several improvements.

ConVIScope: Visual Analytics for Exploring Patient Conversations

Aug 30, 2021 · Raymond Li, Enamul Hoque, Giuseppe Carenini, Richard Lester, Raymond Chau

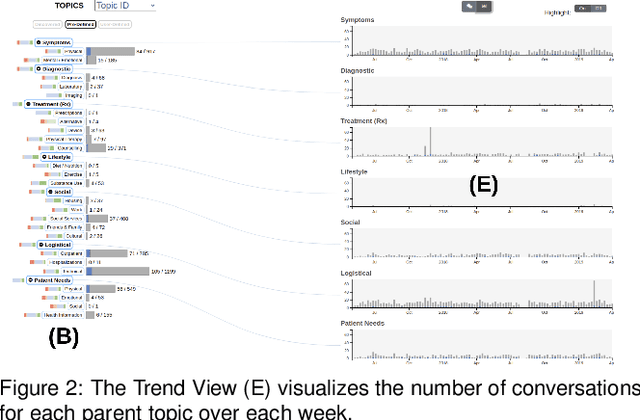

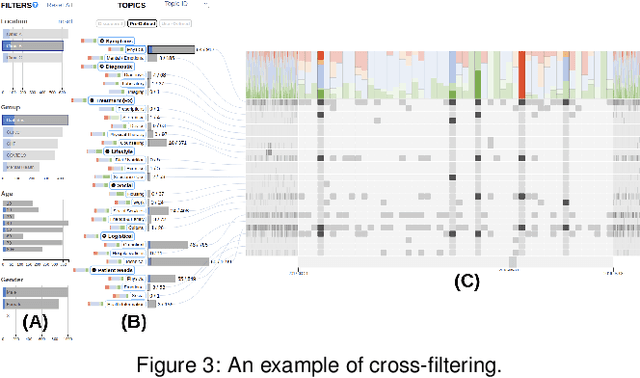

The proliferation of text messaging for mobile health is generating a large number of patient-doctor conversations that can be extremely valuable to health care professionals. We present ConVIScope, a visual text analytic system that tightly integrates interactive visualization with natural language processing for analyzing patient-doctor conversations. ConVIScope was developed in collaboration with healthcare professionals following a user-centered iterative design. Case studies with six domain experts suggest the potential utility of ConVIScope and reveal lessons for further developments.

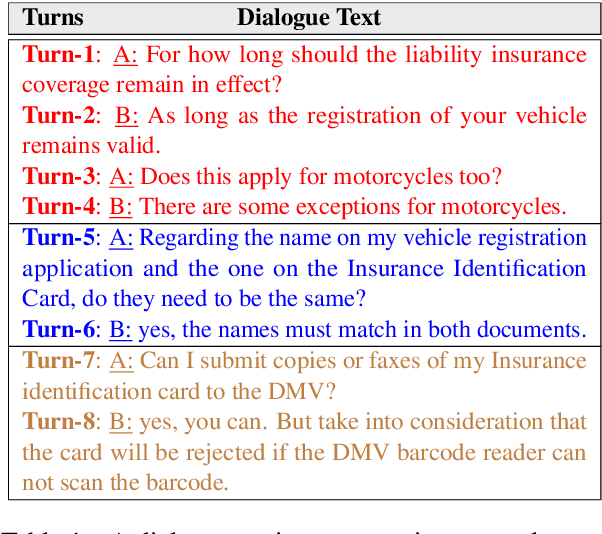

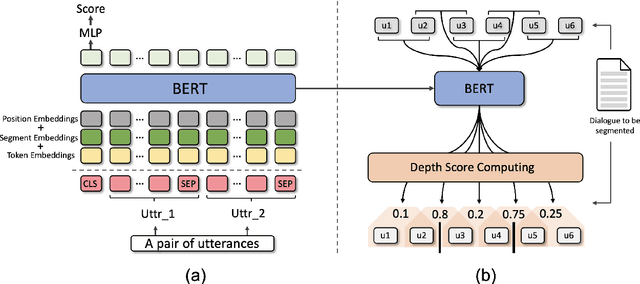

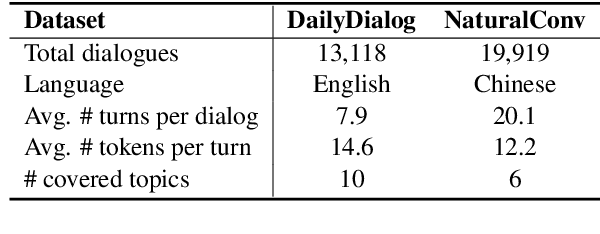

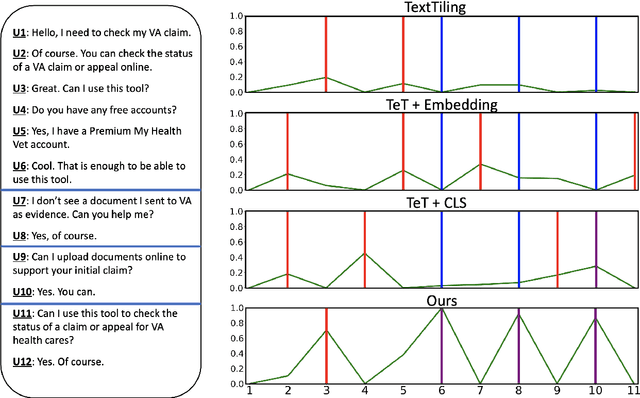

Improving Unsupervised Dialogue Topic Segmentation with Utterance-Pair Coherence Scoring

Jun 12, 2021 · Linzi Xing, Giuseppe Carenini

Dialogue topic segmentation is critical in several dialogue modeling problems. However, popular unsupervised approaches only exploit surface features in assessing topical coherence among utterances. In this work, we address this limitation by leveraging supervisory signals from the utterance-pair coherence scoring task. First, we present a simple yet effective strategy to generate a training corpus for utterance-pair coherence scoring. Then, we train a BERT-based neural utterance-pair coherence model on the resulting corpus. Finally, this model is used to measure the topical relevance between utterances, which serves as the basis for segmentation inference. Experiments on three public datasets in English and Chinese demonstrate that our proposal outperforms the state-of-the-art baselines.
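
How a pairwise coherence score can drive segmentation is easy to sketch. The example below is a minimal illustration under stated assumptions, not the paper's method: `jaccard_coherence` is a word-overlap placeholder standing in for the trained BERT-based scorer, and the fixed `threshold` rule is an assumed inference procedure chosen for simplicity.

```python
def jaccard_coherence(a, b):
    """Placeholder scorer: word-overlap (Jaccard) similarity in [0, 1].
    A trained utterance-pair coherence model would be plugged in here."""
    words_a, words_b = set(a.lower().split()), set(b.lower().split())
    if not words_a or not words_b:
        return 0.0
    return len(words_a & words_b) / len(words_a | words_b)


def segment(utterances, scorer=jaccard_coherence, threshold=0.1):
    """Start a new topic segment wherever the coherence score between
    adjacent utterances drops below `threshold` (assumed rule)."""
    if not utterances:
        return []
    segments = [[utterances[0]]]
    for prev, curr in zip(utterances, utterances[1:]):
        if scorer(prev, curr) < threshold:
            segments.append([curr])      # topic boundary before `curr`
        else:
            segments[-1].append(curr)    # same topic continues
    return segments


# Made-up dialogue with one topic shift after the second utterance.
dialogue = [
    "i need to book a flight",
    "which flight do you want to book",
    "the weather is nice today",
    "yes the weather is great",
]
topics = segment(dialogue)
```

Because the scorer is passed in as a function, swapping the placeholder for a learned model only requires a callable that maps two utterances to a number.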

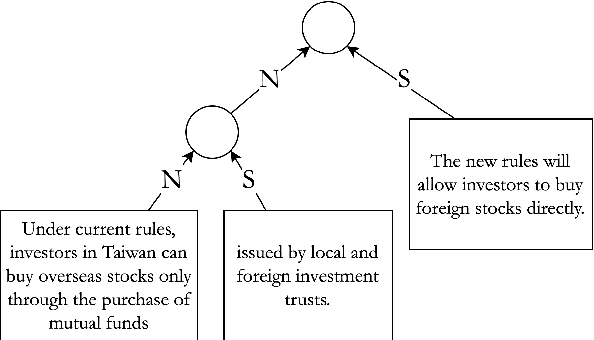

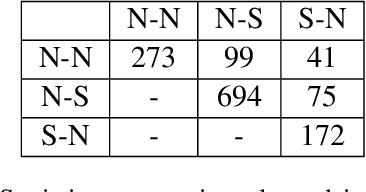

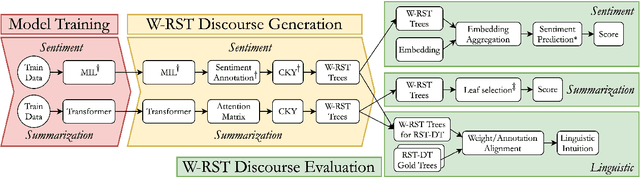

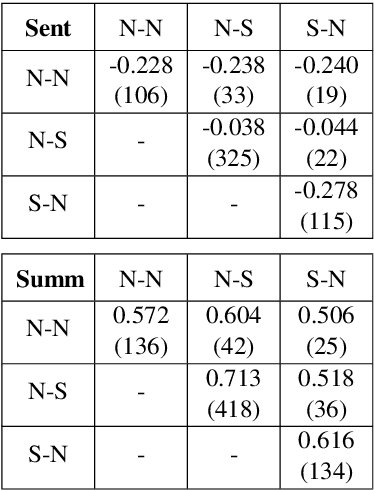

W-RST: Towards a Weighted RST-style Discourse Framework

Jun 04, 2021 · Patrick Huber, Wen Xiao, Giuseppe Carenini

Aiming for a better integration of data-driven and linguistically-inspired approaches, we explore whether RST Nuclearity, assigning a binary assessment of importance between text segments, can be replaced by automatically generated, real-valued scores, in what we call a Weighted-RST framework. In particular, we find that weighted discourse trees from auxiliary tasks can benefit key NLP downstream applications, compared to nuclearity-centered approaches. We further show that real-valued importance distributions partially and interestingly align with the assessment and uncertainty of human annotators.

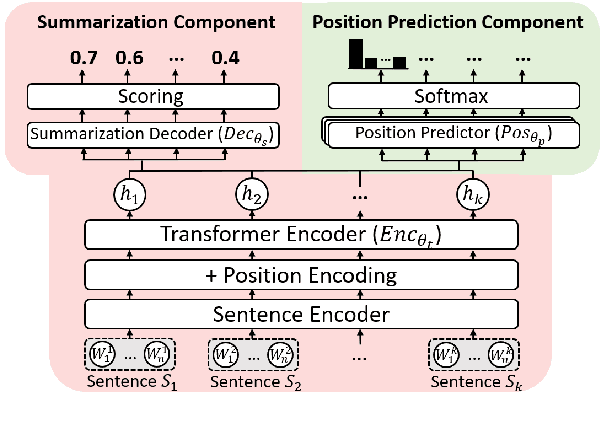

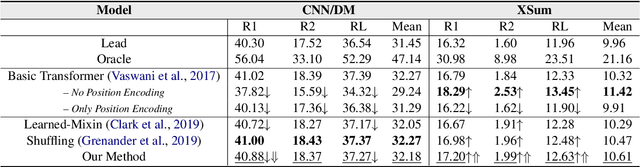

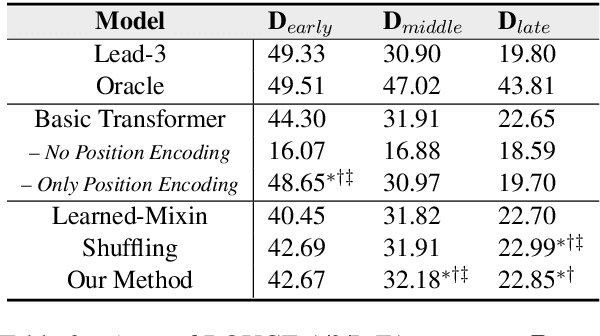

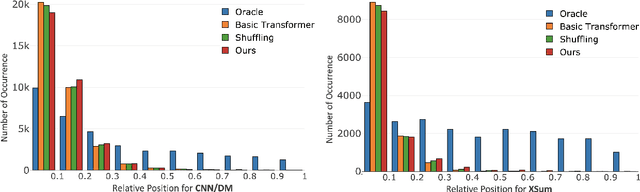

Demoting the Lead Bias in News Summarization via Alternating Adversarial Learning

May 29, 2021 · Linzi Xing, Wen Xiao, Giuseppe Carenini

In news articles the lead bias is a common phenomenon that usually dominates the learning signals for neural extractive summarizers, severely limiting their performance on data with different or even no bias. In this paper, we introduce a novel technique to demote lead bias and make the summarizer focus more on the content semantics. Experiments on two news corpora with different degrees of lead bias show that our method can effectively demote the model's learned lead bias and improve its generality on out-of-distribution data, with little to no performance loss on in-distribution data.

Predicting Discourse Trees from Transformer-based Neural Summarizers

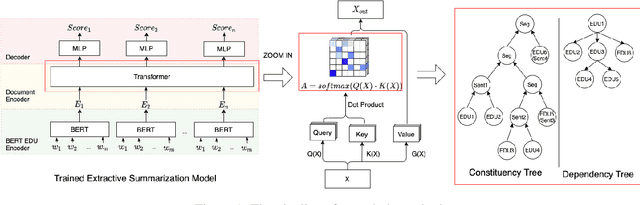

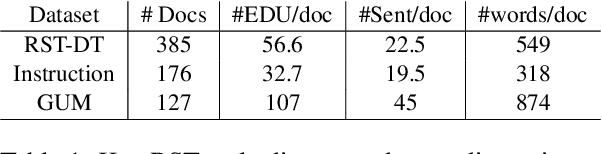

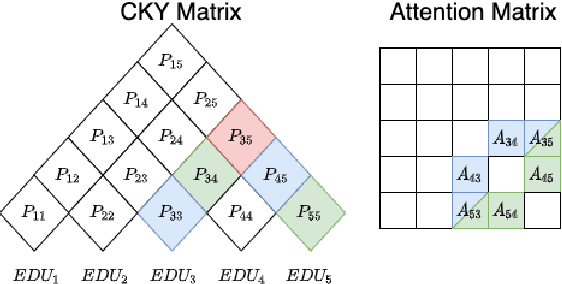

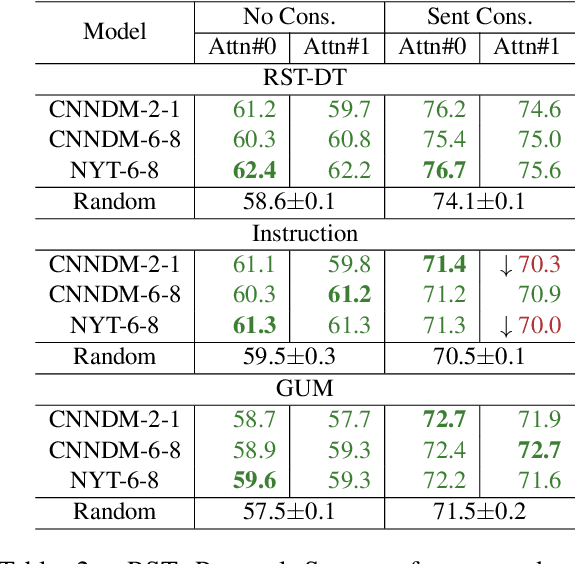

Apr 14, 2021 · Wen Xiao, Patrick Huber, Giuseppe Carenini

Previous work indicates that discourse information benefits summarization. In this paper, we explore whether this synergy between discourse and summarization is bidirectional, by inferring document-level discourse trees from pre-trained neural summarizers. In particular, we generate unlabeled RST-style discourse trees from the self-attention matrices of the transformer model. Experiments across models and datasets reveal that the summarizer learns both dependency- and constituency-style discourse information, which is typically encoded in a single head and covers long- and short-distance discourse dependencies. Overall, the experimental results suggest that the learned discourse information is general and transferable across domains.
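
One simple way to turn a self-attention matrix into an unlabeled binary tree is a greedy top-down split. The sketch below is an illustration only: the abstract does not state which parsing algorithm is used, so the splitting criterion, the function names, and the toy matrix are assumptions. Each span is split where the average attention between the two resulting halves is lowest.

```python
def cross_attention(attn, lo, k, hi):
    """Average attention (both directions) between units [lo, k) and [k, hi)."""
    total = 0.0
    for i in range(lo, k):
        for j in range(k, hi):
            total += attn[i][j] + attn[j][i]
    return total / (2 * (k - lo) * (hi - k))


def build_tree(attn, lo=0, hi=None):
    """Recursively split the span [lo, hi) at the weakest cross-attention
    point; leaves are unit indices, internal nodes are (left, right) pairs."""
    if hi is None:
        hi = len(attn)
    if hi - lo == 1:
        return lo
    split = min(range(lo + 1, hi),
                key=lambda k: cross_attention(attn, lo, k, hi))
    return (build_tree(attn, lo, split), build_tree(attn, split, hi))


# Toy 4x4 attention matrix: units 0 and 1 attend to each other strongly,
# as do units 2 and 3 (values are made up).
attn = [
    [0.1, 0.7, 0.1, 0.1],
    [0.7, 0.1, 0.1, 0.1],
    [0.1, 0.1, 0.1, 0.7],
    [0.1, 0.1, 0.7, 0.1],
]
tree = build_tree(attn)
```

With a real model, `attn` would be one head's attention matrix aggregated to the level of discourse units; a chart-based parser could replace the greedy split to search over all trees.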

Unsupervised Learning of Discourse Structures using a Tree Autoencoder

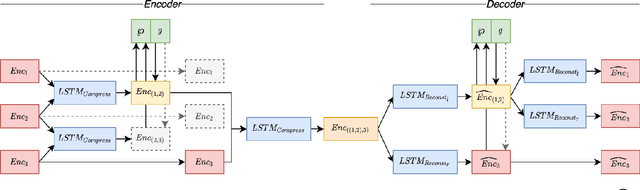

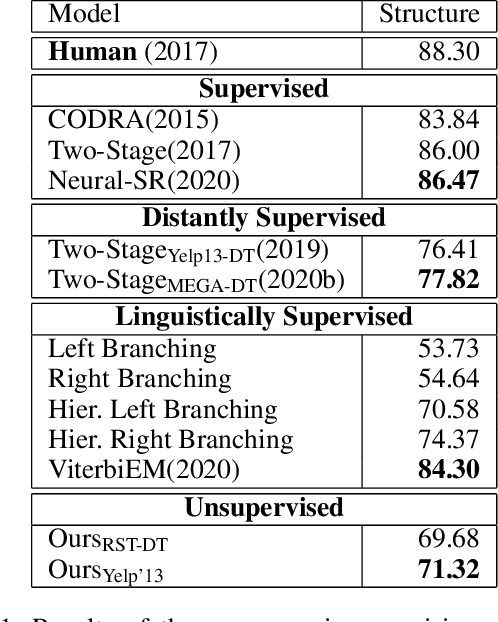

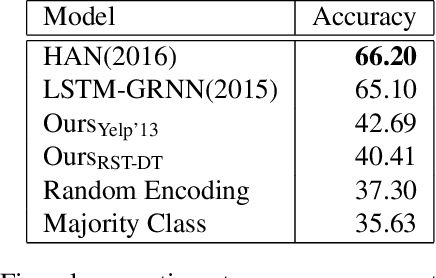

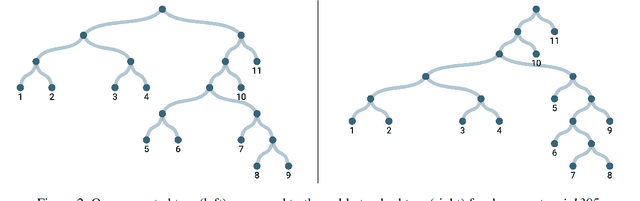

Dec 17, 2020 · Patrick Huber, Giuseppe Carenini

Discourse information, as postulated by popular discourse theories such as RST and PDTB, has been shown to improve an increasing number of downstream NLP tasks, showing positive effects and synergies of discourse with important real-world applications. While methods for incorporating discourse become more and more sophisticated, the growing need for robust and general discourse structures has not been sufficiently met by current discourse parsers, which are usually trained on small-scale datasets in a strictly limited number of domains. This makes the prediction for arbitrary tasks noisy and unreliable. The resulting lack of high-quality, high-quantity discourse trees poses a severe limitation to further progress. In order to alleviate this shortcoming, we propose a new strategy to generate tree structures in a task-agnostic, unsupervised fashion by extending a latent tree induction framework with an auto-encoding objective. The proposed approach can be applied to any tree-structured objective, such as syntactic parsing, discourse parsing and others. However, due to the especially difficult annotation process to generate discourse trees, we initially develop a method to generate larger and more diverse discourse treebanks. In this paper, we infer general tree structures of natural text in multiple domains, showing promising results on a diverse set of tasks.

Do We Really Need That Many Parameters In Transformer For Extractive Summarization? Discourse Can Help !

Dec 03, 2020 · Wen Xiao, Patrick Huber, Giuseppe Carenini

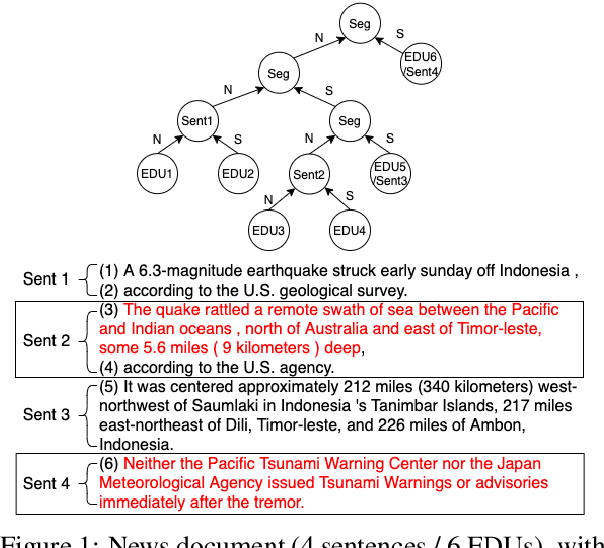

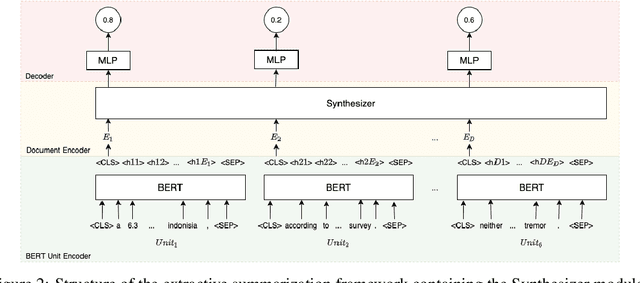

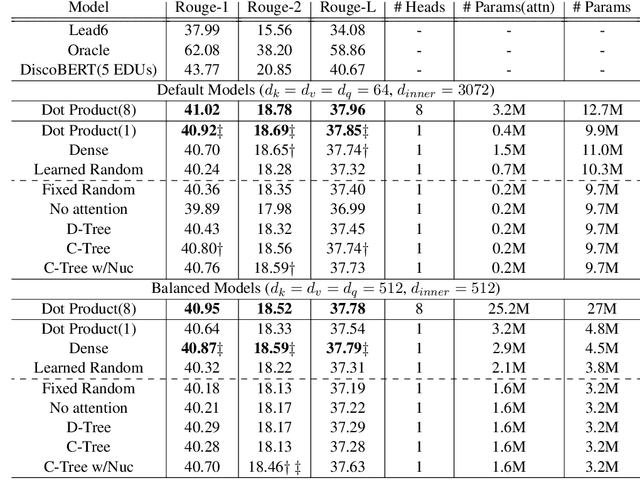

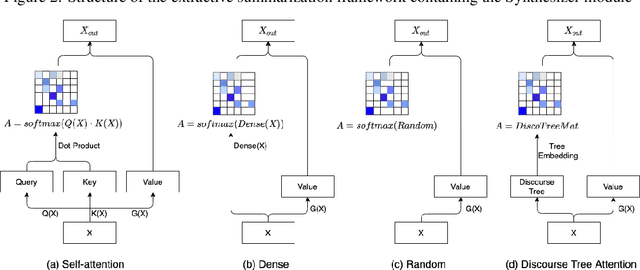

The multi-head self-attention of popular transformer models is widely used within Natural Language Processing (NLP), including for the task of extractive summarization. With the goal of analyzing and pruning the parameter-heavy self-attention mechanism, multiple approaches propose more parameter-light self-attention alternatives. In this paper, we present a novel parameter-lean self-attention mechanism using discourse priors. Our new tree self-attention is based on document-level discourse information, extending the recently proposed "Synthesizer" framework with another lightweight alternative. We show empirically that our tree self-attention approach achieves competitive ROUGE scores on the task of extractive summarization. When compared to the original single-head transformer model, the tree attention approach reaches similar performance at both the EDU and sentence level, despite the significant reduction of parameters in the attention component. We further significantly outperform the 8-head transformer model at the sentence level when applying a more balanced hyper-parameter setting, requiring an order of magnitude fewer parameters.
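
To see why attention built from a fixed prior needs fewer parameters, consider the following minimal sketch. It is not the paper's tree self-attention: the `prior` matrix is a hypothetical stand-in for scores derived from a discourse tree, and the function names are assumptions. The point is only that the attention weights come from a precomputed matrix, so no learned query or key projections are involved.

```python
import math


def softmax(row):
    """Numerically stable softmax over one row of scores."""
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def fixed_prior_attention(prior, values):
    """Mix value vectors with weights softmax(prior[i]) for each position i.
    `prior` is a fixed n x n score matrix (assumed to come from a discourse
    tree); no query/key parameters are used to compute the weights."""
    weights = [softmax(row) for row in prior]
    dim = len(values[0])
    return [
        [sum(w[j] * values[j][d] for j in range(len(values)))
         for d in range(dim)]
        for w in weights
    ]


# With an all-zero prior every position attends uniformly, so each output
# row is the mean of the value vectors (toy numbers).
prior = [[0.0, 0.0], [0.0, 0.0]]
values = [[1.0, 0.0], [3.0, 2.0]]
output = fixed_prior_attention(prior, values)
```

In standard dot-product attention the score matrix is recomputed from learned projections of the input; here it is supplied from outside, which is where the parameter savings in this kind of design would come from.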
