Gilberto Berardinelli

Energy-Efficient Federated Learning with Relay-Assisted Aggregation in IIoT Networks

Dec 10, 2025

Abstract: This paper presents an energy-efficient transmission framework for federated learning (FL) in industrial Internet of Things (IIoT) environments with strict latency and energy constraints. Machinery subnetworks (SNs) collaboratively train a global model by uploading local updates to an edge server (ES), either directly or via neighboring SNs acting as decode-and-forward relays. To enhance communication efficiency, relays perform partial aggregation before forwarding the models to the ES, significantly reducing overhead and training latency. We analyze the convergence behavior of this relay-assisted FL scheme. To address the inherent energy efficiency (EE) challenges, we decompose the original non-convex optimization problem into sub-problems addressing computation and communication energy separately. An SN grouping algorithm categorizes devices into single-hop and two-hop transmitters based on latency minimization, followed by a relay selection mechanism. To improve FL reliability, we further maximize the number of SNs that meet the round-wise delay constraint, promoting broader participation and improved convergence stability under practical IIoT data distributions. Transmit power levels are then optimized to maximize EE, and a sequential parametric convex approximation (SPCA) method is proposed for joint configuration of system parameters. We further extend the EE formulation to the case of imperfect channel state information (ICSI). Simulation results demonstrate that the proposed framework significantly enhances convergence speed, reduces outage probability from $10^{-2}$ with single-hop transmission to $10^{-6}$, and achieves substantial energy savings, with the SPCA approach reducing energy consumption by at least 2x compared to unaggregated cooperation and by up to 6x compared to single-hop transmission.
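
The relay-side partial aggregation can be illustrated with a minimal sketch. It assumes FedAvg-style weighting by the number of local training samples (the abstract does not state the weighting rule), and the function names `weighted_average`, `relay_partial_aggregate` and `server_aggregate` are hypothetical. Under that assumption, a relay that forwards a single sample-weighted partial average together with its total sample count lets the ES reproduce the same global model it would obtain if every SN had uploaded individually, while the relay transmits one model instead of several.

```python
import numpy as np


def weighted_average(models, weights):
    """Sample-weighted average of flattened model vectors (FedAvg-style, assumed)."""
    w = np.asarray(weights, dtype=float)
    stacked = np.stack([np.asarray(m, dtype=float) for m in models])
    return (w[:, None] * stacked).sum(axis=0) / w.sum()


def relay_partial_aggregate(models, sample_counts):
    """Hypothetical relay step: merge the decoded updates into one vector.

    Returns the partial average and the total sample count, which the ES
    needs in order to weight the partial model correctly.
    """
    return weighted_average(models, sample_counts), float(sum(sample_counts))


def server_aggregate(direct, relayed):
    """Hypothetical ES step: combine single-hop uploads and relay partial aggregates.

    direct:  list of (model, n_samples) received directly from SNs
    relayed: list of (partial_model, total_samples) received from relays
    """
    entries = list(direct) + list(relayed)
    return weighted_average([m for m, _ in entries], [n for _, n in entries])


# Toy round: SN0 and SN1 upload directly, SN2 and SN3 go through one relay.
rng = np.random.default_rng(0)
local_models = [rng.normal(size=5) for _ in range(4)]
n_samples = [100, 50, 80, 70]

flat = weighted_average(local_models, n_samples)  # every SN uploads itself
partial = relay_partial_aggregate(local_models[2:], n_samples[2:])
direct = list(zip(local_models[:2], n_samples[:2]))
hierarchical = server_aggregate(direct, [partial])

print(np.allclose(flat, hierarchical))  # True
```

The equality relies on the relay also forwarding its total sample count; without it, the ES could not weight the partial model consistently with the directly received ones.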

Toward ISAC-empowered subnetworks: Cooperative localization and iterative node selection

Nov 15, 2025

Abstract: This paper tackles the sensing-communication trade-off in integrated sensing and communication (ISAC)-empowered subnetworks for mono-static target localization. We propose a low-complexity iterative node selection algorithm that exploits the spatial diversity of subnetwork deployments and dynamically refines the set of sensing subnetworks to maximize localization accuracy under tight resource constraints. Simulation results show that our method achieves sub-7 cm accuracy in additive white Gaussian noise (AWGN) channels within only three iterations, yielding over 97% improvement compared to the best-performing benchmark under the same sensing budget. We further demonstrate that increasing spatial diversity through additional antennas and subnetworks enhances sensing robustness, especially in fading channels. Finally, we quantify the sensing-communication trade-off, showing that reducing sensing iterations and the number of sensing subnetworks improves throughput at the cost of reduced localization precision.
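
The abstract does not specify the localization estimator. As a hedged illustration of how range estimates from a selected set of mono-static sensing subnetworks could be fused into a position, the sketch below uses standard linearized least-squares multilateration. It assumes each selected subnetwork reports a range to the target; the deployment, noise level and the function name `multilaterate` are hypothetical. The iterative node selection algorithm itself is not reproduced: selection simply determines which rows of `anchors` and `ranges` are passed in.

```python
import numpy as np


def multilaterate(anchors, ranges):
    """Linearized least-squares 2D position estimate from range measurements.

    anchors: (K, 2) positions of the selected sensing subnetworks, K >= 3
    ranges:  (K,) estimated distances from each subnetwork to the target
    """
    p = np.asarray(anchors, dtype=float)
    r = np.asarray(ranges, dtype=float)
    # Subtracting the first equation ||x - p_0||^2 = r_0^2 from the others
    # removes the quadratic ||x||^2 term and leaves a linear system A x = b.
    A = 2.0 * (p[1:] - p[0])
    b = np.sum(p[1:] ** 2, axis=1) - np.sum(p[0] ** 2) - r[1:] ** 2 + r[0] ** 2
    x, *_ = np.linalg.lstsq(A, b, rcond=None)
    return x


# Hypothetical deployment: four subnetworks at the corners of a 10 m x 10 m area.
anchors = np.array([[0.0, 0.0], [10.0, 0.0], [0.0, 10.0], [10.0, 10.0]])
target = np.array([3.0, 7.0])
true_ranges = np.linalg.norm(anchors - target, axis=1)

est_exact = multilaterate(anchors, true_ranges)

rng = np.random.default_rng(1)
noisy_ranges = true_ranges + rng.normal(scale=0.05, size=true_ranges.shape)
est_noisy = multilaterate(anchors, noisy_ranges)

print(est_exact, np.linalg.norm(est_noisy - target))
```

With noise-free ranges the estimate coincides with the target; with noisy ranges, the geometry of the selected subset determines how strongly range errors are amplified, which is one reason the choice of sensing subnetworks matters.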

AI-Assisted NLOS Sensing for RIS-Based Indoor Localization in Smart Factories

May 21, 2025

Abstract: In the era of Industry 4.0, precise indoor localization is vital for automation and efficiency in smart factories. Reconfigurable Intelligent Surfaces (RIS) are emerging as key enablers of joint sensing and communication in 6G networks. However, RIS-based systems face significant challenges under Non-Line-of-Sight (NLOS) and multipath propagation, particularly in localization scenarios, where detecting NLOS conditions is crucial not only for reliable results and increased connectivity but also for the safety of smart factory personnel. This study introduces an AI-assisted framework employing a Convolutional Neural Network (CNN) customized for accurate Line-of-Sight (LOS) and NLOS classification to enhance RIS-based localization, using measured, synthetic, mixed-measured, and mixed-synthetic experimental data, that is, original, augmented, slightly noisy, and highly noisy data, respectively. Validated on such data from three different environments, the proposed customized CNN (cCNN) model achieves 95.0% to 99.0% accuracy, outperforming standard pre-trained models such as Visual Geometry Group 16 (VGG-16), which reaches 85.5% to 88.0%. By addressing RIS limitations in NLOS scenarios, this framework offers scalable, high-precision localization for 6G-enabled smart factories.

Multi-User Beamforming with Deep Reinforcement Learning in Sensing-Aided Communication

May 09, 2025

Abstract: Mobile users are prone to beam failure due to beam drifting in millimeter wave (mmWave) communications. Sensing can help alleviate beam drifting through timely beam changes with low overhead, since it does not require user feedback. This work studies the problem of optimizing sensing-aided communication by dynamically managing the beams allocated to mobile users. A multi-beam scheme is introduced, which allocates multiple beams to users that need an update of their angle of departure (AoD) estimates and a single beam to users whose AoD estimates already satisfy the precision requirement. A deep reinforcement learning (DRL)-assisted method is developed to optimize the beam allocation policy, relying only on the sensing echoes. For comparison, a heuristic AoD-based method that uses an approximated Cramér-Rao lower bound (CRLB) for allocation is also presented. Neither method requires user feedback or prior information on the state evolution. Results show that the DRL-assisted method achieves considerably higher throughput than both the conventional beam sweeping method and the AoD-based method, and that it is robust to different user speeds.
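
A rough sketch of an AoD-based allocation rule in the spirit of the heuristic benchmark: users whose AoD uncertainty exceeds a precision target receive several beams, and the others a single beam. The abstract does not give the paper's CRLB approximation, so the code assumes the classical single-snapshot bound for one complex sinusoid observed on an N-element, half-wavelength uniform linear array, $\mathrm{var}(\omega) \ge 6/(\mathrm{SNR}\,N(N^2-1))$ with $\omega = \pi\sin\theta$. The precision threshold, the number of beams and the function names are hypothetical.

```python
import numpy as np


def aod_crlb(snr_linear, n_antennas, theta_rad):
    """Assumed approximate CRLB (rad^2) on the AoD theta.

    Uses the single-tone bound var(omega) >= 6 / (SNR * N * (N^2 - 1)) for the
    spatial frequency omega = pi * sin(theta), mapped to theta by dividing by
    (d omega / d theta)^2 = (pi * cos(theta))^2.
    """
    n = n_antennas
    var_omega = 6.0 / (snr_linear * n * (n ** 2 - 1))
    return var_omega / (np.pi * np.cos(theta_rad)) ** 2


def allocate_beams(snrs, thetas, n_antennas, precision_rad, multi_beams=3):
    """Hypothetical rule: extra beams for users whose AoD std bound misses the target."""
    beams = []
    for snr, theta in zip(snrs, thetas):
        std = np.sqrt(aod_crlb(snr, n_antennas, theta))
        beams.append(multi_beams if std > precision_rad else 1)
    return beams


snr = [10.0, 0.1]    # linear echo SNR per user (10 dB and -10 dB)
theta = [0.3, 0.3]   # current AoD estimates in radians
print(allocate_beams(snr, theta, n_antennas=16, precision_rad=0.01))  # [1, 3]
```

The low-SNR user's bound exceeds the 0.01 rad target, so it is given multiple beams, whereas the high-SNR user keeps a single beam.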

Learning Power Control Protocol for In-Factory 6G Subnetworks

May 09, 2025

Abstract: In-X Subnetworks are envisioned to meet the stringent demands of short-range communication in diverse 6G use cases. In the context of In-Factory scenarios, effective power control is critical to mitigating the impact of interference resulting from potentially high subnetwork density. Existing approaches to power control in this domain have predominantly emphasized the data plane, often overlooking the impact of signaling overhead. Furthermore, prior work has typically adopted a network-centric perspective, relying on the assumption of complete and up-to-date channel state information (CSI) being readily available at the central controller. This paper introduces a novel multi-agent reinforcement learning (MARL) framework designed to enable access points to autonomously learn both signaling and power control protocols in an In-Factory Subnetwork environment. By formulating the problem as a partially observable Markov decision process (POMDP) and leveraging multi-agent proximal policy optimization (MAPPO), the proposed approach achieves significant advantages. The simulation results demonstrate that the learning-based method reduces signaling overhead by a factor of 8 while maintaining a buffer flush rate that lags the ideal "Genie" approach by only 5%.

Power Efficient Cooperative Communication within IIoT Subnetworks: Relay or RIS?

Nov 19, 2024

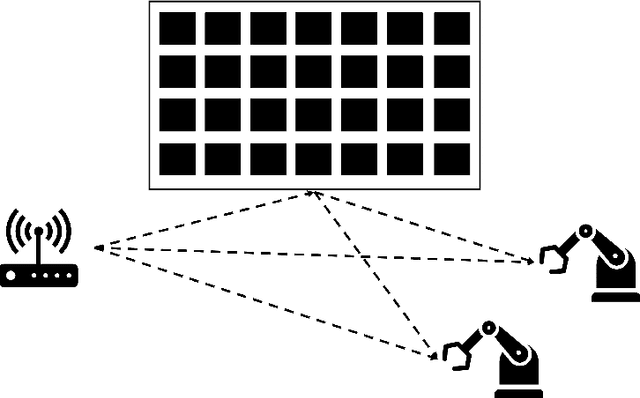

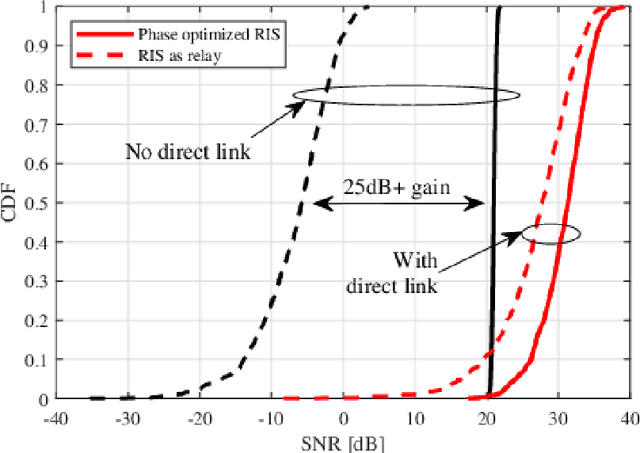

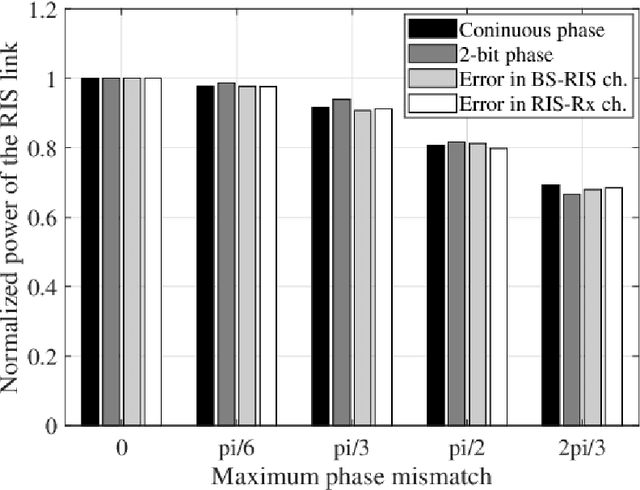

Abstract: The forthcoming sixth-generation (6G) industrial Internet-of-Things (IIoT) subnetworks are expected to support ultra-fast control communication cycles for numerous IoT devices. However, meeting the stringent requirements for low latency and high reliability poses significant challenges, particularly due to signal fading and physical obstructions. In this paper, we propose novel time division multiple access (TDMA) and frequency division multiple access (FDMA) communication protocols for cooperative transmission in IIoT subnetworks. These protocols leverage secondary access points (sAPs) as Decode-and-Forward (DF) and Amplify-and-Forward (AF) relays, enabling shorter cycle times while minimizing overall transmit power. A classification mechanism determines whether the highest-gain link for each IoT device is a single-hop or two-hop connection, and selects the corresponding sAP. We then formulate the problem of minimizing transmit power for DF/AF relaying while adhering to the delay and maximum power constraints. In the FDMA case, an additional constraint is introduced for bandwidth allocation to IoT devices during the first and second phases of cooperative transmission. To tackle the nonconvex problem, we employ the sequential parametric convex approximation (SPCA) method. We extend our analysis to a system model with reconfigurable intelligent surfaces (RISs), enabling transmission over both direct and RIS-assisted channels, and optimize a multi-RIS scenario for comparison. Simulation results show that our cooperative communication approach reduces the emitted power by up to 7.5 dB while maintaining an outage probability and a resource overflow rate below $10^{-6}$. While the RIS-based solution achieves greater power savings, the relay-based protocol outperforms RIS in terms of outage probability.
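
The single-hop/two-hop classification step can be sketched as follows. The abstract does not give the exact criterion, so this sketch assumes, for DF relaying with equal resources on both hops, that a two-hop path is only as good as its weaker hop and scores it by the bottleneck gain $\min(g_{d,s}, g_{s,\mathrm{AP}})$. AF relaying, which combines the hop gains differently, is not covered. The function name and the gain values are hypothetical.

```python
def classify_links(g_direct, g_dev_sap, g_sap_ap):
    """Hypothetical classifier: single-hop or best two-hop DF path per IoT device.

    g_direct[d]     : channel gain from device d to the AP
    g_dev_sap[d][s] : channel gain from device d to sAP s
    g_sap_ap[s]     : channel gain from sAP s to the AP
    A two-hop DF path is scored by its bottleneck gain min(first hop, second hop).
    """
    decisions = []
    for d, gd in enumerate(g_direct):
        best_sap, best_gain = None, -1.0
        for s, g1 in enumerate(g_dev_sap[d]):
            effective = min(g1, g_sap_ap[s])
            if effective > best_gain:
                best_sap, best_gain = s, effective
        if best_sap is not None and best_gain > gd:
            decisions.append(("two-hop", best_sap))
        else:
            decisions.append(("single-hop", None))
    return decisions


g_direct = [1e-6, 1e-9]                 # device 1 is heavily obstructed
g_dev_sap = [[1e-7, 1e-8], [1e-8, 1e-5]]
g_sap_ap = [1e-4, 1e-4]
print(classify_links(g_direct, g_dev_sap, g_sap_ap))
# [('single-hop', None), ('two-hop', 1)]
```

Device 0 keeps its direct link because no relayed path beats it, while the obstructed device 1 is assigned to sAP 1.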

Unsupervised Graph-based Learning Method for Sub-band Allocation in 6G Subnetworks

Dec 13, 2023

Abstract: In this paper, we present an unsupervised approach for frequency sub-band allocation in wireless networks using graph-based learning. We consider a dense deployment of subnetworks in the factory environment with a limited number of sub-bands, which must be optimally allocated to coordinate inter-subnetwork interference. We model the subnetwork deployment as a conflict graph and propose an unsupervised learning approach inspired by the graph colouring heuristic and the Potts model to optimize the sub-band allocation using graph neural networks. The numerical evaluation shows that the proposed method achieves performance close to that of the centralized greedy colouring sub-band allocation heuristic with lower computational time complexity. In addition, it incurs reduced signalling overhead compared to iterative optimization heuristics that require all the mutual interfering channel information. We further demonstrate that the method is robust to different network settings.
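
To make the conflict-graph view concrete, the sketch below pairs a simple greedy colouring heuristic with a Potts-style conflict energy $\tfrac{1}{2}\sum_{i,j} W_{ij}\,\langle P_i, P_j\rangle$, where $W$ holds interference weights and each row $P_i$ is a (soft or one-hot) sub-band assignment. Both are assumptions for illustration: the exact greedy variant used as the benchmark and the paper's loss function are not given in the abstract, and the GNN that would output soft assignments $P$ is not shown. Function names and the ring topology are hypothetical.

```python
import numpy as np


def greedy_subband_allocation(W, n_bands):
    """Greedy colouring with a limited number of sub-bands (illustrative variant).

    W: symmetric (N, N) interference weights with zero diagonal (0 = no edge).
    Subnetworks are visited from most to least interfered; each takes the band
    with the smallest total weight towards already-assigned neighbours.
    """
    n = W.shape[0]
    bands = -np.ones(n, dtype=int)
    order = np.argsort(-W.sum(axis=1))
    for i in order:
        cost = np.zeros(n_bands)
        for j in range(n):
            if bands[j] >= 0:
                cost[bands[j]] += W[i, j]
        bands[i] = int(np.argmin(cost))
    return bands


def potts_energy(W, P):
    """Potts-style conflict energy 0.5 * sum_ij W_ij <P_i, P_j>.

    With one-hot rows, this equals the total weight of edges whose endpoints
    share a sub-band; with softmax rows, it is the expected conflict weight.
    """
    return 0.5 * np.sum(W * (P @ P.T))


# Hypothetical ring of four subnetworks, each interfering with two neighbours.
W = np.array([[0, 1, 0, 1],
              [1, 0, 1, 0],
              [0, 1, 0, 1],
              [1, 0, 1, 0]], dtype=float)
bands = greedy_subband_allocation(W, n_bands=2)
print(bands, potts_energy(W, np.eye(2)[bands]))
```

In an unsupervised GNN variant, minimizing this energy over softmax outputs would play the role of the training loss, so no labelled allocations are needed.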

On the required radio resources for ultra-reliable communication in highly interfered scenarios

Jun 10, 2023

Abstract: Future wireless systems are expected to support mission-critical services demanding ever higher reliability. In this letter, we dimension the radio resources needed to achieve a given failure probability target for ultra-reliable wireless systems in high-interference conditions, assuming a protocol with frequency hopping combined with packet repetitions. We resort to packet erasure channel models and derive the minimum number of resource units for receivers with and without collision resolution capability, as well as the number of packet repetitions needed to achieve the failure probability target. Analytical results are numerically validated and can be used as a benchmark for realistic system simulations.
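
The dimensioning logic can be sketched under simplifying assumptions that are not taken from the letter: each copy of a packet is erased independently, so $n$ repetitions all fail with probability $p^n$, and, for a receiver without collision resolution, a copy is erased whenever at least one of $K$ interferers hops to the same one of $M$ resource units, chosen uniformly and independently. The collision-resolution case is not modelled. All function names are hypothetical.

```python
def collision_prob(n_units, n_interferers):
    """P(a copy overlaps at least one interferer), assuming each interferer
    hops to one of n_units resource units uniformly and independently."""
    return 1.0 - (1.0 - 1.0 / n_units) ** n_interferers


def min_repetitions(p_erasure, target):
    """Smallest n with p_erasure**n <= target, assuming independent erasures."""
    if not 0.0 <= p_erasure < 1.0:
        raise ValueError("p_erasure must lie in [0, 1)")
    n, fail = 1, p_erasure
    while fail > target:
        n += 1
        fail *= p_erasure
    return n


def min_units(n_interferers, repetitions, target):
    """Smallest number of resource units such that all repetitions fail with
    probability <= target, for a receiver without collision resolution."""
    m = 1
    while collision_prob(m, n_interferers) ** repetitions > target:
        m += 1
    return m


print(min_repetitions(0.2, 1e-5))  # 8
print(min_units(n_interferers=10, repetitions=3, target=1e-5))
```

The two quantities trade off against each other: more repetitions tolerate a higher per-copy erasure probability and therefore fewer resource units, at the cost of more transmissions per packet.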

Power Control for 6G Industrial Wireless Subnetworks: A Graph Neural Network Approach

Dec 30, 2022

Abstract: Sixth-generation (6G) industrial wireless subnetworks are expected to replace wired connectivity for control operations in robots and production modules. Interference management techniques such as centralized power control can improve spectral efficiency in dense deployments of such subnetworks. However, existing solutions for centralized power control may require full channel state information (CSI) of all the desired and interfering links, which may be cumbersome and time-consuming to obtain in dense deployments. This paper presents a novel solution for centralized power control for industrial subnetworks based on Graph Neural Networks (GNNs). The proposed method only requires the subnetwork positioning information, usually known at the central controller, and knowledge of the desired link channel gain during the execution phase. Simulation results show that our solution achieves spectral efficiency similar to that of benchmark schemes requiring full CSI in runtime operation. Robustness to changes in deployment density and environment characteristics relative to the training phase is also verified.
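
To make the optimized quantity concrete, the sketch below computes the sum spectral efficiency $\sum_i \log_2(1 + \mathrm{SINR}_i)$ for a given power vector, and adds a brute-force search over a few discrete power levels as an illustrative stand-in for a full-CSI centralized scheme. It is not the GNN method: in the paper's setting, the power vector would instead be produced by a GNN from subnetwork positions and desired-link gains. The gain matrix, power levels and function names are hypothetical.

```python
import itertools

import numpy as np


def sum_spectral_efficiency(G, p, noise):
    """Sum of log2(1 + SINR_i) over subnetworks.

    G[i, j]: channel gain from the transmitter of subnetwork j to the receiver of i
    p:       transmit power of each subnetwork (linear units)
    noise:   receiver noise power (same units as G * p)
    """
    G = np.asarray(G, dtype=float)
    p = np.asarray(p, dtype=float)
    received = G * p[None, :]                    # received[i, j] = G[i, j] * p[j]
    signal = np.diag(received)
    interference = received.sum(axis=1) - signal
    sinr = signal / (interference + noise)
    return float(np.sum(np.log2(1.0 + sinr)))


def exhaustive_power_search(G, levels, noise):
    """Illustrative full-CSI reference: try every combination of power levels."""
    best_p, best_se = None, -np.inf
    for p in itertools.product(levels, repeat=len(G)):
        se = sum_spectral_efficiency(G, p, noise)
        if se > best_se:
            best_p, best_se = np.array(p), se
    return best_p, best_se


# Hypothetical 3-subnetwork example: strong direct links, weaker cross links.
G = np.array([[1.0, 0.3, 0.05],
              [0.3, 1.0, 0.3],
              [0.05, 0.3, 1.0]])
levels = [0.0, 0.25, 0.5, 1.0]
full_power = sum_spectral_efficiency(G, [1.0, 1.0, 1.0], noise=0.01)
best_p, best_se = exhaustive_power_search(G, levels, noise=0.01)
print(full_power, best_p, best_se)
```

The exhaustive search needs every cross-link gain and evaluates `len(levels) ** N` combinations, which illustrates why full-CSI approaches become impractical in dense deployments.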

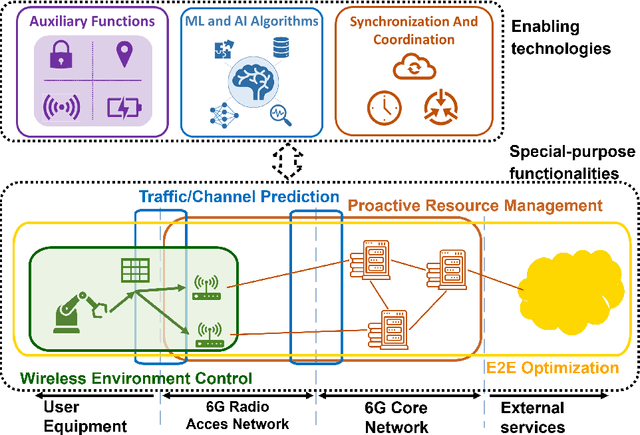

A Functional Architecture for 6G Special Purpose Industrial IoT Networks

Jul 01, 2022

Abstract: Future industrial applications will encompass compelling new use cases requiring stringent performance guarantees over multiple key performance indicators (KPIs) such as reliability, dependability, latency, time synchronization, and security. Achieving such stringent and diverse service requirements necessitates the design of a special-purpose Industrial Internet of Things (IIoT) network comprising a multitude of specialized functionalities and technological enablers. This article proposes an innovative architecture for such a special-purpose 6G IIoT network, incorporating seven functional building blocks grouped into two categories: special-purpose functionalities and enabling technologies. The former comprises the Wireless Environment Control, Traffic/Channel Prediction, Proactive Resource Management, and End-to-End Optimization functions, whereas the latter includes Synchronization and Coordination, Machine Learning and Artificial Intelligence Algorithms, and Auxiliary Functions. The proposed architecture aims to provide a resource-efficient, holistic solution to the complex, dynamic and challenging requirements imposed by future 6G industrial use cases. Selected test scenarios are presented and assessed to illustrate cross-functional collaboration and to demonstrate the applicability of the proposed architecture in a wireless IIoT network.

* 11 pages, 5 figures
