Giancarlo Guizzardi

ONTrust: A Reference Ontology of Trust

Feb 07, 2026

Abstract: Trust has stood out more than ever in the light of recent innovations. Some examples are advances in artificial intelligence that make machines more and more humanlike, and the introduction of decentralized technologies (e.g. blockchains), which creates new forms of (decentralized) trust. These new developments have the potential to improve the provision of products and services, as well as to contribute to individual and collective well-being. However, their adoption depends largely on trust. In order to build trustworthy systems, along with defining laws, regulations and proper governance models for new forms of trust, it is necessary to properly conceptualize trust, so that it can be understood both by humans and machines. This paper is the culmination of a long-term research program of providing a solid ontological foundation on trust, by creating reference conceptual models to support information modeling, automated reasoning, information integration and semantic interoperability tasks. To address this, a Reference Ontology of Trust (ONTrust) was developed, grounded on the Unified Foundational Ontology and specified in OntoUML, which has been applied in several initiatives, to demonstrate, for example, how it can be used for conceptual modeling and enterprise architecture design, for language evaluation and (re)design, for trust management, for requirements engineering, and for trustworthy artificial intelligence (AI) in the context of affective Human-AI teaming. ONTrust formally characterizes the concept of trust and its different types, describes the different factors that can influence trust, as well as explains how risk emerges from trust relations. To illustrate the working of ONTrust, the ontology is applied to model two case studies extracted from the literature.

DODGE: Ontology-Aware Risk Assessment via Object-Oriented Disruption Graphs

Dec 18, 2024

Abstract: When considering risky events or actions, we must not downplay the role of involved objects: a charged battery in our phone averts the risk of being stranded in the desert after a flat tyre, and a functional firewall mitigates the risk of a hacker intruding into the network. The Common Ontology of Value and Risk (COVER) highlights how the role of objects and their relationships remains pivotal to performing transparent, complete and accountable risk assessment. In this paper, we operationalize some of the notions proposed by COVER -- such as parthood between objects and participation of objects in events/actions -- by presenting a new framework for risk assessment: DODGE. DODGE enriches the expressivity of vetted formal models for risk -- i.e., fault trees and attack trees -- by bridging the disciplines of ontology and formal methods into an ontology-aware formal framework composed of a more expressive modelling formalism, Object-Oriented Disruption Graphs (ODGs), logic (ODGLog) and an intermediate query language (ODGLang). With these, DODGE allows risk assessors to pose questions about disruption propagation, disruption likelihood and risk levels, keeping the fundamental role of objects at risk always in sight.

Mining Frequent Structures in Conceptual Models

Jun 11, 2024

Abstract: The problem of using structured methods to represent knowledge is well-known in conceptual modeling and has been studied for many years. It has been proven that adopting modeling patterns represents an effective structural method. Patterns are, indeed, generalizable recurrent structures that can be exploited as solutions to design problems. They aid in understanding and improving the process of creating models. The undeniable value of using patterns in conceptual modeling was demonstrated in several experimental studies. However, discovering patterns in conceptual models is widely recognized as a highly complex task and a systematic solution to pattern identification is currently lacking. In this paper, we propose a general approach to the problem of discovering frequent structures, as they occur in conceptual modeling languages. As proof of concept for our scientific contribution, we provide an implementation of the approach, by focusing on UML class diagrams, in particular OntoUML models. This implementation comprises an exploratory tool, which, through the combination of a frequent subgraph mining algorithm and graph manipulation techniques, can process multiple conceptual models and discover recurrent structures according to multiple criteria. The primary objective is to offer a support facility for language engineers. This can be employed to leverage both good and bad modeling practices, to evolve and maintain the conceptual modeling language, and to promote the reuse of encoded experience in designing better models with the given language.

On the Multiple Roles of Ontologies in Explainable AI

Nov 08, 2023

Abstract: This paper discusses the different roles that explicit knowledge, in particular ontologies, can play in Explainable AI and in the development of human-centric explainable systems and intelligible explanations. We consider three main perspectives in which ontologies can contribute significantly, namely reference modelling, common-sense reasoning, and knowledge refinement and complexity management. We overview some of the existing approaches in the literature, and we position them according to these three proposed perspectives. The paper concludes by discussing what challenges still need to be addressed to enable ontology-based approaches to explanation and to evaluate their human-understandability and effectiveness.

Semantics, Ontology and Explanation

Apr 21, 2023

Abstract: The terms 'semantics' and 'ontology' are increasingly appearing together with 'explanation', not only in the scientific literature, but also in organizational communication. However, all of these terms are also being significantly overloaded. In this paper, we discuss their strong relation under particular interpretations. Specifically, we discuss a notion of explanation termed ontological unpacking, which aims at explaining symbolic domain descriptions (conceptual models, knowledge graphs, logical specifications) by revealing their ontological commitment in terms of their assumed truthmakers, i.e., the entities in one's ontology that make the propositions in those descriptions true. To illustrate this idea, we employ an ontological theory of relations to explain (by revealing the hidden semantics of) a very simple symbolic model encoded in the standard modeling language UML. We also discuss the essential role played by ontology-driven conceptual models (resulting from this form of explanation processes) in properly supporting semantic interoperability tasks. Finally, we discuss the relation between ontological unpacking and other forms of explanation in philosophy and science, as well as in the area of Artificial Intelligence.

An Overview of Ontologies and Tool Support for COVID-19 Analytics

Oct 12, 2021

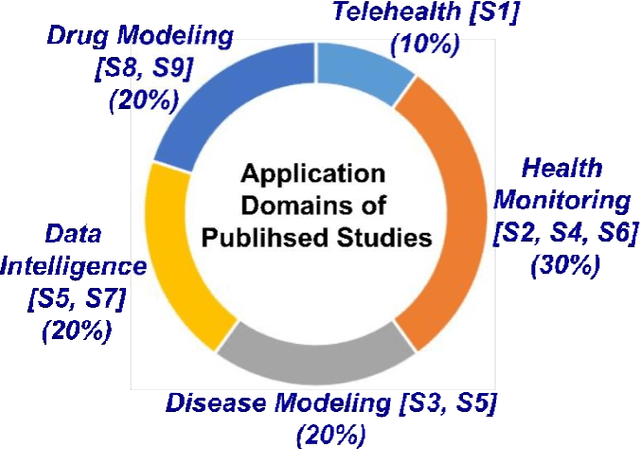

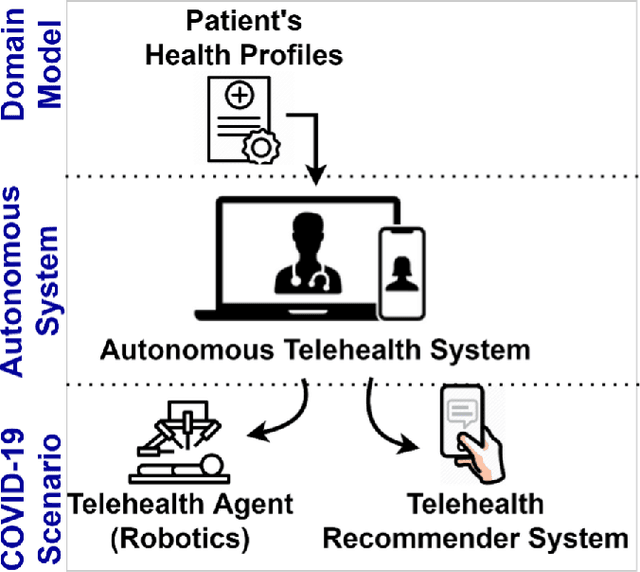

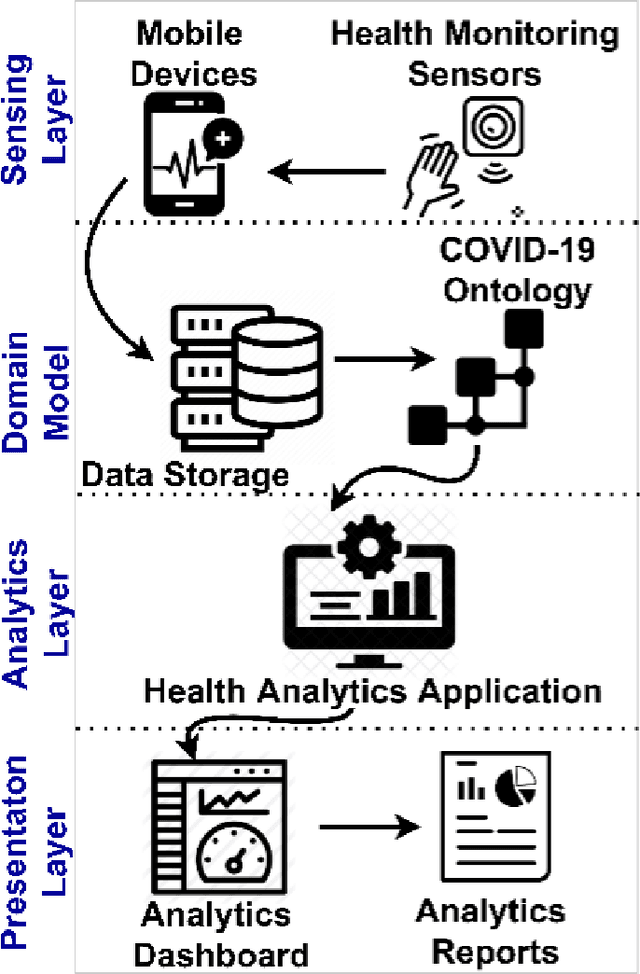

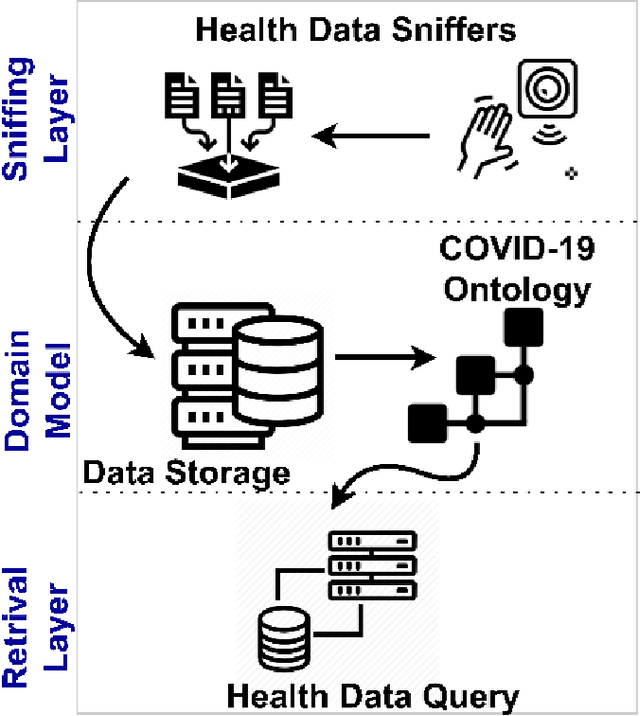

Abstract: The outbreak of the SARS-CoV-2 pandemic of the new COVID-19 disease (COVID-19 for short) demands empowering existing medical, economic, and social emergency backend systems with data analytics capabilities. An impediment to taking advantage of data analytics in these systems is the lack of a unified framework or reference model. Ontologies are highlighted as a promising solution to bridge this gap by providing a formal representation of COVID-19 concepts such as symptoms, infection rates, contact tracing, and drug modelling. Ontology-based solutions enable the integration of diverse data sources, which leads to a better understanding of pandemic data, management of smart lockdowns by identifying pandemic hotspots, and knowledge-driven inference, reasoning, and recommendations to tackle surrounding issues.
