Gerard Memmi

A Data Augmentation-based Defense Method Against Adversarial Attacks in Neural Networks

Jul 30, 2020

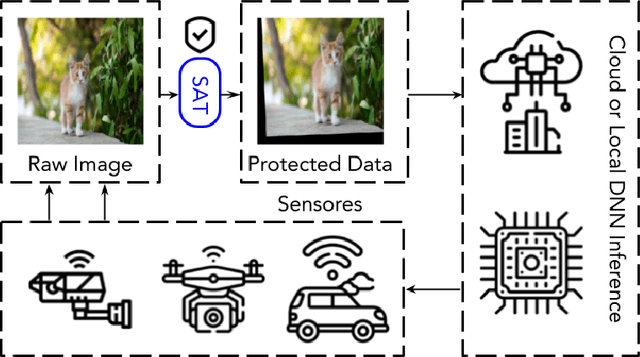

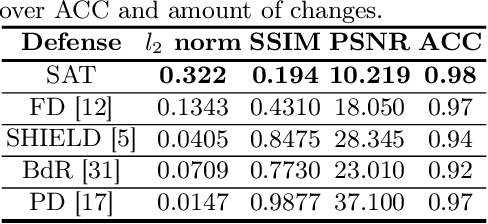

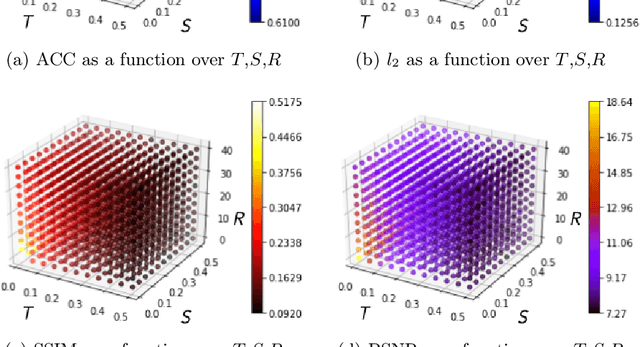

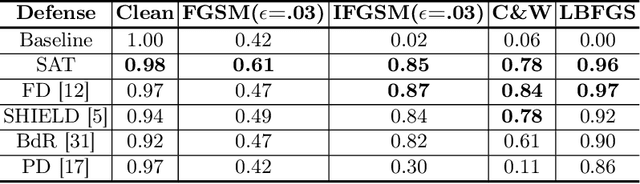

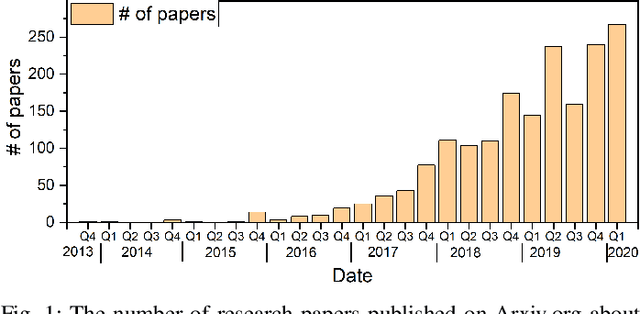

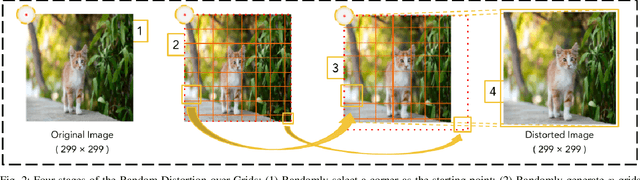

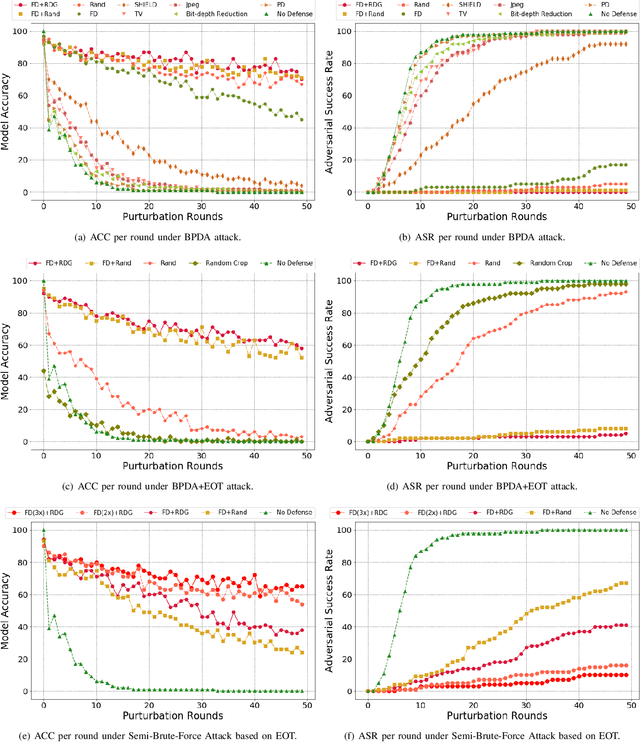

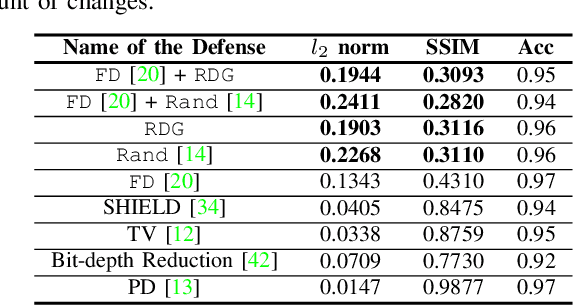

Abstract: Deep Neural Networks (DNNs) in Computer Vision (CV) are well known to be vulnerable to Adversarial Examples (AEs), namely inputs with imperceptible perturbations added maliciously to cause wrong classification results. This vulnerability poses a potential risk for real-life systems that use DNNs as core components. Numerous efforts have been put into research on how to protect DNN models from being attacked with AEs. However, no previous work can efficiently reduce the effects of novel adversarial attacks and be compatible with real-life constraints at the same time. In this paper, we focus on developing a lightweight defense method that can efficiently invalidate full white-box adversarial attacks while remaining compatible with real-life constraints. Starting from basic affine transformations, we integrate three transformations with randomized coefficients that are fine-tuned with respect to the amount of change they introduce to the defended sample. Compared with four state-of-the-art defense methods published in top-tier AI conferences in the past two years, our method demonstrates outstanding robustness and efficiency. It is worth highlighting that our method can withstand an advanced adaptive attack, namely BPDA with 50 rounds, and still helps the target model maintain an accuracy of around 80%, while constraining the attack success rate to almost zero.
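
The idea of preprocessing an input with randomized affine transformations can be illustrated with a minimal sketch. The abstract does not name the three transformations or their coefficient ranges, so the choice of rotation, scaling, and translation here, the function name `random_affine`, and all parameter defaults are assumptions made purely for illustration; this is not the authors' implementation.

```python
import math
import random


def random_affine(image, rng, max_angle_deg=5.0, max_shift=1.0, max_scale_delta=0.05):
    """Apply a randomly drawn rotation, scaling, and translation to a 2D image.

    `image` is a list of equal-length rows of numbers (grayscale).
    The three transformations and their ranges are illustrative assumptions.
    """
    height, width = len(image), len(image[0])

    # Draw fresh coefficients on every call, so each query sees a different transform.
    angle = math.radians(rng.uniform(-max_angle_deg, max_angle_deg))
    scale = 1.0 + rng.uniform(-max_scale_delta, max_scale_delta)
    shift_x = rng.uniform(-max_shift, max_shift)
    shift_y = rng.uniform(-max_shift, max_shift)

    center_y, center_x = (height - 1) / 2.0, (width - 1) / 2.0
    cos_a, sin_a = math.cos(angle), math.sin(angle)

    output = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            # Inverse mapping: locate the source pixel for each output pixel.
            dx = x - center_x - shift_x
            dy = y - center_y - shift_y
            src_x = (cos_a * dx + sin_a * dy) / scale + center_x
            src_y = (-sin_a * dx + cos_a * dy) / scale + center_y
            ix, iy = int(round(src_x)), int(round(src_y))
            # Nearest-neighbour sampling; pixels mapped from outside stay zero.
            if 0 <= ix < width and 0 <= iy < height:
                output[y][x] = image[iy][ix]
    return output


if __name__ == "__main__":
    sample = [[float(4 * row + col) for col in range(4)] for row in range(4)]
    for row in random_affine(sample, random.Random(0)):
        print(row)
```

In a defense of this kind, the transformed image rather than the raw input would be passed to the classifier, and the small coefficient ranges reflect the abstract's point that the amount of change to the defended sample has to be kept under control.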

Mitigating Advanced Adversarial Attacks with More Advanced Gradient Obfuscation Techniques

May 27, 2020

Abstract: Deep Neural Networks (DNNs) are well known to be vulnerable to Adversarial Examples (AEs). A great deal of effort has gone into fueling the arms race between attackers and defenders. Recently, advanced gradient-based attack techniques were proposed (e.g., BPDA and EOT), which have defeated a considerable number of existing defense methods. To date, there are still no satisfactory solutions that can effectively and efficiently defend against those attacks. In this paper, we make a steady step towards mitigating those advanced gradient-based attacks with two major contributions. First, we perform an in-depth analysis of the root causes of those attacks, and propose four properties that can break their fundamental assumptions. Second, we identify a set of operations that meet those properties. By integrating these operations, we design two preprocessing functions that can invalidate these powerful attacks. Extensive evaluations indicate that our solutions can effectively mitigate all existing standard and advanced attack techniques, and beat 11 state-of-the-art defense solutions published in top-tier conferences over the past 2 years. The defender can employ our solutions to constrain the attack success rate below 7% for the strongest attacks, even when the adversary has spent dozens of GPU hours.

Investigating Image Applications Based on Spatial-Frequency Transform and Deep Learning Techniques

Mar 20, 2020

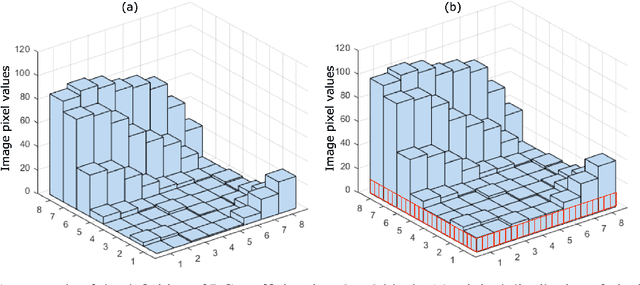

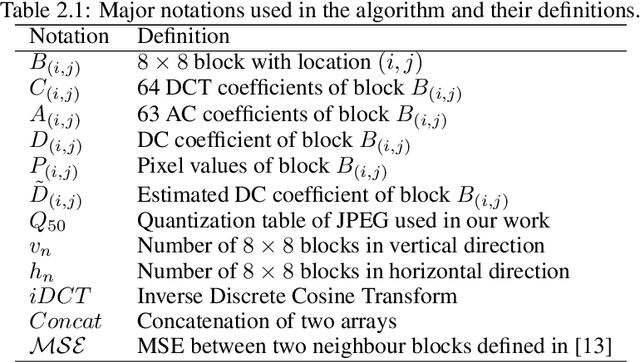

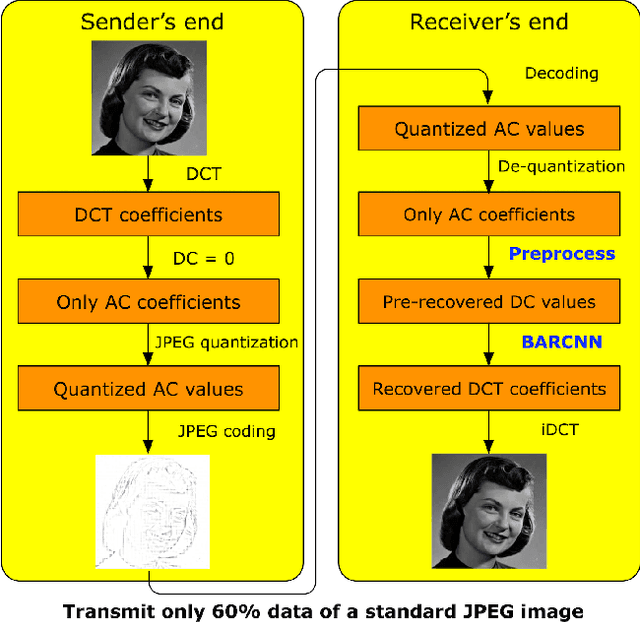

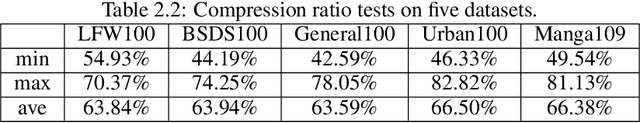

Abstract: This is the report for the PRIM project at Telecom Paris. It covers applications based on spatial-frequency transforms and deep learning techniques, and presents two main works. The first is an enhanced JPEG compression method based on deep learning. We propose a novel method that greatly enhances JPEG compression by transmitting less image data at the sender's end. At the receiver's end, we propose a DC recovery algorithm together with a deep residual learning framework to recover images with high quality. The second work concerns defenses against adversarial examples based on signal processing. We propose a wavelet extension method that extends image data features, which makes it more difficult to generate adversarial examples. We further adopt wavelet denoising to reduce the influence of adversarial perturbations. Through extensive experiments, we demonstrate that both works are effective in their application scenarios.
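
Wavelet denoising, as mentioned in the abstract, can be sketched in a few lines. The report's choice of wavelet, decomposition depth, and thresholding rule is not stated here, so the example below assumes a single-level Haar transform on a 1D signal with soft thresholding of the detail coefficients; the function names and the threshold value are hypothetical.

```python
import math

_SCALE = 1.0 / math.sqrt(2.0)


def haar_forward(signal):
    """Single-level orthonormal Haar transform of an even-length 1D signal."""
    approx = [(signal[i] + signal[i + 1]) * _SCALE for i in range(0, len(signal), 2)]
    detail = [(signal[i] - signal[i + 1]) * _SCALE for i in range(0, len(signal), 2)]
    return approx, detail


def haar_inverse(approx, detail):
    """Invert haar_forward, interleaving the reconstructed sample pairs."""
    signal = []
    for a, d in zip(approx, detail):
        signal.append((a + d) * _SCALE)
        signal.append((a - d) * _SCALE)
    return signal


def soft_threshold(value, threshold):
    """Shrink a coefficient towards zero by `threshold`, keeping its sign."""
    return math.copysign(max(abs(value) - threshold, 0.0), value)


def haar_denoise(signal, threshold):
    """Suppress small high-frequency (detail) coefficients, then reconstruct."""
    if len(signal) % 2 != 0:
        raise ValueError("signal length must be even")
    approx, detail = haar_forward(signal)
    detail = [soft_threshold(d, threshold) for d in detail]
    return haar_inverse(approx, detail)


if __name__ == "__main__":
    # A piecewise-constant signal with a small perturbation on the first pair.
    noisy = [1.01, 0.99, 2.0, 2.0]
    print(haar_denoise(noisy, threshold=0.1))
```

The intuition is that small, high-frequency perturbations land mostly in the detail coefficients, so shrinking those coefficients removes much of the perturbation while the coarse image content in the approximation coefficients is preserved. For images the same steps would be applied along rows and columns.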