Fabio Babiloni

Spontaneous Spatial Cognition Emerges during Egocentric Video Viewing through Non-invasive BCI

Jul 16, 2025

Abstract: Humans possess a remarkable capacity for spatial cognition, allowing for self-localization even in novel or unfamiliar environments. While hippocampal neurons encoding position and orientation are well documented, the large-scale neural dynamics supporting spatial representation, particularly during naturalistic, passive experience, remain poorly understood. Here, we demonstrate for the first time that non-invasive brain-computer interfaces (BCIs) based on electroencephalography (EEG) can decode spontaneous, fine-grained egocentric 6D pose, comprising three-dimensional position and orientation, during passive viewing of egocentric video. Despite EEG's limited spatial resolution and high signal noise, we find that spatially coherent visual input (i.e., continuous and structured motion) reliably evokes decodable spatial representations, aligning with participants' subjective sense of spatial engagement. Decoding performance further improves when visual input is presented at a rate of 100 ms per image, suggesting alignment with intrinsic neural temporal dynamics. Using gradient-based backpropagation through a neural decoding model, we identify distinct EEG channels contributing to position-specific and orientation-specific components, revealing a distributed yet complementary neural encoding scheme. These findings indicate that the brain's spatial systems operate spontaneously and continuously, even under passive conditions, challenging traditional distinctions between active and passive spatial cognition. Our results offer a non-invasive window into the automatic construction of egocentric spatial maps and advance our understanding of how the human mind transforms everyday sensory experience into structured internal representations.

Human brain distinctiveness based on EEG spectral coherence connectivity

Mar 23, 2014

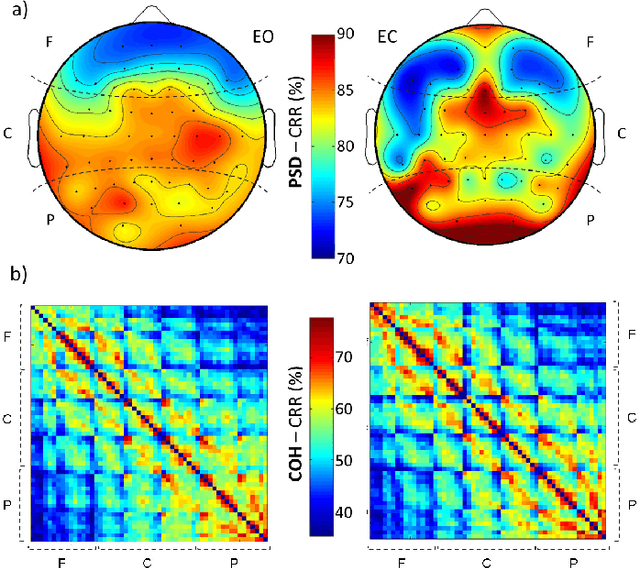

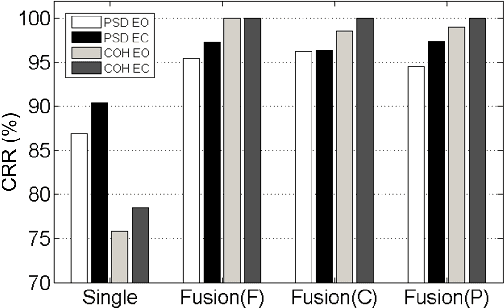

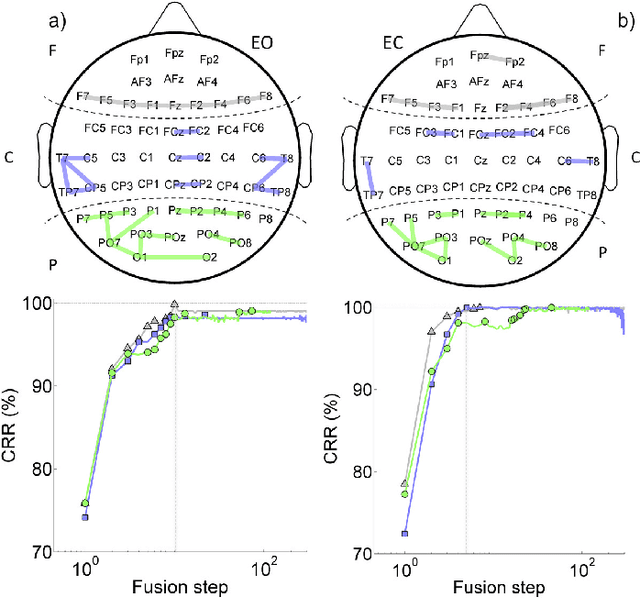

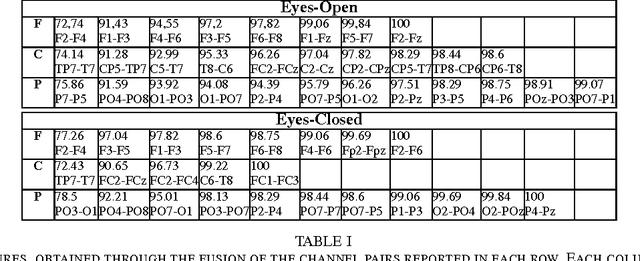

Abstract: The use of EEG biometrics for automatic people recognition has received increasing attention in recent years. Most current analyses rely on the extraction of features characterizing the activity of single brain regions, such as power-spectrum estimates, thus neglecting possible temporal dependencies between the generated EEG signals. However, important physiological information can be extracted from the way different brain regions are functionally coupled. In this study, we propose a novel approach that fuses spectral coherence-based connectivity between different brain regions as a possibly viable biometric feature. The proposed approach is tested on a large dataset of subjects (N=108) during eyes-closed (EC) and eyes-open (EO) resting state conditions. The obtained recognition performance shows that using brain connectivity leads to higher distinctiveness than power-spectrum measurements in both experimental conditions. Notably, 100% recognition accuracy is obtained in both EC and EO when integrating functional connectivity between regions in the frontal lobe, while a lower 97.41% is obtained in EC (96.26% in EO) when fusing power-spectrum information from centro-parietal regions. Taken together, these results suggest that functional connectivity patterns represent effective features for improving EEG-based biometric systems.
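To make the idea of a coherence-based connectivity feature concrete, here is a minimal NumPy sketch. It is not the authors' pipeline: the abstract does not specify the estimator, frequency bands, fusion rule, or classifier, so the Welch-style averaging, the 8-13 Hz band, the nearest-neighbour matcher, and the function names `band_coherence` and `identify` are all assumptions chosen for illustration.

```python
import numpy as np


def band_coherence(eeg, fs, band=(8.0, 13.0), nperseg=256):
    """Band-averaged magnitude-squared coherence for every channel pair.

    eeg: array of shape (n_channels, n_samples).
    Returns a 1-D feature vector with n_channels * (n_channels - 1) / 2 entries.
    The band limits and segment length are illustrative assumptions.
    """
    n_channels, n_samples = eeg.shape
    if n_samples < 2 * nperseg:
        raise ValueError("need at least two segments to estimate coherence")

    # Split into 50%-overlapping, Hann-windowed segments (Welch-style averaging).
    step = nperseg // 2
    starts = range(0, n_samples - nperseg + 1, step)
    window = np.hanning(nperseg)
    spectra = np.array(
        [np.fft.rfft(eeg[:, s:s + nperseg] * window, axis=1) for s in starts]
    )  # shape: (n_segments, n_channels, n_freqs)
    freqs = np.fft.rfftfreq(nperseg, d=1.0 / fs)

    # Segment-averaged cross-spectra; the diagonal holds the auto-spectra.
    csd = np.einsum("sif,sjf->ijf", spectra, spectra.conj()) / len(starts)
    psd = np.real(np.einsum("iif->if", csd))

    # Magnitude-squared coherence: |Sxy|^2 / (Sxx * Syy).
    coh = np.abs(csd) ** 2 / (psd[:, None, :] * psd[None, :, :])

    # Average inside the band and keep each unordered channel pair once.
    mask = (freqs >= band[0]) & (freqs <= band[1])
    band_coh = coh[:, :, mask].mean(axis=2)
    return band_coh[np.triu_indices(n_channels, k=1)]


def identify(probe, gallery):
    """Return the index of the enrolled feature vector closest to the probe.

    gallery: array of shape (n_subjects, n_features), one row per subject.
    A plain Euclidean nearest-neighbour rule, used here only as a stand-in.
    """
    distances = np.linalg.norm(gallery - probe, axis=1)
    return int(np.argmin(distances))
```

In use, one feature vector per subject would be computed from an enrollment recording and stacked into `gallery`; a new recording is then reduced to a `probe` vector with the same function and matched with `identify`. Restricting `eeg` to a subset of channels (for example, frontal ones) before calling `band_coherence` gives region-specific features.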
