Erwan Plantec

Hypernetworks That Evolve Themselves

Dec 18, 2025

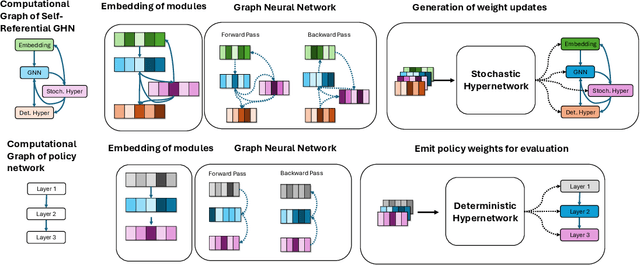

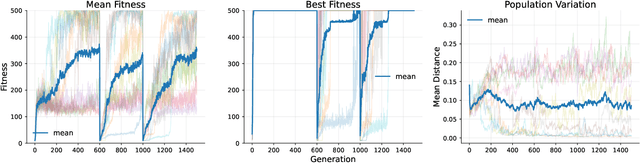

Abstract: How can neural networks evolve themselves without relying on external optimizers? We propose Self-Referential Graph HyperNetworks, systems where the very machinery of variation and inheritance is embedded within the network. By uniting hypernetworks, stochastic parameter generation, and graph-based representations, Self-Referential GHNs mutate and evaluate themselves while adapting mutation rates as selectable traits. Through new reinforcement learning benchmarks with environmental shifts (CartPoleSwitch, LunarLander-Switch), Self-Referential GHNs show swift, reliable adaptation and emergent population dynamics. In the locomotion benchmark Ant-v5, they evolve coherent gaits, showing promising fine-tuning capabilities by autonomously decreasing variation in the population to concentrate around promising solutions. Our findings support the idea that evolvability itself can emerge from neural self-reference. Self-Referential GHNs reflect a step toward synthetic systems that more closely mirror biological evolution, offering tools for autonomous, open-ended learning agents.
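To make the idea of "mutation rates as selectable traits" concrete, here is a minimal sketch of classic self-adaptive mutation on a toy problem. It is not the Self-Referential GHN method: the objective `fitness`, the log-normal update with step size `tau`, and the truncation selection in `evolve` are all illustrative assumptions. Each individual carries its own mutation scale, which is mutated and inherited along with the genome, so selection acts on it indirectly.

```python
import math
import random


def fitness(genome):
    # Toy objective (assumption): negative squared norm, optimum at the origin.
    return -sum(v * v for v in genome)


def mutate(parent, rng, tau=0.3):
    genome, sigma = parent
    # The mutation scale is itself perturbed (log-normal step) and inherited,
    # so it behaves like a heritable trait under selection.
    child_sigma = sigma * math.exp(tau * rng.gauss(0.0, 1.0))
    child_genome = [v + child_sigma * rng.gauss(0.0, 1.0) for v in genome]
    return child_genome, child_sigma


def evolve(generations=200, pop_size=20, dim=5, seed=0):
    rng = random.Random(seed)
    population = [
        ([rng.uniform(-5.0, 5.0) for _ in range(dim)], 1.0)
        for _ in range(pop_size)
    ]
    history = []
    for _ in range(generations):
        children = [
            mutate(rng.choice(population), rng) for _ in range(2 * pop_size)
        ]
        # Truncation selection over parents and children (hypothetical choice).
        pool = population + children
        pool.sort(key=lambda ind: fitness(ind[0]), reverse=True)
        population = pool[:pop_size]
        best = fitness(population[0][0])
        mean_sigma = sum(s for _, s in population) / pop_size
        history.append((best, mean_sigma))
    return history


history = evolve()
print("first generation (best fitness, mean sigma):", history[0])
print("last generation  (best fitness, mean sigma):", history[-1])
```

Tracking the mean mutation scale alongside the best fitness is one simple way to observe whether a population narrows its own variation as it approaches good solutions.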

Flow-Lenia: Emergent evolutionary dynamics in mass conservative continuous cellular automata

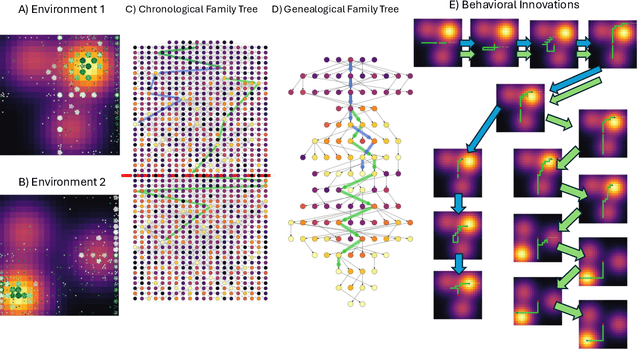

Jun 10, 2025

Abstract: Central to the artificial life endeavour is the creation of artificial systems spontaneously generating properties found in the living world such as autopoiesis, self-replication, evolution and open-endedness. While numerous models and paradigms have been proposed, cellular automata (CA) have taken a very important place in the field, notably as they enable the study of phenomena like self-reproduction and autopoiesis. Continuous CA like Lenia have been shown to produce life-like patterns reminiscent, from an aesthetic and ontological point of view, of biological organisms, which we call creatures. We propose in this paper Flow-Lenia, a mass-conservative extension of Lenia. We present experiments demonstrating its effectiveness in generating spatially-localized patterns (SLPs) with complex behaviors and show that the update rule parameters can be optimized to generate complex creatures showing behaviors of interest. Furthermore, we show that Flow-Lenia allows us to embed the parameters of the model, defining the properties of the emerging patterns, within its own dynamics, thus allowing for multispecies simulations. By using the evolutionary activity framework as well as other metrics, we shed light on the emergent evolutionary dynamics taking place in this system.

* This manuscript has been accepted for publication in the Artificial Life journal (https://direct.mit.edu/artl)

When Does Neuroevolution Outcompete Reinforcement Learning in Transfer Learning Tasks?

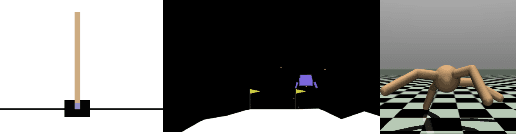

May 28, 2025

Abstract: The ability to continuously and efficiently transfer skills across tasks is a hallmark of biological intelligence and a long-standing goal in artificial systems. Reinforcement learning (RL), a dominant paradigm for learning in high-dimensional control tasks, is known to suffer from brittleness to task variations and catastrophic forgetting. Neuroevolution (NE) has recently gained attention for its robustness, scalability, and capacity to escape local optima. In this paper, we investigate an understudied dimension of NE: its transfer learning capabilities. To this end, we introduce two benchmarks: a) in stepping gates, neural networks are tasked with emulating logic circuits, with designs that emphasize modular repetition and variation; b) ecorobot extends the Brax physics engine with objects such as walls and obstacles and the ability to easily switch between different robotic morphologies. Crucial in both benchmarks is the presence of a curriculum that enables evaluating skill transfer across tasks of increasing complexity. Our empirical analysis shows that NE methods vary in their transfer abilities and frequently outperform RL baselines. Our findings support the potential of NE as a foundation for building more adaptable agents and highlight future challenges for scaling NE to complex, real-world problems.

Evolving Self-Assembling Neural Networks: From Spontaneous Activity to Experience-Dependent Learning

Jun 14, 2024

Abstract: Biological neural networks are characterized by their high degree of plasticity, a core property that enables the remarkable adaptability of natural organisms. Importantly, this ability affects both the synaptic strength and the topology of the nervous systems. Artificial neural networks, on the other hand, have been mainly designed as static, fully connected structures that can be notoriously brittle in the face of changing environments and novel inputs. Building on previous works on Neural Developmental Programs (NDPs), we propose a class of self-organizing neural networks capable of synaptic and structural plasticity in an activity- and reward-dependent manner, which we call Lifelong Neural Developmental Program (LNDP). We present an instance of such a network built on the graph transformer architecture and propose a mechanism for pre-experience plasticity based on the spontaneous activity of sensory neurons. Our results demonstrate the ability of the model to learn from experiences in different control tasks starting from randomly connected or empty networks. We further show that structural plasticity is advantageous in environments necessitating fast adaptation or with non-stationary rewards.

Meta-Learning an Evolvable Developmental Encoding

Jun 13, 2024

Abstract: Representations for black-box optimisation methods (such as evolutionary algorithms) are traditionally constructed using a delicate manual process. This is in contrast to the representation that maps DNA to phenotypes in biological organisms, which is at the heart of biological complexity and evolvability. Additionally, the core of this process is fundamentally the same across nearly all forms of life, reflecting their shared evolutionary origin. Generative models have shown promise in being learnable representations for black-box optimisation, but they are not per se designed to be easily searchable. Here we present a system that can meta-learn such a representation by directly optimising for a representation's ability to generate quality-diversity. In more detail, we show our meta-learning approach can find a single Neural Cellular Automaton, in which cells can attend to different parts of a "DNA" string genome during development, enabling it to grow different solvable 2D maze structures. We show that the evolved genotype-to-phenotype mappings become more and more evolvable, not only resulting in a faster search but also increasing the quality and diversity of grown artefacts.

Growing Artificial Neural Networks for Control: the Role of Neuronal Diversity

May 14, 2024

Abstract: In biological evolution, complex neural structures grow from a handful of cellular ingredients. As genomes in nature are bounded in size, this complexity is achieved by a growth process where cells communicate locally to decide whether to differentiate, proliferate and connect with other cells. This self-organisation is hypothesized to play an important part in the generalisation and robustness of biological neural networks. Artificial neural networks (ANNs), on the other hand, are traditionally optimized in the space of weights. Thus, the benefits and challenges of growing artificial neural networks remain understudied. Building on the previously introduced Neural Developmental Programs (NDP), in this work we present an algorithm for growing ANNs that solve reinforcement learning tasks. We identify a key challenge: ensuring phenotypic complexity requires maintaining neuronal diversity, but this diversity comes at the cost of optimization stability. To address this, we introduce two mechanisms: (a) equipping neurons with an intrinsic state inherited upon neurogenesis; (b) lateral inhibition, a mechanism inspired by biological growth, which controls the pace of growth, helping diversity persist. We show that both mechanisms contribute to neuronal diversity and that, equipped with them, NDPs achieve comparable results to existing direct and developmental encodings in complex locomotion tasks.

Flow Lenia: Mass conservation for the study of virtual creatures in continuous cellular automata

Dec 14, 2022

Abstract: Lenia is a family of cellular automata (CA) generalizing Conway's Game of Life to continuous space, time and states. Lenia has attracted a lot of attention because of the wide diversity of self-organizing patterns it can generate. Among those, some spatially localized patterns (SLPs) resemble life-like artificial creatures. However, those creatures are found in only a small subspace of the Lenia parameter space and are not trivial to discover, necessitating advanced search algorithms. We hypothesize that adding a mass conservation constraint could facilitate the emergence of SLPs. We propose here an extension of the Lenia model, called Flow Lenia, which enables mass conservation. We show a few observations demonstrating its effectiveness in generating SLPs with complex behaviors. Furthermore, we show how Flow Lenia enables the integration of the parameters of the CA update rules within the CA dynamics, making them dynamic and localized. This allows for multi-species simulations, with locally coherent update rules that define properties of the emerging creatures, and that can be mixed with neighbouring rules. We argue that this paves the way for the intrinsic evolution of self-organized artificial life forms within continuous CAs.
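As a rough illustration of what a mass conservation constraint means for a cellular automaton update, the sketch below moves mass along a given flow on a 1D ring instead of adding or removing it. This is a simplified toy under stated assumptions, not the Flow Lenia update rule: the function `transport_step`, the 1D periodic grid, the externally supplied `flow` values, and the linear split between the two nearest cells are all hypothetical choices made for clarity.

```python
import math


def transport_step(mass, flow):
    """Move each cell's mass by its flow value on a periodic 1D grid.

    Every cell deposits all of its mass into the two cells nearest to its
    continuous destination, with shares that sum to one, so the total mass
    is unchanged by construction.
    """
    n = len(mass)
    new_mass = [0.0] * n
    for i, (m, f) in enumerate(zip(mass, flow)):
        target = i + f               # continuous destination of this cell's mass
        left = math.floor(target)    # index of the cell just below the target
        frac = target - left         # share going to the cell above
        new_mass[left % n] += m * (1.0 - frac)
        new_mass[(left + 1) % n] += m * frac
    return new_mass


# Hypothetical example values: two occupied cells moving in opposite directions.
mass = [0.0, 0.0, 1.0, 0.5, 0.0]
flow = [0.0, 0.0, 0.4, -1.25, 0.0]
moved = transport_step(mass, flow)
print("total before:", sum(mass))
print("total after: ", sum(moved))
```

Because mass is only relocated, patterns cannot grow without bound or vanish, which is the property the abstract hypothesizes could make spatially localized patterns easier to obtain.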
