Elijah Kagan

Imandra CodeLogician: Neuro-Symbolic Reasoning for Precise Analysis of Software Logic

Jan 17, 2026Abstract:Large Language Models (LLMs) have shown strong performance on code understanding tasks, yet they fundamentally lack the ability to perform precise, exhaustive mathematical reasoning about program behavior. Existing benchmarks either focus on mathematical proof automation, largely disconnected from real-world software, or on engineering tasks that do not require semantic rigor. We present CodeLogician, a neurosymbolic agent for precise analysis of software logic, integrated with ImandraX, an industrial automated reasoning engine deployed in financial markets and safety-critical systems. Unlike prior approaches that use formal methods primarily to validate LLM outputs, CodeLogician uses LLMs to construct explicit formal models of software systems, enabling automated reasoning to answer rich semantic questions beyond binary verification outcomes. To rigorously evaluate mathematical reasoning about software logic, we introduce code-logic-bench, a benchmark targeting the middle ground between theorem proving and software engineering benchmarks. It measures reasoning correctness about program state spaces, control flow, coverage constraints, and edge cases, with ground truth defined via formal modeling and region decomposition. Comparing LLM-only reasoning against LLMs augmented with CodeLogician, formal augmentation yields substantial improvements, closing a 41-47 percentage point gap in reasoning accuracy. These results demonstrate that neurosymbolic integration is essential for scaling program analysis toward rigorous, autonomous software understanding.

The Imandra Automated Reasoning System (system description)

Apr 21, 2020

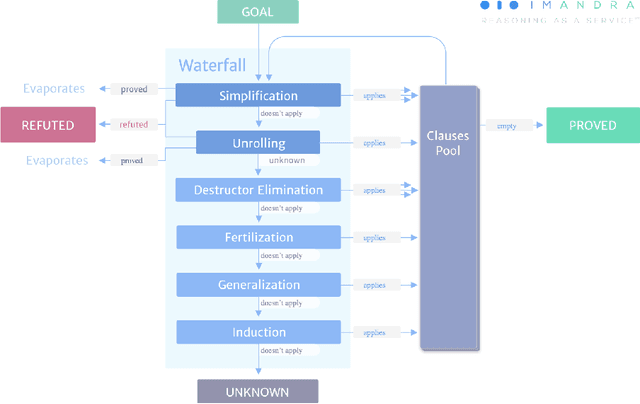

Abstract:We describe Imandra, a modern computational logic theorem prover designed to bridge the gap between decision procedures such as SMT, semi-automatic inductive provers of the Boyer-Moore family like ACL2, and interactive proof assistants for typed higher-order logics. Imandra's logic is computational, based on a pure subset of OCaml in which all functions are terminating, with restrictions on types and higher-order functions that allow conjectures to be translated into multi-sorted first-order logic with theories, including arithmetic and datatypes. Imandra has novel features supporting large-scale industrial applications, including a seamless integration of bounded and unbounded verification, first-class computable counterexamples, efficiently executable models and a cloud-native architecture supporting live multiuser collaboration. The core reasoning mechanisms of Imandra are (i) a semi-complete procedure for finding models of formulas in the logic mentioned above, centered around the lazy expansion of recursive functions, and (ii) an inductive waterfall and simplifier which "lifts" many Boyer-Moore ideas to our typed higher-order setting. These mechanisms are tightly integrated and subject to many forms of user control. Imandra's user interfaces include an interactive toplevel, Jupyter notebooks and asynchronous document-based verification (in the spirit of Isabelle's Prover IDE) with VS Code.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge