Christoph M. Wintersteiger

Imandra CodeLogician: Neuro-Symbolic Reasoning for Precise Analysis of Software Logic

Jan 17, 2026Abstract:Large Language Models (LLMs) have shown strong performance on code understanding tasks, yet they fundamentally lack the ability to perform precise, exhaustive mathematical reasoning about program behavior. Existing benchmarks either focus on mathematical proof automation, largely disconnected from real-world software, or on engineering tasks that do not require semantic rigor. We present CodeLogician, a neurosymbolic agent for precise analysis of software logic, integrated with ImandraX, an industrial automated reasoning engine deployed in financial markets and safety-critical systems. Unlike prior approaches that use formal methods primarily to validate LLM outputs, CodeLogician uses LLMs to construct explicit formal models of software systems, enabling automated reasoning to answer rich semantic questions beyond binary verification outcomes. To rigorously evaluate mathematical reasoning about software logic, we introduce code-logic-bench, a benchmark targeting the middle ground between theorem proving and software engineering benchmarks. It measures reasoning correctness about program state spaces, control flow, coverage constraints, and edge cases, with ground truth defined via formal modeling and region decomposition. Comparing LLM-only reasoning against LLMs augmented with CodeLogician, formal augmentation yields substantial improvements, closing a 41-47 percentage point gap in reasoning accuracy. These results demonstrate that neurosymbolic integration is essential for scaling program analysis toward rigorous, autonomous software understanding.

Exploring Approximations for Floating-Point Arithmetic using UppSAT

Dec 11, 2017

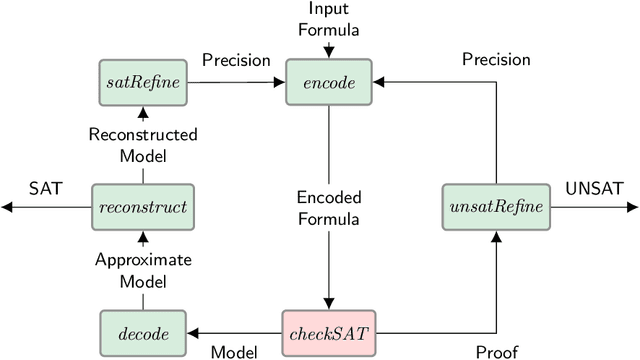

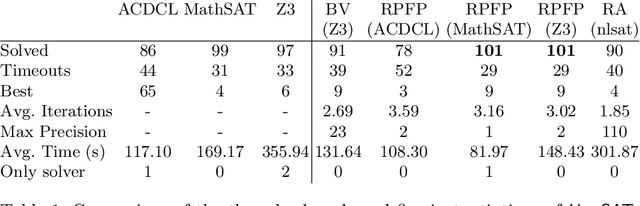

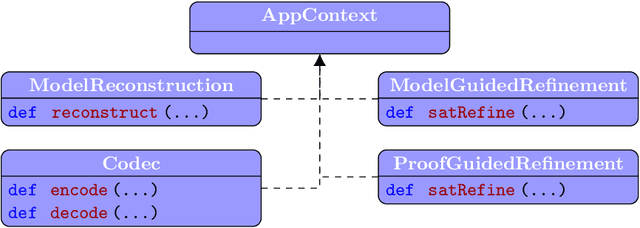

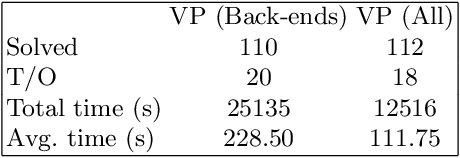

Abstract:We consider the problem of solving floating-point constraints obtained from software verification. We present UppSAT --- a new implementation of a systematic approximation refinement framework [ZWR17] as an abstract SMT solver. Provided with an approximation and a decision procedure (implemented in an off-the-shelf SMT solver), UppSAT yields an approximating SMT solver. Additionally, UppSAT includes a library of predefined approximation components which can be combined and extended to define new encodings, orderings and solving strategies. We propose that UppSAT can be used as a sandbox for easy and flexible exploration of new approximations. To substantiate this, we explore several approximations of floating-point arithmetic. Approximations can be viewed as a composition of an encoding into a target theory, a precision ordering, and a number of strategies for model reconstruction and precision (or approximation) refinement. We present encodings of floating-point arithmetic into reduced precision floating-point arithmetic, real-arithmetic, and fixed-point arithmetic (encoded in the theory of bit-vectors). In an experimental evaluation, we compare the advantages and disadvantages of approximating solvers obtained by combining various encodings and decision procedures (based on existing state-of-the-art SMT solvers for floating-point, real, and bit-vector arithmetic).

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge