Duen Horng Chau

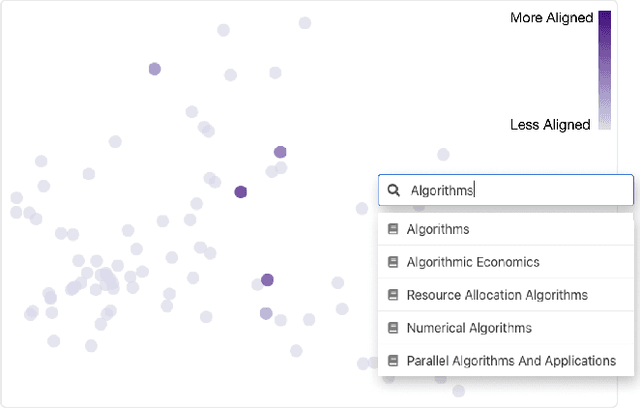

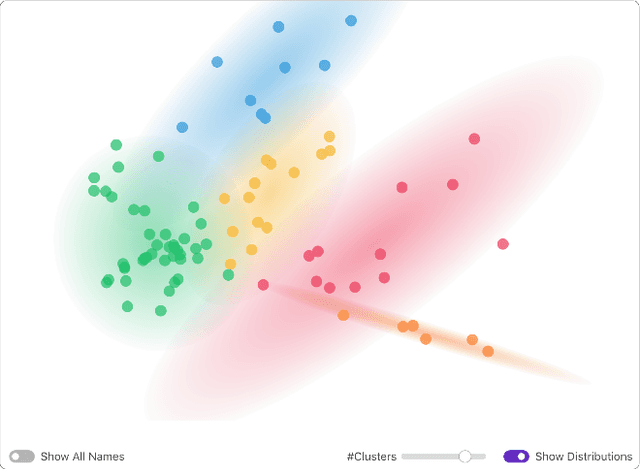

Mapping Researchers with PeopleMap

Aug 31, 2020

Abstract: Discovering research expertise at universities can be a difficult task. Directories routinely become outdated, and few help in visually summarizing researchers' work or supporting the exploration of shared interests among researchers. This results in lost opportunities for both internal and external entities to discover new connections, nurture research collaboration, and explore the diversity of research. To address this problem, at Georgia Tech, we have been developing PeopleMap, an open-source interactive web-based tool that uses natural language processing (NLP) to create visual maps for researchers based on their research interests and publications. Requiring only the researchers' Google Scholar profiles as input, PeopleMap generates and visualizes embeddings for the researchers, significantly reducing the need for manual curation of publication information. To encourage and facilitate easy adoption and extension of PeopleMap, we have open-sourced it under the permissive MIT license at https://github.com/poloclub/people-map. PeopleMap has received positive feedback and enthusiasm for expanding its adoption across Georgia Tech.

PeopleMap: Visualization Tool for Mapping Out Researchers using Natural Language Processing

Jun 10, 2020

Abstract: Discovering research expertise at institutions can be a difficult task. Manually curated university directories easily become out of date and they often lack the information necessary for understanding a researcher's interests and past work, making it harder to explore the diversity of research at an institution and identify research talents. This results in lost opportunities for both internal and external entities to discover new connections and nurture research collaboration. To solve this problem, we have developed PeopleMap, the first interactive, open-source, web-based tool that visually "maps out" researchers based on their research interests and publications by leveraging embeddings generated by natural language processing (NLP) techniques. PeopleMap provides a new engaging way for institutions to summarize their research talents and for people to discover new connections. The platform is developed with ease-of-use and sustainability in mind. Using only researchers' Google Scholar profiles as input, PeopleMap can be readily adopted by any institution using its publicly-accessible repository and detailed documentation.

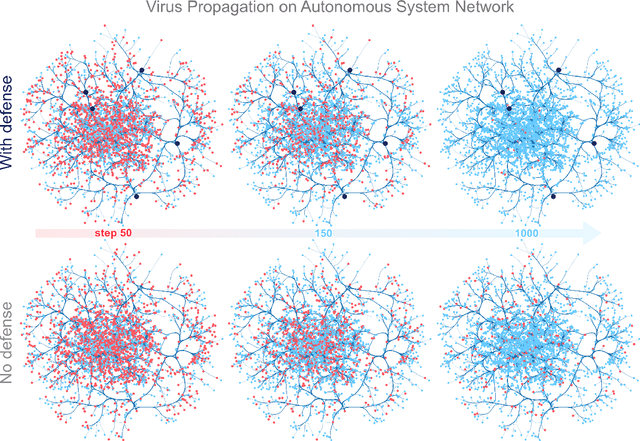

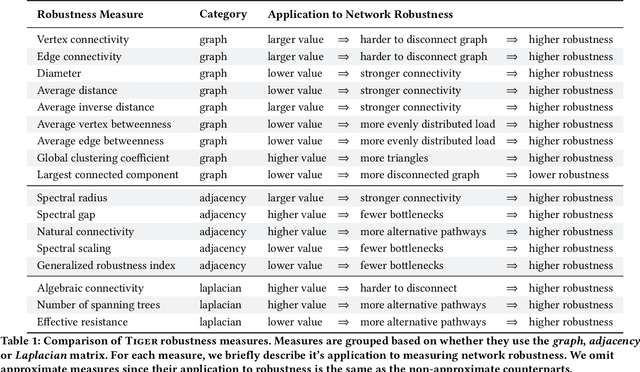

Evaluating Graph Vulnerability and Robustness using TIGER

Jun 10, 2020

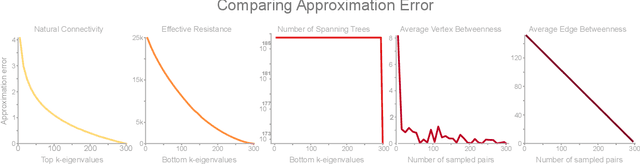

Abstract: The study of network robustness is a critical tool in the characterization and understanding of complex interconnected systems such as transportation, infrastructure, communication, and computer networks. Through analyzing and understanding the robustness of these networks we can: (1) quantify network vulnerability and robustness, (2) augment a network's structure to resist attacks and recover from failure, and (3) control the dissemination of entities on the network (e.g., viruses, propaganda). While significant research has been conducted on all of these tasks, no comprehensive open-source toolbox currently exists to assist researchers and practitioners in this important topic. This lack of available tools hinders reproducibility and examination of existing work, development of new research, and dissemination of new ideas. We contribute TIGER, an open-sourced Python toolbox to address these challenges. TIGER contains 22 graph robustness measures with both original and fast approximate versions; 17 failure and attack strategies; 15 heuristic and optimization based defense techniques; and 4 simulation tools. By democratizing the tools required to study network robustness, our goal is to assist researchers and practitioners in analyzing their own networks; and facilitate the development of new research in the field. TIGER is open-sourced at: https://github.com/safreita1/TIGER
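To make the idea of measuring robustness under attack concrete, here is a minimal sketch in plain Python. It does not use TIGER's API; the function names are hypothetical, and the choices of "largest connected component size" as the robustness measure and "remove the highest-degree node" as the attack strategy are assumptions made for illustration only.

```python
from collections import deque


def largest_component_size(adj):
    """Size of the largest connected component, found by breadth-first search."""
    seen = set()
    best = 0
    for start in adj:
        if start in seen:
            continue
        seen.add(start)
        queue = deque([start])
        size = 0
        while queue:
            node = queue.popleft()
            size += 1
            for neighbor in adj[node]:
                if neighbor not in seen:
                    seen.add(neighbor)
                    queue.append(neighbor)
        best = max(best, size)
    return best


def remove_node(adj, node):
    """Return a copy of the graph without `node` and its incident edges."""
    return {u: nbrs - {node} for u, nbrs in adj.items() if u != node}


def degree_attack(adj, steps):
    """Repeatedly remove the highest-degree node (illustrative attack).

    Returns the largest-component size before the attack and after each removal.
    """
    sizes = [largest_component_size(adj)]
    for _ in range(steps):
        if not adj:
            break
        target = max(adj, key=lambda u: len(adj[u]))
        adj = remove_node(adj, target)
        sizes.append(largest_component_size(adj))
    return sizes


# A star graph: node 0 is connected to four leaves.
star = {0: {1, 2, 3, 4}, 1: {0}, 2: {0}, 3: {0}, 4: {0}}
print(degree_attack(star, 1))
```

On the star graph, removing the hub disconnects every leaf, so the largest component shrinks from five nodes to one after a single removal.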

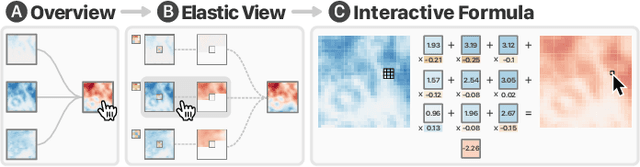

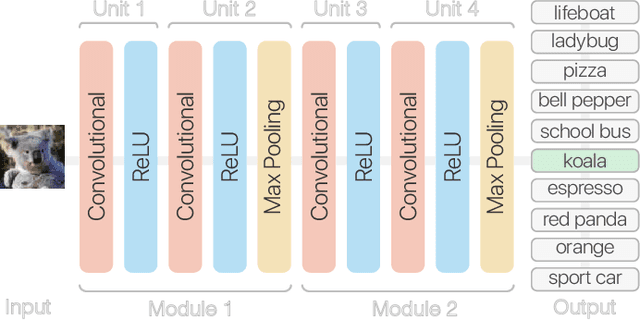

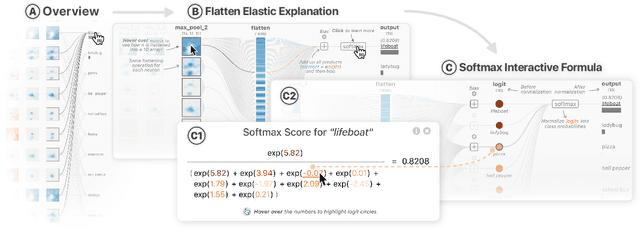

CNN Explainer: Learning Convolutional Neural Networks with Interactive Visualization

May 01, 2020

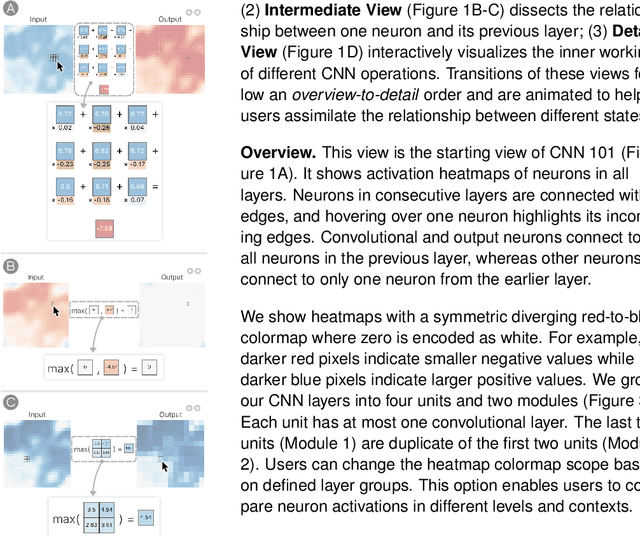

Abstract: Deep learning's great success motivates many practitioners and students to learn about this exciting technology. However, it is often challenging for beginners to take their first step due to the complexity of understanding and applying deep learning. We present CNN Explainer, an interactive visualization tool designed for non-experts to learn and examine convolutional neural networks (CNNs), a foundational deep learning model architecture. Our tool addresses key challenges that novices face while learning about CNNs, which we identify from interviews with instructors and a survey with past students. Users can interactively visualize and inspect the data transformation and flow of intermediate results in a CNN. CNN Explainer tightly integrates a model overview that summarizes a CNN's structure, and on-demand, dynamic visual explanation views that help users understand the underlying components of CNNs. Through smooth transitions across levels of abstraction, our tool enables users to inspect the interplay between low-level operations (e.g., mathematical computations) and high-level outcomes (e.g., class predictions). To better understand our tool's benefits, we conducted a qualitative user study, which shows that CNN Explainer can help users more easily understand the inner workings of CNNs, and is engaging and enjoyable to use. We also derive design lessons from our study. Developed using modern web technologies, CNN Explainer runs locally in users' web browsers without the need for installation or specialized hardware, broadening the public's education access to modern deep learning techniques.

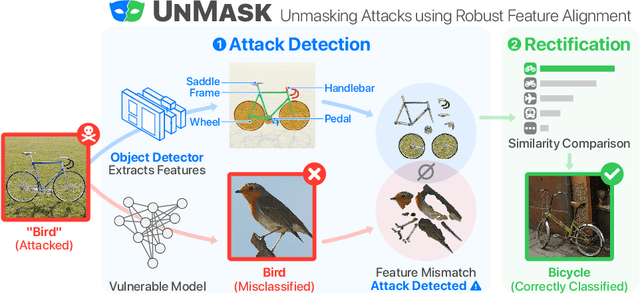

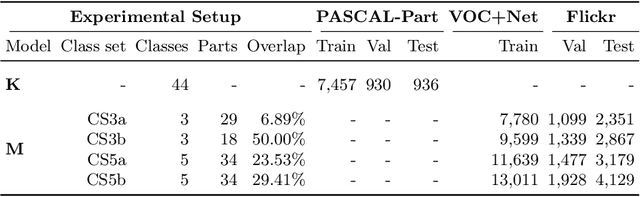

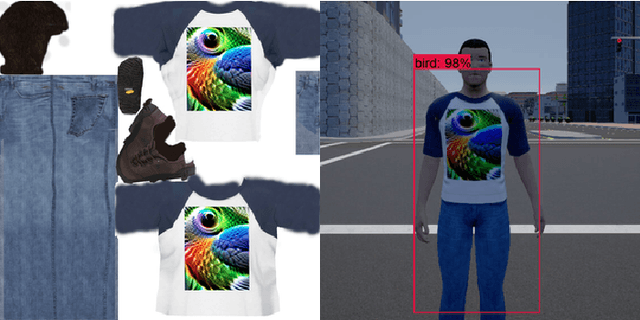

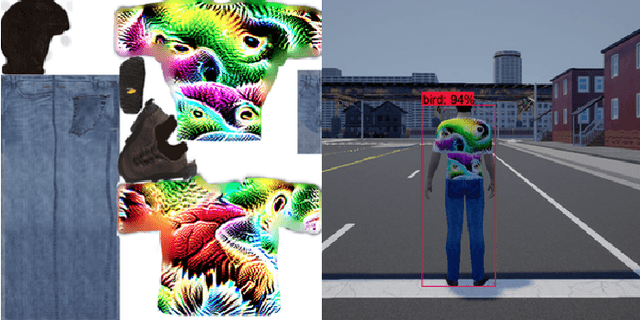

UnMask: Adversarial Detection and Defense Through Robust Feature Alignment

Feb 21, 2020

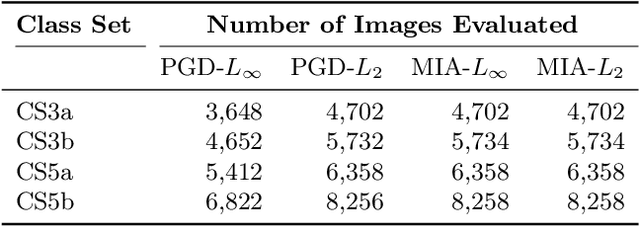

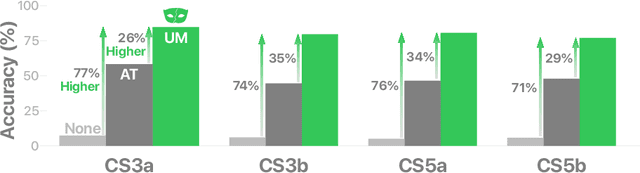

Abstract: Deep learning models are being integrated into a wide range of high-impact, security-critical systems, from self-driving cars to medical diagnosis. However, recent research has demonstrated that many of these deep learning architectures are vulnerable to adversarial attacks, highlighting the vital need for defensive techniques to detect and mitigate these attacks before they occur. To combat these adversarial attacks, we developed UnMask, an adversarial detection and defense framework based on robust feature alignment. The core idea behind UnMask is to protect these models by verifying that an image's predicted class ("bird") contains the expected robust features (e.g., beak, wings, eyes). For example, if an image is classified as "bird", but the extracted features are wheel, saddle and frame, the model may be under attack. UnMask detects such attacks and defends the model by rectifying the misclassification, re-classifying the image based on its robust features. Our extensive evaluation shows that UnMask (1) detects up to 96.75% of attacks, with a false positive rate of 9.66% and (2) defends the model by correctly classifying up to 93% of adversarial images produced by the current strongest attack, Projected Gradient Descent, in the gray-box setting. UnMask provides significantly better protection than adversarial training across 8 attack vectors, averaging 31.18% higher accuracy. Our proposed method is architecture agnostic and fast. We open source the code repository and data with this paper: https://github.com/unmaskd/unmask.
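The feature-alignment check described in the abstract can be sketched roughly as follows. This is an illustrative toy, not the UnMask code: the class-to-feature table, the function names, the use of Jaccard similarity as the alignment score, and the 0.5 threshold are all assumptions, and the feature extraction step (which a real system would perform with a separate model) is replaced by a plain list of feature names.

```python
# Hypothetical table of robust features expected for each class.
EXPECTED_FEATURES = {
    "bird": {"beak", "wings", "eyes"},
    "bicycle": {"wheel", "saddle", "frame"},
}


def jaccard(a, b):
    """Jaccard similarity between two sets (1.0 when both are empty)."""
    union = a | b
    return len(a & b) / len(union) if union else 1.0


def check_prediction(predicted_class, extracted_features, threshold=0.5):
    """Flag a prediction whose extracted features do not match its class.

    Returns (is_suspicious, final_class). When the prediction is flagged,
    final_class is the class whose expected features best match the
    extracted ones; otherwise it is the original prediction.
    """
    extracted = set(extracted_features)
    score = jaccard(EXPECTED_FEATURES[predicted_class], extracted)
    is_suspicious = score < threshold
    best_match = max(
        EXPECTED_FEATURES,
        key=lambda c: jaccard(EXPECTED_FEATURES[c], extracted),
    )
    return is_suspicious, (best_match if is_suspicious else predicted_class)


# The abstract's example: classified as "bird", but the features say otherwise.
print(check_prediction("bird", ["wheel", "saddle", "frame"]))
```

With the abstract's example, the "bird" prediction shares no features with the extracted set, so it is flagged and re-classified as the best-matching class in the toy table.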

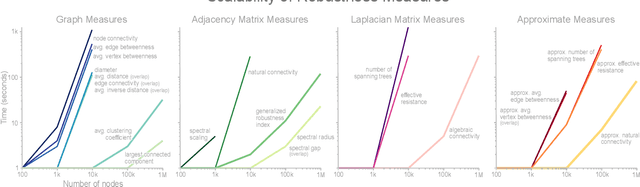

CNN 101: Interactive Visual Learning for Convolutional Neural Networks

Feb 19, 2020

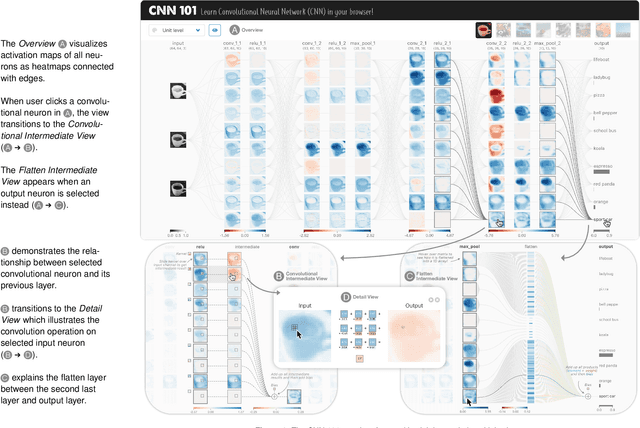

Abstract: The success of deep learning solving previously-thought hard problems has inspired many non-experts to learn and understand this exciting technology. However, it is often challenging for learners to take the first steps due to the complexity of deep learning models. We present our ongoing work, CNN 101, an interactive visualization system for explaining and teaching convolutional neural networks. Through tightly integrated interactive views, CNN 101 offers both overview and detailed descriptions of how a model works. Built using modern web technologies, CNN 101 runs locally in users' web browsers without requiring specialized hardware, broadening the public's education access to modern deep learning techniques.

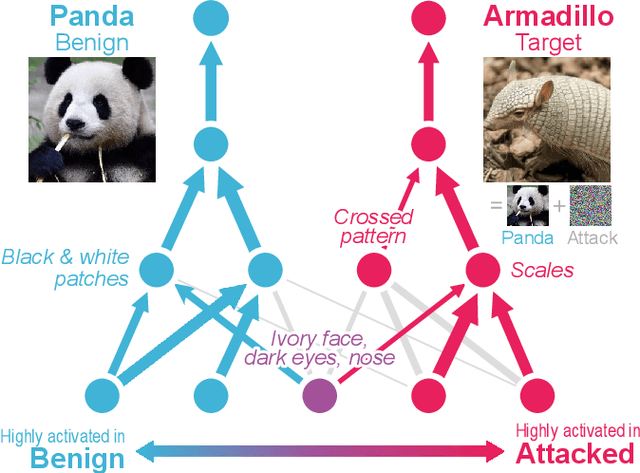

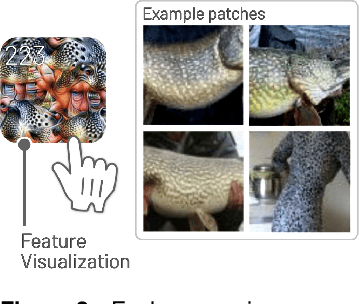

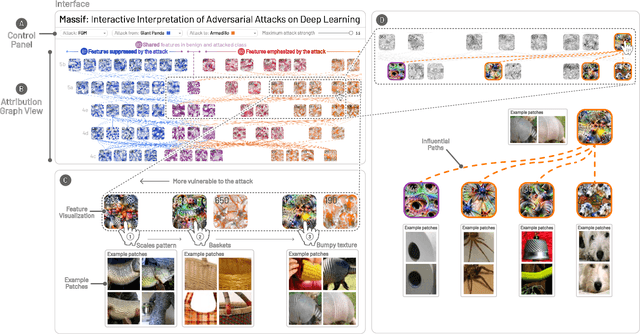

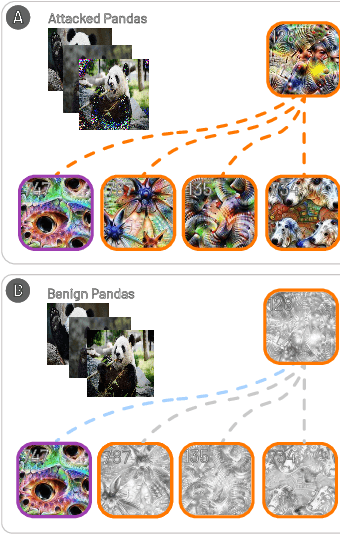

Massif: Interactive Interpretation of Adversarial Attacks on Deep Learning

Feb 16, 2020

Abstract: Deep neural networks (DNNs) are increasingly powering high-stakes applications such as autonomous cars and healthcare; however, DNNs are often treated as "black boxes" in such applications. Recent research has also revealed that DNNs are highly vulnerable to adversarial attacks, raising serious concerns over deploying DNNs in the real world. To overcome these deficiencies, we are developing Massif, an interactive tool for deciphering adversarial attacks. Massif identifies and interactively visualizes neurons and their connections inside a DNN that are strongly activated or suppressed by an adversarial attack. Massif provides both a high-level, interpretable overview of the effect of an attack on a DNN, and a low-level, detailed description of the affected neurons. These tightly coupled views in Massif help people better understand which input features are most vulnerable or important for correct predictions.

REST: Robust and Efficient Neural Networks for Sleep Monitoring in the Wild

Jan 29, 2020

Abstract: In recent years, significant attention has been devoted towards integrating deep learning technologies in the healthcare domain. However, to safely and practically deploy deep learning models for home health monitoring, two significant challenges must be addressed: the models should be (1) robust against noise; and (2) compact and energy-efficient. We propose REST, a new method that simultaneously tackles both issues via 1) adversarial training and controlling the Lipschitz constant of the neural network through spectral regularization while 2) enabling neural network compression through sparsity regularization. We demonstrate that REST produces highly-robust and efficient models that substantially outperform the original full-sized models in the presence of noise. For the sleep staging task over single-channel electroencephalogram (EEG), the REST model achieves a macro-F1 score of 0.67 vs. 0.39 achieved by a state-of-the-art model in the presence of Gaussian noise while obtaining 19x parameter reduction and 15x MFLOPS reduction on two large, real-world EEG datasets. By deploying these models to an Android application on a smartphone, we quantitatively observe that REST allows models to achieve up to 17x energy reduction and 9x faster inference. We open-source the code repository with this paper: https://github.com/duggalrahul/REST.

NeuralDivergence: Exploring and Understanding Neural Networks by Comparing Activation Distributions

Jun 02, 2019

Abstract: As deep neural networks are increasingly used in solving high-stake problems, there is a pressing need to understand their internal decision mechanisms. Visualization has helped address this problem by assisting with interpreting complex deep neural networks. However, current tools often support only single data instances, or visualize layers in isolation. We present NeuralDivergence, an interactive visualization system that uses activation distributions as a high-level summary of what a model has learned. NeuralDivergence enables users to interactively summarize and compare activation distributions across layers, classes, and instances (e.g., pairs of adversarial attacked and benign images), helping them gain better understanding of neural network models.
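As a rough illustration of what comparing two activation distributions can look like numerically, the sketch below bins two lists of activation values into normalized histograms and scores their difference. The abstract does not say which comparison NeuralDivergence uses; the Jensen-Shannon divergence, the function names, and the sample values here are assumptions chosen only for illustration.

```python
import math


def histogram(values, bins, lo, hi):
    """Normalized histogram of `values` over [lo, hi] with equal-width bins."""
    counts = [0] * bins
    width = (hi - lo) / bins
    for v in values:
        # Clamp out-of-range values into the first or last bin.
        idx = max(0, min(int((v - lo) / width), bins - 1))
        counts[idx] += 1
    total = len(values)
    return [c / total for c in counts]


def js_divergence(p, q):
    """Jensen-Shannon divergence (base 2) between two discrete distributions."""
    m = [(a + b) / 2 for a, b in zip(p, q)]

    def kl(x, y):
        return sum(a * math.log2(a / b) for a, b in zip(x, y) if a > 0)

    return 0.5 * kl(p, m) + 0.5 * kl(q, m)


# Made-up activation values for one layer on benign vs. attacked inputs.
benign = [0.1, 0.2, 0.15, 0.3, 0.25]
attacked = [0.7, 0.8, 0.9, 0.75, 0.2]
p = histogram(benign, bins=4, lo=0.0, hi=1.0)
q = histogram(attacked, bins=4, lo=0.0, hi=1.0)
print(js_divergence(p, q))
```

With base-2 logarithms the score lies between 0 (identical distributions) and 1 (no overlap), which makes values comparable across layers with different activation scales once each layer is binned over its own range.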

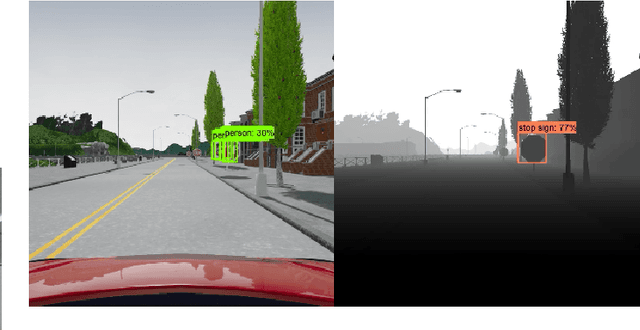

Talk Proposal: Towards the Realistic Evaluation of Evasion Attacks using CARLA

Apr 18, 2019

Abstract: In this talk we describe our content-preserving attack on object detectors, ShapeShifter, and demonstrate how to evaluate this threat in realistic scenarios. We describe how we use CARLA, a realistic urban driving simulator, to create these scenarios, and how we use ShapeShifter to generate content-preserving attacks against those scenarios.
