Daniel Barcklow

Explain What You Mean: Intent Augmented Knowledge Graph Recommender Built With LLM

May 16, 2025

Abstract: Interaction sparsity is the primary obstacle for recommendation systems. Sparsity arises in environments where groups of entities have disproportionate cardinalities, such as users and products in an online marketplace. It also affects newly introduced entities, a situation known as the cold-start problem. Recent efforts to mitigate sparsity shift the performance bottleneck to other parts of the computational pipeline. Methods that enrich sparse representations with connectivity data from external sources are resource-intensive and require careful, domain-expert-aided integration of the newly introduced data. Large Language Model (LLM) based recommenders, in turn, quickly run into limitations in data quality and availability. In this work, we propose the LLM-based Intent Knowledge Graph Recommender (IKGR), a novel framework that leverages retrieval-augmented generation and an encoding approach to construct and densify a knowledge graph. IKGR learns latent user-item affinities from an interaction knowledge graph and further densifies it through mutual intent connectivity. This addresses sparsity and allows the model to make intent-grounded recommendations through an interpretable embedding translation layer. Through extensive experiments on real-world datasets, we demonstrate that IKGR overcomes knowledge gaps and achieves substantial gains over state-of-the-art baselines on both publicly available and our internal recommendation datasets.
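
To make the sparsity argument concrete, the sketch below measures the density of a toy user-item interaction set and then adds a user-item edge wherever a user and an item share an intent. This is a simplified, hypothetical illustration of densification through shared intents, not IKGR itself: the abstract does not specify how intents are extracted or how edges are added, so the toy data, the intent labels (assumed here to come from an LLM), the use of direct user-item edges, and the helper names `density` and `densify_by_shared_intent` are all assumptions.

```python
from itertools import product

# Hypothetical toy data: observed (user, item) interactions are sparse,
# and user u3 is a cold-start user with no interactions at all.
users = ["u1", "u2", "u3"]
items = ["i1", "i2", "i3", "i4"]
interactions = {("u1", "i1"), ("u2", "i3")}

# Hypothetical intent labels, assumed to be produced offline (e.g. by an LLM).
user_intents = {"u1": {"camping"}, "u2": {"cooking"}, "u3": {"camping", "cooking"}}
item_intents = {"i1": {"camping"}, "i2": {"camping"}, "i3": {"cooking"}, "i4": set()}


def density(edges, users, items):
    """Fraction of all possible user-item pairs that are connected."""
    return len(edges) / (len(users) * len(items))


def densify_by_shared_intent(edges, user_intents, item_intents):
    """Return a copy of `edges` plus a user-item edge for every pair sharing an intent."""
    dense = set(edges)
    for u, i in product(user_intents, item_intents):
        if user_intents[u] & item_intents[i]:  # non-empty intersection of intents
            dense.add((u, i))
    return dense


dense = densify_by_shared_intent(interactions, user_intents, item_intents)
print(f"{density(interactions, users, items):.3f}")  # 0.167
print(f"{density(dense, users, items):.3f}")  # 0.500
print(sorted(i for u, i in dense if u == "u3"))  # ['i1', 'i2', 'i3']
```

In this toy example the cold-start user `u3`, who has no observed interactions, becomes connected to three items through shared intents. A full knowledge graph would keep intents as separate nodes rather than collapsing them into direct user-item edges as done here.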

BASED-XAI: Breaking Ablation Studies Down for Explainable Artificial Intelligence

Jul 12, 2022

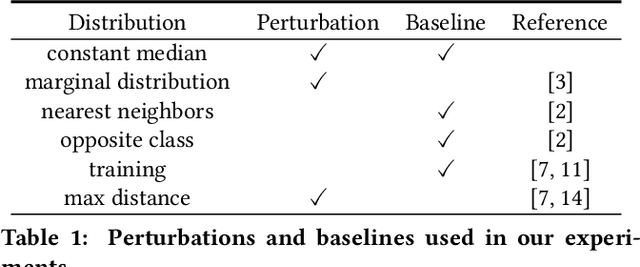

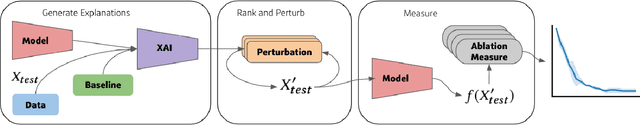

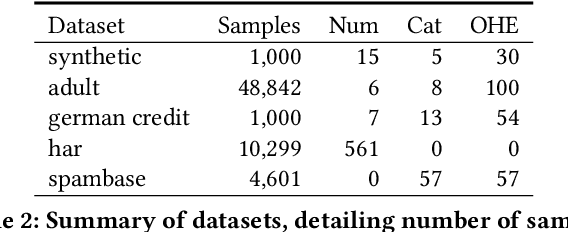

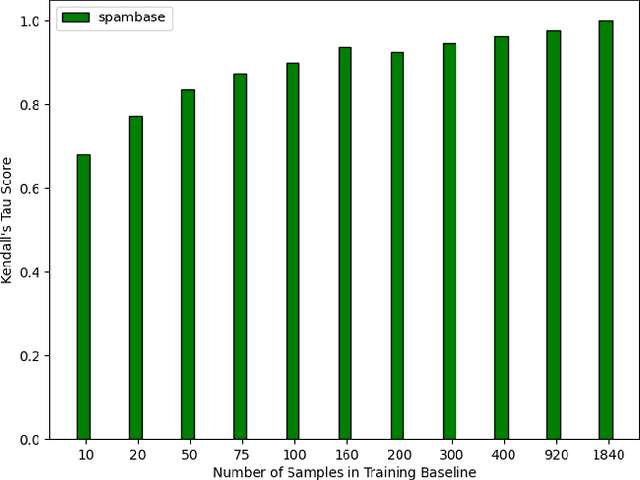

Abstract: Explainable artificial intelligence (XAI) methods lack ground truth. In its absence, method developers have relied on axioms to determine desirable properties for their explanations' behavior. For high-stakes uses of machine learning that require explainability, it is not sufficient to rely on axioms, as the implementation, or its usage, can fail to live up to the ideal. As a result, there is active research on validating the performance of XAI methods. The need for validation is especially magnified in domains that rely on XAI. A procedure frequently used to assess the utility of XAI methods, and to some extent their fidelity, is the ablation study. An ablation study perturbs input variables in rank order of importance to assess the sensitivity of the model's performance. Perturbing important variables should correlate with larger decreases in measures of model capability than perturbing less important ones. While the intent is clear, the implementation details have not been studied rigorously for tabular data. Using five datasets, three XAI methods, four baselines, and three perturbations, we aim to show 1) how varying perturbations and adding simple guardrails can help avoid potentially flawed conclusions, 2) how the treatment of categorical variables is an important consideration in both post-hoc explainability and ablation studies, and 3) how to identify useful baselines for XAI methods and viable perturbations for ablation studies.
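
The ablation procedure described above can be sketched in a few lines: rank features by attribution, cumulatively replace them with a baseline value in that order, and record the model error after each step. This is a minimal sketch under stated assumptions: the synthetic data, the stand-in linear model, the mean-value perturbation, and the importance scores (absolute weight times feature standard deviation, standing in for an XAI method's output) are hypothetical choices, not the datasets, methods, baselines, or perturbations evaluated in the paper.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical tabular data with a known linear ground truth.
n, d = 500, 4
X = rng.normal(size=(n, d))
true_w = np.array([3.0, 2.0, 1.0, 0.0])
y = X @ true_w


def model(X):
    # Stand-in for a trained model: the ground-truth linear map.
    return X @ true_w


def mse(y_true, y_pred):
    return float(np.mean((y_true - y_pred) ** 2))


def ablation_curve(X, y, importance, baseline):
    """Replace features with baseline values cumulatively, most important first,
    recording the model's error before any perturbation and after each step."""
    order = np.argsort(-importance)  # feature indices in descending importance
    X_pert = X.copy()
    errors = [mse(y, model(X_pert))]
    for j in order:
        X_pert[:, j] = baseline[j]
        errors.append(mse(y, model(X_pert)))
    return order, errors


# Hypothetical attribution scores standing in for an XAI method's output.
importance = np.abs(true_w) * X.std(axis=0)
# Perturbation: replace a feature with its column mean (continuous features only).
baseline = X.mean(axis=0)

order, errors = ablation_curve(X, y, importance, baseline)
print(order.tolist())  # [0, 1, 2, 3]
print(np.round(errors, 2).tolist())
```

With these scores, the error increments shrink as less important features are perturbed, which is the pattern a faithful ranking should produce. Mean replacement is only sensible for continuous features; for one-hot encoded categorical variables it yields values no real row can take, which is one reason the treatment of categorical variables matters in ablation studies.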

Persistent Weak Interferer Detection in WiFi Networks: A Deep Learning Based Approach

May 23, 2022

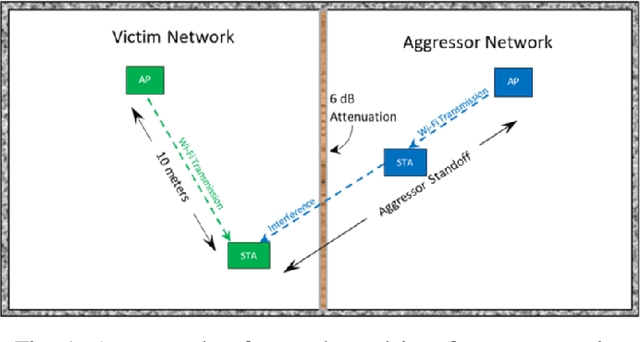

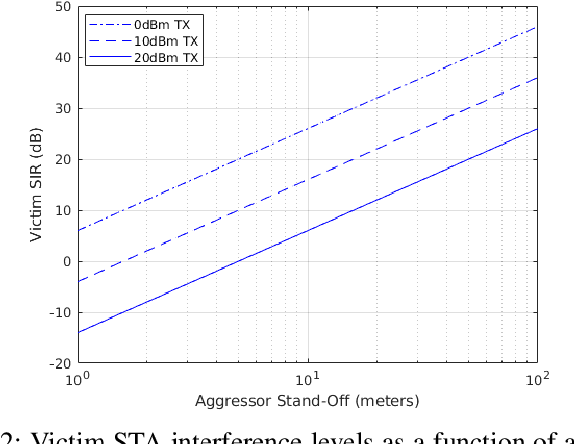

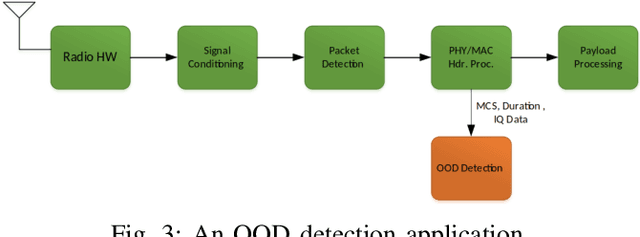

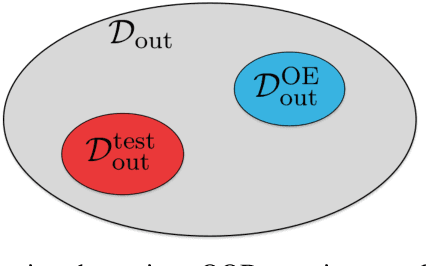

Abstract: In this paper, we explore the use of multiple deep learning techniques to detect weak interference in WiFi networks. Because the interference signal levels involved are low, this scenario tends to be difficult to detect. However, even signal-to-interference ratios exceeding 20 dB can cause significant throughput degradation and latency. Furthermore, the resulting packet error rate may not be high enough to force the WiFi network to fall back to a more robust physical layer configuration. Deep learning applied directly to sampled radio frequency data has the potential to perform detection far more cheaply than successive interference cancellation, which is important for real-time persistent network monitoring. The techniques explored in this work include maximum softmax probability, distance metric learning, variational autoencoders, and autoregressive log-likelihood. We also introduce the notion of generalized outlier exposure for these techniques and show its importance in detecting weak interference. Our results indicate that with outlier exposure, maximum softmax probability, distance metric learning, and autoregressive log-likelihood are capable of reliably detecting interference more than 20 dB below the 802.11-specified minimum sensitivity levels. We believe this presents a unique software solution for real-time, persistent network monitoring.
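
Of the techniques listed, maximum softmax probability (MSP) is the simplest to illustrate: a classifier trained on in-distribution data is applied to an input, and a low maximum class probability is treated as evidence that the input is anomalous. The sketch below assumes hypothetical logits from such a classifier and a hypothetical threshold of 0.6; it does not reproduce the paper's models, input features, or outlier-exposure training.

```python
import numpy as np


def softmax(logits):
    # Subtract the row max for numerical stability before exponentiating.
    z = logits - logits.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)


def msp_score(logits):
    """Maximum softmax probability: high for confident (in-distribution) inputs."""
    return softmax(logits).max(axis=-1)


def flag_interference(logits, threshold):
    """Flag inputs whose MSP falls below the threshold as possible outliers."""
    return msp_score(logits) < threshold


# Hypothetical classifier logits for three captures over three classes.
logits = np.array([
    [8.0, 0.0, 0.0],  # confident prediction
    [1.0, 0.9, 1.1],  # near-uniform, low confidence
    [4.0, 1.0, 0.0],  # fairly confident prediction
])
flags = flag_interference(logits, threshold=0.6)
print(flags.tolist())  # [False, True, False]
```

In practice the threshold would be chosen on held-out data to trade off detection rate against false alarms.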
