Da Kong

Keyframe-Based Feed-Forward Visual Odometry

Jan 22, 2026

Abstract:The emergence of visual foundation models has revolutionized visual odometry (VO) and SLAM, enabling pose estimation and dense reconstruction within a single feed-forward network. However, unlike traditional pipelines that leverage keyframe methods to enhance efficiency and accuracy, current foundation-model-based methods, such as VGGT-Long, typically process raw image sequences indiscriminately. This leads to computational redundancy and degraded performance caused by low inter-frame parallax, which provides limited contextual stereo information. Integrating traditional geometric heuristics into these methods is non-trivial, as their performance depends on high-dimensional latent representations rather than explicit geometric metrics. To bridge this gap, we propose a novel keyframe-based feed-forward VO. Instead of relying on hand-crafted rules, our approach employs reinforcement learning to derive an adaptive keyframe policy in a data-driven manner, aligning selection with the intrinsic characteristics of the underlying foundation model. We train our agent on the TartanAir dataset and conduct extensive evaluations across several real-world datasets. Experimental results demonstrate that the proposed method achieves consistent and substantial improvements over state-of-the-art feed-forward VO methods.

Simplified POMDP Planning with an Alternative Observation Space and Formal Performance Guarantees

Oct 10, 2024

Abstract:Online planning under uncertainty in partially observable domains is an essential capability in robotics and AI. The partially observable Markov decision process (POMDP) is a mathematically principled framework for addressing decision-making problems in this challenging setting. However, finding an optimal solution for POMDPs is computationally expensive and is feasible only for small problems. In this work, we contribute a novel method to simplify POMDPs by switching to an alternative, more compact observation space and a simplified model to speed up planning with formal performance guarantees. We introduce the notion of belief tree topology, which encodes the levels and branches in the tree that use the original and alternative observation spaces and models. Each belief tree topology comes with its own policy space and planning performance. Our key contribution is to derive bounds between the optimal Q-function of the original POMDP and the simplified tree defined by a given topology with a corresponding simplified policy space. These bounds are then used as an adaptation mechanism between different tree topologies until the optimal action of the original POMDP can be determined. Further, we consider a specific instantiation of our framework, where the alternative observation space and model correspond to a setting where the state is fully observable. We evaluate our approach in simulation, considering exact and approximate POMDP solvers and demonstrating a significant speedup while preserving solution quality. We believe this work opens exciting new avenues for online POMDP planning with formal performance guarantees.

A Dataset for Evaluating Multi-spectral Motion Estimation Methods

Jul 01, 2020

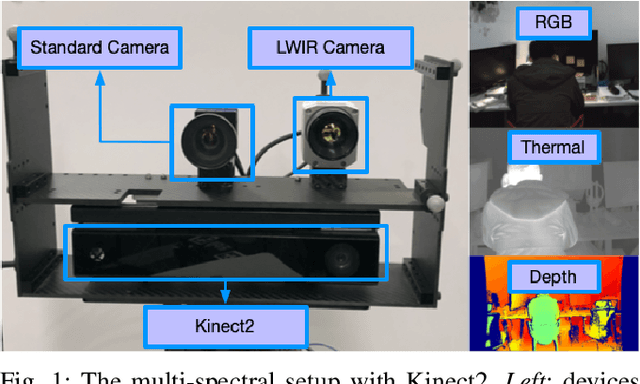

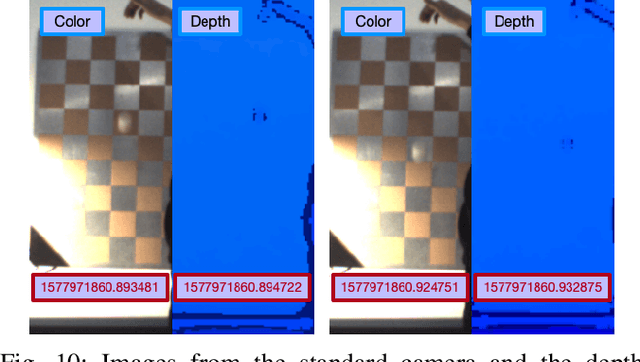

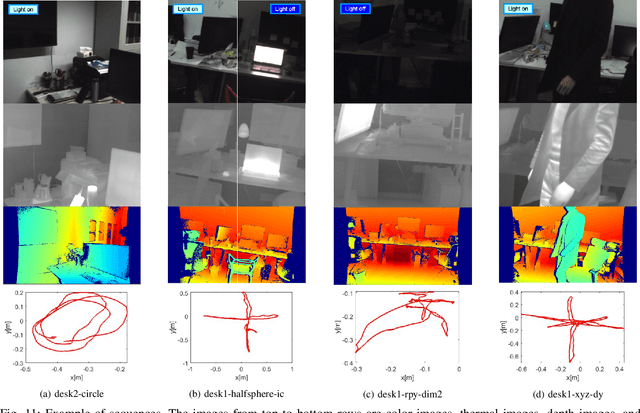

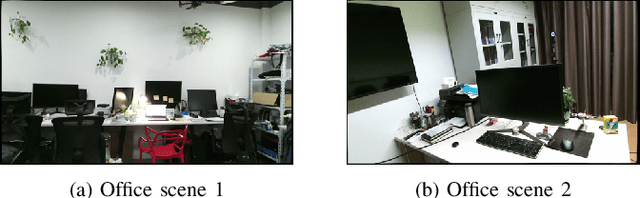

Abstract:Visible images have been widely used for indoor motion estimation. Thermal images, in contrast, are more challenging to use for motion estimation since they typically have lower resolution, less texture, and more noise. In this paper, a novel dataset for evaluating the performance of multi-spectral motion estimation systems is presented. The dataset includes both multi-spectral and dense depth images with accurate ground-truth camera poses provided by a motion capture system. All the sequences are recorded from a handheld multi-spectral device, which consists of a standard visible-light camera, a long-wave infrared camera, and a depth camera. The multi-spectral images, including both color and thermal images in full sensor resolution (640 $\times$ 480), are obtained from the hardware-synchronized standard and long-wave infrared cameras at 32 Hz. The depth images are captured by a Microsoft Kinect2 and can benefit the learning of cross-modal stereo matching. In addition to the sequences with bright illumination, the dataset also contains scenes with dim or varying illumination. The full dataset, including both raw data and calibration data with detailed specifications of the data format, is publicly available.
