Cristian Tatino

Generalization in Reinforcement Learning for Radio Access Networks

Jul 09, 2025

Abstract:Modern RANs operate in highly dynamic and heterogeneous environments, where hand-tuned, rule-based RRM algorithms often underperform. While RL can surpass such heuristics in constrained settings, the diversity of deployments and unpredictable radio conditions introduce major generalization challenges. Data-driven policies frequently overfit to training conditions, degrading performance in unseen scenarios. To address this, we propose a generalization-centered RL framework for RAN control that: (i) encodes cell topology and node attributes via attention-based graph representations; (ii) applies domain randomization to broaden the training distribution; and (iii) distributes data generation across multiple actors while centralizing training in a cloud-compatible architecture aligned with O-RAN principles. Although generalization increases computational and data-management complexity, our distributed design mitigates this by scaling data collection and training across diverse network conditions. Applied to downlink link adaptation in five 5G benchmarks, our policy improves average throughput and spectral efficiency by ~10% over an OLLA baseline (10% BLER target) in full-buffer MIMO/mMIMO and by >20% under high mobility. It matches specialized RL in full-buffer traffic and achieves up to 4-fold and 2-fold gains in eMBB and mixed-traffic benchmarks, respectively. In nine-cell deployments, GAT models deliver 30% higher throughput than MLP baselines. These results, combined with our scalable architecture, offer a path toward AI-native 6G RAN using a single, generalizable RL agent.

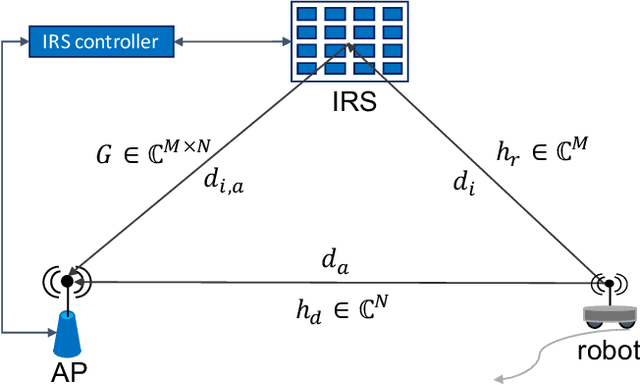

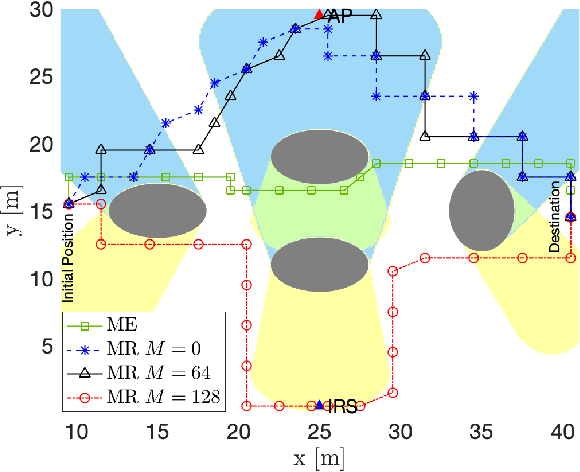

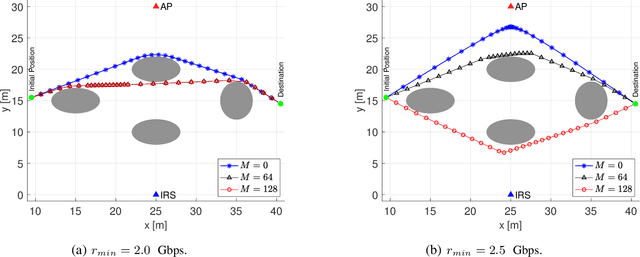

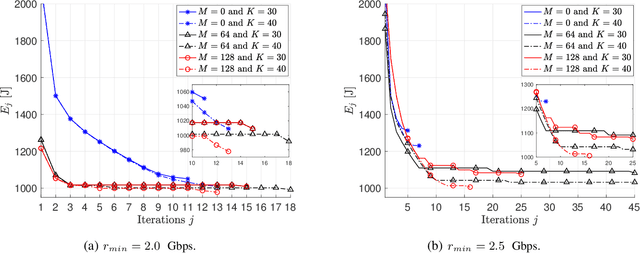

QoS Aware Robot Trajectory Optimization with IRS-Assisted Millimeter-Wave Communications

Dec 19, 2020

Abstract:This paper considers the motion energy minimization problem for a wirelessly connected robot using millimeter-wave (mm-wave) communications. The communications are assisted by an intelligent reflective surface (IRS), which enhances coverage at these high frequencies, where links are highly sensitive to blockage. The robot is subject to time and uplink communication quality of service (QoS) constraints. This is a fundamental problem in the fully automated factories that characterize Industry 4.0, where robots may have to perform tasks with given deadlines while maximizing battery autonomy and communication efficiency. To account for the mutual dependence between robot position and communication QoS, we propose a joint optimization of the robot trajectory and the beamforming at the IRS and access point (AP). We present a solution that first exploits mm-wave channel characteristics to decouple beamforming and trajectory optimization. The trajectory optimization is then solved by an algorithm based on successive convex optimization. The algorithm takes into account the obstacles' positions and a radio map to avoid collisions and poorly covered areas. We prove that the algorithm converges to a solution satisfying the Karush-Kuhn-Tucker (KKT) conditions. The simulation results show a dramatic reduction of the motion energy consumption with respect to methods that aim to find maximum-rate trajectories. Moreover, we show how the IRS and the beamforming optimization improve the motion energy efficiency of the robot.

Multi-Robot Association-Path Planning in Millimeter-Wave Industrial Scenarios

Mar 21, 2020

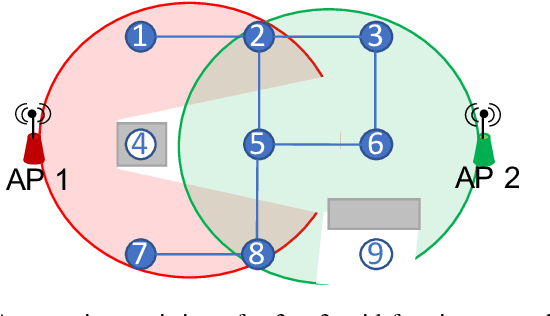

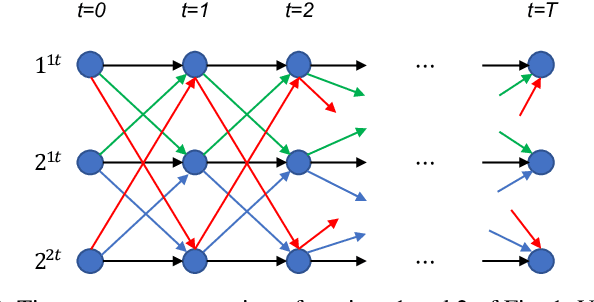

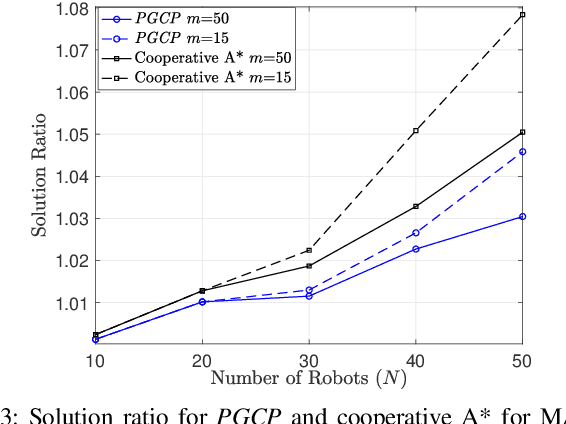

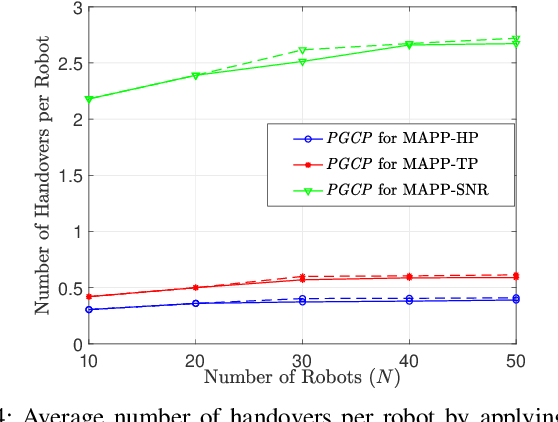

Abstract:The massive exploitation of robots for Industry 4.0 needs advanced wireless solutions that replace less flexible and more costly wired networks. In this regard, millimeter-waves (mm-waves) can provide high data rates, but they are characterized by spotty coverage that requires dense radio deployments. In such scenarios, coverage holes and numerous handovers may decrease the communication throughput and reliability. In contrast to conventional multi-robot path planning (MPP), we define a class of multi-robot association-path planning (MAPP) problems that aim to jointly optimize the robots' paths and the robot-access point (AP) associations. In MAPP, we focus on minimizing the path lengths as well as the number of handovers while sustaining connectivity. We propose an algorithm that solves MAPP in polynomial time and numerically approaches the global optimum. We show that the proposed solution is able to guarantee network connectivity and to dramatically reduce the number of handovers in comparison to minimizing only the path lengths.

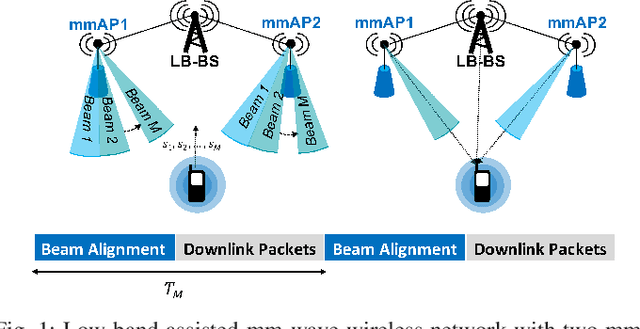

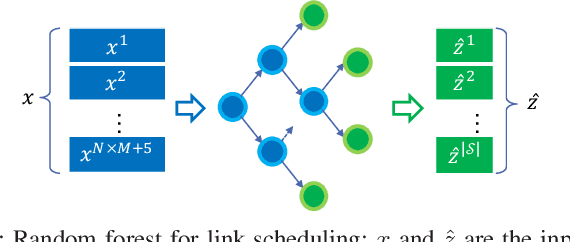

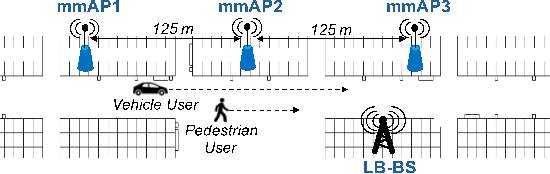

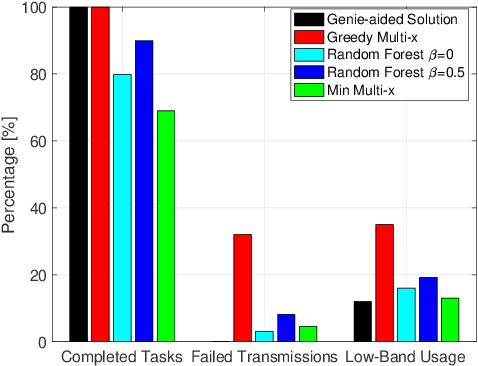

Learning-Based Link Scheduling in Millimeter-wave Multi-connectivity Scenarios

Mar 02, 2020

Abstract:Multi-connectivity is emerging as a promising solution to provide reliable communications and seamless connectivity in the millimeter-wave frequency range. Due to the blockage sensitivity at such high frequencies, connectivity with multiple cells can drastically increase the network performance in terms of throughput and reliability. However, inefficient link scheduling, i.e., over- or under-provisioning of connections, can lead either to high interference and energy consumption or to unsatisfied user quality of service (QoS) requirements. In this work, we present a learning-based solution that learns and then predicts the optimal link scheduling to satisfy users' QoS requirements while avoiding communication interruptions. Moreover, we compare the proposed approach with two baseline methods and with genie-aided link scheduling, which assumes perfect channel knowledge. We show that the learning-based solution approaches the optimum and outperforms the baseline methods.
