Burak Demirel

From Intents to Actions: Agentic AI in Autonomous Networks

Feb 01, 2026

Abstract:Telecommunication networks are increasingly expected to operate autonomously while supporting heterogeneous services with diverse and often conflicting intents -- that is, performance objectives, constraints, and requirements specific to each service. However, transforming high-level intents -- such as ultra-low latency, high throughput, or energy efficiency -- into concrete control actions (i.e., low-level actuator commands) remains beyond the capability of existing heuristic approaches. This work introduces an Agentic AI system for intent-driven autonomous networks, structured around three specialized agents. A supervisory interpreter agent, powered by language models, performs both lexical parsing of intents into executable optimization templates and cognitive refinement based on feedback, constraint feasibility, and evolving network conditions. An optimizer agent converts these templates into tractable optimization problems, analyzes trade-offs, and derives preferences across objectives. Lastly, a preference-driven controller agent, based on multi-objective reinforcement learning, leverages these preferences to operate near the Pareto frontier of network performance that best satisfies the original intent. Collectively, these agents enable networks to autonomously interpret, reason over, adapt to, and act upon diverse intents and network conditions in a scalable manner.
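As a rough illustration of how a preference vector can steer a multi-objective controller, the sketch below collapses a vector of per-objective rewards into a single scalar using normalized preference weights (linear scalarization). The abstract does not say which scalarization the paper uses, so the function name `scalarize`, the two-objective example, and the weighting scheme are all assumptions made for illustration.

```python
def scalarize(reward_vector, preferences):
    """Collapse per-objective rewards into one scalar via preference weights.

    Hypothetical helper: linear scalarization is one common choice in
    multi-objective RL, not necessarily the method used in the paper.
    """
    if len(reward_vector) != len(preferences):
        raise ValueError("reward_vector and preferences must have equal length")
    if any(w < 0 for w in preferences) or sum(preferences) <= 0:
        raise ValueError("preferences must be non-negative with a positive sum")
    total = sum(preferences)
    # Normalize the weights so they sum to one, then take the weighted sum.
    return sum((w / total) * r for w, r in zip(preferences, reward_vector))


# Example: two objectives (say, throughput and energy efficiency), with the
# first objective preferred 3:1 over the second.
scalar_reward = scalarize([1.0, 0.0], [3, 1])
```

Changing the preference vector moves the operating point along the trade-off between objectives. Linear weights can only reach the convex part of a Pareto front, which is one reason other scalarizations are sometimes preferred.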

Practical Policy Distillation for Reinforcement Learning in Radio Access Networks

Nov 09, 2025

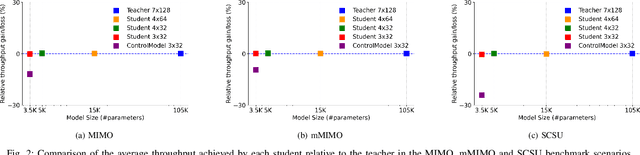

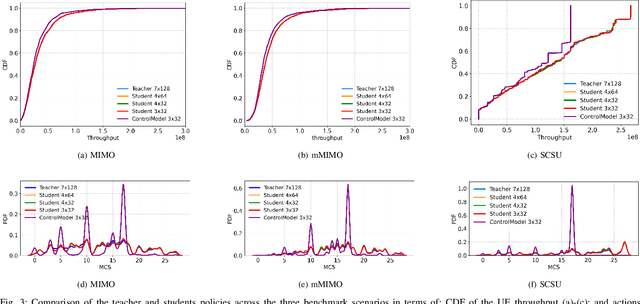

Abstract:Adopting artificial intelligence (AI) in radio access networks (RANs) presents several challenges, including limited availability of link-level measurements (e.g., CQI reports), stringent real-time processing constraints (e.g., sub-1 ms per TTI), and network heterogeneity (different spectrum bands, cell types, and vendor equipment). A critical yet often overlooked barrier lies in the computational and memory limitations of RAN baseband hardware, particularly in legacy 4th Generation (4G) systems, which typically lack on-chip neural accelerators. As a result, only lightweight AI models (under 1 Mb and sub-100 μs inference time) can be effectively deployed, limiting both their performance and applicability. However, achieving strong generalization across diverse network conditions often requires large-scale models with substantial resource demands. To address this trade-off, this paper investigates policy distillation in the context of a reinforcement learning-based link adaptation task. We explore two strategies: single-policy distillation, where a scenario-agnostic teacher model is compressed into one generalized student model; and multi-policy distillation, where multiple scenario-specific teachers are consolidated into a single generalist student. Experimental evaluations in a high-fidelity, 5th Generation (5G)-compliant simulator demonstrate that both strategies produce compact student models that preserve the teachers' generalization capabilities while complying with the computational and memory limitations of existing RAN hardware.
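A minimal sketch of the distillation objective may help make the two strategies concrete. It assumes the common formulation in which the student is trained to minimize the KL divergence between the teacher's and the student's action distributions. The abstract does not specify the loss, so the function names, the temperature parameter, and the averaging over teachers are illustrative assumptions.

```python
import math


def softmax(logits, temperature=1.0):
    """Numerically stable softmax over a list of action logits."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits, student_logits, temperature=1.0):
    """KL(teacher || student) over the action distribution for one state.

    Assumed loss: a standard choice for policy distillation, not confirmed
    by the abstract.
    """
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0.0)


def multi_policy_loss(pairs, temperature=1.0):
    """Average the loss over (teacher_logits, student_logits) pairs.

    Each pair is assumed to come from a different scenario-specific teacher,
    evaluated on a state drawn from that teacher's own scenario.
    """
    losses = [distillation_loss(t, s, temperature) for t, s in pairs]
    return sum(losses) / len(losses)
```

Single-policy distillation corresponds to calling `distillation_loss` with one teacher across all scenarios, while the multi-policy variant averages over teachers so that one student absorbs all of them.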

Generalization in Reinforcement Learning for Radio Access Networks

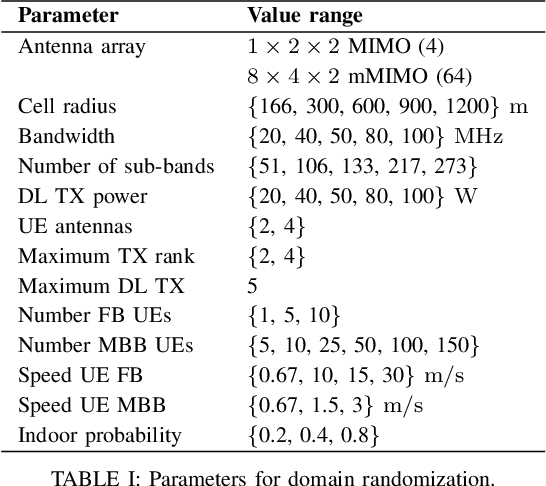

Jul 09, 2025

Abstract:Modern RANs operate in highly dynamic and heterogeneous environments, where hand-tuned, rule-based RRM algorithms often underperform. While RL can surpass such heuristics in constrained settings, the diversity of deployments and unpredictable radio conditions introduce major generalization challenges. Data-driven policies frequently overfit to training conditions, degrading performance in unseen scenarios. To address this, we propose a generalization-centered RL framework for RAN control that: (i) encodes cell topology and node attributes via attention-based graph representations; (ii) applies domain randomization to broaden the training distribution; and (iii) distributes data generation across multiple actors while centralizing training in a cloud-compatible architecture aligned with O-RAN principles. Although generalization increases computational and data-management complexity, our distributed design mitigates this by scaling data collection and training across diverse network conditions. Applied to downlink link adaptation in five 5G benchmarks, our policy improves average throughput and spectral efficiency by ~10% over an OLLA baseline (10% BLER target) in full-buffer MIMO/mMIMO and by >20% under high mobility. It matches specialized RL in full-buffer traffic and achieves up to 4- and 2-fold gains in eMBB and mixed-traffic benchmarks, respectively. In nine-cell deployments, GAT models offer 30% higher throughput over MLP baselines. These results, combined with our scalable architecture, offer a path toward AI-native 6G RAN using a single, generalizable RL agent.
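For readers unfamiliar with the baseline, the following is a minimal sketch of outer-loop link adaptation (OLLA) in its textbook form: an SINR offset is nudged up on every ACK and down on every NACK, with the step ratio chosen so that the long-run block error rate settles at the target. The class name, the 1 dB down-step, and the sign convention are assumptions for illustration and are not taken from the paper's simulator.

```python
class OuterLoopLinkAdaptation:
    """Textbook OLLA sketch: tracks an SINR offset driven by HARQ feedback."""

    def __init__(self, bler_target=0.1, step_down_db=1.0):
        if not 0.0 < bler_target < 1.0:
            raise ValueError("bler_target must lie strictly between 0 and 1")
        self.step_down_db = step_down_db
        # Choosing step_up / step_down = BLER / (1 - BLER) makes the expected
        # offset drift zero exactly when the error rate equals the target.
        self.step_up_db = step_down_db * bler_target / (1.0 - bler_target)
        self.offset_db = 0.0

    def update(self, ack):
        """Apply one HARQ outcome (True for ACK, False for NACK)."""
        if ack:
            self.offset_db += self.step_up_db
        else:
            self.offset_db -= self.step_down_db
        return self.offset_db

    def effective_sinr_db(self, reported_sinr_db):
        """SINR used for MCS selection after applying the offset."""
        return reported_sinr_db + self.offset_db


# With a 10% BLER target, one NACK is balanced by nine ACKs.
olla = OuterLoopLinkAdaptation(bler_target=0.1, step_down_db=1.0)
olla.update(False)
for _ in range(9):
    olla.update(True)
```

The offset adapts only through ACK/NACK feedback with fixed steps, which is the kind of hand-tuned rule the abstract contrasts with a learned policy.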

Design Principles for Generalization and Scalability of AI in Communication Systems

Jun 09, 2023

Abstract:Artificial intelligence (AI) has emerged as a powerful tool for addressing complex and dynamic tasks in communication systems, where traditional rule-based algorithms often struggle. However, most AI applications to networking tasks are designed and trained for specific, limited conditions, hindering the algorithms from learning and adapting to generic situations, such as those met across radio access networks (RAN). This paper proposes design principles for sustainable and scalable AI integration in communication systems, focusing on creating AI algorithms that can generalize across network environments, intents, and control tasks. This approach enables a limited number of AI-driven RAN functions to tackle larger problems, improve system performance, and simplify lifecycle management. To achieve sustainability and automation, we introduce a scalable learning architecture that supports all deployed AI applications in the system. This architecture separates centralized learning functionalities from distributed actuation and inference functions, enabling efficient data collection and management, computational and storage resources optimization, and cost reduction. We illustrate these concepts by designing a generalized link adaptation algorithm, demonstrating the benefits of our proposed approach.

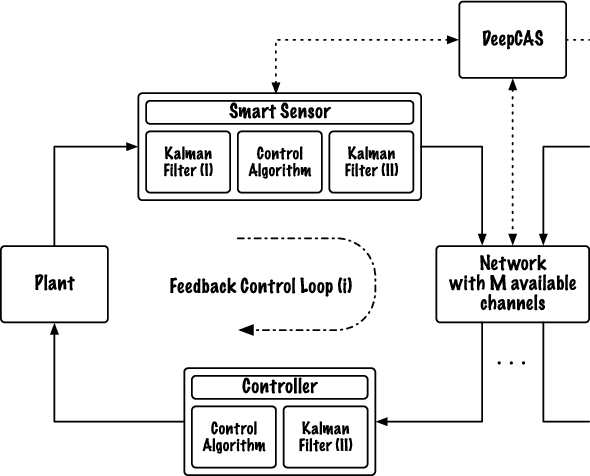

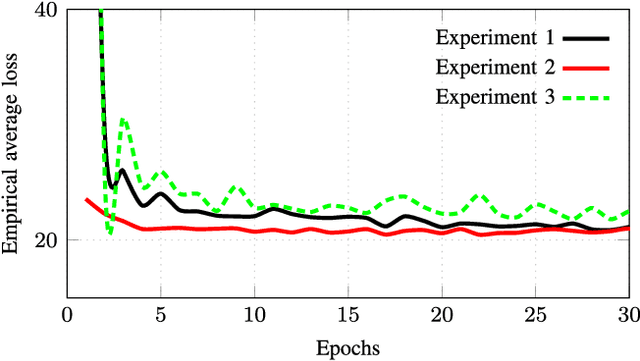

DeepCAS: A Deep Reinforcement Learning Algorithm for Control-Aware Scheduling

Jun 13, 2018

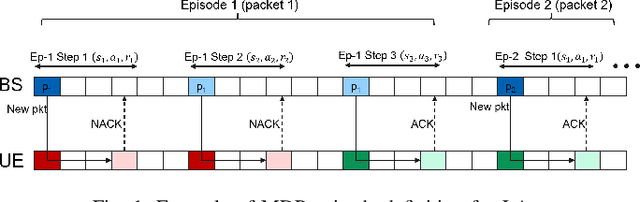

Abstract:We consider networked control systems consisting of multiple independent controlled subsystems, operating over a shared communication network. Such systems are ubiquitous in cyber-physical systems, Internet of Things, and large-scale industrial systems. In many large-scale settings, the size of the communication network is smaller than the size of the system. In consequence, scheduling issues arise. The main contribution of this paper is to develop a deep reinforcement learning-based control-aware scheduling (DeepCAS) algorithm to tackle these issues. We use the following (optimal) design strategy: First, we synthesize an optimal controller for each subsystem; next, we design a learning algorithm that adapts to the chosen subsystems (plants) and controllers. As a consequence of this adaptation, our algorithm finds a schedule that minimizes the control loss. We present empirical results to show that DeepCAS finds schedules with better performance than periodic ones.
