Colin Keil

Towards Long Term SLAM on Thermal Imagery

Mar 28, 2024

Abstract:Visual SLAM with thermal imagery, and in other low-contrast, visually degraded environments such as underwater or in areas dominated by snow and ice, remains a difficult problem for many state-of-the-art (SOTA) algorithms. In addition to challenging front-end data association, thermal imagery presents a further difficulty for long-term relocalization and map reuse: the relative temperatures of objects change dramatically from day to night, and the feature descriptors typically used for relocalization in SLAM cannot maintain consistency over these diurnal changes. We show that learned feature descriptors can be used within existing Bag of Words-based localization schemes to dramatically improve place recognition across large temporal gaps in thermal imagery. To demonstrate the effectiveness of our trained vocabulary, we have developed a baseline SLAM system that integrates learned features and matching into a classical SLAM algorithm. Our system demonstrates good local tracking on challenging thermal imagery, and relocalization that overcomes dramatic day-to-night thermal appearance changes. Our code and datasets are available here: https://github.com/neufieldrobotics/IRSLAM_Baseline

Challenges of Indoor SLAM: A multi-modal multi-floor dataset for SLAM evaluation

Jun 14, 2023

Abstract:Robustness in Simultaneous Localization and Mapping (SLAM) remains one of the key challenges for the real-world deployment of autonomous systems. SLAM research has seen significant progress in the last two and a half decades, yet many state-of-the-art (SOTA) algorithms still struggle to perform reliably in real-world environments. There is a general consensus in the research community that we need challenging real-world scenarios which bring out different failure modes in sensing modalities. In this paper, we present a novel multi-modal indoor SLAM dataset covering challenging common scenarios that a robot will encounter and should be robust to. Our data was collected with a mobile robotics platform across multiple floors at Northeastern University's ISEC building. Such a multi-floor sequence is typical of commercial office spaces characterized by symmetry across floors and, thus, is prone to perceptual aliasing due to similar floor layouts. The sensor suite comprises seven global shutter cameras, a high-grade MEMS inertial measurement unit (IMU), a ZED stereo camera, and a 128-channel high-resolution lidar. Along with the dataset, we benchmark several SLAM algorithms and highlight the problems faced during the runs, such as perceptual aliasing, visual degradation, and trajectory drift. The benchmarking results indicate that parts of the dataset work well with some algorithms, while other data sections are challenging for even the best SOTA algorithms. The dataset is available at https://github.com/neufieldrobotics/NUFR-M3F.

Team Northeastern's Approach to ANA XPRIZE Avatar Final Testing: A Holistic Approach to Telepresence and Lessons Learned

Mar 08, 2023

Abstract:This paper reports on Team Northeastern's Avatar system for telepresence and our holistic approach to meeting the ANA Avatar XPRIZE Final testing task requirements. The system features a dual-arm configuration with a hydraulically actuated glove-gripper pair for haptic force feedback. Our proposed Avatar system was evaluated in the ANA Avatar XPRIZE Finals, where it completed all 10 tasks, scored 14.5 points out of 15.0, and received the 3rd Place Award. We provide the details of improvements over our first-generation Avatar, covering manipulation, perception, locomotion, power, network, and controller design. We also discuss at length the major lessons learned during our participation in the competition.

Efficient and Accurate Candidate Generation for Grasp Pose Detection in SE(3)

Apr 03, 2022

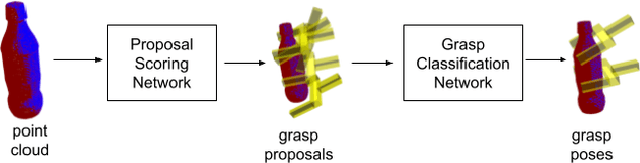

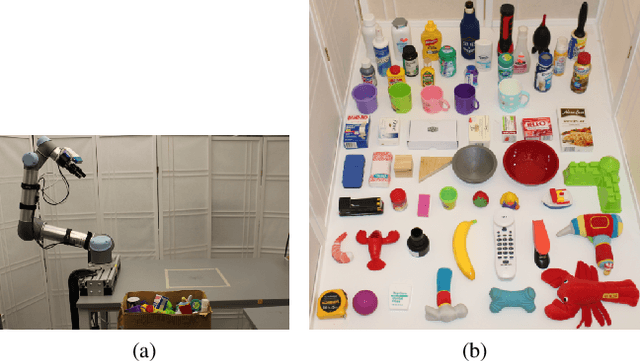

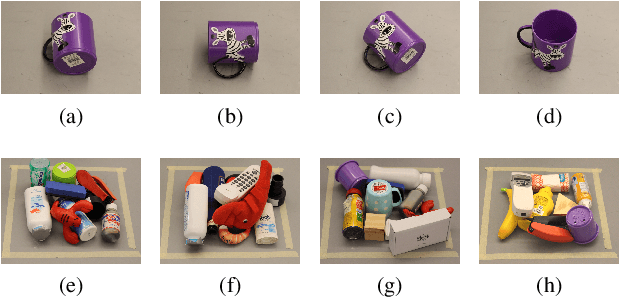

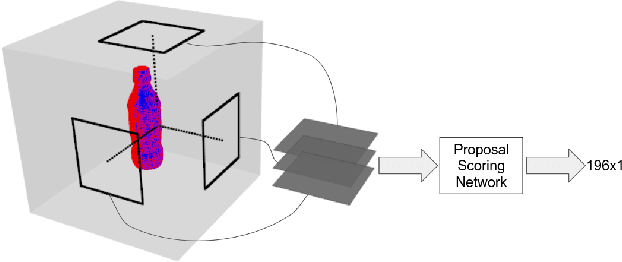

Abstract:Grasp detection of novel objects in unstructured environments is a key capability in robotic manipulation. For 2D grasp detection problems where grasps are assumed to lie in the plane, it is common to design a fully convolutional neural network that predicts grasps over an entire image in one step. However, this is not possible for grasp pose detection where grasp poses are assumed to exist in SE(3). In this case, it is common to approach the problem in two steps: grasp candidate generation and candidate classification. Since grasp candidate classification is typically expensive, the problem becomes one of efficiently identifying high quality candidate grasps. This paper proposes a new grasp candidate generation method that significantly outperforms major 3D grasp detection baselines. Supplementary material is available at https://atenpas.github.io/psn/.

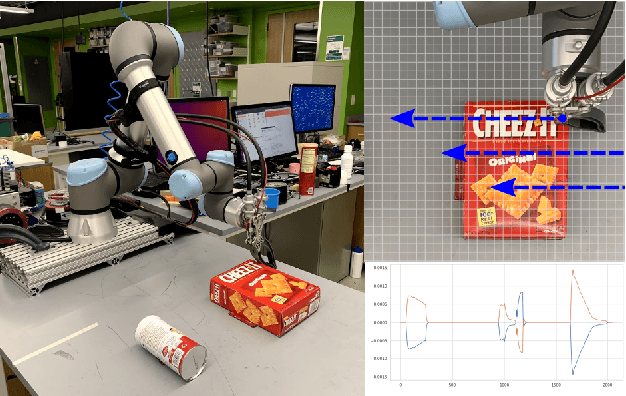

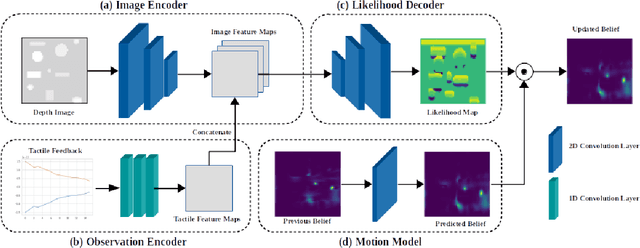

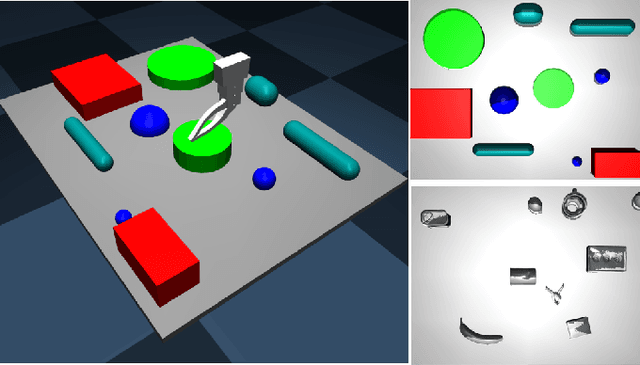

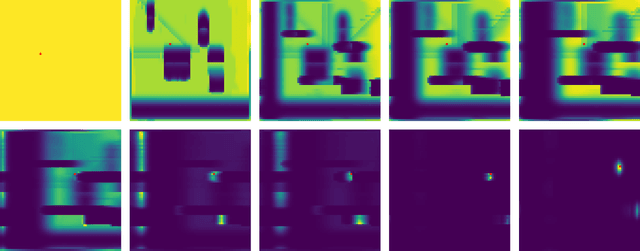

Learning Bayes Filter Models for Tactile Localization

Nov 11, 2020

Abstract:Localizing and tracking the pose of robotic grippers are necessary skills for manipulation tasks. However, manipulators with imprecise kinematic models (e.g. low-cost arms) or with unknown world coordinates (e.g. poor camera-arm calibration) cannot locate the gripper with respect to the world. In these circumstances, we can leverage tactile feedback between the gripper and the environment. In this paper, we present learnable Bayes filter models that can localize robotic grippers using tactile feedback. We propose a novel observation model that conditions the tactile feedback on visual maps of the environment, along with a motion model, to recursively estimate the gripper's location. Our models are trained in simulation with self-supervision and transferred to the real world. Our method is evaluated on a tabletop localization task in which the gripper interacts with objects. We report results in simulation and on a real robot, generalizing over different sizes, shapes, and configurations of the objects.
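
The recursive estimation described in this abstract follows the generic Bayes filter pattern: a motion model predicts where the gripper may have moved, and an observation model reweights that prediction using the latest tactile reading. The sketch below is only an illustration of that pattern on a 1D grid and is not the paper's method. The hand-written `predict` and `contact_likelihood` functions stand in for the learned motion and observation models, and the `occupancy` array, the noise values, and all function names are assumptions made for this example.

```python
import numpy as np


def predict(belief, shift, noise=0.1):
    """Motion update: move the belief by `shift` cells.

    Assumed motion model: the gripper undershoots or overshoots by one
    cell with probability `noise` each. np.roll wraps around, so the
    grid is treated as cyclic for simplicity.
    """
    prior = (
        (1.0 - 2.0 * noise) * np.roll(belief, shift)
        + noise * np.roll(belief, shift - 1)
        + noise * np.roll(belief, shift + 1)
    )
    return prior / prior.sum()


def contact_likelihood(occupancy, touched, hit_prob=0.9):
    """Observation model P(z | x) for a binary contact reading.

    Cells whose map occupancy agrees with the reading get `hit_prob`,
    all other cells get 1 - hit_prob.
    """
    return np.where(occupancy == touched, hit_prob, 1.0 - hit_prob)


def update(prior, likelihood):
    """Measurement update: Bayes rule followed by normalization."""
    posterior = prior * likelihood
    return posterior / posterior.sum()


def localize(occupancy, shifts, readings):
    """Run the filter from a uniform belief over all grid cells."""
    belief = np.full(occupancy.size, 1.0 / occupancy.size)
    for shift, touched in zip(shifts, readings):
        belief = predict(belief, shift)
        belief = update(belief, contact_likelihood(occupancy, touched))
    return belief


if __name__ == "__main__":
    # Hypothetical map: True marks a cell where the gripper would touch an object.
    occupancy = np.array([0, 0, 1, 0, 0, 0, 1, 1, 0, 0], dtype=bool)
    # The gripper moves one cell to the right three times and reports
    # contact, contact, no contact.
    belief = localize(occupancy, shifts=[1, 1, 1], readings=[True, True, False])
    print("most likely cell:", int(np.argmax(belief)))
    print(np.round(belief, 3))
```

In this toy setup the only stretch of the map that matches the reading sequence without any motion error ends at cell 8, so the belief concentrates there. In the paper the observation model is instead learned and conditioned on visual maps of the environment.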
