Christopher Syben

Pattern Recognition Lab, Department of Computer Science, Friedrich-Alexander-Universität Erlangen-Nürnberg, Erlangen, Germany

Reconstruction of Voxels with Position- and Angle-Dependent Weightings

Oct 27, 2020

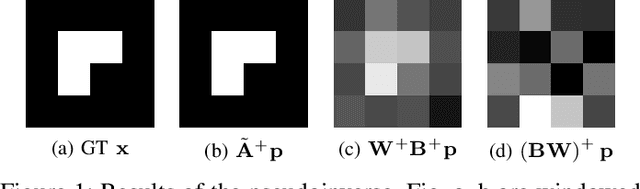

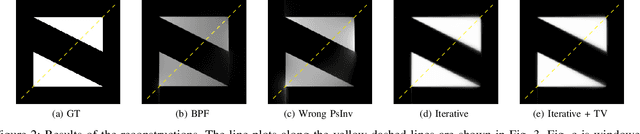

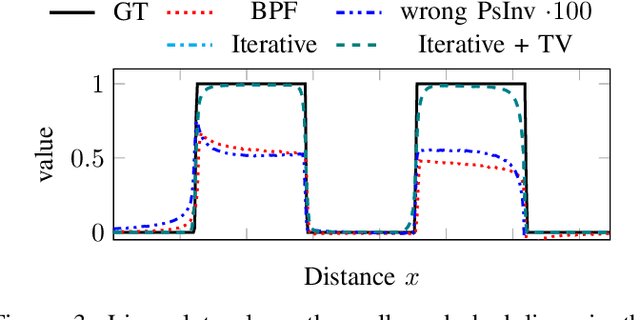

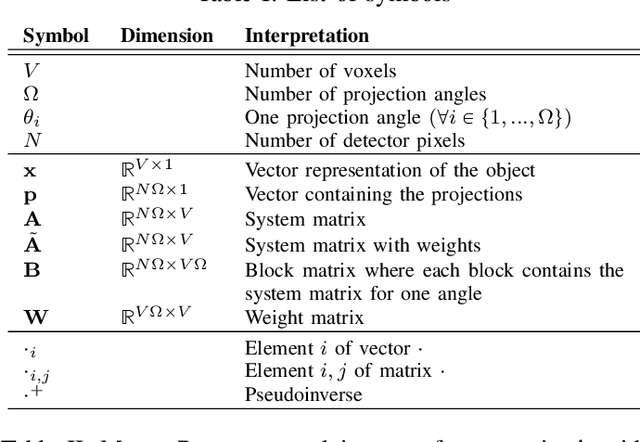

Abstract: The reconstruction problem of voxels with individual weightings can be modeled as a position- and angle-dependent function in the forward projection. This changes the system matrix and prohibits the use of standard filtered backprojection. In this work, we first formulate this reconstruction problem in terms of a system matrix and a weighting part. We compute the pseudoinverse and show that the solution is rank-deficient and hence very ill-posed. This is a fundamental limitation for reconstruction. We then derive an iterative solution and experimentally show its superiority to any closed-form solution.
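
The ingredients named in this abstract (a weighted system matrix, its pseudoinverse, and an iterative solver) can be illustrated with a minimal NumPy sketch. Everything here is an assumption made for illustration: the toy sizes, the random stand-in matrices `A` and `W`, the element-wise weighting `W * A`, and the choice of Landweber iteration are not taken from the paper.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical toy problem: 6 voxels observed by 4 rays, so the system is
# underdetermined by construction (this is not the geometry from the paper).
n_rays, n_voxels = 4, 6
A = rng.random((n_rays, n_voxels))          # stand-in system matrix
W = 0.5 + rng.random((n_rays, n_voxels))    # stand-in per-ray, per-voxel weights
A_w = W * A                                 # element-wise weighted forward model

x_true = rng.random(n_voxels)
p = A_w @ x_true                            # weighted forward projection

# Closed-form attempt: Moore-Penrose pseudoinverse (minimum-norm solution).
rank = np.linalg.matrix_rank(A_w)
x_pinv = np.linalg.pinv(A_w) @ p


def landweber(A, p, n_iter=5000):
    """Landweber iteration x <- x + step * A^T (p - A x), started at zero."""
    # A step of 1 / sigma_max^2 keeps the iteration stable.
    step = 1.0 / np.linalg.norm(A, 2) ** 2
    x = np.zeros(A.shape[1])
    for _ in range(n_iter):
        x = x + step * (A.T @ (p - A @ x))
    return x


x_iter = landweber(A_w, p)
print("rank:", rank, "of", n_voxels, "unknowns")
print("residual (pinv):     ", np.linalg.norm(A_w @ x_pinv - p))
print("residual (Landweber):", np.linalg.norm(A_w @ x_iter - p))
```

Because the rank is below the number of unknowns, the data do not determine `x_true` uniquely; both solvers only return one solution consistent with `p`. In this noise-free toy case, Landweber started at zero approaches the same minimum-norm solution as the pseudoinverse, so the sketch does not reproduce the comparison reported in the abstract.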

Cephalogram Synthesis and Landmark Detection in Dental Cone-Beam CT Systems

Sep 09, 2020

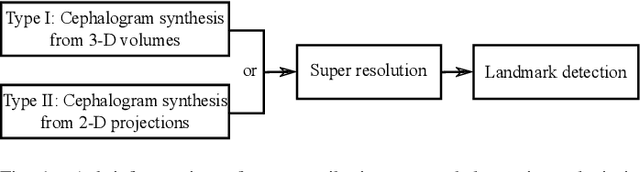

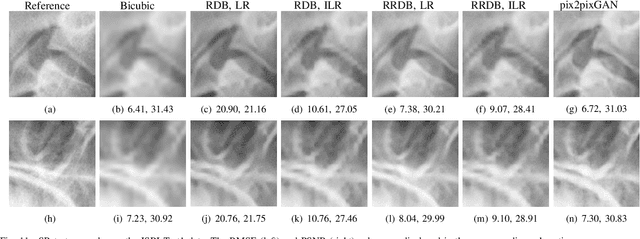

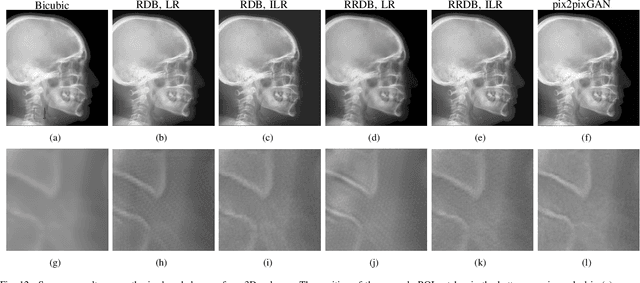

Abstract: Due to the lack of standardized 3D cephalometric analytic methodology, 2D cephalograms synthesized from 3D cone-beam computed tomography (CBCT) volumes are widely used for cephalometric analysis in dental CBCT systems. However, compared with conventional X-ray film based cephalograms, such synthetic cephalograms lack image contrast and resolution. In addition, the radiation dose during the scan for 3D reconstruction causes potential health risks. In this work, we propose a sigmoid-based intensity transform that uses the nonlinear optical property of X-ray films to increase image contrast of synthetic cephalograms. To improve image resolution, super resolution deep learning techniques are investigated. To reduce dose, the pixel-to-pixel generative adversarial network (pix2pixGAN) is proposed for 2D cephalogram synthesis directly from two CBCT projections. For landmark detection in the synthetic cephalograms, an efficient automatic landmark detection method using the combination of LeNet-5 and ResNet50 is proposed. Our experiments demonstrate the efficacy of pix2pixGAN in 2D cephalogram synthesis, achieving an average peak signal-to-noise ratio (PSNR) value of 33.8 with reference to the cephalograms synthesized from 3D CBCT volumes. Pix2pixGAN also achieves the best performance in super resolution, achieving an average PSNR value of 32.5 without the introduction of checkerboard or jagging artifacts. Our proposed automatic landmark detection method achieves an 86.7% successful detection rate in the 2 mm clinically acceptable range on the ISBI Test1 data, which is comparable to the state-of-the-art methods. The method trained on conventional cephalograms can be directly applied to landmark detection in the synthetic cephalograms, achieving 93.0% and 80.7% successful detection rates in the 4 mm precision range for synthetic cephalograms from 3D volumes and 2D projections, respectively.
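
The two evaluation metrics quoted in this abstract, PSNR and the successful detection rate within a distance threshold, can be sketched as follows. This is a minimal illustration using the standard definitions; the `data_range` default, the inclusive `<=` threshold, and the function names are assumptions, not details confirmed by the paper.

```python
import numpy as np


def psnr(reference, estimate, data_range=1.0):
    """Peak signal-to-noise ratio in dB: 10 * log10(data_range^2 / MSE)."""
    reference = np.asarray(reference, dtype=float)
    estimate = np.asarray(estimate, dtype=float)
    mse = np.mean((reference - estimate) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(data_range ** 2 / mse))


def successful_detection_rate(pred_mm, true_mm, radius_mm=2.0):
    """Fraction of landmarks whose Euclidean error is within radius_mm."""
    pred_mm = np.asarray(pred_mm, dtype=float)
    true_mm = np.asarray(true_mm, dtype=float)
    errors = np.linalg.norm(pred_mm - true_mm, axis=1)
    return float(np.mean(errors <= radius_mm))


# Hypothetical landmark coordinates in millimetres.
truth = np.zeros((3, 2))
pred = np.array([[0.0, 0.0], [3.0, 0.0], [0.0, 1.0]])
print(successful_detection_rate(pred, truth, radius_mm=2.0))
print(successful_detection_rate(pred, truth, radius_mm=4.0))
```

Widening the radius from 2 mm to 4 mm can only keep or raise the rate, which is why results at the two precision ranges are not directly comparable.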

Simultaneous Estimation of X-ray Back-Scatter and Forward-Scatter using Multi-Task Learning

Jul 08, 2020

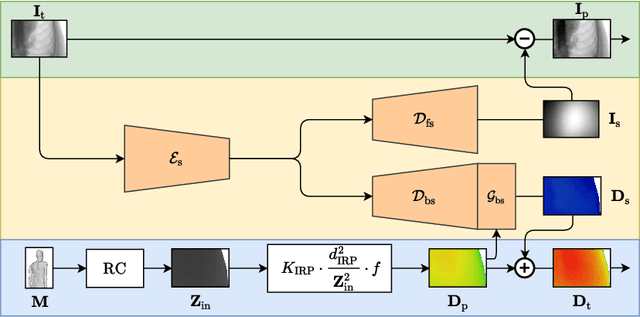

Abstract: Scattered radiation is a major concern impacting X-ray image-guided procedures in two ways. First, back-scatter significantly contributes to patient (skin) dose during complicated interventions. Second, forward-scattered radiation reduces contrast in projection images and introduces artifacts in 3-D reconstructions. While conventionally employed anti-scatter grids improve image quality by blocking X-rays, the additional attenuation due to the anti-scatter grid at the detector needs to be compensated for by a higher patient entrance dose. This also increases the room dose affecting the staff caring for the patient. For skin dose quantification, back-scatter is usually accounted for by applying pre-determined scalar back-scatter factors or linear point spread functions to a primary kerma forward projection onto a patient surface point. However, as patients come in different shapes, the generalization of conventional methods is limited. Here, we propose a novel approach combining conventional techniques with learning-based methods to simultaneously estimate the forward-scatter reaching the detector as well as the back-scatter affecting the patient skin dose. Knowing the forward-scatter, we can correct X-ray projections, while a good estimate of the back-scatter component facilitates an improved skin dose assessment. To simultaneously estimate forward-scatter as well as back-scatter, we propose a multi-task approach for joint back- and forward-scatter estimation by combining X-ray physics with neural networks. We show that, in theory, highly accurate scatter estimation in both cases is possible. In addition, we identify research directions for our multi-task framework and learning-based scatter estimation in general.

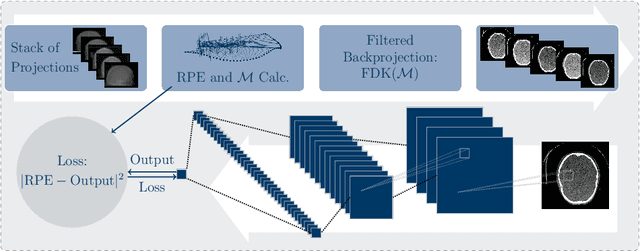

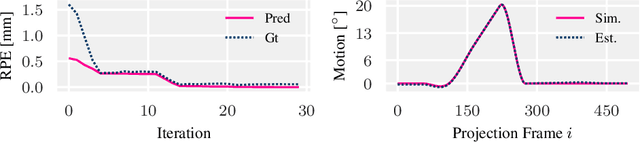

Deep autofocus with cone-beam CT consistency constraint

Dec 04, 2019

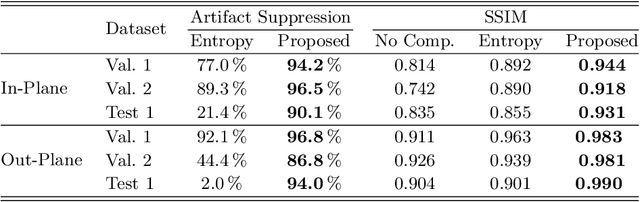

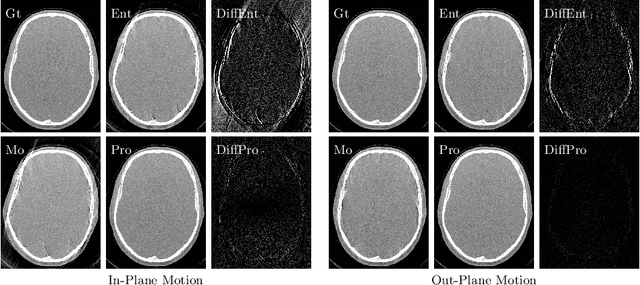

Abstract: High-quality reconstruction with interventional C-arm cone-beam computed tomography (CBCT) requires exact geometry information. If the geometry information is corrupted, e.g., by unexpected patient or system movement, the measured signal is misplaced in the backprojection operation. With prolonged acquisition times of interventional C-arm CBCT, the likelihood of rigid patient motion increases. To adapt the backprojection operation accordingly, a motion estimation strategy is necessary. Recently, a novel learning-based approach was proposed, capable of compensating motions within the acquisition plane. We extend this method by a CBCT consistency constraint, which was proven to be efficient for motions perpendicular to the acquisition plane. By the synergistic combination of these two measures, in-plane and out-of-plane motion is well detectable, achieving an average artifact suppression of 93%. This outperforms the entropy-based state-of-the-art autofocus measure, which achieves an average artifact suppression of 54%.
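
The entropy-based autofocus measure used as the baseline here can be sketched, in one common form, as the Shannon entropy of the grey-value histogram of a reconstructed slice. The bin count, the function name, and the toy images are assumptions made for illustration; the abstract does not specify the exact formulation.

```python
import numpy as np


def histogram_entropy(image, bins=64):
    """Shannon entropy of the grey-value histogram (lower = more compact histogram)."""
    hist, _ = np.histogram(np.asarray(image, dtype=float), bins=bins)
    prob = hist[hist > 0] / hist.sum()
    return float(-np.sum(prob * np.log(prob)))


# Hypothetical test object: a sharp square, and a horizontally smeared copy
# standing in for a slice degraded by motion.
sharp = np.zeros((32, 32))
sharp[8:24, 8:24] = 1.0
smeared = (sharp + np.roll(sharp, 1, axis=1) + np.roll(sharp, -1, axis=1)) / 3.0

print(histogram_entropy(sharp), histogram_entropy(smeared))
```

An autofocus method of this kind would search over candidate motion parameters and keep those whose reconstruction minimizes the measure; the smeared image spreads grey values over more histogram bins and therefore scores higher.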

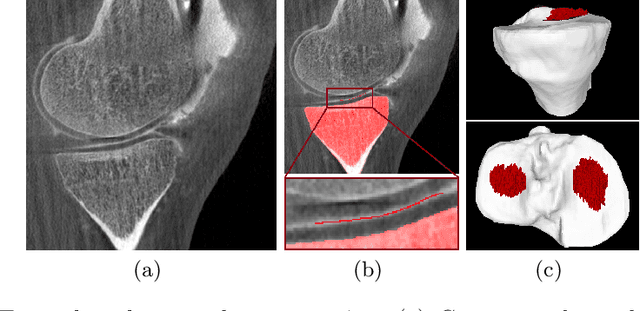

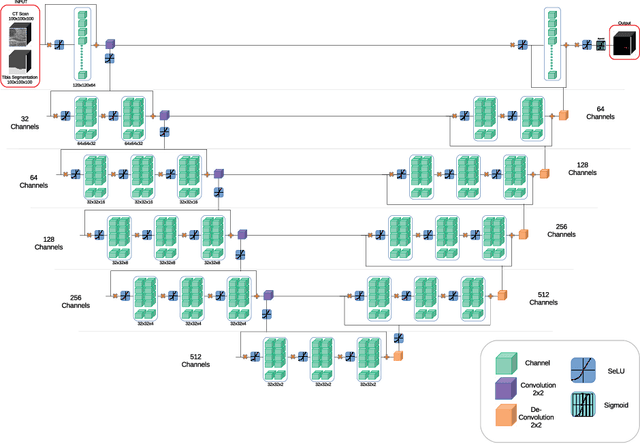

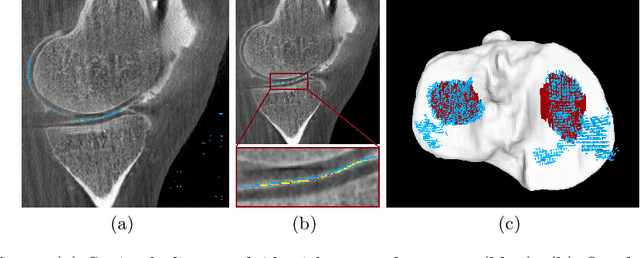

Multi-Channel Volumetric Neural Network for Knee Cartilage Segmentation in Cone-beam CT

Dec 03, 2019

Abstract: Analyzing knee cartilage thickness and strain under load can help to further the understanding of the effects of diseases like osteoarthritis. A precise segmentation of the cartilage is a necessary prerequisite for this analysis. This segmentation task has mainly been addressed in Magnetic Resonance Imaging, and was rarely investigated on contrast-enhanced Computed Tomography, where contrast agent visualizes the border between femoral and tibial cartilage. To overcome the main drawback of manual segmentation, namely its high time investment, we propose to use a 3D Convolutional Neural Network for this task. The presented architecture consists of a V-Net with SELU activation and a Tversky loss function. Due to the high imbalance between very few cartilage pixels and many background pixels, a high false positive rate is to be expected. To reduce this rate, the two largest segmented point clouds are extracted using a connected component analysis, since they most likely represent the medial and lateral tibial cartilage surfaces. The resulting segmentations are compared to manual segmentations and achieve on average a recall of 0.69, which confirms the feasibility of this approach.
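
The Tversky loss and the recall metric mentioned in this abstract can be written down in a few lines. This is a minimal NumPy sketch of the standard Tversky index, TP / (TP + alpha * FP + beta * FN); the values of `alpha` and `beta` and the smoothing term `eps` are assumptions, since the abstract does not state the settings used.

```python
import numpy as np


def tversky_loss(pred, target, alpha=0.5, beta=0.5, eps=1e-7):
    """1 - Tversky index; alpha weights false positives, beta false negatives."""
    pred = np.asarray(pred, dtype=float).ravel()
    target = np.asarray(target, dtype=float).ravel()
    tp = np.sum(pred * target)
    fp = np.sum(pred * (1.0 - target))
    fn = np.sum((1.0 - pred) * target)
    return float(1.0 - (tp + eps) / (tp + alpha * fp + beta * fn + eps))


def recall(pred_mask, target_mask):
    """TP / (TP + FN) for binary masks."""
    pred_mask = np.asarray(pred_mask, dtype=bool)
    target_mask = np.asarray(target_mask, dtype=bool)
    tp = np.sum(pred_mask & target_mask)
    fn = np.sum(~pred_mask & target_mask)
    return float(tp / (tp + fn))


# Hypothetical four-voxel example: one hit, one false alarm, one miss.
target = np.array([1, 1, 0, 0])
pred = np.array([1, 0, 1, 0])
print(tversky_loss(pred, target), recall(pred, target))
```

With `alpha = beta = 0.5` the index reduces to the Dice coefficient. Choosing `beta > alpha` penalizes missed foreground more heavily, which is the usual motivation for this loss under strong class imbalance.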

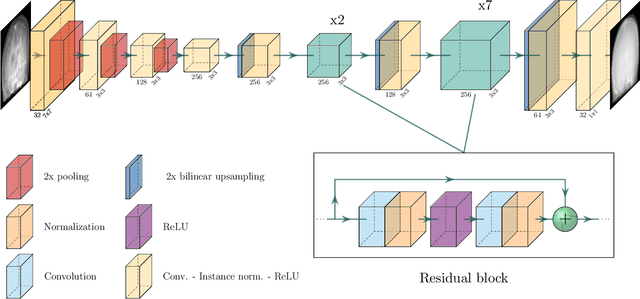

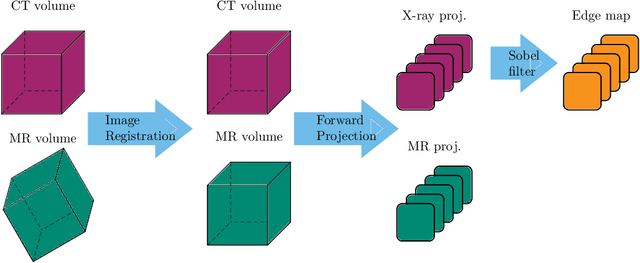

Projection-to-Projection Translation for Hybrid X-ray and Magnetic Resonance Imaging

Nov 19, 2019

Abstract: Hybrid X-ray and magnetic resonance (MR) imaging holds great potential in interventional medical imaging applications due to the broad variety of contrast of MRI combined with fast imaging of X-ray-based modalities. To fully utilize the potential of the vast amount of existing image enhancement techniques, the corresponding information from both modalities must be present in the same domain. For image-guided interventional procedures, X-ray fluoroscopy has proven to be the modality of choice. Synthesizing one modality from another in this case is an ill-posed problem due to ambiguous signal and overlapping structures in projective geometry. To take on these challenges, we present a learning-based solution to MR-to-X-ray projection-to-projection translation. We propose an image generator network that focuses on high representation capacity in higher resolution layers to allow for accurate synthesis of fine details in the projection images. Additionally, a weighting scheme in the loss computation that favors high-frequency structures is proposed to focus on the important details and contours in projection imaging. The proposed extensions prove valuable in generating X-ray projection images with natural appearance. Our approach achieves a deviation from the ground truth of only 6% and a structural similarity measure of $0.913\,\pm\,0.005$. In particular, the high-frequency weighting assists in generating projection images with sharp appearance and reduces erroneously synthesized fine details.

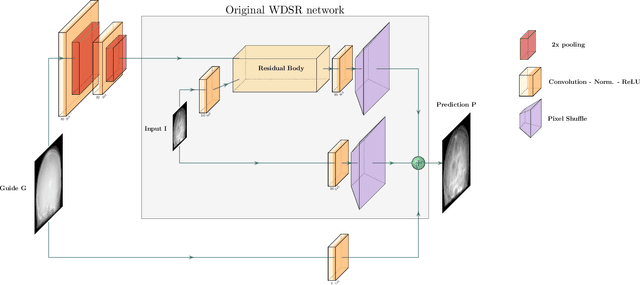

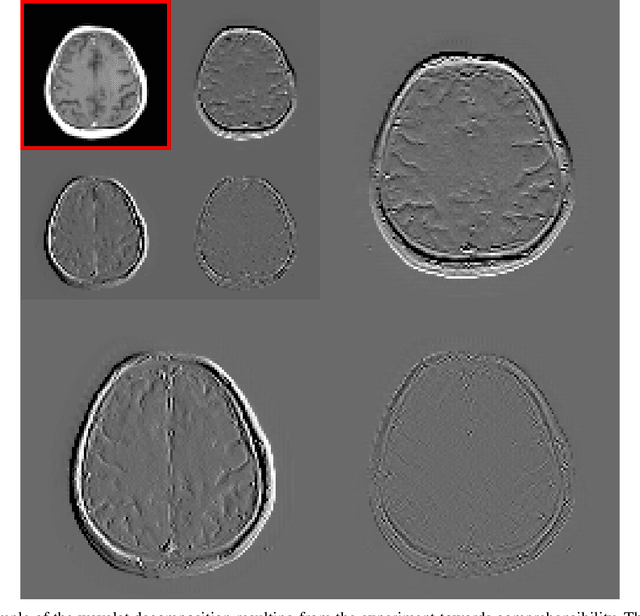

Multi-modal Deep Guided Filtering for Comprehensible Medical Image Processing

Nov 18, 2019

Abstract: Deep learning-based image processing is capable of creating highly appealing results. However, it is still widely considered a "black-box" transformation. In medical imaging, this lack of comprehensibility of the results is a sensitive issue. The integration of known operators into the deep learning environment has proven to be advantageous for the comprehensibility and reliability of the computations. Consequently, we propose the use of the locally linear guided filter in combination with a learned guidance map for general purpose medical image processing. The output images are only processed by the guided filter while the guidance map can be trained to be task-optimal in an end-to-end fashion. We investigate the performance based on two popular tasks: image super resolution and denoising. The evaluation is conducted based on pairs of multi-modal magnetic resonance imaging and cross-modal computed tomography and magnetic resonance imaging datasets. For both tasks, the proposed approach is on par with state-of-the-art approaches. Additionally, we can show that the input image's content is almost unchanged after the processing, which is not the case for conventional deep learning approaches. In addition, the proposed pipeline offers increased robustness against degraded input as well as adversarial attacks.
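
The locally linear guided filter at the core of this pipeline can be sketched in plain NumPy. The sketch follows the standard guided filter formulation (output `q = a * guide + b` with per-window coefficients); the window radius `r`, the regularizer `eps`, and the helper `box_mean` are assumptions, and the guidance map is simply passed in as an array, whereas the abstract describes it as learned by a network.

```python
import numpy as np


def box_mean(img, r):
    """Mean over a (2r+1) x (2r+1) window, using edge padding at the borders."""
    padded = np.pad(img, r, mode="edge")
    h, w = img.shape
    out = np.zeros((h, w), dtype=float)
    for dy in range(2 * r + 1):
        for dx in range(2 * r + 1):
            out += padded[dy:dy + h, dx:dx + w]
    return out / (2 * r + 1) ** 2


def guided_filter(guide, src, r=2, eps=1e-3):
    """Filter src so that the output is locally a linear function of guide."""
    guide = np.asarray(guide, dtype=float)
    src = np.asarray(src, dtype=float)
    mean_g = box_mean(guide, r)
    mean_s = box_mean(src, r)
    cov_gs = box_mean(guide * src, r) - mean_g * mean_s
    var_g = box_mean(guide * guide, r) - mean_g ** 2
    a = cov_gs / (var_g + eps)          # local slope
    b = mean_s - a * mean_g             # local offset
    return box_mean(a, r) * guide + box_mean(b, r)


rng = np.random.default_rng(0)
image = rng.random((16, 16))            # hypothetical input image
guidance = rng.random((16, 16))         # stand-in for a learned guidance map
filtered = guided_filter(guidance, image)
print(filtered.shape)
```

Since the only operation applied to the image is this fixed, closed-form filter, the influence of any learned component is confined to the guidance map, which is the comprehensibility argument made in the abstract.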

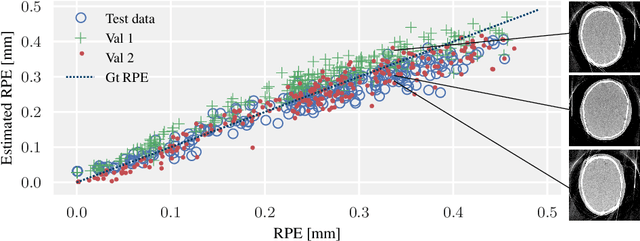

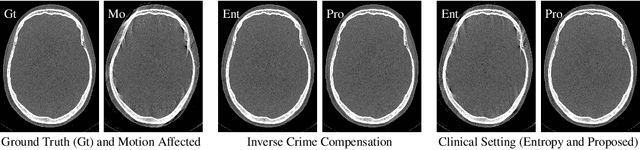

Image Quality Assessment for Rigid Motion Compensation

Oct 09, 2019

Abstract: Diagnostic stroke imaging with C-arm cone-beam computed tomography (CBCT) enables reduction of time-to-therapy for endovascular procedures. However, the prolonged acquisition time compared to helical CT increases the likelihood of rigid patient motion. Rigid motion corrupts the geometry alignment assumed during reconstruction, resulting in image blurring or streaking artifacts. To reestablish the geometry, we estimate the motion trajectory by an autofocus method guided by a neural network, which was trained to regress the reprojection error, based on the image information of a reconstructed slice. The network was trained with CBCT scans from 19 patients and evaluated using an additional test patient. It adapts well to unseen motion amplitudes and achieves superior results in a motion estimation benchmark compared to the commonly used entropy-based method.

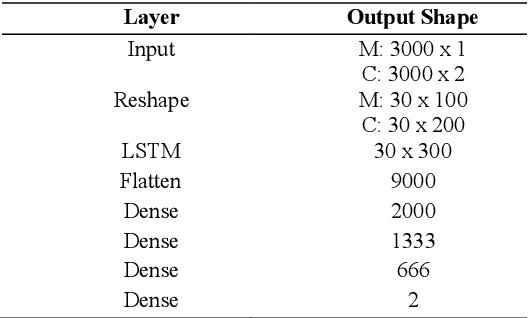

Magnetic Resonance Fingerprinting Reconstruction Using Recurrent Neural Networks

Sep 13, 2019

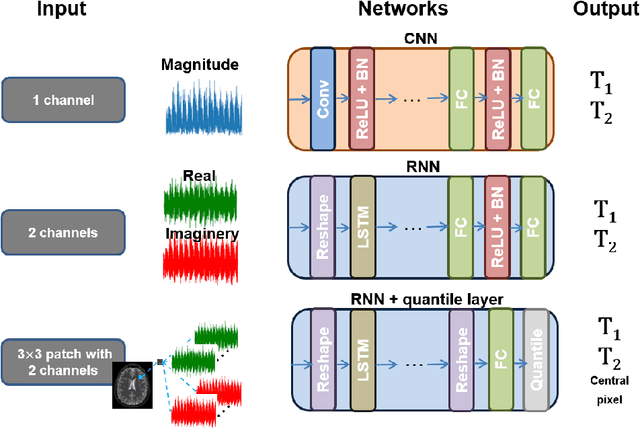

Abstract: Magnetic Resonance Fingerprinting (MRF) is an imaging technique acquiring unique time signals for different tissues. Although the acquisition is highly accelerated, the reconstruction time remains a problem, as the state-of-the-art template matching compares every signal with a set of possible signals. To overcome this limitation, deep-learning-based approaches, e.g., Convolutional Neural Networks (CNNs), have been proposed. In this work, we investigate the applicability of Recurrent Neural Networks (RNNs) for this reconstruction problem, as the signals are correlated in time. Compared to previous methods based on CNNs, RNN models yield significantly improved results using in-vivo data.

* Accepted and presented at the German Medical Data Sciences (GMDS) conference 2019 (Dortmund, Germany)

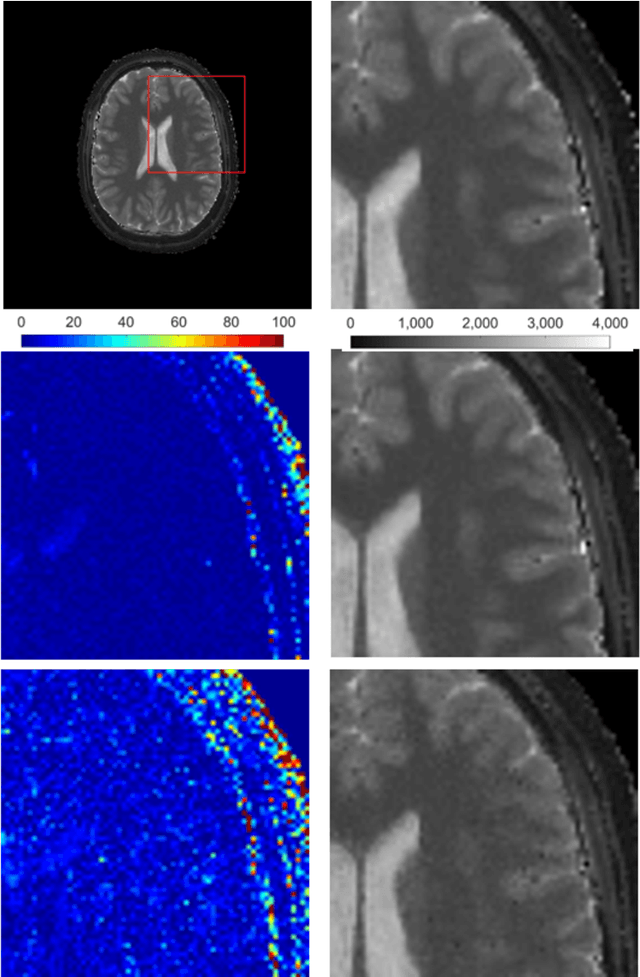

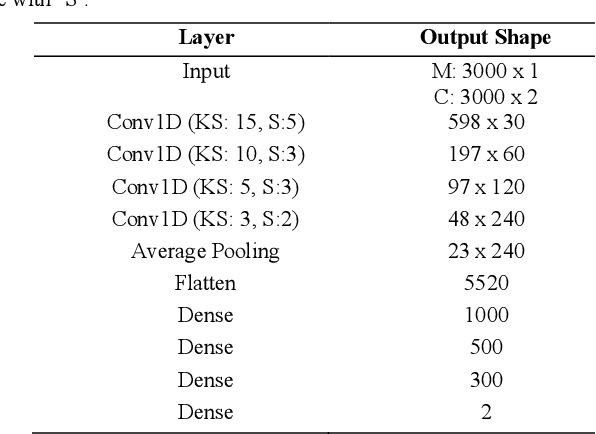

RinQ Fingerprinting: Recurrence-informed Quantile Networks for Magnetic Resonance Fingerprinting

Jul 21, 2019

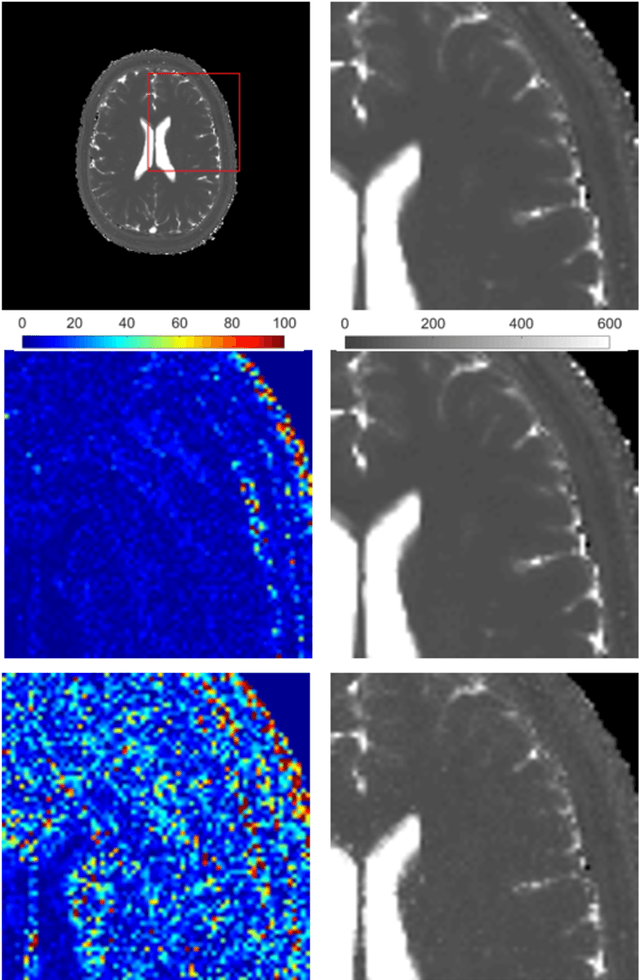

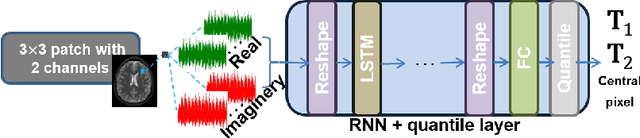

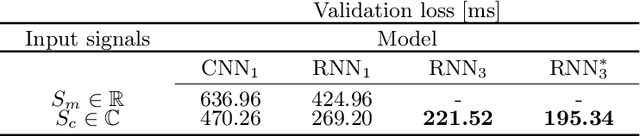

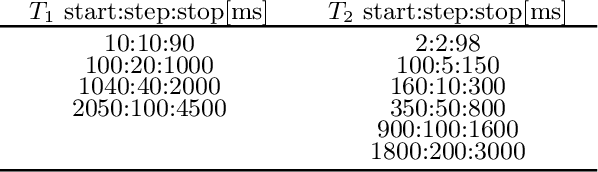

Abstract: Recently, Magnetic Resonance Fingerprinting (MRF) was proposed as a quantitative imaging technique for the simultaneous acquisition of tissue parameters such as relaxation times $T_1$ and $T_2$. Although the acquisition is highly accelerated, the state-of-the-art reconstruction suffers from long computation times: template matching methods are used to find the most similar signal to the measured one by comparing it to pre-simulated signals of possible parameter combinations in a discretized dictionary. Deep learning approaches can overcome this limitation by providing the direct mapping from the measured signal to the underlying parameters by one forward pass through a network. In this work, we propose a Recurrent Neural Network (RNN) architecture in combination with a novel quantile layer. RNNs are well suited for the processing of time-dependent signals, and the quantile layer helps to overcome the noisy outliers by considering the spatial neighbors of the signal. We evaluate our approach using in-vivo data from multiple brain slices and several volunteers, running various experiments. We show that the RNN approach with small patches of complex-valued input signals in combination with a quantile layer outperforms other architectures, e.g., previously proposed CNNs for the MRF reconstruction, reducing the error in $T_1$ and $T_2$ by more than 80%.
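
The template matching baseline described in this abstract, comparing a measured signal against every entry of a discretized dictionary, can be sketched as a normalized inner-product search. The toy dictionary of mono-exponential decays, the parameter grid `T_grid`, and the function name `match` are hypothetical stand-ins; real MRF dictionaries are simulated from the acquisition sequence and are far larger.

```python
import numpy as np

# Hypothetical toy dictionary: one decay curve exp(-t / T) per grid value T.
t = np.linspace(0.01, 3.0, 200)
T_grid = np.linspace(0.1, 2.0, 20)
dictionary = np.exp(-t[None, :] / T_grid[:, None])
dictionary /= np.linalg.norm(dictionary, axis=1, keepdims=True)


def match(signal, dictionary):
    """Index of the dictionary entry with the largest normalized correlation."""
    signal = signal / np.linalg.norm(signal)
    scores = np.abs(dictionary.conj() @ signal)   # one comparison per entry
    return int(np.argmax(scores))


# A measured signal with arbitrary scaling, generated from grid entry 7.
measured = 3.0 * np.exp(-t / T_grid[7])
idx = match(measured, dictionary)
print(idx, T_grid[idx])
```

The cost of `match` grows linearly with the number of dictionary entries and has to be paid for every voxel, and the result can never be finer than the grid. A network that maps the signal directly to the parameters replaces this search with a single forward pass.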
