C. Lataniotis

Stochastic spectral embedding

Apr 09, 2020

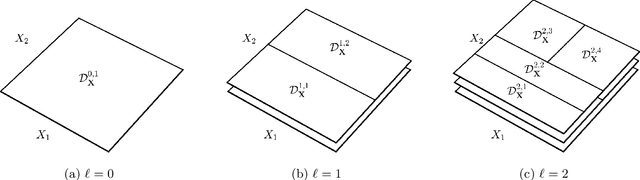

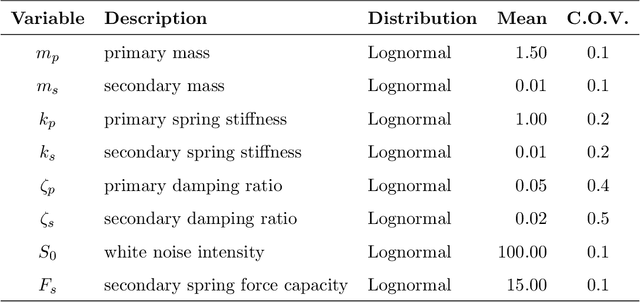

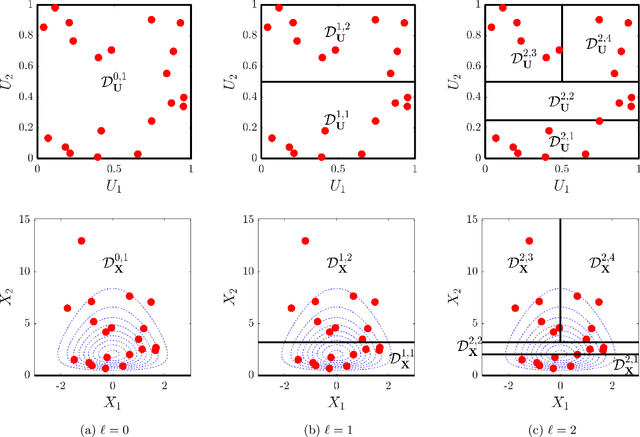

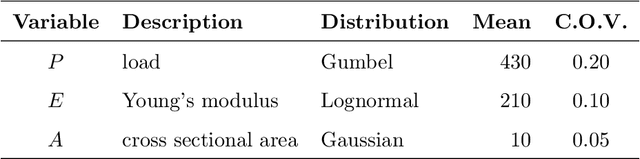

Abstract: Constructing approximations that accurately mimic the behaviour of complex models at reduced computational cost is an important aspect of uncertainty quantification. Despite their flexibility and efficiency, classical surrogate models such as Kriging or polynomial chaos expansions tend to struggle with highly non-linear, localized or non-stationary computational models. We propose a novel sequential adaptive surrogate modelling method based on recursively embedding local spectral expansions. It is achieved by disjoint recursive partitioning of the input domain, which consists in sequentially splitting the domain into smaller subdomains and constructing a simpler local spectral expansion in each, exploiting the trade-off between complexity and locality. The resulting expansion, which we refer to as "stochastic spectral embedding" (SSE), is a piecewise continuous approximation of the model response that shows promising approximation capabilities and good scaling with both the problem dimension and the size of the training set. Finally, we show that the method compares favourably against state-of-the-art sparse polynomial chaos expansions on a set of models of varying complexity and input dimension.
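
The recursive partitioning described in the abstract can be illustrated with a minimal one-dimensional sketch. This is not the authors' implementation: the midpoint splitting rule, the least-squares Legendre fit, the fixed depth limit, and every function and parameter name (`build_sse`, `predict_sse`, `degree`, `max_depth`, `min_points`) are assumptions made for illustration. Each subdomain receives a low-degree expansion of the residual left over by its parent subdomains, and a prediction sums the expansions of all subdomains that contain the query point.

```python
import numpy as np
from numpy.polynomial import legendre


def _to_unit(x, lo, hi):
    """Map points from [lo, hi] to the Legendre reference interval [-1, 1]."""
    return 2.0 * (x - lo) / (hi - lo) - 1.0


def build_sse(x, y, lo, hi, degree=2, max_depth=4, min_points=10):
    """Fit nested local Legendre expansions to successive residuals.

    Returns a list of terms (lo, hi, closed, coef). `closed` marks
    subdomains whose right endpoint belongs to them, so that the
    subdomains at each level form a disjoint partition.
    """
    terms = []
    resid = np.asarray(y, dtype=float).copy()

    def recurse(idx, lo, hi, closed, depth):
        if len(idx) < min_points:
            return  # too few points for a stable local fit
        # Local spectral expansion of the current residual.
        coef = legendre.legfit(_to_unit(x[idx], lo, hi), resid[idx], degree)
        terms.append((lo, hi, closed, coef))
        resid[idx] -= legendre.legval(_to_unit(x[idx], lo, hi), coef)
        if depth == max_depth:
            return
        # Assumed splitting rule: halve the subdomain at its midpoint.
        mid = 0.5 * (lo + hi)
        recurse(idx[x[idx] < mid], lo, mid, False, depth + 1)
        recurse(idx[x[idx] >= mid], mid, hi, closed, depth + 1)

    recurse(np.arange(len(x)), lo, hi, True, 0)
    return terms


def predict_sse(terms, x):
    """Sum the local expansions of every subdomain containing each point."""
    x = np.asarray(x, dtype=float)
    out = np.zeros_like(x)
    for lo, hi, closed, coef in terms:
        upper = (x <= hi) if closed else (x < hi)
        mask = (x >= lo) & upper
        out[mask] += legendre.legval(_to_unit(x[mask], lo, hi), coef)
    return out
```

Because every local fit is a low-degree least-squares problem on a subset of the data, the cost per subdomain stays small, which is one plausible reading of the complexity versus locality trade-off mentioned above. The prediction is generally discontinuous at subdomain boundaries.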

Extending classical surrogate modelling to ultrahigh dimensional problems through supervised dimensionality reduction: a data-driven approach

Dec 15, 2018

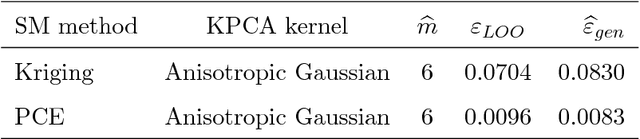

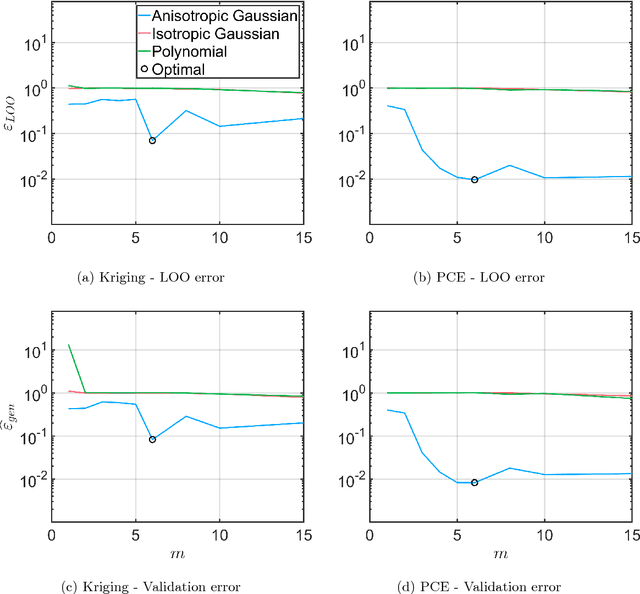

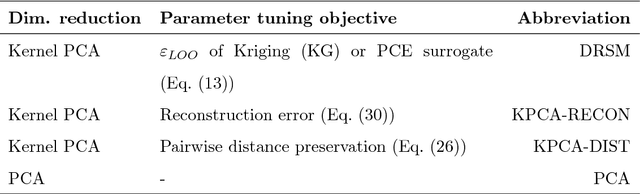

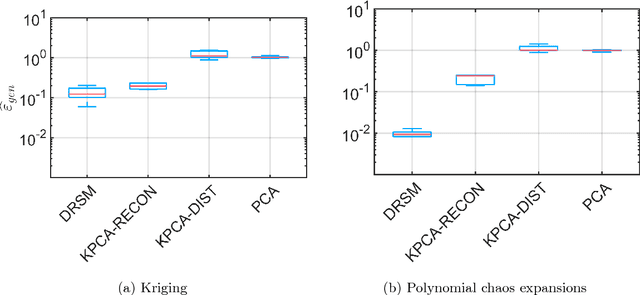

Abstract: Thanks to their versatility, ease of deployment and high performance, surrogate models have become staple tools in the arsenal of uncertainty quantification (UQ). From local interpolants to global spectral decompositions, surrogates are characterised by their ability to efficiently emulate complex computational models based on a small set of model runs used for training. An inherent limitation of many surrogate models is their susceptibility to the curse of dimensionality, which traditionally limits their applicability to a maximum of $\mathcal{O}(10^2)$ input dimensions. We present a novel approach to high-dimensional surrogate modelling that is agnostic to the computational model, the dimensionality reduction technique and the surrogate model (black box), and that can enable the solution of high-dimensional problems (i.e. up to $\mathcal{O}(10^4)$ input dimensions). After introducing the general algorithm, we demonstrate its performance by combining Kriging and polynomial chaos expansion surrogates with kernel principal component analysis. In particular, we compare the generalisation performance of the resulting surrogates with that of the classical sequential application of dimensionality reduction followed by surrogate modelling on several benchmark applications, comprising an analytical function and two engineering applications of increasing dimensionality and complexity.
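
The contrast with the sequential approach can be sketched as follows. The sketch assumes that "supervised" means the compression is tuned against the surrogate's validation error rather than chosen beforehand; that reading is an interpretation of the title, not a statement from the abstract. It also swaps in simpler stand-ins: linear PCA instead of kernel PCA, and a quadratic least-squares fit instead of Kriging or polynomial chaos expansions. All function names are hypothetical.

```python
import numpy as np


def pca_fit(X, d):
    """Return the mean and the first d principal directions of X."""
    mean = X.mean(axis=0)
    _, _, vt = np.linalg.svd(X - mean, full_matrices=False)
    return mean, vt[:d].T  # projection matrix of shape (D, d)


def pca_transform(X, mean, W):
    """Project X onto the retained principal directions."""
    return (X - mean) @ W


def quad_features(Z):
    """Constant, linear and pure quadratic terms (no cross terms)."""
    return np.hstack([np.ones((Z.shape[0], 1)), Z, Z ** 2])


def fit_surrogate(Z, y):
    """Least-squares fit of the quadratic stand-in surrogate."""
    coef, *_ = np.linalg.lstsq(quad_features(Z), y, rcond=None)
    return coef


def predict_surrogate(coef, Z):
    return quad_features(Z) @ coef


def select_dimension(X_tr, y_tr, X_val, y_val, candidates):
    """Pick the reduced dimension that minimises the surrogate's
    validation error, so the compression is chosen with the model
    response in the loop instead of before the surrogate is built."""
    best = None
    for d in candidates:
        mean, W = pca_fit(X_tr, d)
        coef = fit_surrogate(pca_transform(X_tr, mean, W), y_tr)
        pred = predict_surrogate(coef, pca_transform(X_val, mean, W))
        err = float(np.mean((pred - y_val) ** 2))
        if best is None or err < best[0]:
            best = (err, d, mean, W, coef)
    return best  # (validation MSE, d, PCA mean, projection, coefficients)
```

In a sequential workflow the reduced dimension would be fixed from the inputs alone, for example by an explained-variance threshold, and the surrogate would be trained afterwards. Here the same two building blocks are reused, but the selection criterion is the surrogate's own error estimate.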
