Ava Soleimany

Deep Evidential Regression

Oct 07, 2019

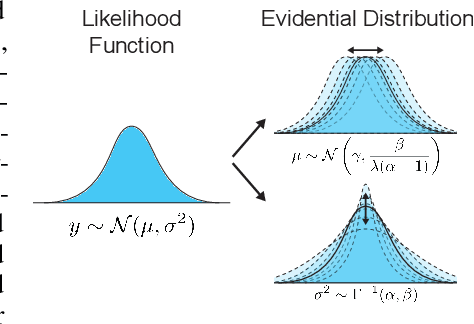

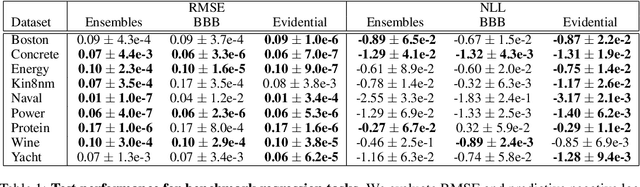

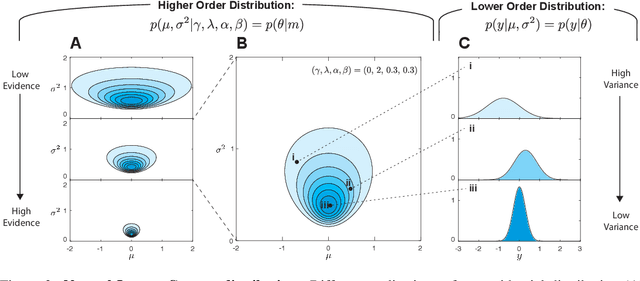

Abstract: Deterministic neural networks (NNs) are increasingly being deployed in safety-critical domains, where calibrated, robust, and efficient measures of uncertainty are crucial. While it is possible to train regression networks to output the parameters of a probability distribution by maximizing a Gaussian likelihood function, the resulting model remains oblivious to the underlying confidence of its predictions. In this paper, we propose a novel method for training deterministic NNs to estimate not only the desired target but also the associated evidence in support of that target. We accomplish this by placing evidential priors over our original Gaussian likelihood function and training our NN to infer the hyperparameters of our evidential distribution. We impose priors during training such that the model is penalized when its predicted evidence is not aligned with the correct output. Thus the model estimates not only the probabilistic mean and variance of our target but also the underlying uncertainty associated with each of those parameters. We observe that our evidential regression method learns well-calibrated measures of uncertainty on various benchmarks, scales to complex computer vision tasks, and is robust to adversarial input perturbations.
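To make the idea of "inferring the hyperparameters of an evidential distribution" concrete, here is a minimal sketch. The abstract does not name the prior, so this assumes a Normal-Inverse-Gamma prior, the conjugate prior of a Gaussian with unknown mean and variance. The function name `nig_uncertainty` and the parameter names `gamma`, `nu`, `alpha`, `beta` are illustrative assumptions, and the inputs stand in for the four values a network would output per target.

```python
def nig_uncertainty(gamma, nu, alpha, beta):
    """Summarize a Normal-Inverse-Gamma(gamma, nu, alpha, beta) distribution.

    Assumed model (not stated in the abstract):
        sigma^2 ~ InverseGamma(alpha, beta)
        mu | sigma^2 ~ Normal(gamma, sigma^2 / nu)
    """
    if nu <= 0 or beta <= 0 or alpha <= 1:
        # alpha > 1 is needed for the expectations below to be finite.
        raise ValueError("require nu > 0, beta > 0, alpha > 1")

    prediction = gamma                      # E[mu]: the point estimate
    aleatoric = beta / (alpha - 1)          # E[sigma^2]: expected data noise
    epistemic = beta / (nu * (alpha - 1))   # Var[mu]: uncertainty in the mean
    return prediction, aleatoric, epistemic


# Hypothetical hyperparameters standing in for one network output.
print(nig_uncertainty(gamma=1.0, nu=2.0, alpha=3.0, beta=4.0))
```

Under this assumption, a larger `nu` (more evidence for the mean) shrinks the epistemic term while leaving the aleatoric term unchanged, which is one way to read the abstract's distinction between the predicted variance and the uncertainty about that prediction.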

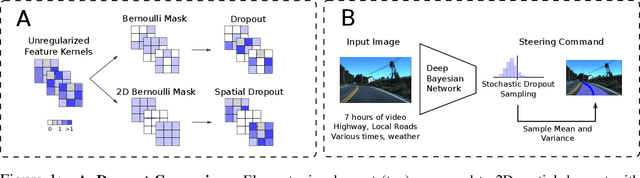

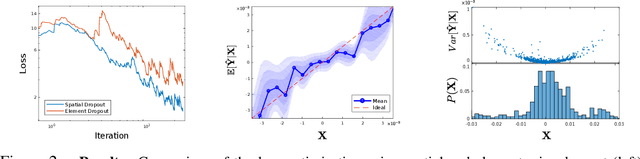

Spatial Uncertainty Sampling for End-to-End Control

May 13, 2018

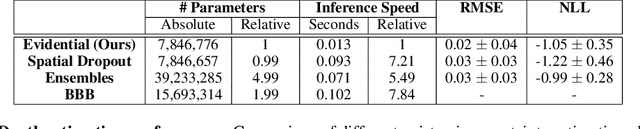

Abstract: End-to-end trained neural networks (NNs) are a compelling approach to autonomous vehicle control because of their ability to learn complex tasks without manual engineering of rule-based decisions. However, challenging road conditions, ambiguous navigation situations, and safety considerations require reliable uncertainty estimation for the eventual adoption of full-scale autonomous vehicles. Bayesian deep learning approaches provide a way to estimate uncertainty by approximating the posterior distribution of weights given a set of training data. Dropout training in deep NNs approximates Bayesian inference in a deep Gaussian process and can thus be used to estimate model uncertainty. In this paper, we propose a Bayesian NN for end-to-end control that estimates uncertainty by exploiting feature map correlation during training. This approach achieves better model fits and tighter uncertainty estimates than traditional element-wise dropout. We evaluate our algorithms on a challenging dataset collected over many different road types, times of day, and weather conditions, and demonstrate how uncertainties can be used in conjunction with a human controller in a parallel autonomous setting.
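The contrast with element-wise dropout can be illustrated with a small sketch. The abstract does not spell out the mechanism, so this assumes spatial dropout (dropping whole feature maps rather than individual activations) combined with repeated stochastic forward passes to estimate uncertainty. The names `spatial_dropout`, `mc_dropout_predict`, and `forward` are hypothetical, and this is not the authors' implementation.

```python
import random
import statistics


def spatial_dropout(feature_maps, rate, rng):
    """Drop entire feature maps (channels) with probability `rate`.

    feature_maps: list of channels, each a 2D list of floats.
    Kept channels are rescaled by 1 / (1 - rate) so the expected
    activation is unchanged.
    """
    if not 0.0 <= rate < 1.0:
        raise ValueError("rate must be in [0, 1)")
    scale = 1.0 / (1.0 - rate)
    dropped = []
    for channel in feature_maps:
        # One draw per channel: every value in the map shares the same mask.
        factor = scale if rng.random() >= rate else 0.0
        dropped.append([[value * factor for value in row] for row in channel])
    return dropped


def mc_dropout_predict(forward, feature_maps, rate, n_samples, seed=0):
    """Run `forward` on several dropout samples; return (mean, variance).

    `forward` is a placeholder for the rest of the network and must
    return a single float (for example, a steering command).
    """
    rng = random.Random(seed)
    samples = [
        forward(spatial_dropout(feature_maps, rate, rng))
        for _ in range(n_samples)
    ]
    return statistics.fmean(samples), statistics.pvariance(samples)


# Toy usage: 8 constant 2x2 feature maps and a "network" that averages them.
maps = [[[1.0, 1.0], [1.0, 1.0]] for _ in range(8)]
average = lambda f: sum(v for ch in f for row in ch for v in row) / 32
mean, variance = mc_dropout_predict(average, maps, rate=0.5, n_samples=100)
```

The variance across samples serves as the uncertainty estimate. Because neighboring activations within a feature map are strongly correlated, masking them together removes information that element-wise masks would leave recoverable from adjacent pixels.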
