Andreas Joseph

Inflation Attitudes of Large Language Models

Dec 16, 2025

Abstract:This paper investigates the ability of Large Language Models (LLMs), specifically GPT-3.5-turbo (GPT), to form inflation perceptions and expectations based on macroeconomic price signals. We compare the LLM's output to household survey data and official statistics, mimicking the information set and demographic characteristics of the Bank of England's Inflation Attitudes Survey (IAS). Our quasi-experimental design exploits the timing of GPT's training cut-off in September 2021 which means it has no knowledge of the subsequent UK inflation surge. We find that GPT tracks aggregate survey projections and official statistics at short horizons. At a disaggregated level, GPT replicates key empirical regularities of households' inflation perceptions, particularly for income, housing tenure, and social class. A novel Shapley value decomposition of LLM outputs suited for the synthetic survey setting provides well-defined insights into the drivers of model outputs linked to prompt content. We find that GPT demonstrates a heightened sensitivity to food inflation information similar to that of human respondents. However, we also find that it lacks a consistent model of consumer price inflation. More generally, our approach could be used to evaluate the behaviour of LLMs for use in the social sciences, to compare different models, or to assist in survey design.

Deep Reinforcement Learning in a Monetary Model

Apr 19, 2021

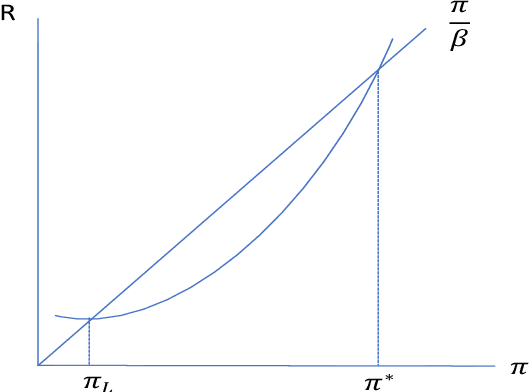

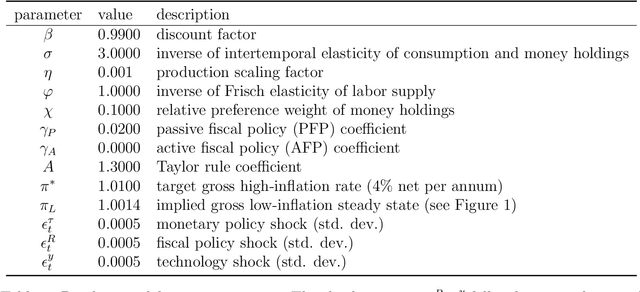

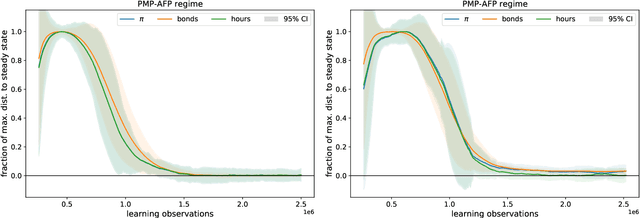

Abstract:We propose using deep reinforcement learning to solve dynamic stochastic general equilibrium models. Agents are represented by deep artificial neural networks and learn to solve their dynamic optimisation problem by interacting with the model environment, of which they have no a priori knowledge. Deep reinforcement learning offers a flexible yet principled way to model bounded rationality within this general class of models. We apply our proposed approach to a classical model from the adaptive learning literature in macroeconomics which looks at the interaction of monetary and fiscal policy. We find that, contrary to adaptive learning, the artificially intelligent household can solve the model in all policy regimes.

Shapley regressions: A framework for statistical inference on machine learning models

Mar 11, 2019

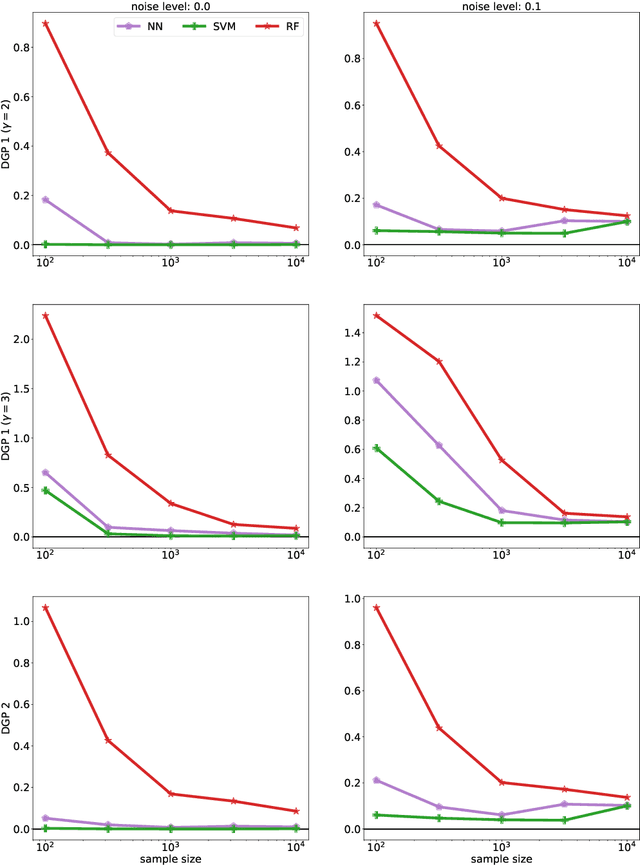

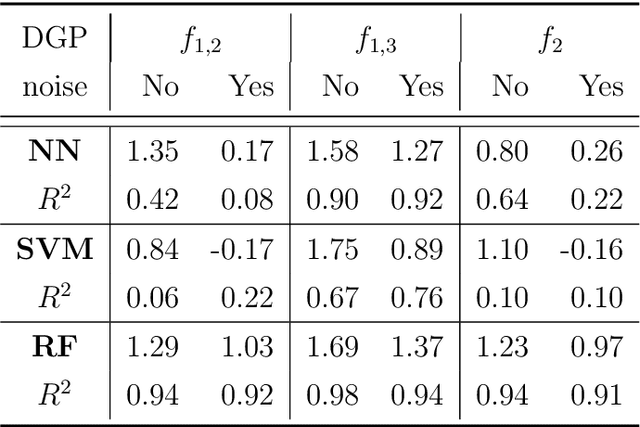

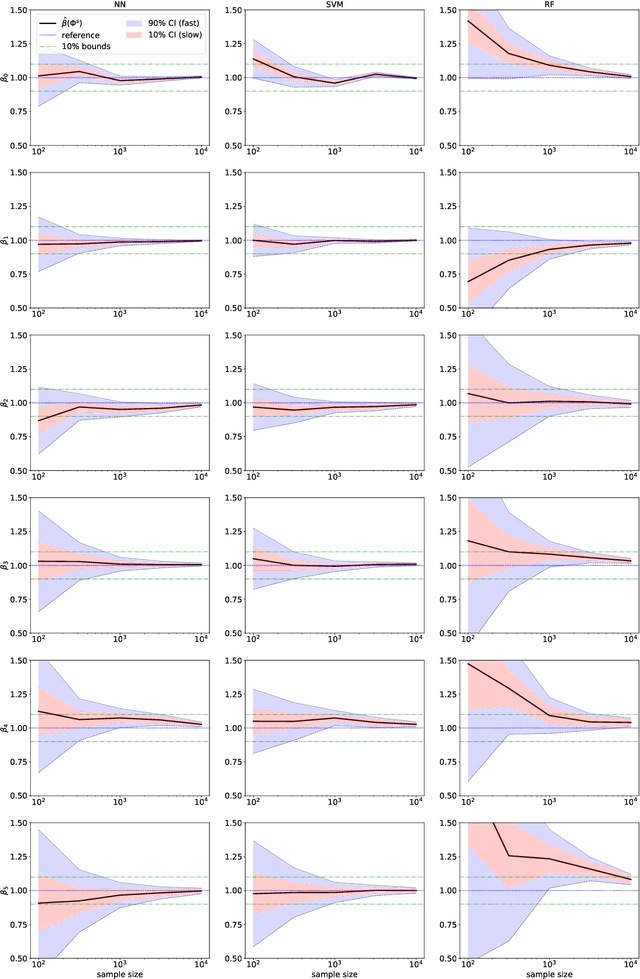

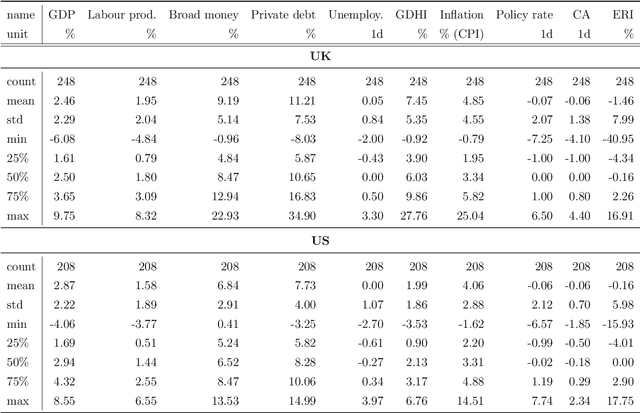

Abstract:Machine learning models often excel in the accuracy of their predictions but are opaque due to their non-linear and non-parametric structure. This makes statistical inference challenging and disqualifies them from many applications where model interpretability is crucial. This paper proposes the Shapley regression framework as an approach for statistical inference on non-linear or non-parametric models. Inference is performed based on the Shapley value decomposition of a model, a pay-off concept from cooperative game theory. I show that universal approximators from machine learning are estimation consistent and introduce hypothesis tests for individual variable contributions, model bias and parametric functional forms. The inference properties of state-of-the-art machine learning models - like artificial neural networks, support vector machines and random forests - are investigated using numerical simulations and real-world data. The proposed framework is unique in the sense that it is identical to the conventional case of statistical inference on a linear model if the model is linear in parameters. This makes it a well-motivated extension to more general models and strengthens the case for the use of machine learning to inform decisions.
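The linear-model property mentioned in the abstract can be illustrated with a minimal sketch. It is not the paper's implementation: the function `shapley_values`, the background-sample value function, and the toy coefficients are all assumptions made for illustration. Under those assumptions, the exact Shapley decomposition of a linear model reduces to each coefficient times the feature's deviation from its background mean.

```python
from itertools import combinations
from math import factorial

import numpy as np


def shapley_values(predict, x, background):
    """Exact Shapley decomposition of predict(x) relative to a background sample.

    Hypothetical helper: the value of a feature coalition is the mean prediction
    when those features are fixed at x and the rest are drawn from `background`.
    Cost grows as 2^n, so this is only practical for a handful of features.
    """
    n = len(x)

    def value(subset):
        data = background.copy()
        idx = list(subset)
        if idx:
            data[:, idx] = x[idx]
        return predict(data).mean()

    phi = np.zeros(n)
    for k in range(n):
        others = [j for j in range(n) if j != k]
        for size in range(n):
            # Standard Shapley weight for a coalition of this size.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for s in combinations(others, size):
                phi[k] += weight * (value(s + (k,)) - value(s))
    return phi


# Toy linear model with assumed coefficients (illustrative only).
rng = np.random.default_rng(0)
background = rng.normal(size=(200, 3))
beta = np.array([1.5, -2.0, 0.5])
intercept = 0.3


def linear_model(data):
    return intercept + data @ beta


x = np.array([1.0, 0.5, -1.0])
phi = shapley_values(linear_model, x, background)

# For a linear model, each Shapley value is beta_k * (x_k - mean of feature k).
expected = beta * (x - background.mean(axis=0))
```

In this sketch the components of `phi` also sum to the gap between the prediction at `x` and the mean background prediction, which is the efficiency property of Shapley values.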
