Andreas Erzigkeit

Pitfalls and Limits in Automatic Dementia Assessment

Aug 06, 2025

Abstract: Current work on speech-based dementia assessment focuses either on feature extraction to predict assessment scales or on the automation of existing test procedures. Most research uses public data unquestioningly and rarely performs a detailed error analysis, focusing primarily on numerical performance. We perform an in-depth analysis of an automated standardized dementia assessment, the Syndrom-Kurz-Test. We find that although the overall correlation with human annotators is high, certain artifacts produce high correlations for severely impaired individuals, while correlations are lower for healthy or mildly impaired ones. Speech production decreases with cognitive decline, leading to overoptimistic correlations when test scoring relies on word naming. Depending on the test design, fallback handling introduces further biases that favor certain groups. These pitfalls remain independent of group distributions in datasets and require differentiated analysis of target groups.
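The point about overall versus per-group correlation can be illustrated with a minimal sketch. All scores below are invented for illustration and are not taken from the paper; the group names and the `pearson` helper are assumptions. The sketch only shows how a pooled Pearson correlation can look high when one group spans a wide score range, even though agreement within another group is poor.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient of two equally long sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


# Hypothetical scores (NOT from the paper): human reference vs. automatic scoring.
# Healthy / mildly impaired: narrow score range, automatic scores disagree.
healthy_human = [0, 1, 2, 1, 0, 2]
healthy_auto = [1, 0, 1, 2, 2, 0]

# Severely impaired: wide score range, automatic scores track the reference.
severe_human = [10, 14, 18, 22, 26, 30]
severe_auto = [11, 13, 19, 21, 27, 29]

r_healthy = pearson(healthy_human, healthy_auto)
r_severe = pearson(severe_human, severe_auto)
r_pooled = pearson(healthy_human + severe_human, healthy_auto + severe_auto)

print(f"healthy/mild: r = {r_healthy:.2f}")
print(f"severe:       r = {r_severe:.2f}")
print(f"pooled:       r = {r_pooled:.2f}")
```

In this toy setup the pooled value is driven almost entirely by the gap between the two groups, which is why reporting correlations per target group is more informative than a single overall number.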

Automated Evaluation of Standardized Dementia Screening Tests

Jun 13, 2022

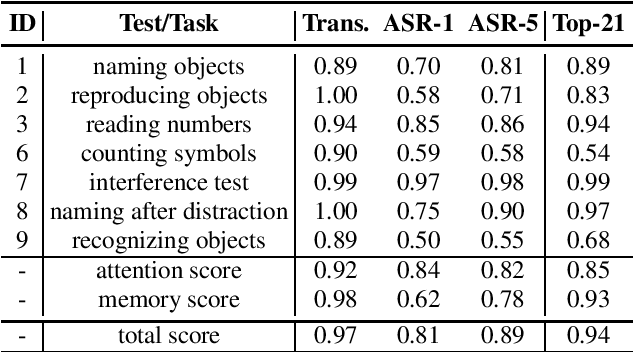

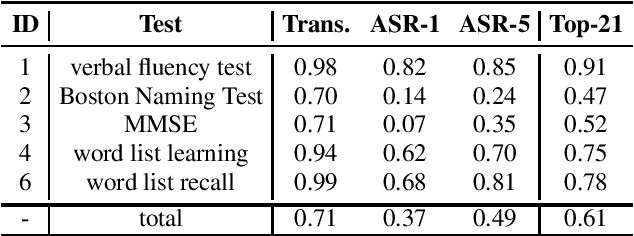

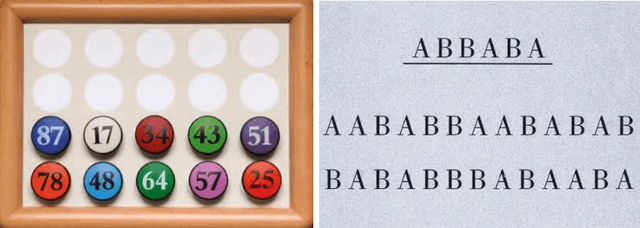

Abstract: For dementia screening and monitoring, standardized tests play a key role in clinical routine since they aim at minimizing subjectivity by measuring performance on a variety of cognitive tasks. In this paper, we report on a study that consists of a semi-standardized history taking followed by two standardized neuropsychological tests, namely the SKT and the CERAD-NB. The tests include basic tasks such as naming objects and learning word lists, but also widely used tools such as the MMSE. Most of the tasks are performed verbally and should thus be suitable for automated scoring based on transcripts. For the first batch of 30 patients, we analyze the correlation between expert manual evaluations and automatic evaluations based on manual and automatic transcriptions. For both SKT and CERAD-NB, we observe high to perfect correlations using manual transcripts; for certain tasks with lower correlation, the automatic scoring is stricter than the human reference since it is limited to the audio. Using automatic transcriptions, correlations drop as expected and are related to recognition accuracy; however, we still observe high correlations of up to 0.98 (SKT) and 0.85 (CERAD-NB). We show that using word alternatives helps to mitigate recognition errors and subsequently improves correlation with expert scores.

Going Beyond the Cookie Theft Picture Test: Detecting Cognitive Impairments using Acoustic Features

Jun 10, 2022

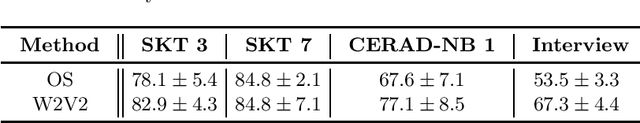

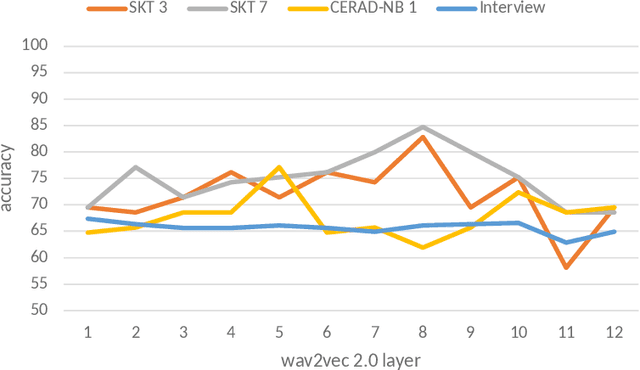

Abstract: Standardized tests play a crucial role in the detection of cognitive impairment. Previous work demonstrated that automatic detection of cognitive impairment is possible using audio data from a standardized picture description task. The presented study goes beyond that, evaluating our methods on data taken from two standardized neuropsychological tests, namely the German SKT and a German version of the CERAD-NB, and a semi-structured clinical interview between a patient and a psychologist. For the tests, we focus on speech recordings of three sub-tests: reading numbers (SKT 3), interference (SKT 7), and verbal fluency (CERAD-NB 1). We show that acoustic features from standardized tests can be used to reliably discriminate cognitively impaired individuals from non-impaired ones. Furthermore, we provide evidence that even features extracted from random speech samples of the interview can be a discriminator of cognitive impairment. In our baseline experiments, we use OpenSMILE features and Support Vector Machine classifiers. In an improved setup, we show that using wav2vec 2.0 features instead, we can achieve an accuracy of up to 85%.