Alexei A. Koulakov

Neural networks with motivation

Jun 23, 2019

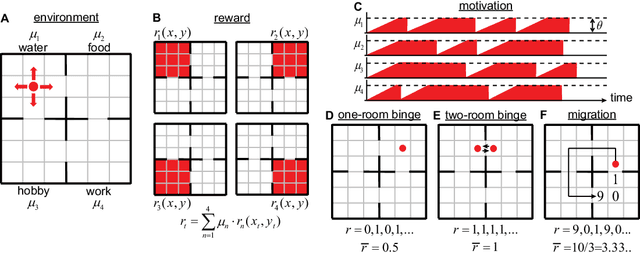

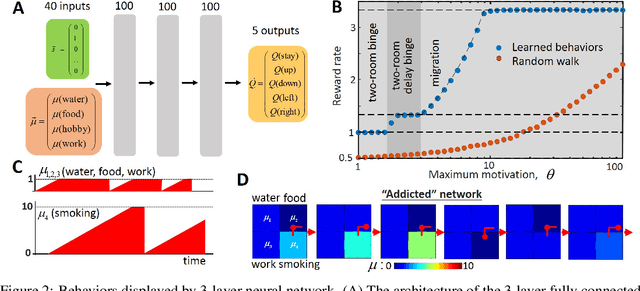

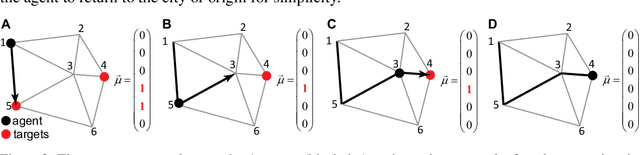

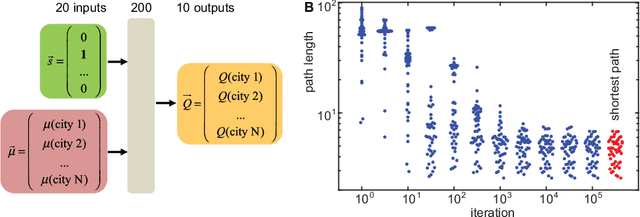

Abstract: Motivational salience is a mechanism that determines an organism's current level of attraction to or repulsion from a particular object, event, or outcome. Motivational salience is described by modulating the reward by an externally controlled parameter that remains constant within a single behavioral episode. The vector of perceived values of various outcomes determines the motivation of an organism toward different goals. An organism's behavior should be able to adapt to a time-varying motivation vector. Here, we propose a reinforcement learning framework that relies on neural networks to learn optimal behavior for different dynamically changing motivation vectors. First, we show that Q-learning neural networks can learn to navigate towards variable goals whose relative salience is determined by a multidimensional motivational vector. Second, we show that a Q-learning network with motivation can learn complex behaviors towards several goals distributed in an environment. Finally, we show that firing patterns displayed by neurons in the ventral pallidum, a basal ganglia structure playing a crucial role in motivated behaviors, are similar to the responses of neurons in recurrent neural networks trained in similar conditions. Like the pallidum neurons, the artificial neural networks contain two different classes of neurons, tuned to reward and punishment. We conclude that reinforcement learning networks can efficiently learn optimal behavior in conditions where reward values are modulated by external motivational processes with arbitrary dynamics. Motivational salience can be viewed as a general-purpose model-free method for identifying and capturing changes in subjective or objective values of multiple rewards. Networks with motivation may also be parts of a larger hierarchical reinforcement learning system in the brain.
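As a rough illustration of reward modulated by a motivation vector that stays fixed within an episode, the sketch below uses tabular Q-learning on a toy corridor with a goal at each end. This is not the paper's setup: the abstract describes neural networks, and the table, the corridor, the two motivation vectors, and every name and hyperparameter here (`modulated_reward`, `train`, `alpha`, `gamma`, `eps`) are assumptions made only for this example.

```python
import random

ACTIONS = (-1, +1)  # step left / step right along a 1-D corridor


def modulated_reward(motivation, outcomes):
    """Scalar reward: each outcome indicator weighted by its motivation."""
    return sum(m * o for m, o in zip(motivation, outcomes))


def greedy_action(q, k, cell):
    """Best-known action for motivation index k in the given cell."""
    return max(ACTIONS, key=lambda a: q.get((k, cell, a), 0.0))


def train(motivations, n_cells=5, episodes=2000,
          alpha=0.5, gamma=0.9, eps=0.2, seed=0):
    """Tabular Q-learning with Q-values conditioned on the motivation.

    Assumption: the table is indexed by the motivation's position in
    `motivations`, standing in for a network that takes the vector as input.
    """
    rng = random.Random(seed)
    q = {}  # (motivation index, cell, action) -> estimated value
    last = n_cells - 1
    for _ in range(episodes):
        k = rng.randrange(len(motivations))  # fixed for the whole episode
        cell = n_cells // 2                  # start in the middle
        while 0 < cell < last:               # both ends are terminal goals
            if rng.random() < eps:
                a = rng.choice(ACTIONS)      # explore
            else:
                a = greedy_action(q, k, cell)
            nxt = cell + a
            # Outcome indicators: reached the left goal, reached the right goal.
            outcomes = (float(nxt == 0), float(nxt == last))
            target = modulated_reward(motivations[k], outcomes)
            if 0 < nxt < last:               # bootstrap only from non-terminal cells
                target += gamma * max(q.get((k, nxt, b), 0.0) for b in ACTIONS)
            old = q.get((k, cell, a), 0.0)
            q[(k, cell, a)] = old + alpha * (target - old)
            cell = nxt
    return q


# Two hypothetical motivation vectors: want the left goal, want the right goal.
q = train([(1.0, 0.0), (0.0, 1.0)])
print(greedy_action(q, 0, 2), greedy_action(q, 1, 2))
```

Because the Q-values are conditioned on the motivation, the same learner can hold a different policy for each motivation vector. In this toy setting the greedy step from the center cell should point toward whichever goal the current motivation favors.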

Representations of Sound in Deep Learning of Audio Features from Music

Dec 08, 2017

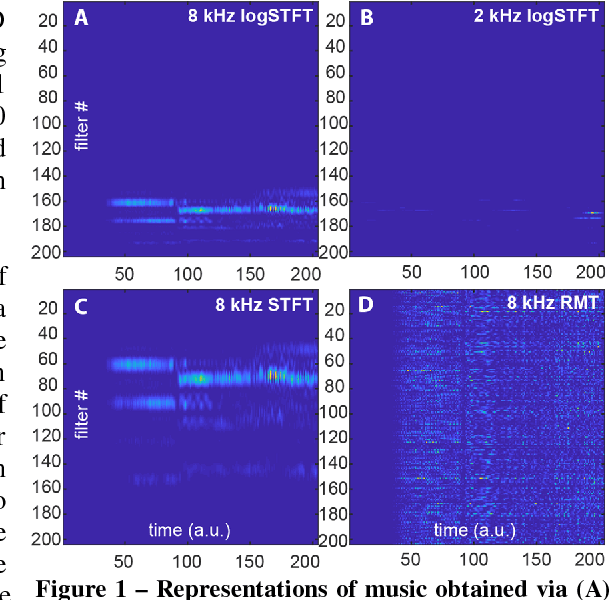

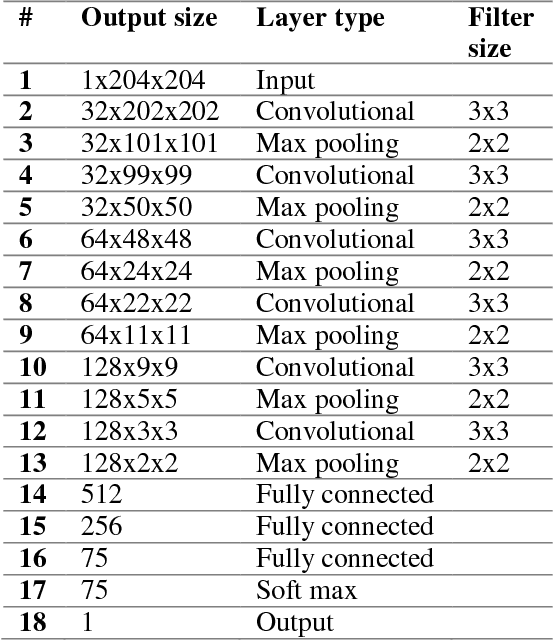

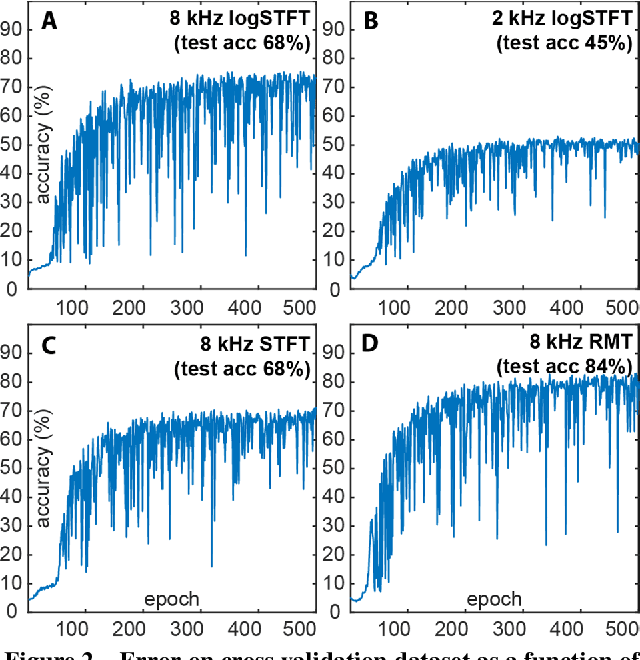

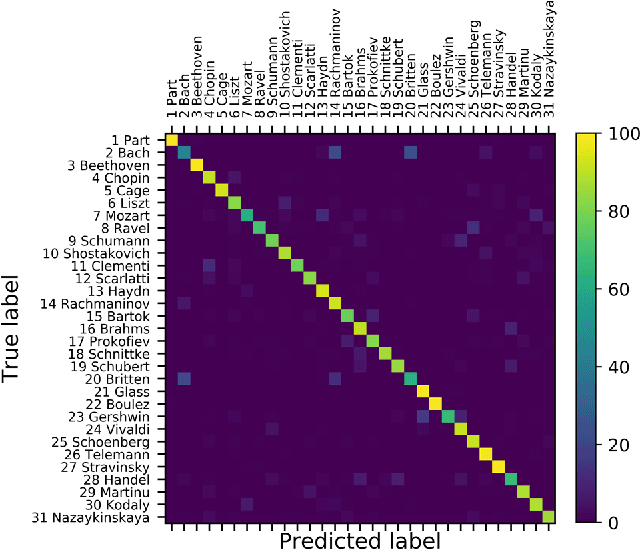

Abstract: The work of a single musician, group or composer can vary widely in terms of musical style. Indeed, different stylistic elements, from performance medium and rhythm to harmony and texture, are typically exploited and developed across an artist's lifetime. Yet there is often a discernible character to the work of, for instance, individual composers at the perceptual level: an experienced listener can often pick up on subtle clues in the music to identify the composer or performer. Here we suggest that a convolutional network may learn these subtle clues or features given an appropriate representation of the music. In this paper, we apply a deep convolutional neural network to a large audio dataset and empirically evaluate its performance on audio classification tasks. Our trained network demonstrates accurate performance on such classification tasks when presented with 5 s examples of music obtained by simple transformations of the raw audio waveform. A particularly interesting example is the spectral representation of music obtained by application of a logarithmically spaced filter bank, mirroring the early stages of auditory signal transduction in mammals. The most successful representation of music to facilitate discrimination was obtained via a random matrix transform (RMT). Networks based on logarithmic filter banks and RMT were able to correctly guess the one composer out of 31 possibilities in 68 and 84 percent of cases, respectively.
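To make "logarithmically spaced filter bank" concrete, the sketch below computes center frequencies that sit a constant ratio apart, so that each octave receives the same number of bands. The abstract does not state the frequency range, the number of bands, or the filter shapes, so `f_min`, `f_max`, `n_bands`, and the function name are illustrative assumptions only.

```python
def log_spaced_centers(f_min, f_max, n_bands):
    """Center frequencies spaced by a constant ratio between f_min and f_max."""
    if n_bands < 2 or not 0 < f_min < f_max:
        raise ValueError("need n_bands >= 2 and 0 < f_min < f_max")
    # The same multiplicative step separates every pair of neighboring bands.
    ratio = (f_max / f_min) ** (1.0 / (n_bands - 1))
    return [f_min * ratio ** i for i in range(n_bands)]


# Hypothetical range spanning seven octaves with one band per octave edge.
centers = log_spaced_centers(55.0, 7040.0, 8)
print([round(f, 1) for f in centers])
```

A linearly spaced bank would instead place most of its bands in the top octaves. The constant-ratio spacing is what gives low and high registers comparable resolution.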

Ocular dominance patterns in mammalian visual cortex: A wire length minimization approach

Jun 14, 1999

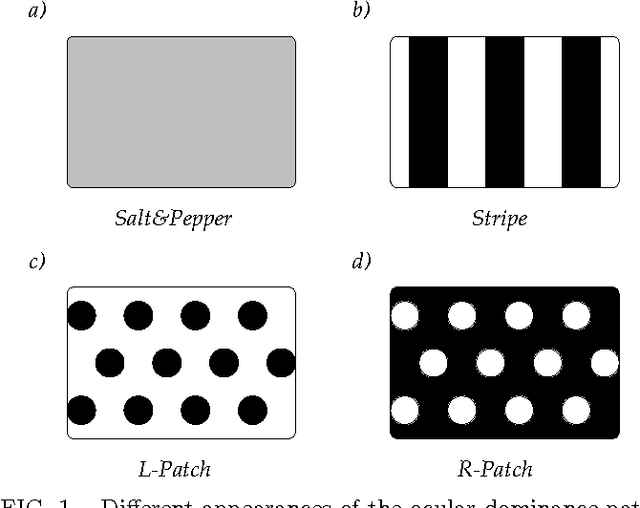

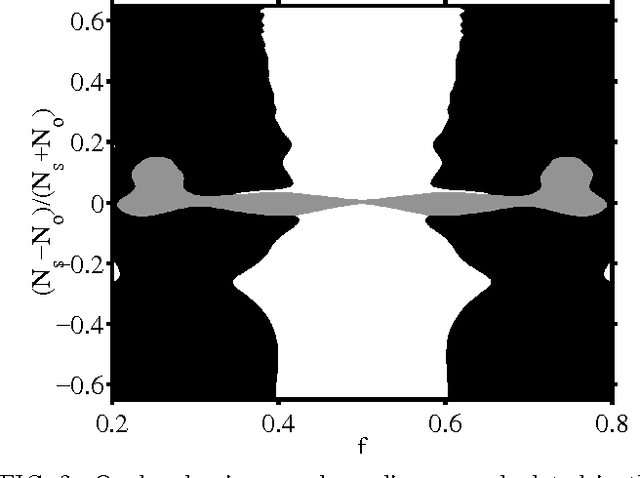

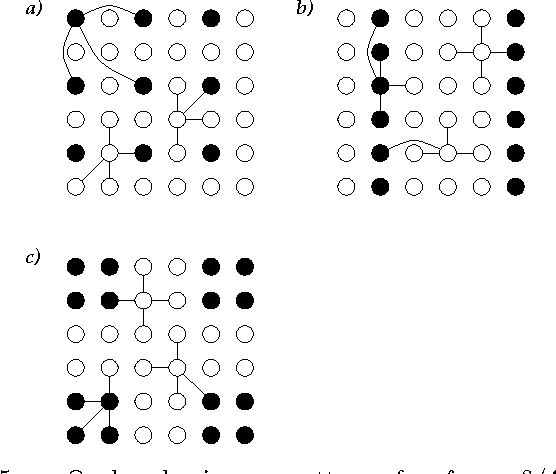

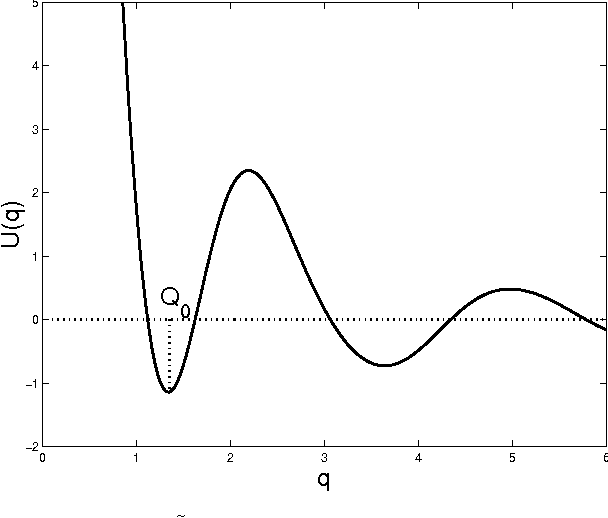

Abstract: We propose a theory for ocular dominance (OD) patterns in mammalian primary visual cortex. This theory is based on the premise that the OD pattern is an adaptation to minimize the length of intra-cortical wiring. Thus we can understand the existing OD patterns by solving a wire length minimization problem. We divide all the neurons into two classes: left-eye dominated and right-eye dominated. We find that segregation of neurons into monocular regions reduces wire length if the number of connections with the neurons of the same class differs from that with the other class. The shape of the regions depends on the relative fraction of neurons in the two classes. If the numbers are close, we find that the optimal OD pattern consists of interdigitating stripes. If one class is less numerous than the other, the optimal OD pattern consists of patches of neurons of the less numerous class in a sea of neurons of the other class. We predict the transition from stripes to patches when the fraction of neurons dominated by the ipsilateral eye is about 40%. This prediction agrees with the data in macaque and Cebus monkeys. This theory can be applied to other binary cortical systems.
