COVID-Net Clinical ICU: Enhanced Prediction of ICU Admission for COVID-19 Patients via Explainability and Trust Quantification

Sep 14, 2021 · Audrey Chung, Mahmoud Famouri, Andrew Hryniowski, Alexander Wong

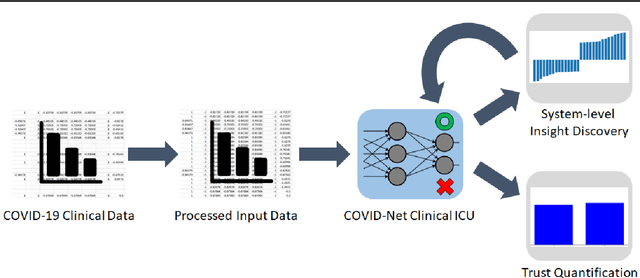

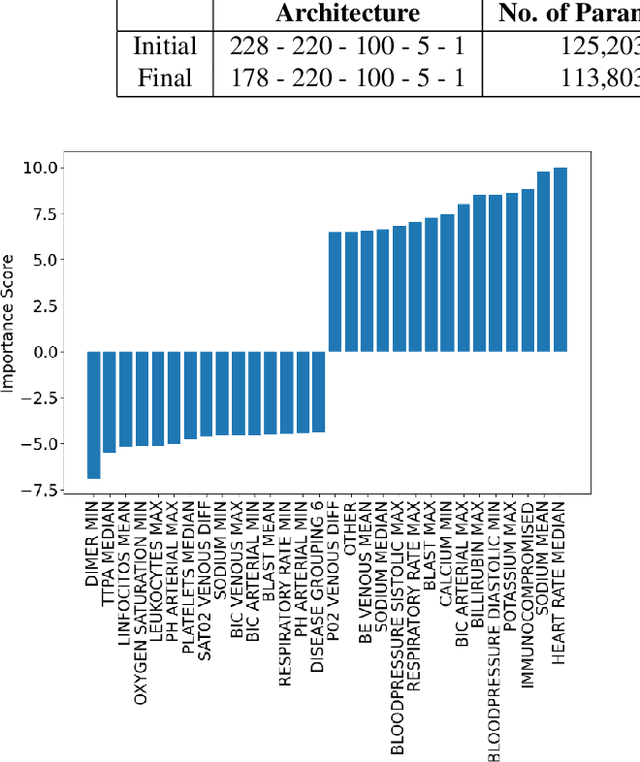

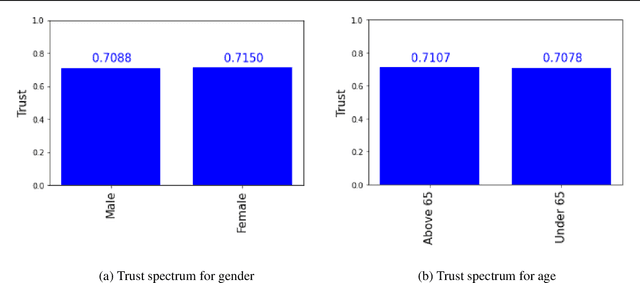

The COVID-19 pandemic continues to have a devastating global impact and has placed a tremendous burden on struggling healthcare systems around the world. Given the limited resources, accurate patient triaging and care planning are critical in the fight against COVID-19, and one crucial task within care planning is determining whether a patient should be admitted to a hospital's intensive care unit (ICU). Motivated by the need for transparent and trustworthy ICU admission clinical decision support, we introduce COVID-Net Clinical ICU, a neural network for ICU admission prediction based on patient clinical data. Driven by a transparent, trust-centric methodology, the proposed COVID-Net Clinical ICU was built using a clinical dataset from Hospital Sirio-Libanes comprising 1,925 COVID-19 patients, and is able to predict when a COVID-19 positive patient would require ICU admission with an accuracy of 96.9%, facilitating better care planning for hospitals amidst the ongoing pandemic. We conducted system-level insight discovery using a quantitative explainability strategy to study the decision-making impact of different clinical features and gain actionable insights for enhancing predictive performance. We further leveraged a suite of trust quantification metrics to gain deeper insights into the trustworthiness of COVID-Net Clinical ICU. By digging deeper into when and why clinical predictive models make certain decisions, we can uncover key factors in decision making for critical clinical decision support tasks such as ICU admission prediction and identify the situations under which clinical predictive models can be trusted for greater accountability.

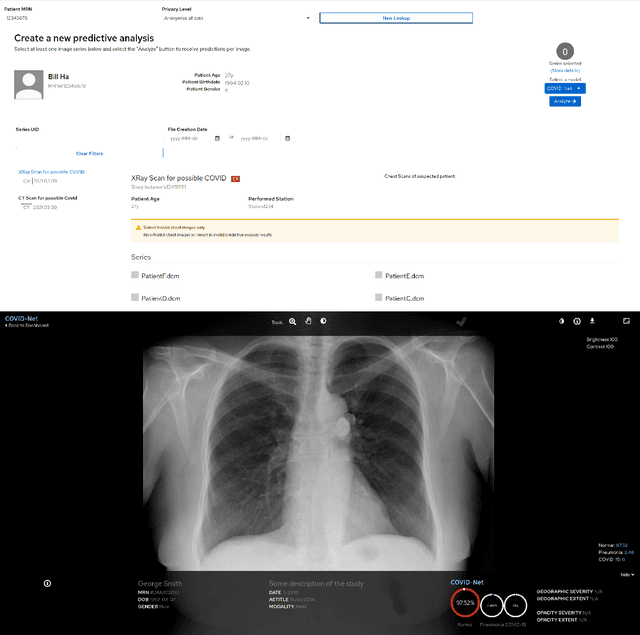

COVID-Net MLSys: Designing COVID-Net for the Clinical Workflow

Sep 14, 2021 · Audrey G. Chung, Maya Pavlova, Hayden Gunraj, Naomi Terhljan, Alexander MacLean, Hossein Aboutalebi, Siddharth Surana, Andy Zhao, Saad Abbasi, Alexander Wong

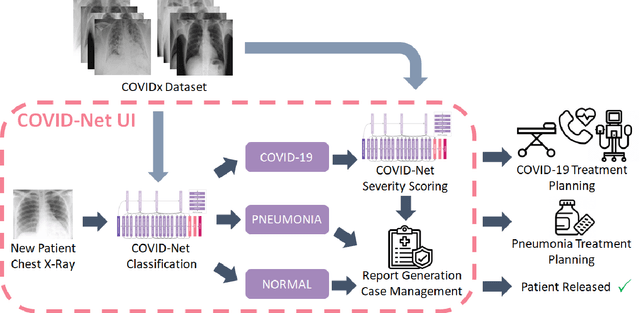

As the COVID-19 pandemic continues to cause devastation globally, one promising field of research is machine learning-driven computer vision to streamline various parts of the COVID-19 clinical workflow. These machine learning methods are typically stand-alone models designed without consideration for the integration necessary for real-world application workflows. In this study, we take a machine learning and systems (MLSys) perspective to design a system for COVID-19 patient screening with the clinical workflow in mind. The COVID-Net system comprises the continuously evolving COVIDx dataset, the COVID-Net deep neural network for COVID-19 patient detection, and the COVID-Net S deep neural networks for disease severity scoring of COVID-19 positive patient cases. The deep neural networks within the COVID-Net system possess state-of-the-art performance and are designed to be integrated within a user interface (UI) for clinical decision support, with automatic report generation to assist clinicians in their treatment decisions.

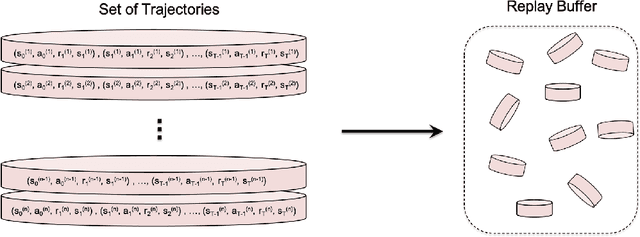

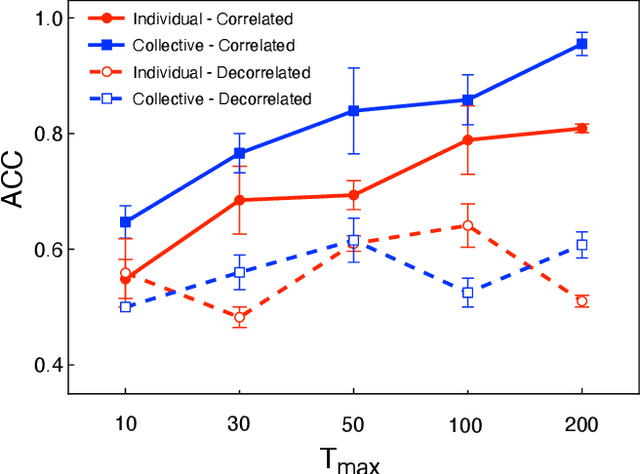

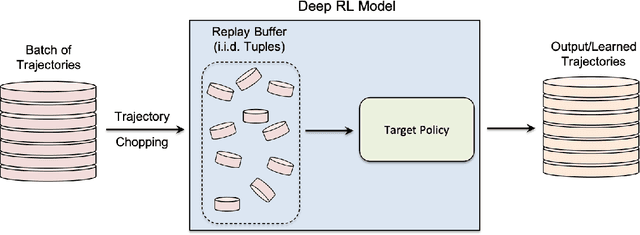

Where Did You Learn That From? Surprising Effectiveness of Membership Inference Attacks Against Temporally Correlated Data in Deep Reinforcement Learning

Sep 08, 2021 · Maziar Gomrokchi, Susan Amin, Hossein Aboutalebi, Alexander Wong, Doina Precup

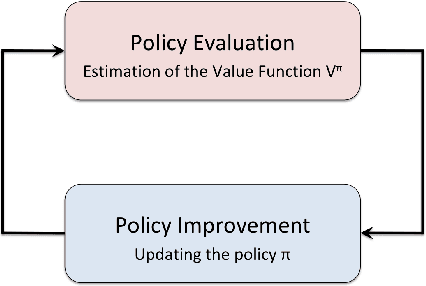

While significant research advances have been made in the field of deep reinforcement learning, a major challenge to widespread industrial adoption of deep reinforcement learning that has recently surfaced but remains little explored is the potential vulnerability to privacy breaches. In particular, there have been no concrete adversarial attack strategies in the literature tailored for studying the vulnerability of deep reinforcement learning algorithms to membership inference attacks. To address this gap, we propose an adversarial attack framework tailored for testing the vulnerability of deep reinforcement learning algorithms to membership inference attacks. More specifically, we design a series of experiments to investigate the impact of temporal correlation, which naturally exists in reinforcement learning training data, on the probability of information leakage. Furthermore, we study the differences in the performance of collective and individual membership attacks against deep reinforcement learning algorithms. Experimental results show that the proposed adversarial attack framework is surprisingly effective at inferring the data used during deep reinforcement training, with an accuracy exceeding 84% in individual mode and 97% in collective mode on two different control tasks in OpenAI Gym, which raises serious privacy concerns in the deployment of models resulting from deep reinforcement learning. Moreover, we show that the learning state of a reinforcement learning algorithm significantly influences the level of the privacy breach.

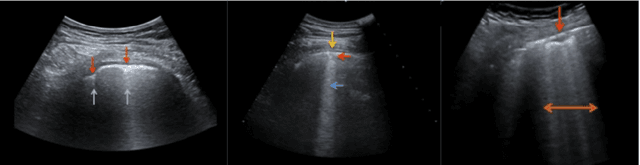

COVID-Net US: A Tailored, Highly Efficient, Self-Attention Deep Convolutional Neural Network Design for Detection of COVID-19 Patient Cases from Point-of-care Ultrasound Imaging

Aug 05, 2021 · Alexander MacLean, Saad Abbasi, Ashkan Ebadi, Andy Zhao, Maya Pavlova, Hayden Gunraj, Pengcheng Xi, Sonny Kohli, Alexander Wong

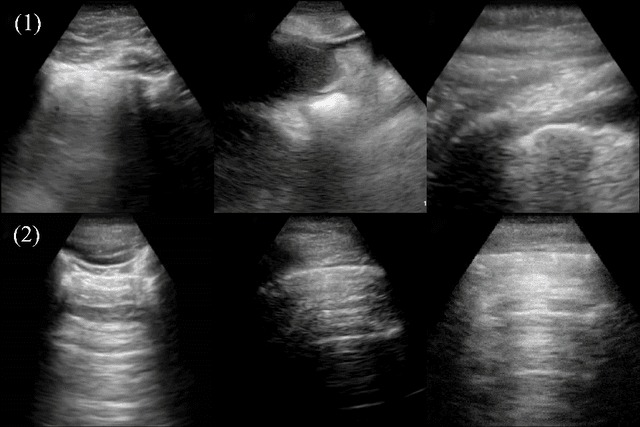

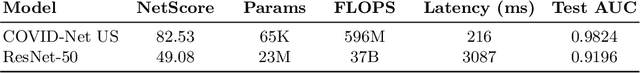

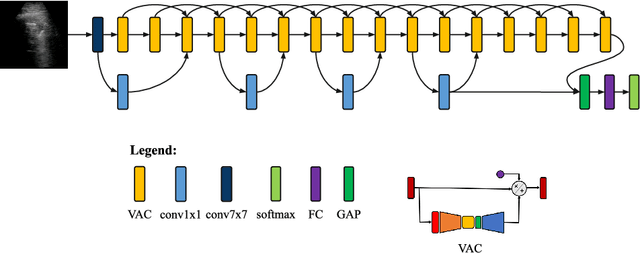

The Coronavirus Disease 2019 (COVID-19) pandemic has impacted many aspects of life globally, and a critical factor in mitigating its effects is screening individuals for infection, thereby allowing both proper treatment for those individuals and action to prevent further spread of the virus. Point-of-care ultrasound (POCUS) imaging has been proposed as a screening tool, as it is a much cheaper and easier-to-apply imaging modality than those traditionally used for pulmonary examinations, namely chest x-ray and computed tomography. Given the scarcity of expert radiologists for interpreting POCUS examinations in many highly affected regions around the world, low-cost deep learning-driven clinical decision support solutions can have a large impact during the ongoing pandemic. Motivated by this, we introduce COVID-Net US, a highly efficient, self-attention deep convolutional neural network design tailored for COVID-19 screening from lung POCUS images. Experimental results show that the proposed COVID-Net US can achieve an AUC of over 0.98 while achieving 353X lower architectural complexity, 62X lower computational complexity, and 14.3X faster inference times on a Raspberry Pi. Clinical validation was also conducted, where select cases were reviewed and reported on by a practicing clinician (20 years of clinical practice) specializing in intensive care (ICU) with 15 years of expertise in POCUS interpretation. To advocate affordable healthcare and artificial intelligence for resource-constrained environments, we have made COVID-Net US open source and publicly available as part of the COVID-Net open source initiative.

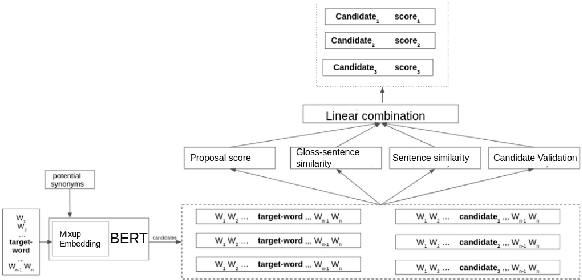

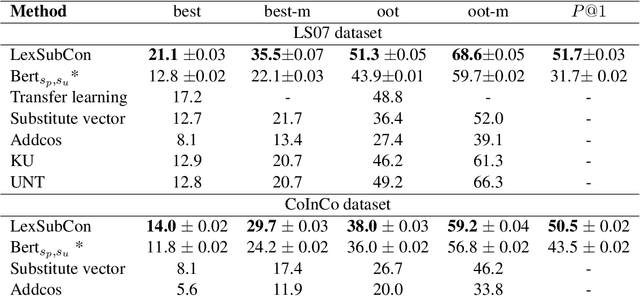

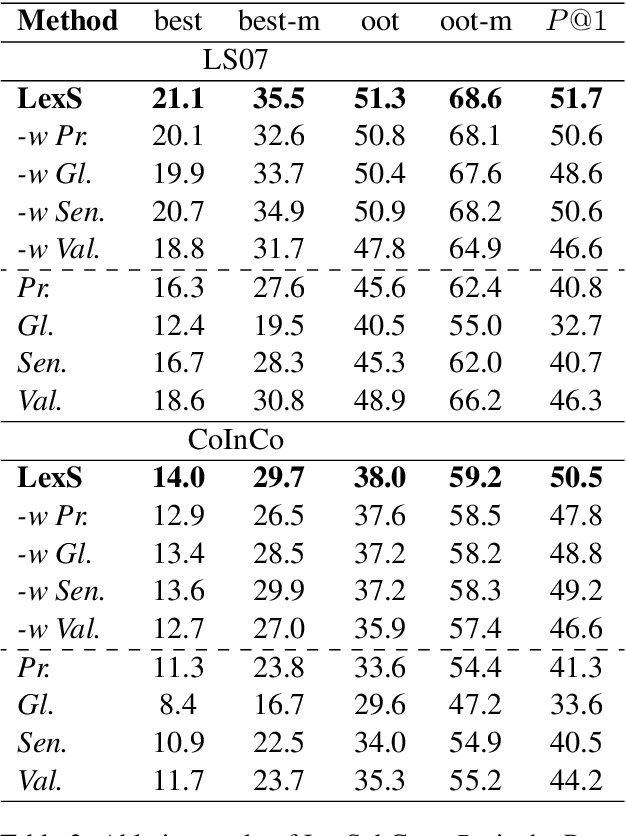

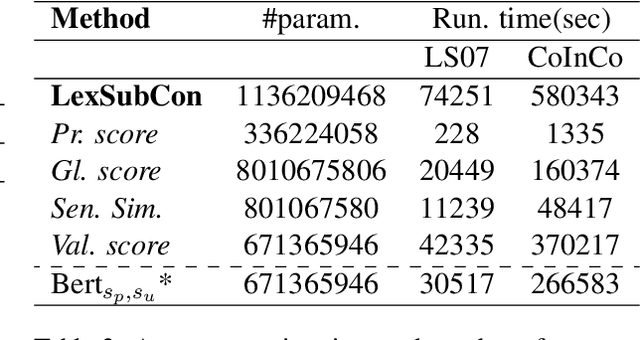

LexSubCon: Integrating Knowledge from Lexical Resources into Contextual Embeddings for Lexical Substitution

Jul 11, 2021 · George Michalopoulos, Ian McKillop, Alexander Wong, Helen Chen

Lexical substitution is the task of generating meaningful substitutes for a word in a given textual context. Contextual word embedding models have achieved state-of-the-art results in the lexical substitution task by relying on contextual information extracted from the replaced word within the sentence. However, such models do not take into account structured knowledge that exists in external lexical databases. We introduce LexSubCon, an end-to-end lexical substitution framework based on contextual embedding models that can identify highly accurate substitute candidates. This is achieved by combining contextual information with knowledge from structured lexical resources. Our approach involves: (i) introducing a novel mix-up embedding strategy in the creation of the input embedding of the target word, by linearly interpolating between the target input embedding and the average embedding of its probable synonyms; (ii) considering the similarity of the sentence-definition embeddings of the target word and its proposed candidates; and (iii) calculating the effect of each substitution on the semantics of the sentence through a fine-tuned sentence similarity model. Our experiments show that LexSubCon outperforms previous state-of-the-art methods on the LS07 and CoInCo benchmark datasets, which are widely used for lexical substitution tasks.
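The mix-up step in (i) is a linear interpolation, which can be sketched in a few lines. This is only an illustration under stated assumptions: the function name `mixup_embedding`, the weight `lam`, and its default value are hypothetical and are not taken from the abstract, which does not say how the interpolation weight is chosen.

```python
import numpy as np


def mixup_embedding(target_emb, synonym_embs, lam=0.25):
    """Interpolate a target embedding with the mean of its synonym embeddings.

    `lam` is a hypothetical interpolation weight on the target embedding;
    the abstract does not specify how this weight is set.
    """
    target_emb = np.asarray(target_emb, dtype=float)
    synonym_embs = np.asarray(synonym_embs, dtype=float)
    if synonym_embs.size == 0:
        # No synonyms available: fall back to the unmodified target embedding.
        return target_emb
    synonym_mean = synonym_embs.mean(axis=0)
    # Linear interpolation between the two vectors.
    return lam * target_emb + (1.0 - lam) * synonym_mean


if __name__ == "__main__":
    target = [1.0, 0.0]
    synonyms = [[0.0, 1.0], [0.0, 3.0]]
    print(mixup_embedding(target, synonyms, lam=0.5))
```

With `lam=1.0` the target embedding is returned unchanged, and with `lam=0.0` it is fully replaced by the synonym average.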

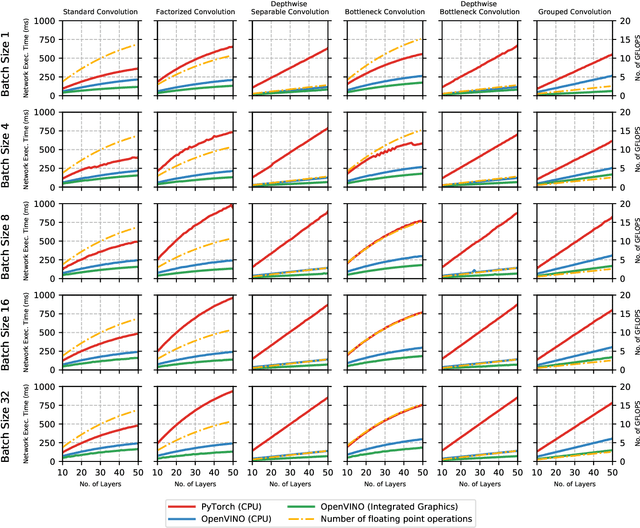

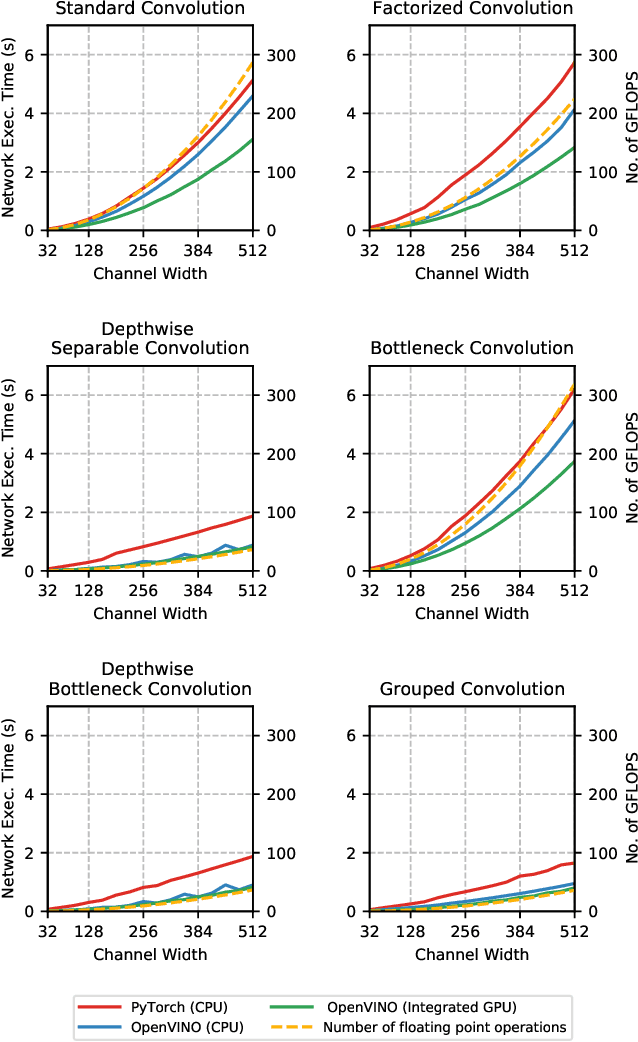

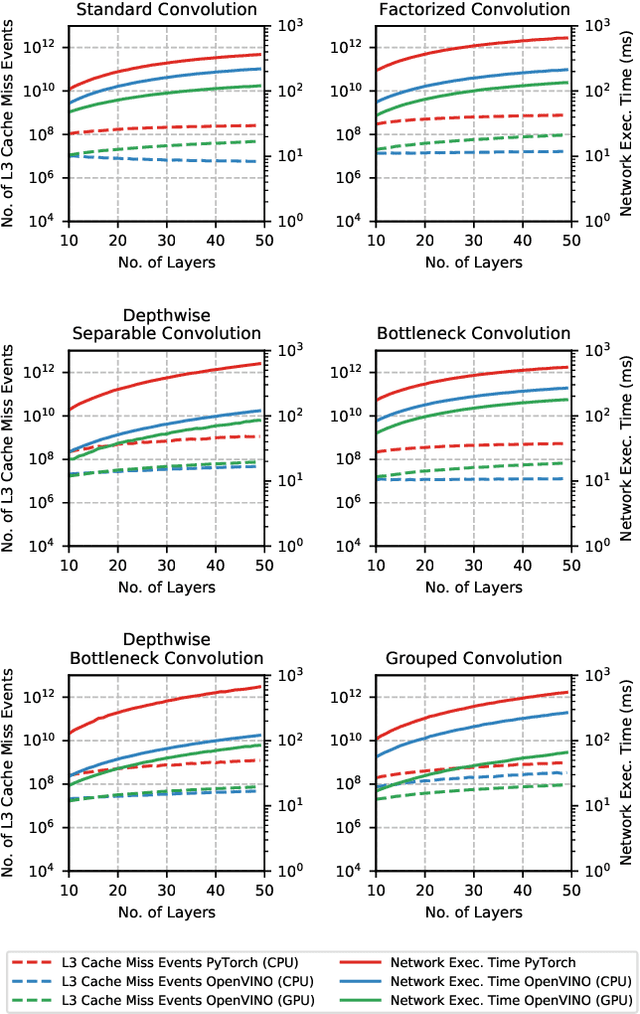

Does Form Follow Function? An Empirical Exploration of the Impact of Deep Neural Network Architecture Design on Hardware-Specific Acceleration

Jul 08, 2021 · Saad Abbasi, Mohammad Javad Shafiee, Ellick Chan, Alexander Wong

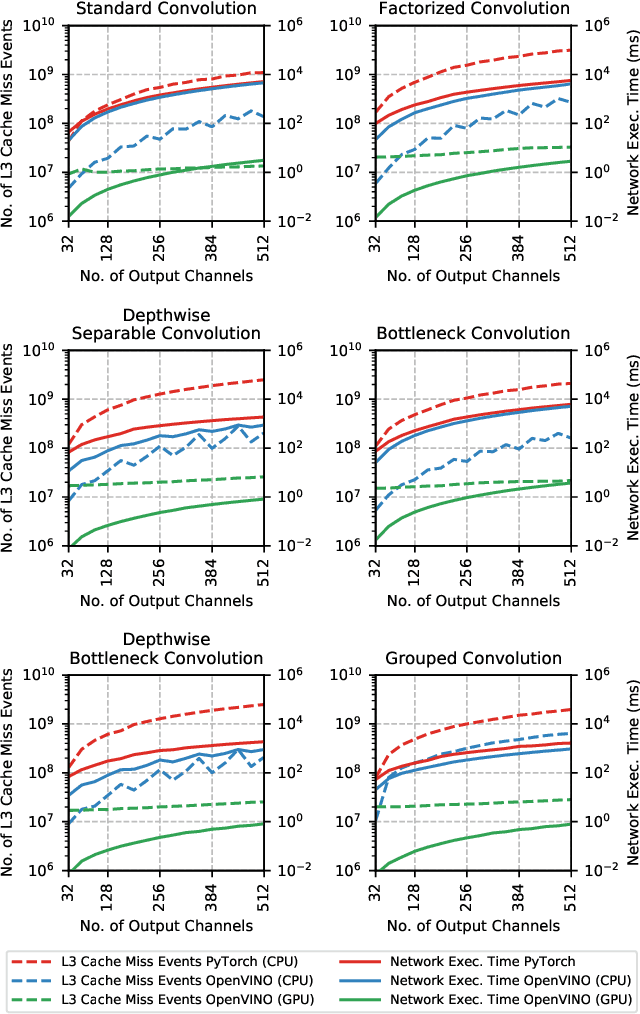

The fine-grained relationship between form and function with respect to deep neural network architecture design and hardware-specific acceleration is one area that is not well studied in the research literature, with form often dictated by accuracy as opposed to hardware function. In this study, a comprehensive empirical exploration is conducted to investigate the impact of deep neural network architecture design on the degree of inference speedup that can be achieved via hardware-specific acceleration. More specifically, we empirically study the impact of a variety of commonly used macro-architecture design patterns across different architectural depths through the lens of OpenVINO microprocessor-specific and GPU-specific acceleration. Experimental results showed that while leveraging hardware-specific acceleration achieved an average inference speedup of 380%, the degree of inference speedup varied drastically depending on the macro-architecture design pattern, with the greatest speedup achieved on the depthwise bottleneck convolution design pattern at 550%. Furthermore, we conduct an in-depth exploration of the correlation between FLOPs requirement, level 3 cache efficacy, and network latency with increasing architectural depth and width. Finally, we analyze the inference time reductions using hardware-specific acceleration when compared to native deep learning frameworks across a wide variety of hand-crafted deep convolutional neural network architecture designs as well as ones found via neural architecture search strategies. We found that the DARTS-derived architecture benefits from the greatest improvement from hardware-specific software acceleration (1200%), while the depthwise bottleneck convolution-based MobileNet-V2 has the lowest overall inference time of around 2.4 ms.

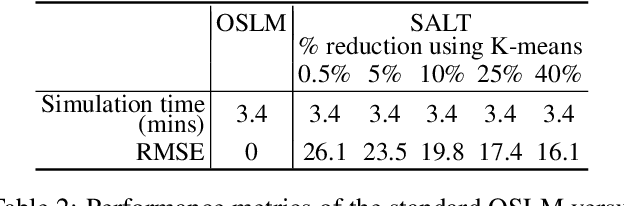

SALT: Sea lice Adaptive Lattice Tracking -- An Unsupervised Approach to Generate an Improved Ocean Model

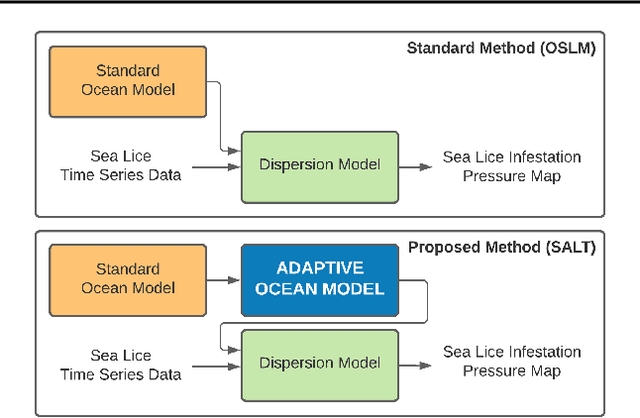

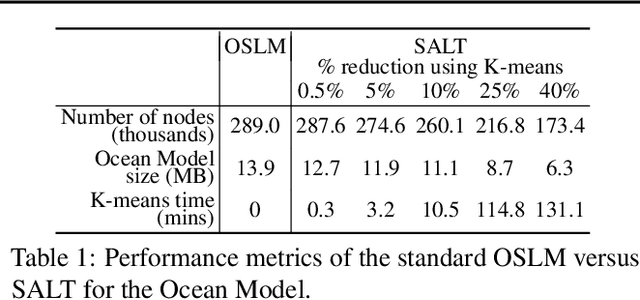

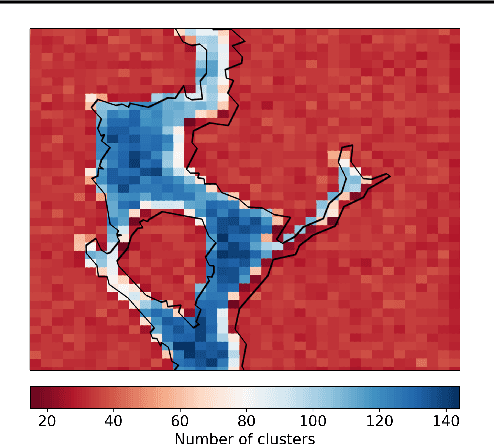

Jun 24, 2021 · Ju An Park, Vikram Voleti, Kathryn E. Thomas, Alexander Wong, Jason L. Deglint

Warming oceans due to climate change are leading to increased numbers of ectoparasitic copepods, also known as sea lice, which can cause significant ecological loss to wild salmon populations and major economic loss to aquaculture sites. The main transport mechanism driving the spread of sea lice populations is near-surface ocean currents. Present strategies to estimate the distribution of sea lice larvae are computationally complex and limit full-scale analysis. Motivated to address this challenge, we propose SALT (Sea lice Adaptive Lattice Tracking), an approach for efficient estimation of sea lice dispersion and distribution in space and time. Specifically, an adaptive spatial mesh is generated by merging nodes in the lattice graph of the Ocean Model based on local ocean properties, thus enabling a highly efficient graph representation. Using near-surface current data for Hardangerfjord, Norway, SALT demonstrates improved efficiency while maintaining results consistent with the standard method. The proposed SALT technique shows promise for enhancing proactive aquaculture management through predictive modelling of sea lice infestation pressure maps in a changing climate.
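To make the node-merging idea concrete, the sketch below coarsens a regular lattice by merging neighbouring nodes whose local property values are similar. It is a toy illustration only: the abstract does not state which ocean properties or merging criterion SALT uses, so the scalar `speed` field, the tolerance `tol`, the 4-neighbour connectivity, and the function name `merge_lattice` are all assumptions.

```python
import numpy as np


def merge_lattice(speed, tol):
    """Merge 4-connected lattice nodes whose values differ by at most `tol`.

    `speed` is a hypothetical 2-D array of a local ocean property (for
    example, near-surface current speed). Returns an integer label per node;
    nodes sharing a label form one merged node of the coarser mesh.
    """
    rows, cols = speed.shape
    parent = list(range(rows * cols))  # union-find forest over lattice nodes

    def find(i):
        # Follow parent links to the root, halving the path along the way.
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    def union(a, b):
        ra, rb = find(a), find(b)
        if ra != rb:
            parent[max(ra, rb)] = min(ra, rb)

    for r in range(rows):
        for c in range(cols):
            i = r * cols + c
            # Compare with the right and lower neighbours only, so each
            # lattice edge is visited exactly once.
            if c + 1 < cols and abs(speed[r, c] - speed[r, c + 1]) <= tol:
                union(i, i + 1)
            if r + 1 < rows and abs(speed[r, c] - speed[r + 1, c]) <= tol:
                union(i, i + cols)

    roots = np.array([find(i) for i in range(rows * cols)])
    # Relabel the roots as consecutive integers 0..k-1.
    _, labels = np.unique(roots, return_inverse=True)
    return labels.reshape(rows, cols)


if __name__ == "__main__":
    field = np.array([[0.1, 0.1, 0.9],
                      [0.1, 0.1, 0.9]])
    print(merge_lattice(field, tol=0.05))
```

Because merging is transitive, a slowly varying field can chain into one large region even when its extremes differ by more than `tol`; a practical criterion would likely need to bound the variation within each merged node.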

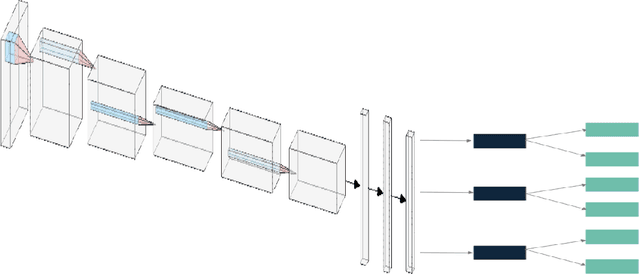

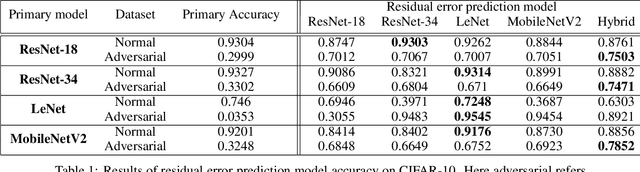

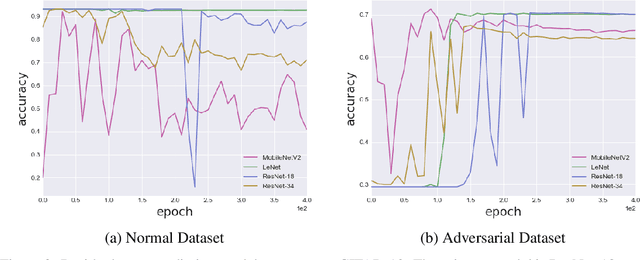

Residual Error: a New Performance Measure for Adversarial Robustness

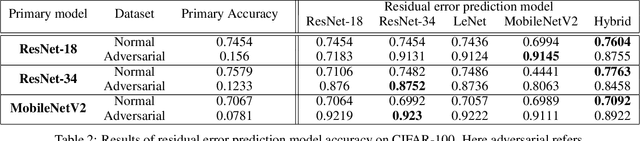

Jun 18, 2021 · Hossein Aboutalebi, Mohammad Javad Shafiee, Michelle Karg, Christian Scharfenberger, Alexander Wong

Despite the significant advances in deep learning over the past decade, a major challenge that limits the widespread adoption of deep learning has been its fragility to adversarial attacks. This sensitivity to making erroneous predictions in the presence of adversarially perturbed data makes deep neural networks difficult to adopt for certain real-world, mission-critical applications. While much of the research focus has revolved around adversarial example creation and adversarial hardening, the area of performance measures for assessing adversarial robustness is not well explored. Motivated by this, this study presents the concept of residual error, a new performance measure that can be used not only to assess the adversarial robustness of a deep neural network at the individual sample level, but also to differentiate between adversarial and non-adversarial examples to facilitate adversarial example detection. Furthermore, we introduce a hybrid model for approximating the residual error in a tractable manner. Experimental results using the case of image classification demonstrate the effectiveness and efficacy of the proposed residual error metric for assessing several well-known deep neural network architectures. These results thus illustrate that the proposed measure could be a useful tool not only for assessing the robustness of deep neural networks used in mission-critical scenarios, but also in the design of adversarially robust models.

Insights into Data through Model Behaviour: An Explainability-driven Strategy for Data Auditing for Responsible Computer Vision Applications

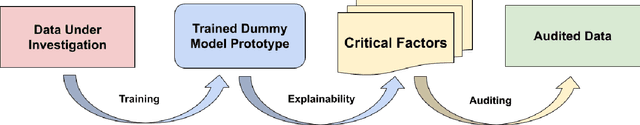

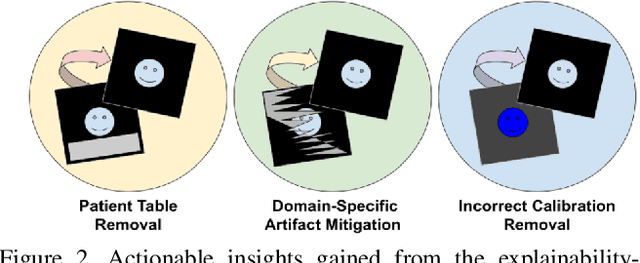

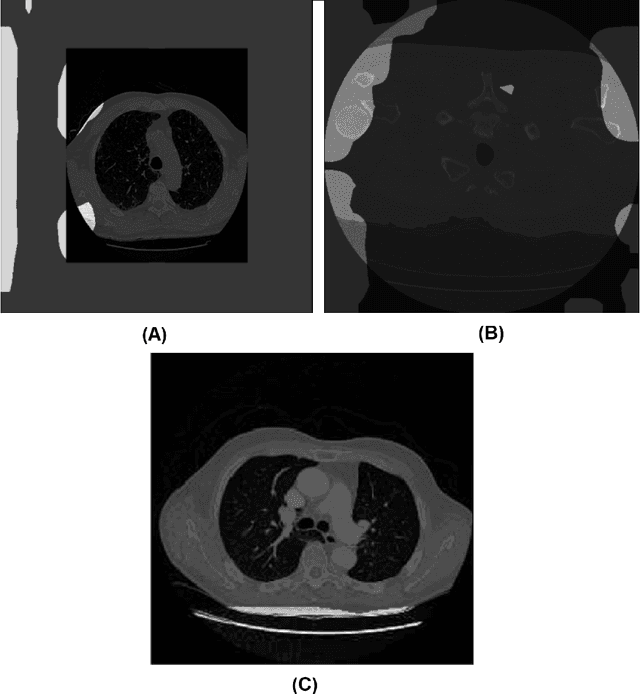

Jun 16, 2021 · Alexander Wong, Adam Dorfman, Paul McInnis, Hayden Gunraj

In this study, we take a departure and explore an explainability-driven strategy for data auditing, where actionable insights into the data at hand are discovered through the eyes of quantitative explainability on the behaviour of a dummy model prototype when exposed to data. We demonstrate this strategy by auditing two popular medical benchmark datasets, and discover hidden data quality issues that lead deep learning models to make predictions for the wrong reasons. The actionable insights gained from this explainability-driven data auditing strategy are then leveraged to address the discovered issues and enable the creation of high-performing deep learning models with appropriate prediction behaviour. The hope is that such an explainability-driven strategy can be complementary to data-driven strategies to facilitate more responsible development of machine learning algorithms for computer vision applications.

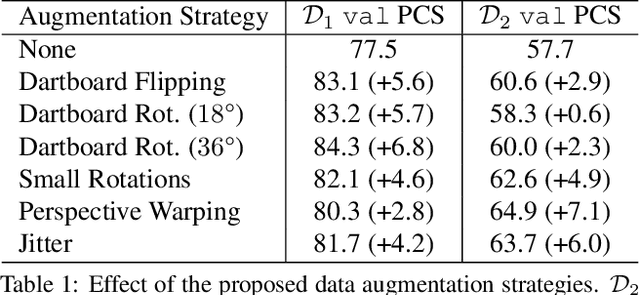

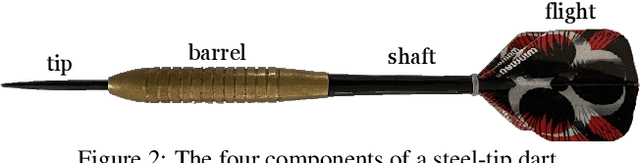

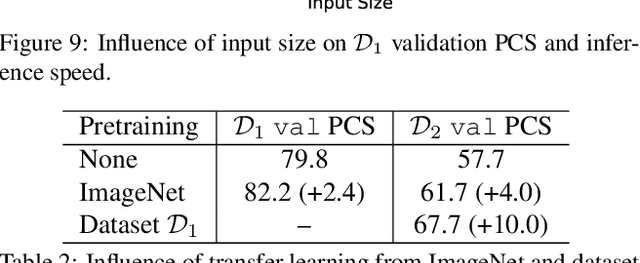

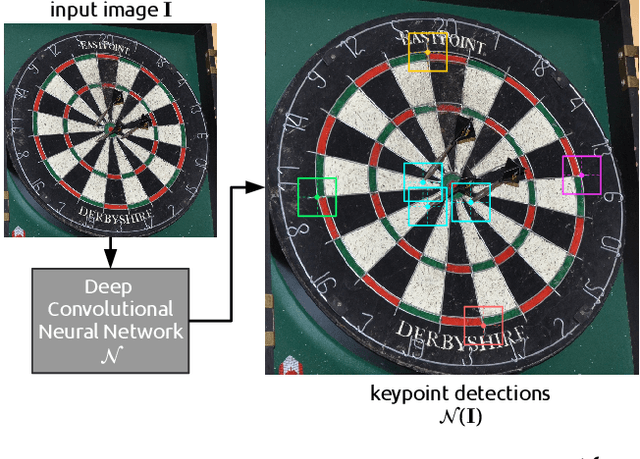

DeepDarts: Modeling Keypoints as Objects for Automatic Scorekeeping in Darts using a Single Camera

May 20, 2021 · William McNally, Pascale Walters, Kanav Vats, Alexander Wong, John McPhee

Existing multi-camera solutions for automatic scorekeeping in steel-tip darts are very expensive and thus inaccessible to most players. Motivated to develop a more accessible low-cost solution, we present a new approach to keypoint detection and apply it to predict dart scores from a single image taken from any camera angle. This problem involves detecting multiple keypoints that may be of the same class and positioned in close proximity to one another. The widely adopted framework for regressing keypoints using heatmaps is not well-suited for this task. To address this issue, we instead propose to model keypoints as objects. We develop a deep convolutional neural network around this idea and use it to predict dart locations and dartboard calibration points within an overall pipeline for automatic dart scoring, which we call DeepDarts. Additionally, we propose several task-specific data augmentation strategies to improve the generalization of our method. As a proof of concept, two datasets comprising 16k images originating from two different dartboard setups were manually collected and annotated to evaluate the system. In the primary dataset containing 15k images captured from a face-on view of the dartboard using a smartphone, DeepDarts predicted the total score correctly in 94.7% of the test images. In a second more challenging dataset containing limited training data (830 images) and various camera angles, we utilize transfer learning and extensive data augmentation to achieve a test accuracy of 84.0%. Because DeepDarts relies only on single images, it has the potential to be deployed on edge devices, giving anyone with a smartphone access to an automatic dart scoring system for steel-tip darts. The code and datasets are available.
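The final scoring step of such a pipeline can be illustrated with a small sketch. It assumes the predicted dart locations have already been mapped into the dartboard plane using the calibration points, giving coordinates in millimetres relative to the board centre with the 20 segment at the top. The function names and the nominal ring radii of a standard steel-tip board used here are assumptions of this sketch; the abstract does not describe how DeepDarts performs this step.

```python
import math

# Segment numbers in clockwise order, starting from the top of the board.
SECTORS = [20, 1, 18, 4, 13, 6, 10, 15, 2, 17, 3, 19, 7, 16, 8, 11, 14, 9, 12, 5]

# Nominal ring radii in millimetres for a standard steel-tip board (assumed).
R_INNER_BULL = 6.35
R_OUTER_BULL = 15.9
R_TREBLE_IN, R_TREBLE_OUT = 99.0, 107.0
R_DOUBLE_IN, R_DOUBLE_OUT = 162.0, 170.0


def dart_score(x, y):
    """Score one dart at (x, y) mm from the board centre (x right, y up)."""
    r = math.hypot(x, y)
    if r <= R_INNER_BULL:
        return 50
    if r <= R_OUTER_BULL:
        return 25
    if r > R_DOUBLE_OUT:
        return 0  # outside the scoring area
    # Angle measured clockwise from vertical, in [0, 360).
    angle = math.degrees(math.atan2(x, y)) % 360.0
    # Each segment spans 18 degrees; the 20 segment is centred on vertical,
    # so shift by half a segment before indexing.
    base = SECTORS[int(((angle + 9.0) % 360.0) // 18.0)]
    if R_TREBLE_IN <= r <= R_TREBLE_OUT:
        return 3 * base
    if R_DOUBLE_IN <= r <= R_DOUBLE_OUT:
        return 2 * base
    return base


def total_score(darts):
    """Sum the scores of an iterable of (x, y) dart positions."""
    return sum(dart_score(x, y) for x, y in darts)


if __name__ == "__main__":
    print(total_score([(0.0, 103.0), (166.0, 0.0), (0.0, 0.0)]))
```

Darts landing exactly on a ring boundary are assigned to the higher-scoring region here, which is an arbitrary choice for this sketch.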
