Michelle Karg

Streamlining the Development of Active Learning Methods in Real-World Object Detection

Aug 27, 2025

Abstract:Active learning (AL) for real-world object detection faces computational and reliability challenges that limit practical deployment. Developing new AL methods requires training multiple detectors across iterations to compare against existing approaches. This creates high costs for autonomous driving datasets where the training of one detector requires up to 282 GPU hours. Additionally, AL method rankings vary substantially across validation sets, compromising reliability in safety-critical transportation systems. We introduce object-based set similarity ($\mathrm{OSS}$), a metric that addresses these challenges. $\mathrm{OSS}$ (1) quantifies AL method effectiveness without requiring detector training by measuring similarity between training sets and target domains using object-level features. This enables the elimination of ineffective AL methods before training. Furthermore, $\mathrm{OSS}$ (2) enables the selection of representative validation sets for robust evaluation. We validate our similarity-based approach on three autonomous driving datasets (KITTI, BDD100K, CODA) using uncertainty-based AL methods as a case study with two detector architectures (EfficientDet, YOLOv3). This work is the first to unify AL training and evaluation strategies in object detection based on object similarity. $\mathrm{OSS}$ is detector-agnostic, requires only labeled object crops, and integrates with existing AL pipelines. This provides a practical framework for deploying AL in real-world applications where computational efficiency and evaluation reliability are critical. Code is available at https://mos-ks.github.io/publications/.

Prediction Accuracy & Reliability: Classification and Object Localization under Distribution Shift

Sep 05, 2024

Abstract:Natural distribution shift causes a deterioration in the perception performance of convolutional neural networks (CNNs). This comprehensive analysis for real-world traffic data addresses: 1) investigating the effect of natural distribution shift and weather augmentations on both detection quality and confidence estimation, 2) evaluating model performance for both classification and object localization, and 3) benchmarking two common uncertainty quantification methods - Ensembles and different variants of Monte-Carlo (MC) Dropout - under natural and close-to-natural distribution shift. For this purpose, a novel dataset has been curated from publicly available autonomous driving datasets. The in-distribution (ID) data is based on cutouts of a single object, for which both class and bounding box annotations are available. The six distribution-shift datasets cover adverse weather scenarios, simulated rain and fog, corner cases, and out-of-distribution data. A granular analysis of CNNs under distribution shift makes it possible to quantify the impact of different types of shifts on both task performance and confidence estimation: ConvNeXt-Tiny is more robust than EfficientNet-B0; heavy rain degrades classification more strongly than localization, contrary to heavy fog; integrating MC-Dropout into selected layers only has the potential to enhance task performance and confidence estimation, whereby the identification of these layers depends on the type of distribution shift and the considered task.
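As a rough illustration of how a confidence estimate can be obtained from MC Dropout, the sketch below averages the class probabilities of several stochastic forward passes and computes the entropy of the averaged distribution. The function name `predictive_entropy` and the input layout (one probability vector per pass) are assumptions made for this example; the abstract does not state which uncertainty measure or implementation the authors use.

```python
import math
from typing import List, Sequence


def predictive_entropy(prob_samples: Sequence[Sequence[float]]) -> float:
    """Entropy (in nats) of the mean class distribution over several passes.

    prob_samples holds one softmax vector per stochastic forward pass
    (hypothetical input format; dropout is assumed to stay active at
    inference time so that the passes differ).
    """
    n_passes = len(prob_samples)
    n_classes = len(prob_samples[0])
    # Average the probability of each class over all passes.
    mean: List[float] = [
        sum(p[c] for p in prob_samples) / n_passes for c in range(n_classes)
    ]
    # Skip zero entries, since p * log(p) tends to 0 as p tends to 0.
    return -sum(p * math.log(p) for p in mean if p > 0.0)
```

Passes that agree on a single class give an entropy of zero, while passes that disagree push the averaged distribution towards uniform and the entropy towards `log(n_classes)`.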

Cost-Sensitive Uncertainty-Based Failure Recognition for Object Detection

Apr 26, 2024

Abstract:Object detectors in real-world applications often fail to detect objects due to varying factors such as weather conditions and noisy input. Therefore, a process that mitigates false detections is crucial for both safety and accuracy. While uncertainty-based thresholding shows promise, previous works demonstrate an imperfect correlation between uncertainty and detection errors. This hinders ideal thresholding, prompting us to further investigate the correlation and associated cost with different types of uncertainty. We therefore propose a cost-sensitive framework for object detection tailored to user-defined budgets on the two types of errors, missing and false detections. We derive minimum thresholding requirements to prevent performance degradation and define metrics to assess the applicability of uncertainty for failure recognition. Furthermore, we automate and optimize the thresholding process to maximize the failure recognition rate w.r.t. the specified budget. Evaluation on three autonomous driving datasets demonstrates that our approach significantly enhances safety, particularly in challenging scenarios. Leveraging localization aleatoric uncertainty and softmax-based entropy only, our method boosts the failure recognition rate by 36-60\% compared to conventional approaches. Code is available at https://mos-ks.github.io/publications.
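The idea of budget-constrained thresholding can be sketched in a few lines. The example below is a simplified illustration and not the authors' framework: it assumes a single uncertainty score per detection, a single budget on the fraction of correct detections that may be flagged by mistake, and a hypothetical helper named `pick_threshold`. The paper itself considers budgets on two error types and several kinds of uncertainty.

```python
from typing import List, Optional, Tuple


def pick_threshold(
    uncertainty: List[float], is_failure: List[bool], budget: float
) -> Tuple[Optional[float], float]:
    """Return (threshold, failure recognition rate) within the budget.

    A detection is flagged when its uncertainty is >= threshold. The budget
    caps the fraction of correct detections that get flagged (assumption).
    """
    n_fail = sum(is_failure)
    n_ok = len(is_failure) - n_fail
    best: Tuple[Optional[float], float] = (None, 0.0)
    # Every observed uncertainty value is a candidate threshold.
    for t in sorted(set(uncertainty)):
        flagged = [u >= t for u in uncertainty]
        wrongly_flagged = sum(f and not bad for f, bad in zip(flagged, is_failure))
        caught = sum(f and bad for f, bad in zip(flagged, is_failure))
        if n_ok and wrongly_flagged / n_ok > budget:
            continue  # this threshold exceeds the budget
        rate = caught / n_fail if n_fail else 0.0
        if rate > best[1]:
            best = (t, rate)
    return best
```

With a budget of zero the search only accepts thresholds that flag no correct detection, so a looser budget can only keep or raise the recognition rate.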

Overcoming the Limitations of Localization Uncertainty: Efficient & Exact Non-Linear Post-Processing and Calibration

Jun 15, 2023

Abstract:Robustly and accurately localizing objects in real-world environments can be challenging due to noisy data, hardware limitations, and the inherent randomness of physical systems. To account for these factors, existing works estimate the aleatoric uncertainty of object detectors by modeling their localization output as a Gaussian distribution $\mathcal{N}(\mu,\,\sigma^{2})$ and training with loss attenuation. We identify three aspects that are unaddressed in the state of the art, but warrant further exploration: (1) the efficient and mathematically sound propagation of $\mathcal{N}(\mu,\,\sigma^{2})$ through non-linear post-processing, (2) the calibration of the predicted uncertainty, and (3) its interpretation. We overcome these limitations by: (1) implementing loss attenuation in EfficientDet, and proposing two deterministic methods for the exact and fast propagation of the output distribution, (2) demonstrating on the KITTI and BDD100K datasets that the predicted uncertainty is miscalibrated, and adapting two calibration methods to the localization task, and (3) investigating the correlation between aleatoric uncertainty and task-relevant error sources. Our contributions are: (1) up to five times faster propagation while increasing localization performance by up to 1\%, (2) up to fifteen times smaller expected calibration error, and (3) the predicted uncertainty is found to correlate with occlusion, object distance, detection accuracy, and image quality.
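To make the propagation problem concrete, the sketch below covers one case with a known closed form. It assumes that the non-linear post-processing step is an exponential, as in the common anchor-based decoding $w = w_a \exp(t)$ of box width and height; whether this matches the two methods proposed in the paper is not stated in the abstract. If $t \sim \mathcal{N}(\mu, \sigma^{2})$, then $\exp(t)$ is log-normal, so its mean and variance can be computed exactly without sampling. The function names are hypothetical.

```python
import math
from typing import Tuple


def lognormal_moments(mu: float, sigma: float) -> Tuple[float, float]:
    """Exact mean and variance of exp(t) for t ~ N(mu, sigma^2)."""
    mean = math.exp(mu + 0.5 * sigma**2)
    var = (math.exp(sigma**2) - 1.0) * math.exp(2.0 * mu + sigma**2)
    return mean, var


def decode_width(anchor_w: float, mu: float, sigma: float) -> Tuple[float, float]:
    """Mean and standard deviation of w = anchor_w * exp(t) (assumed decoding)."""
    mean, var = lognormal_moments(mu, sigma)
    # Scaling by a constant scales the mean and the standard deviation.
    return anchor_w * mean, anchor_w * math.sqrt(var)
```

Simply decoding the mean, `anchor_w * exp(mu)`, underestimates the expected width whenever `sigma > 0`, which is one reason a sound treatment of the distribution matters.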

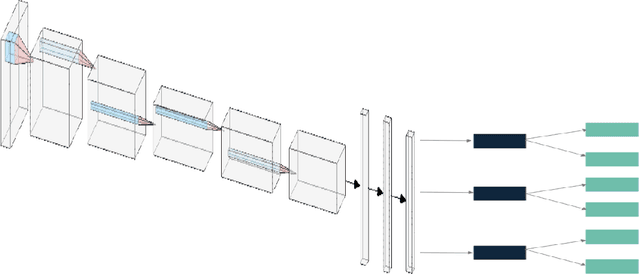

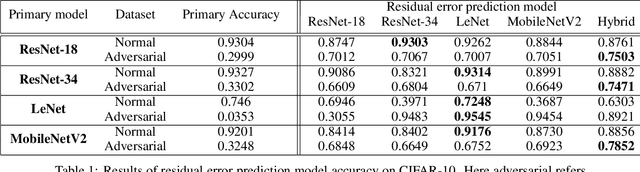

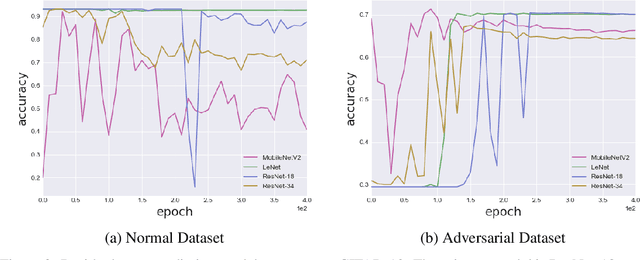

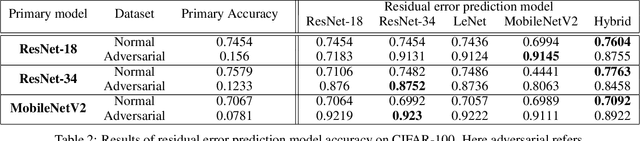

Residual Error: a New Performance Measure for Adversarial Robustness

Jun 18, 2021

Abstract:Despite the significant advances in deep learning over the past decade, a major challenge that limits the wide-spread adoption of deep learning has been its fragility to adversarial attacks. This sensitivity to making erroneous predictions in the presence of adversarially perturbed data makes deep neural networks difficult to adopt for certain real-world, mission-critical applications. While much of the research focus has revolved around adversarial example creation and adversarial hardening, the area of performance measures for assessing adversarial robustness is not well explored. Motivated by this, this study presents the concept of residual error, a new performance measure that not only assesses the adversarial robustness of a deep neural network at the individual sample level, but can also be used to differentiate between adversarial and non-adversarial examples to facilitate adversarial example detection. Furthermore, we introduce a hybrid model for approximating the residual error in a tractable manner. Experimental results using the case of image classification demonstrate the effectiveness and efficacy of the proposed residual error metric for assessing several well-known deep neural network architectures. These results thus illustrate that the proposed measure could be a useful tool for not only assessing the robustness of deep neural networks used in mission-critical scenarios, but also in the design of adversarially robust models.

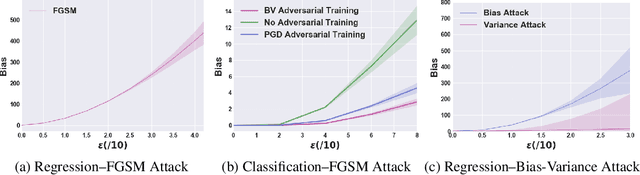

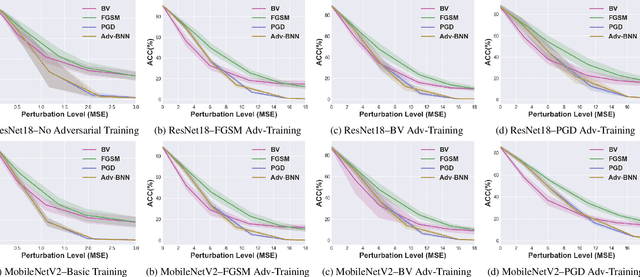

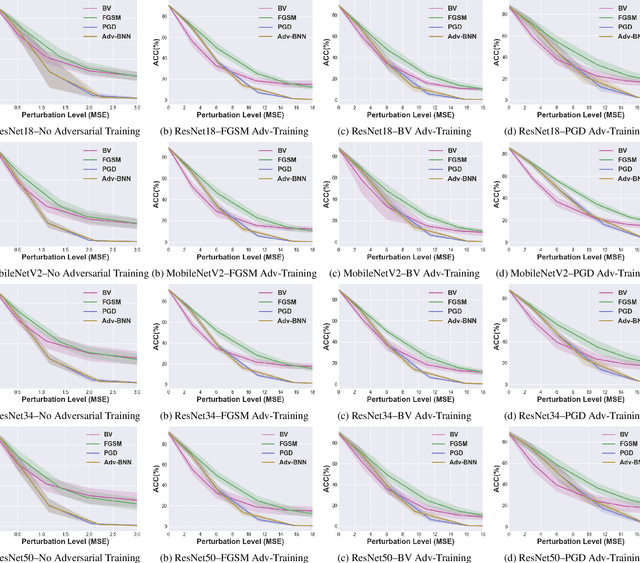

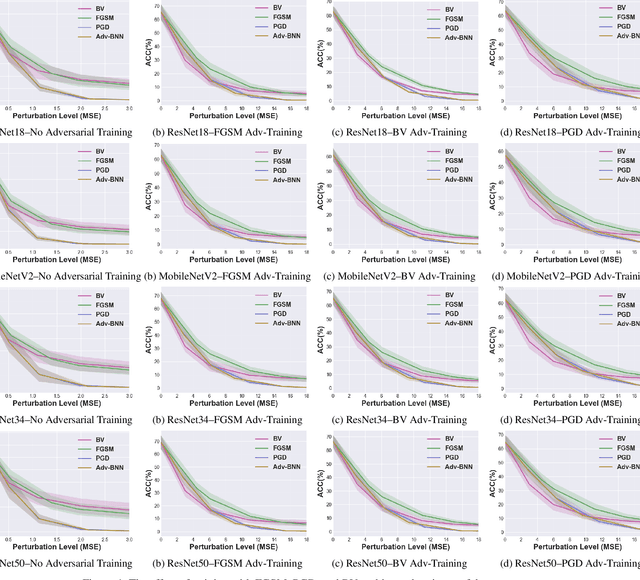

Vulnerability Under Adversarial Machine Learning: Bias or Variance?

Aug 01, 2020

Abstract:Prior studies have unveiled the vulnerability of deep neural networks in the context of adversarial machine learning, attracting considerable recent attention to this area. One interesting question that has yet to be fully explored is the bias-variance relationship of adversarial machine learning, which can potentially provide deeper insights into this behaviour. The notion of bias and variance is one of the main approaches to analyze and evaluate the generalization and reliability of a machine learning model. Although it has been extensively used in other machine learning models, it is not well explored in the field of deep learning and it is even less explored in the area of adversarial machine learning. In this study, we investigate the effect of adversarial machine learning on the bias and variance of a trained deep neural network and analyze how adversarial perturbations can affect the generalization of a network. We derive the bias-variance trade-off for both classification and regression applications based on two main loss functions: (i) mean squared error (MSE), and (ii) cross-entropy. Furthermore, we perform quantitative analysis with both simulated and real data to empirically evaluate consistency with the derived bias-variance trade-offs. Our analysis sheds light on why deep neural networks have poor performance under adversarial perturbation from a bias-variance point of view and how this type of perturbation would change the performance of a network. Moreover, given these new theoretical findings, we introduce a new adversarial machine learning algorithm with lower computational complexity than well-known adversarial machine learning strategies (e.g., PGD) while providing a high success rate in fooling deep neural networks at lower perturbation magnitudes.
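For orientation, the textbook bias-variance decomposition for the MSE loss is reproduced below. It is the standard form, not the derivation from the paper, and the notation is an assumption of this note: the target is $y = f(x) + \varepsilon$ with $\mathbb{E}[\varepsilon] = 0$ and $\mathrm{Var}(\varepsilon) = \sigma_{\varepsilon}^{2}$, and $\hat{f}$ is the model fitted on a random training set, over which the expectation is taken.

$$
\mathbb{E}\big[(y - \hat{f}(x))^{2}\big]
= \underbrace{\big(\mathbb{E}[\hat{f}(x)] - f(x)\big)^{2}}_{\text{bias}^{2}}
+ \underbrace{\mathbb{E}\big[\big(\hat{f}(x) - \mathbb{E}[\hat{f}(x)]\big)^{2}\big]}_{\text{variance}}
+ \underbrace{\sigma_{\varepsilon}^{2}}_{\text{noise}}
$$

An adversarial perturbation of $x$ can change the first two terms, which is the kind of effect the study analyzes.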

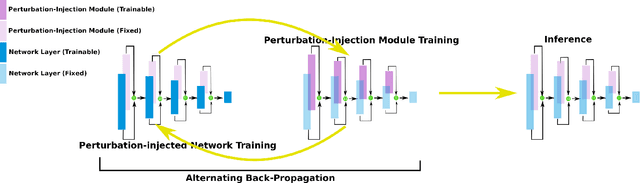

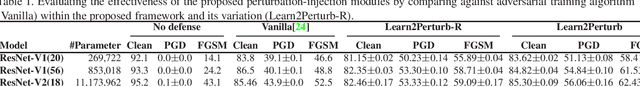

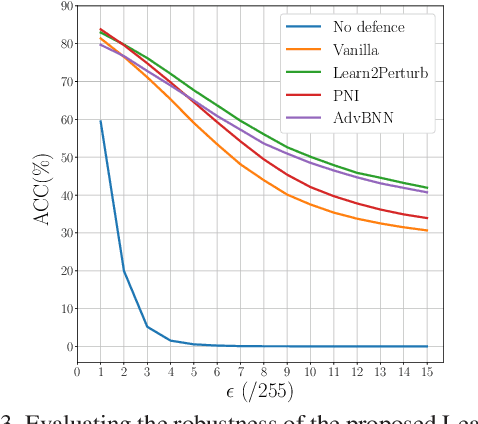

Learn2Perturb: an End-to-end Feature Perturbation Learning to Improve Adversarial Robustness

Mar 03, 2020

Abstract:While deep neural networks have been achieving state-of-the-art performance across a wide variety of applications, their vulnerability to adversarial attacks limits their widespread deployment for safety-critical applications. Alongside other adversarial defense approaches being investigated, there has been a very recent interest in improving adversarial robustness in deep neural networks through the introduction of perturbations during the training process. However, such methods leverage fixed, pre-defined perturbations and require significant hyper-parameter tuning that makes them very difficult to leverage in a general fashion. In this study, we introduce Learn2Perturb, an end-to-end feature perturbation learning approach for improving the adversarial robustness of deep neural networks. More specifically, we introduce novel perturbation-injection modules that are incorporated at each layer to perturb the feature space and increase uncertainty in the network. This feature perturbation is performed at both the training and the inference stages. Furthermore, inspired by Expectation-Maximization, an alternating back-propagation training algorithm is introduced to train the network and noise parameters consecutively. Experimental results on CIFAR-10 and CIFAR-100 datasets show that the proposed Learn2Perturb method can result in deep neural networks which are 4-7\% more robust on $l_{\infty}$ FGSM and PGD adversarial attacks and significantly outperforms the state-of-the-art against $l_2$ C\&W attack and a wide range of well-known black-box attacks.

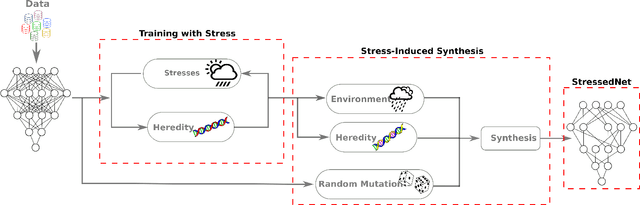

StressedNets: Efficient Feature Representations via Stress-induced Evolutionary Synthesis of Deep Neural Networks

Jan 16, 2018

Abstract:The computational complexity of leveraging deep neural networks for extracting deep feature representations is a significant barrier to their widespread adoption, particularly for use in embedded devices. One particularly promising strategy for addressing the complexity issue is the notion of evolutionary synthesis of deep neural networks, which was demonstrated to successfully produce highly efficient deep neural networks while retaining modeling performance. Here, we extend the evolutionary synthesis strategy for achieving efficient feature extraction via the introduction of a stress-induced evolutionary synthesis framework, where stress signals are imposed upon the synapses of a deep neural network during training to induce stress and steer the synthesis process towards the production of more efficient deep neural networks over successive generations and improved model fidelity at a greater efficiency. The proposed stress-induced evolutionary synthesis approach is evaluated on a variety of different deep neural network architectures (LeNet5, AlexNet, and YOLOv2) on different tasks (object classification and object detection) to synthesize efficient StressedNets over multiple generations. Experimental results demonstrate the efficacy of the proposed framework to synthesize StressedNets with significant improvement in network architecture efficiency (e.g., 40x for AlexNet and 33x for YOLOv2) and speed improvements (e.g., 5.5x inference speed-up for YOLOv2 on an Nvidia Tegra X1 mobile processor).
